Leo Schramm

Since 2006, Amazon Web Services (AWS) has spurred organizations to embrace Infrastructure-as-a-Service (IaaS) to build, automate, and scale their systems. Over the years, AWS has expanded beyond basic compute and storage resources (such as EC2 and S3) to include tools like CloudWatch for AWS monitoring, managed infrastructure services like Amazon RDS for database management, and AWS Lambda for serverless computing. Although this ever-expanding ecosystem allows developers and operations teams to rapidly deploy and scale their infrastructure in the cloud, it has also made it more challenging to track the real-time health and performance of those services.

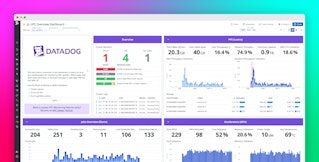

Datadog’s AWS integration aggregates metrics from across your entire AWS environment in one place, giving you full visibility into your highly dynamic services so you can efficiently investigate potential issues. This article explores key metrics that will help you monitor widely used services like Amazon EC2, EBS, ELB, ECS, EKS, RDS, and ElastiCache in full context with the rest of your infrastructure and applications. We will also cover AWS Lambda, along with the metrics you should focus on in the absence of system-level data from application servers, and AWS Fargate. Each section ends with a “Further reading” section where you can find more comprehensive monitoring strategies for the AWS service it covers.

Amazon EC2

What is Amazon EC2?

Amazon Elastic Compute Cloud (EC2) allows you to efficiently provision and scale your infrastructure on demand. EC2 instances, or virtual servers, are available in a range of instance types that offer various levels of CPU, memory, storage, and network capacity. EC2 instances integrate seamlessly with other AWS services, such as Auto Scaling and Elastic Load Balancing. As containerization continues to gain steam, EC2 has evolved to serve the needs of orchestrated applications via Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS).

Metrics to watch

As you deploy and scale EC2 instances to support your applications, you’ll need to continuously monitor them to ensure that your infrastructure is functioning properly. The following metrics provide a foundation for understanding the performance and availability of your instances.

CPUUtilization

CPUUtilization measures the percentage of allocated compute units currently being used by an EC2 instance. If you see degraded application performance alongside continuously high levels of CPU usage (without any accompanying spikes in network, disk I/O, or memory), CPU may be a resource bottleneck. Tracking CPU utilization across your EC2 instances can also help you determine if your instances are over- or undersized for your workload.
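As a rough illustration of that reasoning, the sketch below flags CPU as a likely bottleneck only when every recent CPUUtilization datapoint stays above a threshold while network and disk throughput show no spike. The 90 percent threshold, the 2x spike factor, and the function names are hypothetical choices for illustration, not AWS or Datadog defaults, and the datapoints are assumed to have already been retrieved from CloudWatch.

```python
from statistics import mean
from typing import Sequence


def has_spike(series: Sequence[float], spike_factor: float) -> bool:
    """True if any datapoint exceeds spike_factor times the series average."""
    avg = mean(series)
    return avg > 0 and max(series) > spike_factor * avg


def cpu_looks_like_bottleneck(
    cpu_percent: Sequence[float],
    network_bytes: Sequence[float],
    disk_bytes: Sequence[float],
    cpu_threshold: float = 90.0,  # hypothetical threshold
    spike_factor: float = 2.0,    # hypothetical definition of a "spike"
) -> bool:
    """Heuristic: sustained high CPU with no accompanying network or disk spike."""
    sustained_high_cpu = all(p >= cpu_threshold for p in cpu_percent)
    return (
        sustained_high_cpu
        and not has_spike(network_bytes, spike_factor)
        and not has_spike(disk_bytes, spike_factor)
    )


# Three recent datapoints per metric (illustrative values)
print(cpu_looks_like_bottleneck([95, 97, 96], [100, 110, 105], [50, 52, 48]))  # True
```

The same spike test could be applied to memory data if you collect it for your instances.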

DiskReadBytes and DiskWriteBytes

This pair of metrics measures the number of bytes read from and written to instance store (“ephemeral”) volumes attached to your EC2 instances. These metrics also include I/O data for any EBS volumes attached to C5 and M5 instance types. For other instance types, you’ll need to access disk I/O metrics directly from EBS. Monitoring these metrics can help you identify application-level issues. For example, if you see that a large amount of data is consistently being read from disk, you may be able to improve application performance by adding a caching layer.

StatusCheckFailed

EC2 runs two pass-or-fail status checks at one-minute intervals and reports the results to CloudWatch. One check (the instance status check) queries the availability of each EC2 instance, while the other (the system status check) reports information about the system hosting the instance. Together, these checks provide visibility into EC2 health and help you determine whether the source of an issue lies with the instance itself or with the underlying infrastructure that supports it.
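CloudWatch exposes these two results as the StatusCheckFailed_System and StatusCheckFailed_Instance metrics (0 for pass, 1 for fail). The simplified sketch below shows how the pair can point you toward the likely source of a problem. The function name and the returned labels are hypothetical, and checking the system result first is an assumption: a host-level failure may also make the instance check fail.

```python
def likely_issue_source(system_check_failed: int, instance_check_failed: int) -> str:
    """Map StatusCheckFailed_System / StatusCheckFailed_Instance values (0 or 1)
    to the most likely source of a problem."""
    if system_check_failed:
        # Assumption: a problem with the host can also cause the instance
        # check to fail, so the system result is evaluated first.
        return "underlying infrastructure"
    if instance_check_failed:
        return "instance"
    return "healthy"


print(likely_issue_source(0, 1))  # instance
```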

Further reading

Monitoring the metrics covered here provides a good starting point for tracking the health and performance of your EC2 instances. However, as your dynamic AWS infrastructure scales up and down to meet demand, you will need to automatically collect, visualize, and alert on these metrics in order to gain further insights into your EC2 instances and the applications and services that depend on them.

For a deep dive into AWS monitoring for EC2, including all the steps you need to start monitoring EC2 with Datadog, consult our three-part guide:

Amazon EBS

What is Amazon EBS?

Amazon Elastic Block Store (EBS) provides persistent block-level storage for EC2 instances. EBS volumes are available in two primary types: solid-state drives (SSD) and hard-disk drives (HDD). Because they provide a reliable, long-term storage solution for EC2 instances, EBS volumes have become an important layer in many AWS deployments. EBS also lets you back up your volumes as snapshots, which are stored in Amazon S3, and copy those snapshots across AWS regions whenever you need to scale out your application infrastructure.

Metrics to watch

When monitoring AWS EBS, the following key metrics can help you track the usage and performance of your volumes.

VolumeReadBytes and VolumeWriteBytes

This pair of metrics measures the number of bytes read from and written to your volumes over a given time frame, and it can be reported through a variety of aggregation methods. For instance, you can request the sum of these metrics to track the total number of bytes read and written over a specific time span, or query the average, which reports the average size of each I/O operation and provides a useful foundation for profiling your EBS workload. Note that, for volumes attached to C5 and M5 instances, the average reports the average number of bytes read or written over the requested time period, rather than per operation.
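Because the meaning of the average statistic depends on the instance type, one way to get a per-operation figure everywhere is to divide the Sum of VolumeReadBytes (or VolumeWriteBytes) by the Sum of the companion VolumeReadOps (or VolumeWriteOps) metric for the same period. A minimal sketch, assuming both sums have already been retrieved from CloudWatch; the function name is hypothetical:

```python
def average_io_size_kib(total_bytes: float, total_ops: float) -> float:
    """Average size of each I/O operation, in KiB.

    total_bytes: Sum of VolumeReadBytes (or VolumeWriteBytes) for the period
    total_ops:   Sum of VolumeReadOps (or VolumeWriteOps) for the same period
    """
    if total_ops == 0:
        return 0.0  # no operations in the period
    return total_bytes / total_ops / 1024


# 10 MiB read across 640 operations works out to 16 KiB per read
print(average_io_size_kib(10_485_760, 640))  # 16.0
```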

VolumeTotalReadTime and VolumeTotalWriteTime

These metrics report the total time taken, in seconds, to complete all read and write operations over the queried time frame. If you see an increase in latency, you can correlate these metrics with throughput metrics to determine if your EBS volumes are hitting their IOPS limits.
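Dividing total time by the number of operations turns these counters into an average per-operation latency, which is easier to compare against what you expect from the volume type. A minimal sketch, assuming you have the Sum of VolumeTotalReadTime and VolumeReadOps (or the write equivalents) for the same period; the function name is hypothetical:

```python
def average_latency_ms(total_time_seconds: float, total_ops: float) -> float:
    """Average time per I/O operation, in milliseconds.

    total_time_seconds: Sum of VolumeTotalReadTime (or VolumeTotalWriteTime)
    total_ops:          Sum of VolumeReadOps (or VolumeWriteOps)
    """
    if total_ops == 0:
        return 0.0  # no operations in the period
    return total_time_seconds / total_ops * 1000


# 3 seconds spent on 1,500 reads is about 2 ms per read
print(round(average_latency_ms(3.0, 1_500), 3))  # 2.0
```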

VolumeQueueLength

The volume queue length, or the number of queued operations waiting to be executed, can provide insights into your EBS volumes’ workload. AWS recommends aiming for a queue length of one for every 500 IOPS available for SSD volumes, or at least four when processing 1 MiB sequential operations on HDD volumes. That said, if the volume queue length remains high for extended periods of time, it can lead to increased latency. You can correlate volume queue length with other metrics like IOPS and volume read/write time to see if your volumes have enough provisioned IOPS to manage their workload.
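To make the SSD guideline concrete, the sketch below turns available IOPS into a target queue length (one queued operation per 500 IOPS, as described above) and flags a volume only when every recent VolumeQueueLength datapoint sits well above that target. The 2x tolerance and the function names are hypothetical.

```python
from typing import Sequence

SSD_IOPS_PER_QUEUED_OP = 500  # one queued operation per 500 available IOPS


def target_ssd_queue_length(available_iops: int) -> float:
    """Target queue length for an SSD volume with the given available IOPS."""
    return available_iops / SSD_IOPS_PER_QUEUED_OP


def queue_persistently_high(
    queue_lengths: Sequence[float], available_iops: int, tolerance: float = 2.0
) -> bool:
    """True if every datapoint exceeds tolerance x the target queue length."""
    limit = tolerance * target_ssd_queue_length(available_iops)
    return all(q > limit for q in queue_lengths)


# A 3,000 IOPS SSD volume has a target queue length of 6
print(target_ssd_queue_length(3_000))                # 6.0
print(queue_persistently_high([14, 15, 13], 3_000))  # True
```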

VolumeIdleTime

VolumeIdleTime measures the amount of time, in seconds, that your volumes are inactive. You can monitor this metric to help ensure that your provisioned resources are not going unused. If you see sudden spikes in idle time, along with reduced I/O levels, your applications may be experiencing issues that prevent them from sending requests to your volumes.

VolumeStatus

AWS helps you track the health of your EBS volumes by running status checks on them every five minutes. VolumeStatus shows up as ok if all checks pass, impaired if any check fails, or insufficient-data if any check is incomplete. Provisioned IOPS volumes may also report a warning status if they exhibit lower-than-expected performance. If AWS detects possible inconsistencies in a volume’s data, it automatically disables I/O on that volume, and by default the next status check will fail. If you set the AutoEnableIO volume attribute, AWS will instead automatically re-enable I/O on these volumes. This allows the next status check to pass, but AWS will still record an event indicating that it detected an issue.

Further reading

The metrics outlined above, which are available from Amazon CloudWatch, can help you track the usage and performance of your EBS volumes, but they only provide part of the picture. For example, system-level metrics like disk usage are not available through CloudWatch, so they will need to be collected using another method. Consult our monitoring guide to learn about other metrics and collection methods that can provide insights into your EBS volumes:

- Key metrics for Amazon EBS monitoring

- Collecting EBS metrics

- Monitoring Amazon EBS volumes with Datadog

Amazon ELB

What is Amazon ELB?

Amazon Elastic Load Balancing (ELB) distributes incoming application traffic across multiple EC2 instances and availability zones. ELB improves the fault tolerance of your applications by continuously checking the health of your EC2 instances and automatically routing requests away from unhealthy ones. Combined with Auto Scaling, ELB can help ensure that your infrastructure meets the demands of changing workloads and peaks in traffic.

ELB offers several types of load balancers (e.g., Classic Load Balancers, Application Load Balancers, and Network Load Balancers) for different use cases. This article focuses specifically on the key metrics to monitor for Classic Load Balancers.

Metrics to watch

Since ELB acts as a first point of contact for users of your applications, keeping a close eye on key ELB performance indicators is critical for improving end-user experience. The following metrics will help you track overall ELB performance and health.

RequestCount

This metric measures the number of requests handled by ELB over the queried time frame. You should monitor this metric for drastic changes that can act as an early warning sign of AWS or DNS issues. It can also help you determine when you need to scale your EC2 fleet up and down, if you are not using Auto Scaling.

SurgeQueueLength

SurgeQueueLength measures the number of requests awaiting distribution to available compute resources. A high number of queued requests can lead to increased latency. You should particularly monitor the maximum value of this metric to avoid reaching the queue capacity (1,024 requests), because once this limit is reached, any additional incoming requests will be dropped.
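Because the queue has a hard ceiling, it can help to express the Maximum statistic of SurgeQueueLength as remaining headroom. A minimal sketch; the 1,024 capacity is the limit cited above, while the 25 percent alert threshold and the function names are hypothetical:

```python
SURGE_QUEUE_CAPACITY = 1_024  # requests beyond this are dropped


def surge_queue_headroom(max_surge_queue_length: int) -> float:
    """Fraction of the surge queue still free, based on the Maximum statistic."""
    return 1 - max_surge_queue_length / SURGE_QUEUE_CAPACITY


def should_alert(max_surge_queue_length: int, min_headroom: float = 0.25) -> bool:
    """Alert when less than min_headroom of the queue remains free."""
    return surge_queue_headroom(max_surge_queue_length) < min_headroom


print(surge_queue_headroom(768))  # 0.25
print(should_alert(900))          # True
```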

HTTPCode_ELB_5XX

This metric is only available if you’ve configured your ELB listener(s) with the HTTP/HTTPS protocol for both front- and back-end connections. It tracks the number of server errors returned by the load balancer during the queried time period. All HTTP 5XX server errors warrant investigation, including Bad Gateway (502), Service Unavailable (503), and Gateway Timeout (504) errors. For example, if you see Gateway Timeout (504) errors increasing along with response latency, you may want to scale up your backends or increase the idle timeout to enable connections to stay open longer.

Latency

This metric measures latency from your backend instances, rather than latency from the load balancer itself. If you are using HTTP listeners, this metric measures the total time (in seconds) it takes for a backend instance to start responding to a request sent by your load balancer. For TCP listeners, this metric measures the number of seconds it takes for the load balancer to connect to a registered instance. In either case, you can use this metric to help troubleshoot increases in request-processing time. High latency can indicate issues with network connections, backend hosts, or web server dependencies that can have a negative impact on application performance.

HealthyHostCount and UnHealthyHostCount

These metrics report the number of healthy and unhealthy backend instances, as determined by the health checks ELB uses to decide which instances are healthy enough to process requests. If an instance fails a certain number of health checks in a row (as specified by the unhealthy threshold in your health check configuration), ELB automatically directs traffic away from that instance and routes it to available healthy instances. You can correlate HealthyHostCount with other metrics like SurgeQueueLength and Latency to ensure that you have enough healthy instances to process the traffic in each availability zone and avoid service disruptions.
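The consecutive-failure logic described above can be modeled in a few lines. The toy class below is not ELB’s implementation: it assumes a separate healthy threshold for recovery (Classic Load Balancer health checks are configured with both a healthy and an unhealthy threshold), and the threshold values in the example are illustrative.

```python
from typing import Iterable


class HealthCheckTracker:
    """Toy model of threshold-based health checking for one backend instance."""

    def __init__(self, unhealthy_threshold: int, healthy_threshold: int):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = True
        self._failures_in_a_row = 0
        self._successes_in_a_row = 0

    def record(self, passed: bool) -> bool:
        """Record one health check result and return the current health state."""
        if passed:
            self._successes_in_a_row += 1
            self._failures_in_a_row = 0
            if not self.healthy and self._successes_in_a_row >= self.healthy_threshold:
                self.healthy = True  # instance goes back into rotation
        else:
            self._failures_in_a_row += 1
            self._successes_in_a_row = 0
            if self.healthy and self._failures_in_a_row >= self.unhealthy_threshold:
                self.healthy = False  # traffic is routed to other instances
        return self.healthy


def healthy_host_count(trackers: Iterable[HealthCheckTracker]) -> int:
    """Analogous to HealthyHostCount: number of instances currently healthy."""
    return sum(t.healthy for t in trackers)


tracker = HealthCheckTracker(unhealthy_threshold=2, healthy_threshold=3)
print([tracker.record(r) for r in [False, False, True, True, True]])
# [True, False, False, False, True]
```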

Further reading

Monitoring these AWS ELB metrics can help improve the performance and uptime of your applications. However, many other metrics, such as SpilloverCount, which measures the number of dropped requests, are also available to help you ensure swift response times to user requests. For a more comprehensive look at ELB monitoring, read our series of posts:

- Top ELB health and performance metrics

- How to collect AWS ELB metrics

- Monitor ELB performance with Datadog

Expanding your AWS infrastructure

While Amazon EC2, EBS, and ELB provide the core building blocks for your infrastructure, the AWS ecosystem has evolved to offer new ways for teams to manage and automate increasingly complex cloud environments. For example, Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) are container orchestration services that help teams build, scale, and manage dynamic container infrastructure in the AWS cloud, powering the microservice architectures that have become commonplace in production environments. Meanwhile, Amazon Relational Database Service (RDS) and Amazon ElastiCache help users migrate or deploy infrastructure that has traditionally been managed by hand, such as databases and caching engines. The next few sections explore fundamental metrics for monitoring these services.

Amazon ECS

What is Amazon ECS?

Amazon Elastic Container Service (ECS) is a service for managing Docker containers in the AWS cloud. Teams can use ECS to automatically scale and schedule containers for their microservices on Amazon EC2 instances, AWS Fargate compute resources, or both. EC2 gives you more control over your container infrastructure, while Fargate provisions the infrastructure for you.

Metrics to watch

ECS automates the deployment and management of your containers, so you’ll want to monitor the status of your ECS clusters to make sure containers are created, provisioned, and terminated as expected. You’ll also want to keep track of your ECS tasks and the resource usage of your workloads.

Desired task count vs. running task count per service

ECS uses tasks to provision and control containers according to a set of instructions that specify which container images to run, how many resources to allocate to each container, and more. ECS automatically manages running tasks and launches new tasks as needed to support your services, ensuring that the desired number of tasks is running at all times. If the running task count is consistently lower than the desired task count, you should investigate to see why those tasks are not running.
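A minimal sketch of that comparison follows. The dictionaries are hard-coded samples whose keys (serviceName, desiredCount, runningCount) mirror fields returned by the ECS DescribeServices API. In practice you would fetch them from the API and check repeatedly, since a single snapshot can catch a deployment that is still in progress. The function name is hypothetical.

```python
from typing import Dict, List, Tuple


def services_missing_tasks(services: List[Dict]) -> List[Tuple[str, int]]:
    """Return (service name, task shortfall) for services running fewer tasks
    than desired."""
    return [
        (svc["serviceName"], svc["desiredCount"] - svc["runningCount"])
        for svc in services
        if svc["runningCount"] < svc["desiredCount"]
    ]


sample_services = [
    {"serviceName": "web", "desiredCount": 4, "runningCount": 4},
    {"serviceName": "worker", "desiredCount": 3, "runningCount": 1},
]
print(services_missing_tasks(sample_services))  # [('worker', 2)]
```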

MemoryUtilization

Tracking memory utilization can help you determine if you’ve adequately scaled your ECS infrastructure. For example, you should monitor container-level memory utilization to ensure it does not cross its hard memory limit—ECS will terminate any container that crosses the limit. If memory utilization is consistently high, you may need to update your task definitions to either increase or remove this hard limit so that your tasks continue to run.

CPUUtilization

As with memory, container-level CPU utilization metrics can be helpful for identifying containers that are consuming too many resources, regardless of whether you are running ECS tasks on EC2 or Fargate. For example, if you find that certain containers are hogging resources, then you may need to configure container-level CPU limits so other containers in the ECS task have enough resources to complete their work.

Further reading

Monitoring these Amazon ECS metrics can help ensure your containerized microservices are working optimally. For deeper insights into your container infrastructure, ECS also emits metrics for I/O, network throughput, and additional CPU and memory metrics for both EC2 and Fargate-managed resources. You can read our series of ECS posts for a more comprehensive look at monitoring your container infrastructure on AWS:

Amazon EKS

What is Amazon EKS?

Amazon Elastic Kubernetes Service (EKS) is a platform for running Kubernetes-orchestrated applications on AWS. You can run your applications on EKS without modification and manage your cluster with the same native Kubernetes tools, such as the kubectl command line utility. EKS is also tightly integrated with other AWS services, including EC2 and AWS Fargate. Monitoring your EKS cluster is important for determining if it has enough resources to adequately launch and scale your applications, and there are a few key metrics you can track for better visibility into cluster health and performance.

Metrics to watch

Node status

Node status is a cluster state metric that gives you a high-level overview of node health. You can use it to monitor whether nodes meet certain conditions, such as running too low on memory or disk capacity, or running too many processes at one time. Kubernetes will attempt to reclaim resources if a node is running low on memory or disk space, which can result in evicting pods from the affected node and disrupting your services.
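Kubernetes exposes these signals as node conditions: Ready, plus MemoryPressure, DiskPressure, and PIDPressure for low memory, low disk, and too many processes. The sketch below scans a node object shaped like the output of `kubectl get node <name> -o json` and lists any problem conditions. The sample data and function name are illustrative.

```python
from typing import Dict, List

PRESSURE_CONDITIONS = {"MemoryPressure", "DiskPressure", "PIDPressure"}


def node_problems(node: Dict) -> List[str]:
    """List problem conditions for a Kubernetes Node object."""
    problems = []
    for condition in node["status"]["conditions"]:
        kind, status = condition["type"], condition["status"]
        if kind == "Ready" and status != "True":
            problems.append("NotReady")  # status is "False" or "Unknown"
        elif kind in PRESSURE_CONDITIONS and status == "True":
            problems.append(kind)
    return problems


sample_node = {
    "status": {
        "conditions": [
            {"type": "Ready", "status": "True"},
            {"type": "MemoryPressure", "status": "True"},
            {"type": "DiskPressure", "status": "False"},
            {"type": "PIDPressure", "status": "False"},
        ]
    }
}
print(node_problems(sample_node))  # ['MemoryPressure']
```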

Memory utilization

Monitoring this metric at the node and pod level is important for ensuring that clusters are able to successfully run workloads, and it can help you determine when you need to scale your cluster. For example, if memory usage is high on a node, it could mean you don’t have enough nodes to share the workload. Kubernetes will start evicting pods in an attempt to reclaim resources if it detects a node is running low on memory. Or if a pod uses more memory than its defined limit, Kubernetes may terminate its running processes.

Disk utilization

This metric tracks disk capacity on a node’s storage volume, which can be set up differently depending on whether you are deploying pods on EC2 or Fargate. Like memory utilization, if there is low disk space on a node’s volume, Kubernetes will attempt to free up resources by evicting pods.

Further reading

Amazon EKS provides other metrics for monitoring cluster health and resource utilization, including metrics for other AWS services you may use in an EKS cluster, such as EC2 and EBS. You can read our in-depth guides for information about monitoring your EKS clusters, worker nodes, persistent storage volumes, and more:

- Key metrics for Amazon EKS monitoring

- Tools for collecting Amazon EKS metrics

- Monitoring your EKS cluster with Datadog

Amazon RDS

What is Amazon RDS?

Amazon Relational Database Service (RDS) enables users to quickly provision and operate relational database management systems (RDBMS) in the cloud. Amazon RDS supports several database instance types, as well as widely used database engines (MySQL, Oracle, Microsoft SQL Server, PostgreSQL, and MariaDB). You also have the option to use Amazon Aurora, a database built specifically for RDS that offers MySQL- and PostgreSQL-compatible versions.

Metrics to watch

RDS automates many aspects of provisioning and managing a highly scalable and durable relational database, but it also carries its own set of AWS monitoring challenges. As you scale your RDS deployment, you can monitor its health and performance by looking at the key metrics covered below. Note that some of the metrics covered below may not be applicable to your database engine. Refer to the RDS documentation for details.

FreeStorageSpace

This metric measures the amount of allocated storage available for use on each of your database instances. Monitoring this metric can help you detect if your database instance is running out of storage space, which can lead to data loss and cause serious application issues. Keeping an eye on this metric enables you to increase the allocated storage for your instance before it enters the STORAGE_FULL condition (at which point you won’t be able to connect to the instance).
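One simple way to act on this metric before an instance fills up is to extrapolate its recent trend. The sketch below assumes storage is consumed at a steady linear rate, which real workloads often do not follow, so treat the result as a rough forecast. The function name is hypothetical.

```python
from typing import Optional

GIB = 2**30


def hours_until_full(
    free_bytes_earlier: float, free_bytes_now: float, hours_between: float
) -> Optional[float]:
    """Linear estimate of hours until FreeStorageSpace reaches zero."""
    consumed_per_hour = (free_bytes_earlier - free_bytes_now) / hours_between
    if consumed_per_hour <= 0:
        return None  # free space is flat or growing: no exhaustion forecast
    return free_bytes_now / consumed_per_hour


# 100 GiB free a day ago, 88 GiB free now: roughly a week of headroom left
print(hours_until_full(100 * GIB, 88 * GIB, 24))  # 176.0
```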

DatabaseConnections

DatabaseConnections tracks the number of open database connections for a given collection period. You can use this metric to gauge current database activity and to avoid reaching the connection limit, as determined by the max_connections parameter, which factors in your database engine and instance type. Once the connection limit has been reached, the RDS instance will refuse new connections and return an error to the client.
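Since the limit varies by engine and instance type, comparing DatabaseConnections against your instance’s effective max_connections value is more useful than watching the raw count. A minimal sketch, assuming you already know that value for the instance; the 80 percent warning level and the function names are hypothetical:

```python
def connection_utilization(database_connections: float, max_connections: int) -> float:
    """Percentage of the max_connections limit currently in use."""
    return 100.0 * database_connections / max_connections


def near_connection_limit(
    database_connections: float, max_connections: int, warn_percent: float = 80.0
) -> bool:
    """True once utilization reaches the (hypothetical) warning level."""
    return connection_utilization(database_connections, max_connections) >= warn_percent


print(connection_utilization(450, 600))  # 75.0
print(near_connection_limit(510, 600))   # True
```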

ReadLatency and WriteLatency

This pair of metrics measures the average number of seconds each disk I/O request takes to complete. Measuring disk latency can be useful for identifying and investigating resource constraints that affect database performance.

DiskQueueDepth

DiskQueueDepth measures the number of queued I/O requests awaiting disk access. You should monitor this metric alongside latency in order to detect bottlenecks at the storage layer that could degrade application performance.
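One reason these two metrics tend to move together is Little’s law, which in steady state relates the average number of outstanding requests to throughput and time spent in the system: average latency is approximately queue depth divided by IOPS. If total IOPS (the RDS ReadIOPS and WriteIOPS metrics) has plateaued at the storage limit, further growth in DiskQueueDepth shows up directly as higher latency. A back-of-the-envelope sketch under that steady-state assumption; the function name is hypothetical:

```python
def estimated_io_latency_ms(disk_queue_depth: float, total_iops: float) -> float:
    """Little's law estimate: average latency = outstanding I/Os / throughput."""
    if total_iops == 0:
        return 0.0
    return disk_queue_depth / total_iops * 1000


# Same 2,000 IOPS ceiling, queue depth doubles from 8 to 16: latency doubles
print(round(estimated_io_latency_ms(8, 2_000), 3))   # 4.0
print(round(estimated_io_latency_ms(16, 2_000), 3))  # 8.0
```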

Further reading

Getting insights into the performance of your Amazon RDS database requires a nuanced approach to AWS monitoring. You need to look at RDS metrics, and supplement them with metrics queried directly from your database engine. The articles below provide a deeper dive into best practices for monitoring RDS on MySQL, PostgreSQL, and Amazon Aurora:

- Monitoring RDS MySQL performance metrics

- Key metrics for Amazon RDS PostgreSQL monitoring

- Monitoring Amazon Aurora performance metrics

Amazon ElastiCache

What is Amazon ElastiCache?

Amazon ElastiCache is a hosted and managed in-memory cache service. ElastiCache can greatly improve the throughput and latency of read-intensive workloads by allowing applications to quickly access frequently requested data from the cache without querying the backend. ElastiCache gives users the flexibility to choose their preferred caching engine: Redis or Memcached.

Metrics to watch

To ensure that ElastiCache is helping to speed up your applications and improve user experience, you should continuously track CloudWatch performance metrics, and supplement them with higher-granularity metrics queried directly from your caching engine (as explained in the articles listed in the “Further reading” section below). The following section outlines a few key metrics that apply to ElastiCache, whether it’s running Redis or Memcached.

CurrConnections (current connections)

Current connections measures the number of clients currently connected to the cache. Sudden drops in this metric can indicate infrastructure failures that require further investigation. You should watch this metric and compare it against your connection limit so you can scale up if necessary before the limit is reached (after which point any new connections will be refused). You can collect this data from CloudWatch with the CurrConnections metric, or directly from the caching engine, regardless of whether you’re using Redis or Memcached.

SetTypeCmds and GetTypeCmds (number of Set and Get commands processed)

This pair of metrics measures the number of Set and Get commands processed by the caching engine. You should use these throughput metrics as a key indicator of cache usage and to help diagnose any latency issues.

CloudWatch provides these metrics through the SetTypeCmds/GetTypeCmds metrics for Redis, and the CmdSet/CmdGet metrics for Memcached. You can also query metrics tracking the number of Get and Set commands directly from Memcached, but not from Redis.

CacheHits and CacheMisses

This pair of metrics helps you calculate the hit rate, or percentage of successful lookups, which is a primary indicator of cache efficiency. Low hit rates might indicate that the size of your cache is too small to hold the data your workload requires and that you need to increase the cache size.

These metrics can be collected from CloudWatch using the CacheHits/CacheMisses metrics for Redis, and the GetHits/GetMisses metrics for Memcached. They can also be collected directly from the caching engine, whether you’re using Redis or Memcached.
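The hit rate itself is the share of lookups served from the cache: hits divided by hits plus misses. A minimal sketch that works with either CacheHits/CacheMisses (Redis) or GetHits/GetMisses (Memcached) summed over the same period; the function name is hypothetical:

```python
def cache_hit_rate(hits: int, misses: int) -> float:
    """Percentage of lookups that were served from the cache."""
    lookups = hits + misses
    if lookups == 0:
        return 0.0  # no lookups in the period
    return 100.0 * hits / lookups


print(cache_hit_rate(9_000, 1_000))  # 90.0
```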

Evictions

This metric tracks the number of items removed from the cache to make room for new writes. If you see an increasing number of evictions, you should scale up your cache by migrating to a larger node type, or by adding nodes (if you use Memcached).

You can collect this metric from CloudWatch by using the Evictions metric (for both Redis and Memcached). You can also access this metric directly from either caching engine.

SwapUsage

SwapUsage measures the amount of disk used to hold data that should be in memory. You should track this metric closely because high swap usage defeats the purpose of in-memory caching and can significantly degrade application performance.

You can collect this metric from CloudWatch by using the SwapUsage metric (for both Redis and Memcached).

Further reading

While understanding these performance and resource utilization metrics can help ensure that your cache is functioning properly, you should supplement CloudWatch metrics with data queried directly from your caching engine. The articles below show you how to comprehensively monitor ElastiCache and native metrics from your caching engine:

- Monitoring ElastiCache performance metrics with Redis or Memcached

- Collecting ElastiCache metrics + its Redis/Memcached metrics

A new mindset for AWS monitoring

Over the years, AWS has been instrumental in driving the adoption of basic cloud computing services, as well as the expansion and ease of use of managed infrastructure services. New technology paradigms like serverless computing have helped abstract away even more of the intricacies of configuring and managing cloud computing resources, enabling developers to focus on running their code without managing the underlying infrastructure.

In 2014, Amazon released AWS Lambda, a Functions-as-a-Service (FaaS) offering for serverless computing. Using this approach to application deployment also demands a shift in the way that users monitor their AWS services. Whereas traditional practices were anchored in tracking system-level metrics, serverless monitoring shifts the focus to performance and usage data from the functions themselves. The next section will explore key concepts and metrics that are fundamental to driving this shift in your AWS monitoring mindset.

AWS Lambda

What is AWS Lambda?

AWS Lambda is an event-based compute service that allows you to execute code without the need to provision and manage the underlying server resources. You can configure Lambda functions to execute in response to AWS events or to API calls made through Amazon API Gateway, or you can invoke them manually from the AWS Management Console. You can also schedule Lambda functions to execute at regular intervals. While Lambda offers an alternative to more traditional AWS infrastructure, it also integrates with established AWS services like ELB, SES, and S3.

Metrics to watch

One of the main benefits of utilizing AWS Lambda is that it runs your code on highly available compute resources, which means that you no longer need to spend time on provisioning and scaling your infrastructure to meet the demands of varying workloads. While this means that you lose access to system-level data, there are a few standard metrics you can use to monitor Lambda usage and performance:

Duration

This metric measures the time it takes to execute a function, in milliseconds. Since this metric measures the entire operation (from invocation to conclusion), you can use it to gauge performance, similar to latency metrics from a traditional application.

Errors

This metric tracks the number of Lambda executions that resulted in an error. If you see an increased error rate, you can investigate by looking at your Lambda logs—they can point to the exact source of the issue (e.g., out-of-memory exception, timeout, or a permissions error).

Invocations

Invocations measures the number of times a function was executed (regardless of whether it failed or succeeded), in response to an event or invocation API call. This metric can help you measure Lambda usage and calculate projected costs.
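To turn Invocations into a projected cost, Lambda pricing combines a per-request charge with a compute charge measured in GB-seconds (execution time multiplied by the memory configured for the function). The sketch below takes both prices as parameters rather than hard-coding them, because rates vary by region and architecture and change over time. It also ignores the free tier and per-millisecond rounding. The function names are hypothetical.

```python
def lambda_gb_seconds(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Compute usage: invocations x duration (s) x configured memory (GB)."""
    return invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)


def projected_lambda_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,  # look up current pricing for your region
    price_per_gb_second: float,         # look up current pricing for your region
) -> float:
    """Request charge plus compute charge, before any free tier."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = lambda_gb_seconds(invocations, avg_duration_ms, memory_mb) * price_per_gb_second
    return request_cost + compute_cost


# 2 million invocations averaging 200 ms at 512 MB use about 200,000 GB-seconds
print(round(lambda_gb_seconds(2_000_000, 200, 512)))  # 200000
```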

ConcurrentExecutions

This metric provides the sum of concurrent executions across all functions in your account. If you’ve set function-level concurrency limits, you can also query this metric for each of those individual functions. The concurrent execution limit is set to 1,000 per region, by default. Monitoring this metric can help you understand how to set better concurrency limits on individual Lambda functions, to ensure that a spike in the number of invocations of one function won’t prevent other functions from getting access to the resources they need to handle their workload. This metric can also be aggregated over time and queried as an average to determine function scale and associated costs.
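Before setting function-level limits, it can help to estimate how much concurrency each function needs. A common approximation is concurrency equals requests per second multiplied by average duration in seconds. The sketch below applies it and compares the total against the default regional limit of 1,000 mentioned above; the function names and traffic figures are illustrative.

```python
from typing import Dict, Tuple

DEFAULT_REGIONAL_LIMIT = 1_000


def estimated_concurrency(requests_per_second: float, avg_duration_ms: float) -> float:
    """Approximate concurrent executions for one function."""
    return requests_per_second * avg_duration_ms / 1000


def remaining_regional_concurrency(
    functions: Dict[str, Tuple[float, float]], limit: int = DEFAULT_REGIONAL_LIMIT
) -> float:
    """functions maps name -> (requests per second, average duration in ms)."""
    used = sum(estimated_concurrency(rps, ms) for rps, ms in functions.values())
    return limit - used


traffic = {"checkout": (500, 300), "thumbnails": (200, 1_500)}
print(estimated_concurrency(500, 300))          # 150.0
print(remaining_regional_concurrency(traffic))  # 550.0
```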

Throttles

This metric provides a count of the invocation attempts that were throttled because they exceeded the concurrent execution limit. Tracking this metric can help you refine concurrency limits to best match your Lambda workload. For example, a high number of throttles could indicate that you need to increase your concurrency limits to lower the number of failed invocations.

ProvisionedConcurrencyInvocations

Since Lambda only runs your function code when needed, you may notice additional latency (cold starts) if your functions haven’t been used in a while. This can make incoming requests take longer to process, especially if Lambda needs to initialize new instances to support a burst of requests. You can mitigate this by using provisioned concurrency, which automatically keeps functions pre-initialized so that they’ll be ready to process requests. As with reserved concurrency, you configure provisioned concurrency for specific functions to ensure they always have enough concurrency to scale and quickly process requests.

Similar to the invocations metric, the provisioned concurrency invocations metric tracks any invocations running on provisioned concurrency, if configured. Monitoring this metric is important because a sudden drop in provisioned concurrency invocations could indicate an issue with the function or an upstream service.

ProvisionedConcurrencyUtilization

Monitoring provisioned concurrency utilization allows you to see if a function is using its provisioned concurrency efficiently. A function that consistently reaches its utilization threshold and uses up all of its available provisioned concurrency may need additional concurrency. Or, if utilization is consistently low, you may have overprovisioned a function, which can increase your overall costs.
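Utilization is essentially the provisioned concurrency in use divided by the amount configured. The sketch below computes it from ProvisionedConcurrentExecutions samples and applies the two checks described above. The 90 percent and 30 percent cutoffs, the returned labels, and the function name are hypothetical.

```python
from typing import Sequence


def assess_provisioned_concurrency(
    executions: Sequence[float],  # ProvisionedConcurrentExecutions samples
    configured: int,              # provisioned concurrency allocated
    high: float = 0.9,            # hypothetical cutoffs
    low: float = 0.3,
) -> str:
    utilization = [e / configured for e in executions]
    if all(u >= high for u in utilization):
        return "consistently saturated: consider more provisioned concurrency"
    if all(u <= low for u in utilization):
        return "consistently low: possibly overprovisioned"
    return "ok"


print(assess_provisioned_concurrency([95, 100, 98], configured=100))
# consistently saturated: consider more provisioned concurrency
```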

Further reading

In addition to the performance and usage metrics outlined above, you should also collect detailed Lambda metrics that provide specific insights into the workload and performance of your Lambda functions. To get a more in-depth look at configuring Lambda functions, instrumenting your applications for serverless computing, and monitoring AWS Lambda with Datadog, read the articles below:

- Key metrics for monitoring Lambda functions

- Tools for collecting AWS Lambda data

- Monitoring AWS Lambda functions with Datadog

AWS Fargate

What is AWS Fargate?

AWS Fargate is a serverless compute engine for containers that works with both Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS), enabling you to run containers without having to manually provision servers or Amazon EC2 instances. To launch applications with Fargate on ECS, you define tasks with specific networking, IAM policies, CPU, and memory requirements. Fargate then automatically launches containers based on that configuration to run your applications. For EKS, you create a Fargate profile that determines which Kubernetes pods should run on Fargate. AWS then automatically provisions those pods with Fargate compute resources that best match each pod’s resource requirements.

Metrics to watch

Since you can use Fargate on both EKS and ECS, there are some key metrics for monitoring Fargate performance on both platforms.

MemoryUtilization and CPUUtilization

Memory and CPU utilization are critical metrics for ensuring that you have not over- or underprovisioned your containers, because Fargate pricing is based on the CPU and memory resources configured for each task or pod. You may be spending more than necessary if, for example, you have allocated more memory for a task than it typically uses. Monitoring memory utilization can help you determine where to reduce provisioned memory to save costs without affecting performance. Monitoring CPU utilization for EKS pods and ECS clusters can also help you determine when compute resources are close to throttling, so you can scale your deployments to accommodate the load.
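To put a number on overprovisioning, you can estimate how many GB-hours of configured memory go unused. A minimal sketch, assuming average memory usage per task or pod is already known; multiplying the result by the current Fargate per-GB-hour rate for your region approximates the wasted spend. The function name and figures are illustrative.

```python
def unused_memory_gb_hours(
    allocated_gb: float, avg_used_gb: float, task_count: int, hours: float
) -> float:
    """Configured-but-unused memory across tasks or pods over a time window."""
    return max(allocated_gb - avg_used_gb, 0.0) * task_count * hours


# 10 tasks allocated 4 GB each but averaging 1.5 GB, over 720 hours (30 days)
print(unused_memory_gb_hours(4, 1.5, 10, 720))  # 18000.0
```

Sizing down to the average would leave no room for peaks, so in practice you would keep some headroom above peak usage.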

Further reading

AWS Fargate also provides network throughput and I/O metrics for better visibility into service performance. To get a more in-depth look into monitoring Fargate, read the articles below:

A dynamic approach to AWS monitoring

In this post, we’ve explored the evolution of AWS infrastructure and the key metrics to monitor across several types of AWS services to keep your applications running at peak performance. Adopting an automated, scalable AWS monitoring strategy will enable you to keep tabs on your infrastructure, even as hosts and services dynamically scale and update in real time. Datadog’s integrations with 1,000+ technologies, including the full suite of AWS services, allow you to gain full visibility into your stack, even as it evolves.

If you don’t yet have a Datadog account, you can sign up for a free 14-day trial and start monitoring your cloud infrastructure and applications today.