Full visibility into your OpenAI usage

Organizations of all sizes and industries increasingly rely on large language models (LLMs) to improve efficiency and scale operations. LLMs mimic human intelligence by analyzing and learning the patterns and connections between words and phrases. They are one type of generative artificial intelligence (AI) model, a class of models that can create new content such as code, images, text, simulations, and videos. OpenAI is an AI research laboratory that aims to promote and develop safe and beneficial AI solutions. Its products, such as ChatGPT and DALL·E 2, use generative AI to help companies rapidly respond to queries, fetch data or information, solve problems, and generate ideas.

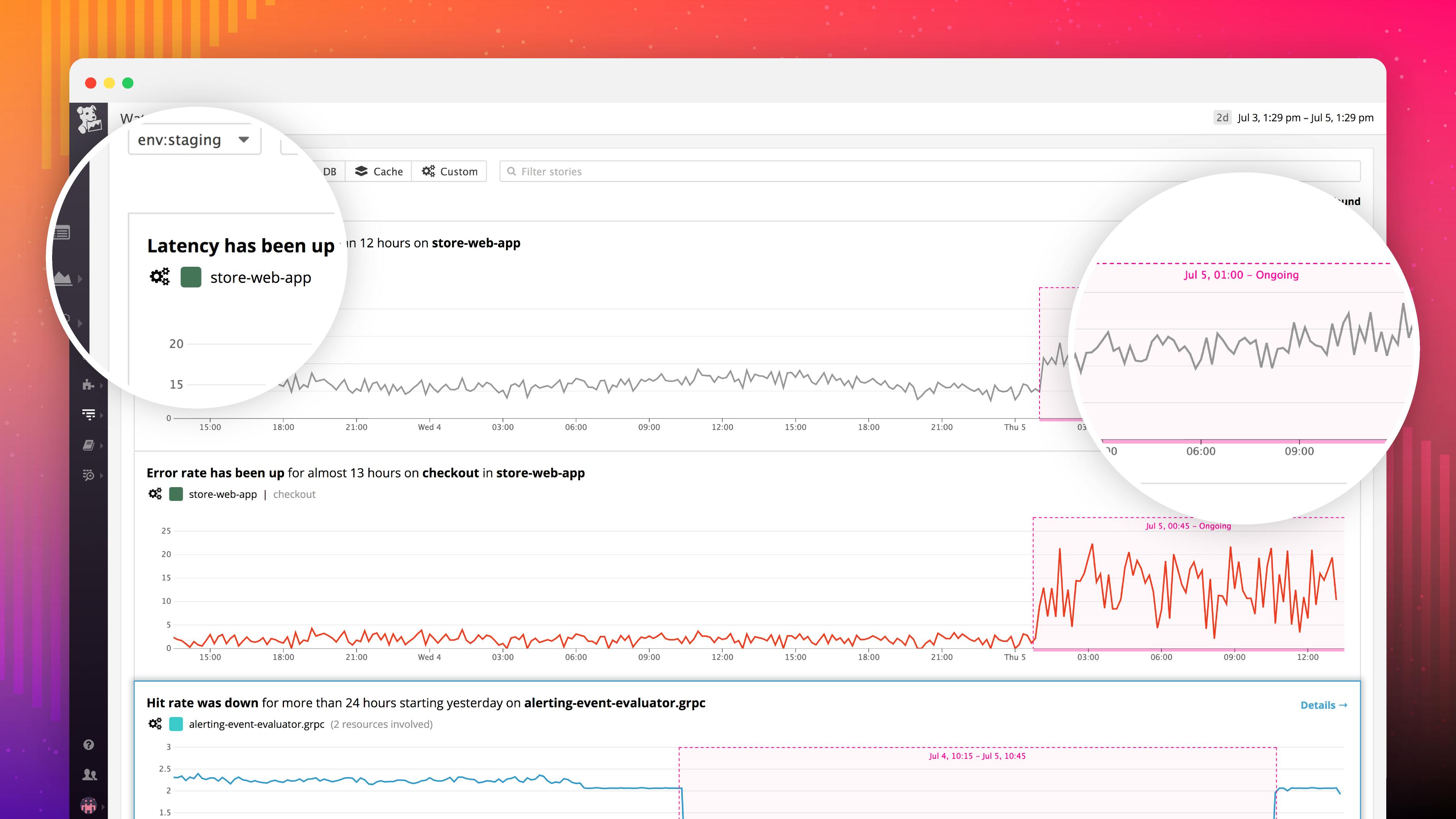

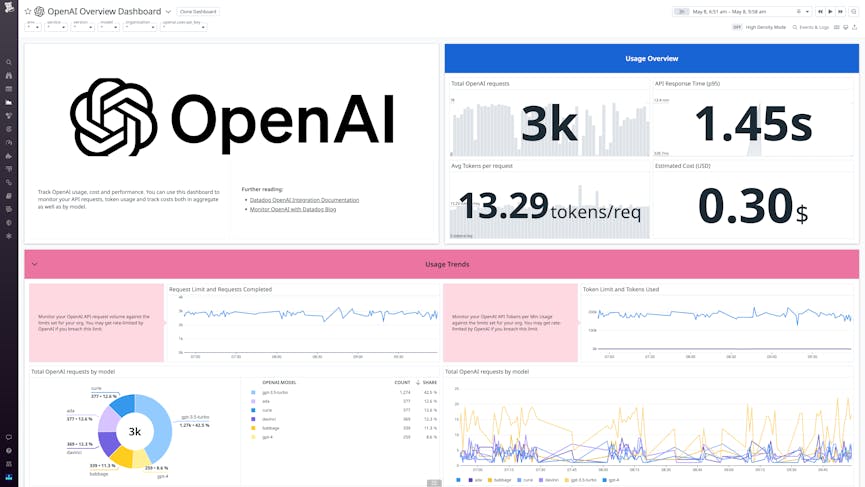

Datadog lets organizations monitor the resources they consume through OpenAI solutions so they can understand usage trends and allocate costs more effectively. Enabling the OpenAI integration in Datadog provides access to an out-of-the-box dashboard that breaks down requests to the OpenAI API by OpenAI model, service, organization ID, and API key. Users can also assign unique organization IDs to individual teams, making it easier to track where and to what extent each OpenAI model is used throughout the organization. This granular insight shows which teams, services, and applications use OpenAI the most, and which models they rely on. It also helps organizations monitor the overall rate and volume of OpenAI usage so they can avoid breaching API rate limits or causing excess latency.

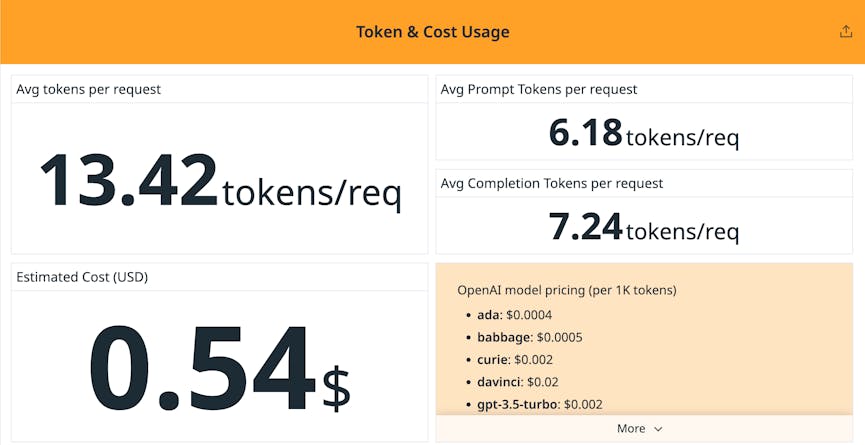
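To make the idea of a per-dimension breakdown concrete, here is a minimal sketch in plain Python. It is not Datadog's implementation and does not call any Datadog or OpenAI API; the record fields (`org_id`, `service`, `model`) and the sample values are assumptions chosen to mirror the dimensions named above.

```python
from collections import Counter


def breakdown(requests, *dimensions):
    """Count requests grouped by the named dimensions (tag-like fields)."""
    return Counter(tuple(req[dim] for dim in dimensions) for req in requests)


# Hypothetical request records; field names and values are illustrative only.
requests = [
    {"org_id": "org-search", "service": "autocomplete", "model": "gpt-4"},
    {"org_id": "org-search", "service": "autocomplete", "model": "gpt-4"},
    {"org_id": "org-search", "service": "ranking", "model": "davinci"},
    {"org_id": "org-support", "service": "chatbot", "model": "gpt-4"},
]

# Which team (organization ID) uses which model, and how often.
by_team_and_model = breakdown(requests, "org_id", "model")
for (org_id, model), count in by_team_and_model.most_common():
    print(f"{org_id:12} {model:10} {count}")
```

Grouping by a different set of dimensions, for example `breakdown(requests, "service")`, answers a different question from the same records, which is the same idea as slicing a dashboard by a different tag.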

Track costs based on token usage

Usage of the OpenAI API is billed by tokens, which are common sequences of characters that are “consumed” when text is fed into the OpenAI API. Datadog helps users understand the primary cost drivers for OpenAI usage by tracking total token consumption, the average total number of tokens per request, and the average number of prompt and completion tokens per request. This helps users spot spikes in OpenAI costs and see which requests, teams, and services incur the highest costs.
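The token figures described above can be sketched with a few lines of Python. This is an illustration under stated assumptions, not how Datadog computes its metrics: the `RequestUsage` record, the `team` field, and the per-1,000-token prices are hypothetical, and real prices vary by model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RequestUsage:
    team: str               # hypothetical label for the calling team
    prompt_tokens: int      # tokens sent to the API
    completion_tokens: int  # tokens returned by the API


def summarize(usages):
    """Return total tokens and per-request averages for a list of requests."""
    n = len(usages)
    prompt = sum(u.prompt_tokens for u in usages)
    completion = sum(u.completion_tokens for u in usages)
    return {
        "total_tokens": prompt + completion,
        "avg_tokens_per_request": (prompt + completion) / n if n else 0.0,
        "avg_prompt_tokens": prompt / n if n else 0.0,
        "avg_completion_tokens": completion / n if n else 0.0,
    }


def estimated_cost_by_team(usages, prompt_price_per_1k, completion_price_per_1k):
    """Estimate cost per team from token counts and assumed per-1k prices."""
    costs = defaultdict(float)
    for u in usages:
        costs[u.team] += (
            u.prompt_tokens / 1000 * prompt_price_per_1k
            + u.completion_tokens / 1000 * completion_price_per_1k
        )
    return dict(costs)


usages = [
    RequestUsage("search", 100, 50),
    RequestUsage("search", 300, 150),
    RequestUsage("support", 200, 100),
]
print(summarize(usages))
# The prices below are placeholders, not actual OpenAI pricing.
print(estimated_cost_by_team(usages, prompt_price_per_1k=0.5, completion_price_per_1k=1.5))
```

Keeping prompt and completion tokens separate matters because the two are often priced differently, so a rise in completion length can move costs even when request volume is flat.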

Analyze API performance

An additional Datadog dashboard for OpenAI enables users to track metrics like API error rates, response times, and token usage for each of the OpenAI models their organization is using. This dashboard also tracks the ratio of response times to the volume of prompt tokens, which helps users distinguish truly anomalous latencies from prolonged response times caused by spikes in requests. Datadog also provides traces and spans on individual requests to the OpenAI API so users have rich contextual information, like the specific models that were queried and the precise content of prompts and completions.
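One way to read the response-time-to-prompt-token ratio is as latency normalized by prompt size. The sketch below assumes that interpretation; the function names, the median baseline, and the `factor` threshold are illustrative choices, not Datadog's method.

```python
from statistics import median


def latency_per_prompt_token(duration_ms, prompt_tokens):
    """Response time divided by prompt size; guards against zero-token prompts."""
    return duration_ms / max(prompt_tokens, 1)


def flag_anomalies(requests, factor=3.0):
    """Return indexes of requests whose ratio exceeds `factor` times the median.

    `requests` is a list of (duration_ms, prompt_tokens) pairs.
    """
    if not requests:
        return []
    ratios = [latency_per_prompt_token(d, t) for d, t in requests]
    baseline = median(ratios)
    return [i for i, r in enumerate(ratios) if r > factor * baseline]


# Hypothetical samples: (response time in ms, prompt tokens).
samples = [(200, 100), (400, 200), (2000, 1000), (3000, 100)]
print(flag_anomalies(samples))
```

In this sample the 2,000 ms request is not flagged, because its prompt is ten times larger than the first request's and its latency per token is in line with the others. The 3,000 ms request with a small prompt stands out instead.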

Get insights across multiple AI models

In addition to covering the GPT family of LLMs, Datadog’s integration enables organizations to track performance, costs, and usage for other OpenAI models, including Ada, Babbage, Curie, and Davinci.

Start monitoring your OpenAI integration usage in minutes

Organizations will continue to deepen their use of AI to transform business operations and improve customer experiences. Datadog enables them to monitor OpenAI usage, resources, and performance, providing end-to-end visibility into cost and utilization data. With this depth of insight, teams can optimize how they use OpenAI and gain greater control over how and when they integrate OpenAI products into their processes.