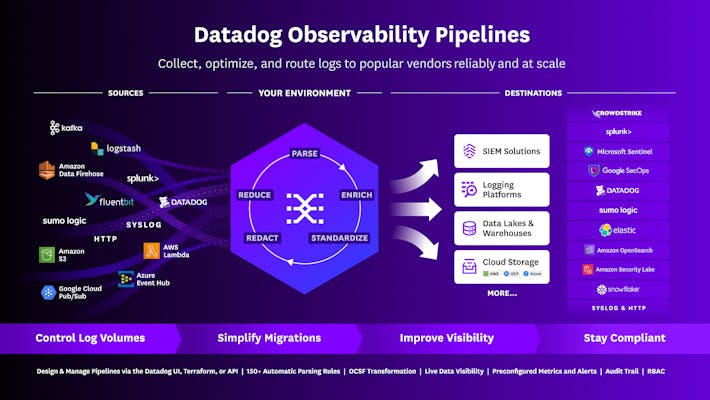

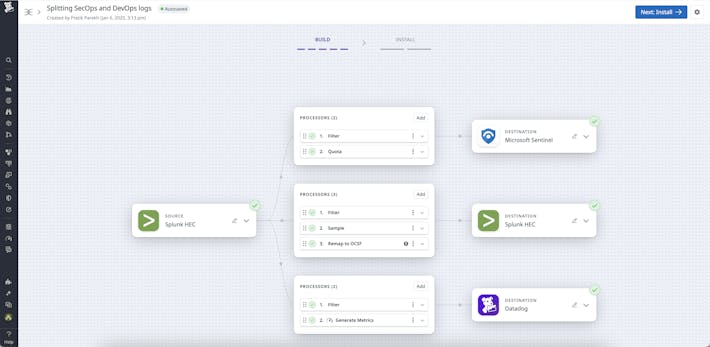

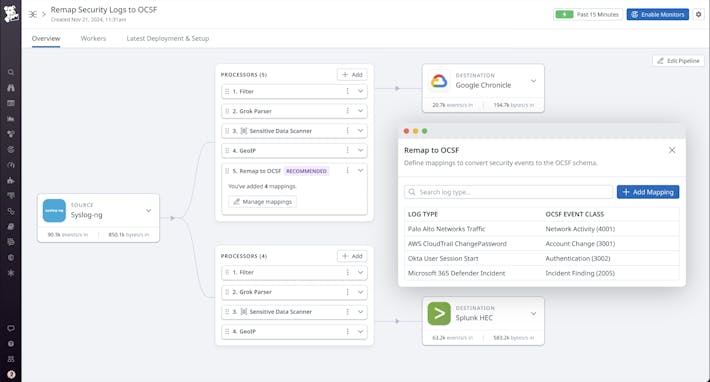

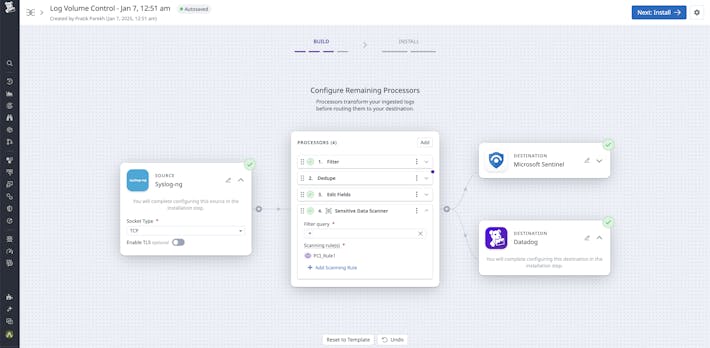

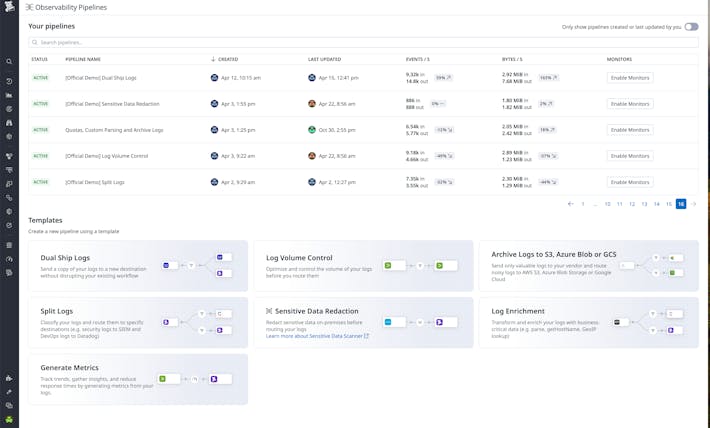

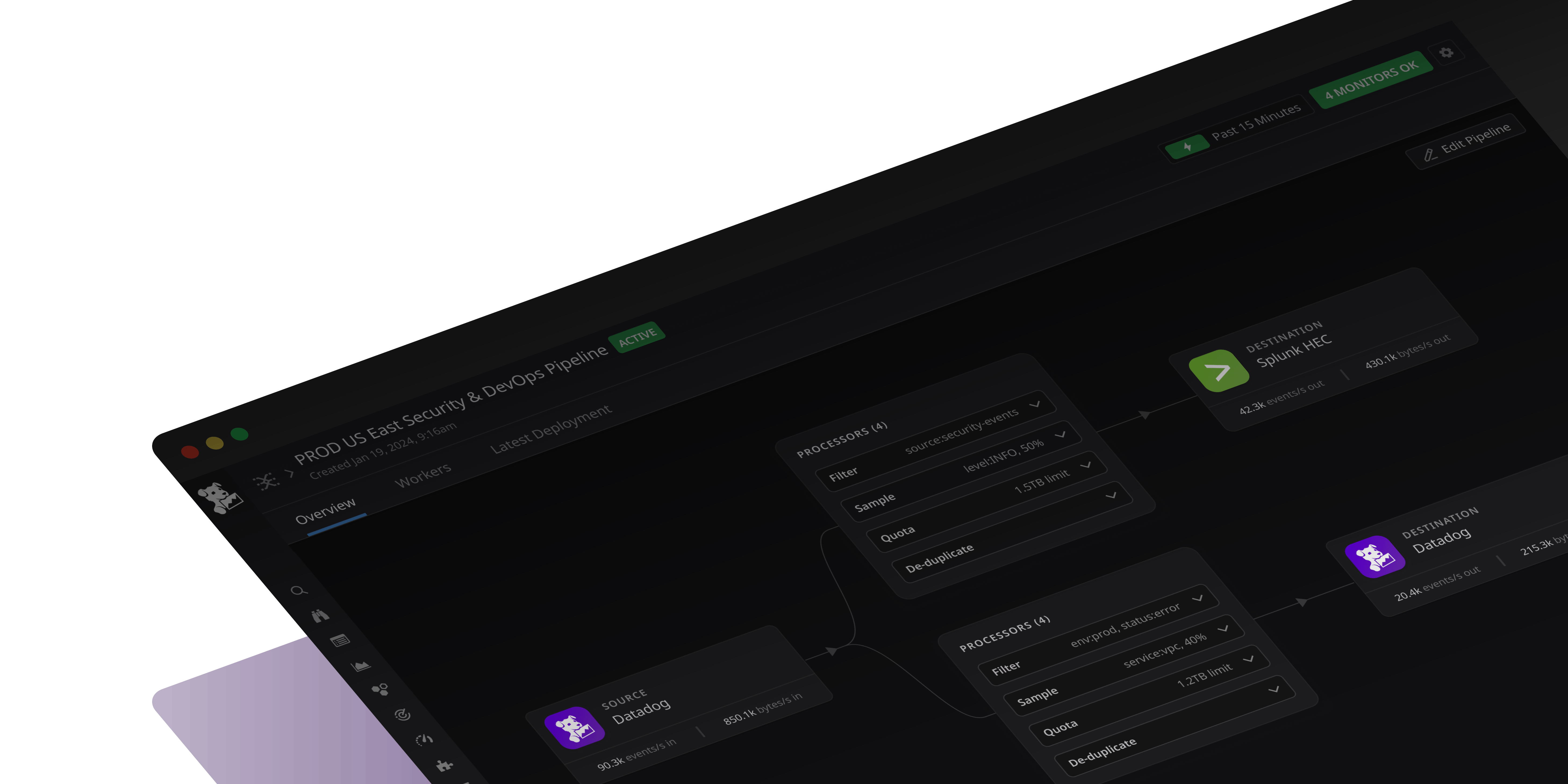

Observability Pipelines

Control log volumes, flexibly adopt your preferred security tools, and manage sensitive data at scale