Evan Mouzakitis

David M. Lentz

Kafka deployments often rely on additional software packages not included in the Kafka codebase itself—in particular, Apache ZooKeeper. A comprehensive monitoring implementation includes all the layers of your deployment so you have visibility into your Kafka cluster and your ZooKeeper ensemble, as well as your producer and consumer applications and the hosts that run them all. To implement ongoing, meaningful monitoring, you will need a platform where you can collect and analyze your Kafka metrics, logs, and distributed request traces alongside monitoring data from the rest of your infrastructure.

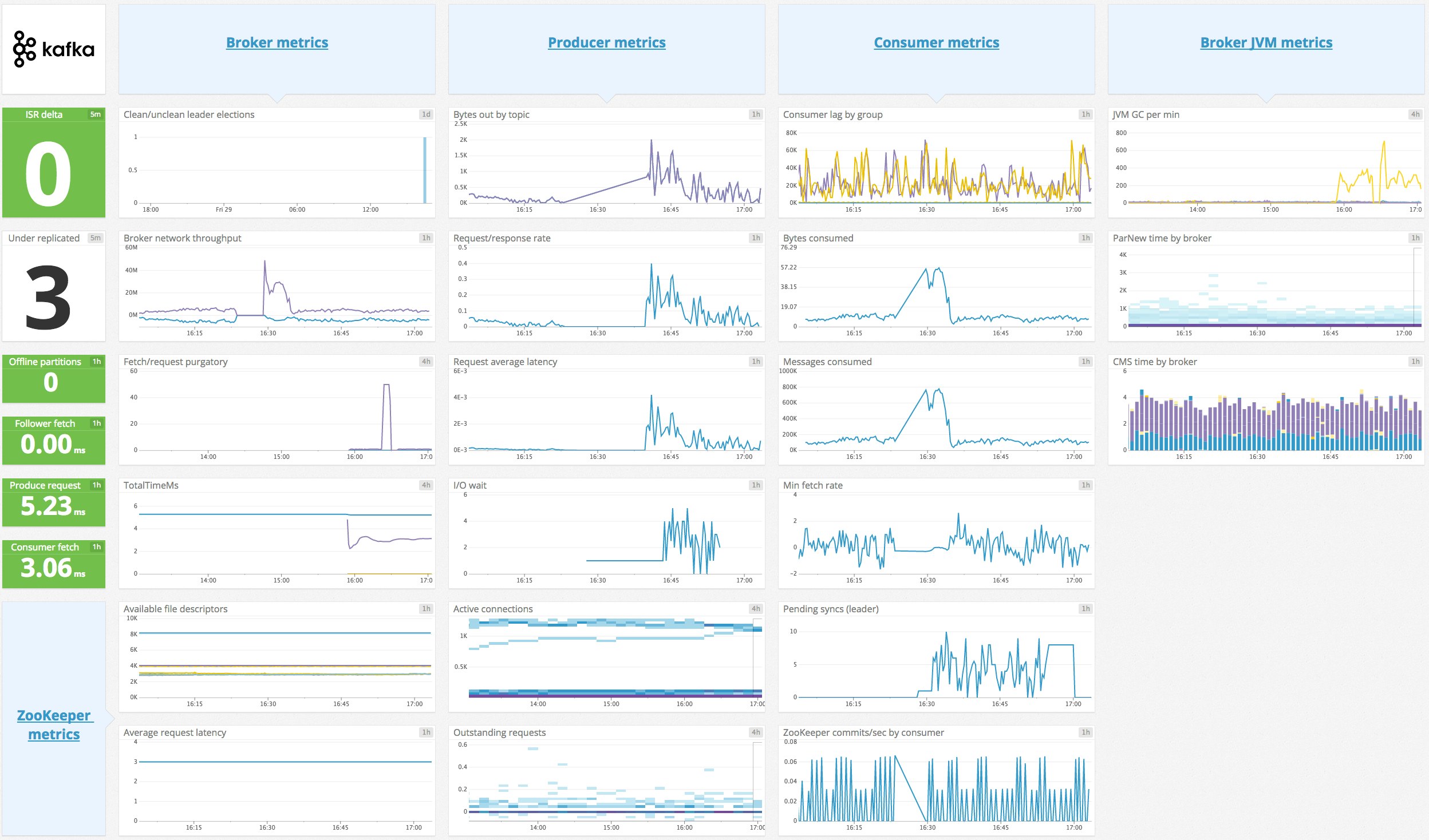

With Datadog, you can collect metrics, logs, and traces from your Kafka deployment to visualize and alert on the performance of your entire Kafka stack. Datadog automatically collects many of the key metrics discussed in Part 1 of this series, and makes them available in a template dashboard, as seen above.

Integrating Datadog, Kafka, and ZooKeeper

In this section, we'll describe how to install the Datadog Agent to collect metrics, logs, and traces from your Kafka deployment. First you'll need to ensure that Kafka and ZooKeeper are sending JMX data, then install and configure the Datadog Agent on each of your producers, consumers, and brokers.

Verify Kafka and ZooKeeper

Before you begin, you must verify that Kafka is configured to report metrics via JMX. Confirm that Kafka is sending JMX data to your chosen JMX port, and then connect to that port with JConsole.

Similarly, check that ZooKeeper is sending JMX data to its designated port by connecting with JConsole. You should see data from the MBeans described in Part 1.
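Before opening JConsole, it can help to confirm that something is listening on the JMX port at all. The following is a minimal Python sketch of that check; it is not part of the Kafka or Datadog tooling, and `port_is_open` is a hypothetical helper name. The port you pass in is whatever JMX port you chose (9999 is the port that appears in the sample Agent output later in this post). A successful TCP connection only shows that the port is open, so JConsole is still needed to confirm that the expected MBeans are exposed.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False
```

For example, `port_is_open("localhost", 9999)` should return `True` on a broker that exposes JMX on port 9999.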

Install the Datadog Agent

The Datadog Agent is open source software that collects metrics, logs, and distributed request traces from your hosts so that you can view and monitor them in Datadog. Installing the Agent usually takes just a single command.

Install the Agent on each host in your deployment—your Kafka brokers, producers, and consumers, as well as each host in your ZooKeeper ensemble. Once the Agent is up and running, you should see each host reporting metrics in your Datadog account.

Configure the Agent

Next you will need to create Agent configuration files for both Kafka and ZooKeeper. The location of the Agent configuration directory depends on your OS and is listed in the Agent documentation. In that directory, you will find sample configuration files for Kafka and ZooKeeper. To monitor Kafka with Datadog, you will need to edit both the Kafka and Kafka consumer Agent integration files. (See the documentation for more information on how these two integrations work together.) The configuration file for the Kafka integration is in the kafka.d/ subdirectory, and the Kafka consumer integration's configuration file is in the kafka_consumer.d/ subdirectory. The ZooKeeper integration has its own configuration file, located in the zk.d/ subdirectory.

On each host, copy the sample YAML files in the relevant directories (the kafka.d/ and kafka_consumer.d/ directories on your brokers, and the zk.d/ directory on ZooKeeper hosts) and save them as conf.yaml.
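The copy step can also be scripted. The sketch below rests on two assumptions: that each integration directory holds a sample file named `conf.yaml.example`, and that you pass in the correct configuration directory for your OS (the sample status output in this post shows /etc/datadog-agent/conf.d). The helper name `activate_sample_config` is hypothetical. It never overwrites an existing conf.yaml.

```python
import shutil
from pathlib import Path


def activate_sample_config(conf_dir: str, integration: str) -> Path:
    """Copy <integration>.d/conf.yaml.example to conf.yaml if conf.yaml is absent."""
    integration_dir = Path(conf_dir) / f"{integration}.d"
    sample = integration_dir / "conf.yaml.example"  # assumed sample file name
    target = integration_dir / "conf.yaml"
    if not sample.is_file():
        raise FileNotFoundError(f"no sample config at {sample}")
    if not target.exists():
        # Leave any existing conf.yaml untouched so local edits are not lost.
        shutil.copyfile(sample, target)
    return target
```

On a broker you would call this for `"kafka"` and `"kafka_consumer"`, and on a ZooKeeper host for `"zk"`. Writing to the configuration directory typically requires elevated privileges.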

The kafka.d/conf.yaml file includes a list of Kafka metrics to be collected by the Agent. You can use this file to configure the Agent to monitor your brokers, producers, and consumers. Change the host and port values (and user and password, if necessary) to match your setup.

You can add tags to the YAML file to apply custom dimensions to your metrics. This allows you to search and filter your Kafka monitoring data in Datadog. Because a Kafka deployment is made up of multiple components—brokers, producers, and consumers—it can be helpful to use some tags to identify the deployment as a whole, and other tags to distinguish the role of each host. The sample code below uses a role tag to indicate that these metrics are coming from a Kafka broker, and a service tag to place the broker in a broader context. The service value here—signup_processor—could be shared by this deployment's producers and consumers.

    tags:
      - role:broker
      - service:signup_processor

Next, in order to get broker and consumer offset information into Datadog, modify the kafka_consumer.d/conf.yaml file to match your setup. If your Kafka endpoint differs from the default (localhost:9092), you'll need to update the kafka_connect_str value in this file. If you want to monitor specific consumer groups within your cluster, you can specify them in the consumer_groups value; otherwise, you can set monitor_unlisted_consumer_groups to true to tell the Agent to fetch offset values from all consumer groups.
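Broker and consumer offsets are most useful together, because their difference is the consumer lag: the number of messages a consumer group has yet to read on a partition. The Python sketch below illustrates that arithmetic only. It is not the Agent's implementation, and the function name, topic name, and offset values are made up for the example.

```python
from typing import Dict, Tuple

# Keys are (topic, partition); values are offsets.
Offsets = Dict[Tuple[str, int], int]


def consumer_lag(broker_offsets: Offsets, consumer_offsets: Offsets) -> Offsets:
    """Return per-partition lag: latest broker offset minus committed consumer offset."""
    lag = {}
    for key, committed in consumer_offsets.items():
        if key not in broker_offsets:
            continue  # no broker offset known for this partition; skip it
        # Clamp at zero in case the two offsets were sampled at slightly different times.
        lag[key] = max(broker_offsets[key] - committed, 0)
    return lag


# Hypothetical offsets for one topic with two partitions.
broker = {("signups", 0): 1500, ("signups", 1): 1200}
group = {("signups", 0): 1450, ("signups", 1): 1200}
print(consumer_lag(broker, group))  # {('signups', 0): 50, ('signups', 1): 0}
```

A lag that keeps growing on one partition suggests that the consumer reading it is not keeping up with the producers.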

Collect Kafka and ZooKeeper logs

You can also configure the Datadog Agent to collect logs from Kafka and ZooKeeper. The Agent's log collection is disabled by default, so first you'll need to modify the Agent's main configuration file (datadog.yaml) to set logs_enabled: true.

Next, in Kafka's conf.yaml file, uncomment the logs section and modify it if necessary to match your broker's configuration. Do the same in ZooKeeper's conf.yaml file, updating the tags section and the logs section to direct the Agent to collect and tag your ZooKeeper logs and send them to Datadog.

In both of the conf.yaml files, you should modify the service tag to use a common value so that Datadog aggregates logs from all the components in your Kafka deployment. Here is an example of how this looks in a Kafka configuration file that uses the same service tag we applied to Kafka metrics in the previous section:

    logs:
      - type: file
        path: /var/log/kafka/server.log
        source: kafka
        service: signup_processor

Note that the default source value in the Kafka configuration file is kafka. Similarly, ZooKeeper's configuration file contains source: zookeeper. This allows Datadog to apply the appropriate integration pipeline to parse the logs and extract key attributes.

You can then filter your logs to display only those from the signup_processor service, making it easy to correlate logs from different components in your deployment so you can troubleshoot quickly.

Collect distributed traces

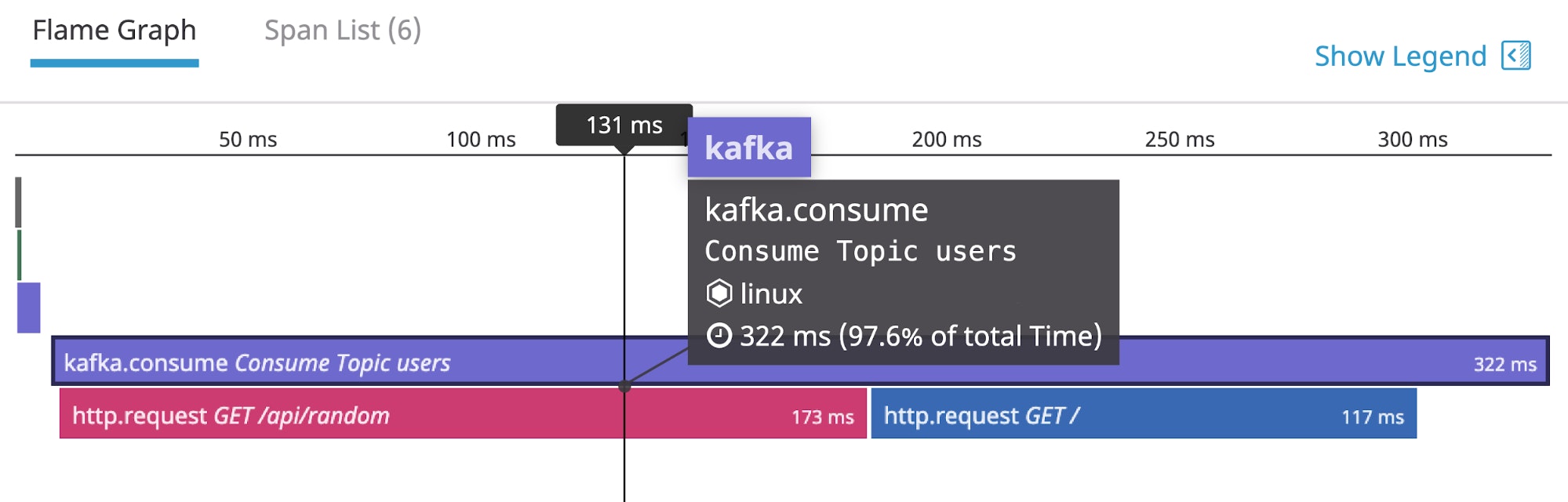

Datadog APM and distributed tracing give you expanded visibility into the performance of your services by measuring request volume and latency. You can create graphs and alerts to monitor your APM data, and you can visualize the activity of a single request in a flame graph like the one shown here to better understand the sources of latency and errors.

Datadog APM can trace requests to and from Kafka clients, and will automatically instrument popular languages and web frameworks. This means you can collect traces without modifying the source code of your producers and consumers. See the documentation for guidance on getting started with APM and distributed tracing.

Verify configuration settings

To check that Datadog, Kafka, and ZooKeeper are properly integrated, first restart the Agent, and then run the status command. If the configuration is correct, the output will contain a section resembling the one below:

    Running Checks
    ======

      [...]

      kafka_consumer (2.3.0)
      ----------------------
        Instance ID: kafka_consumer:55722fe61fb7f11a [OK]
        Configuration Source: file:/etc/datadog-agent/conf.d/kafka_consumer.d/conf.yaml
        Total Runs: 1
        Metric Samples: Last Run: 0, Total: 0
        Events: Last Run: 0, Total: 0
        Service Checks: Last Run: 0, Total: 0
        Average Execution Time : 13ms

      [...]

      zk (2.4.0)
      ----------
        Instance ID: zk:8cd6317982d82def [OK]
        Configuration Source: file:/etc/datadog-agent/conf.d/zk.d/conf.yaml
        Total Runs: 1,104
        Metric Samples: Last Run: 29, Total: 31,860
        Events: Last Run: 0, Total: 0
        Service Checks: Last Run: 1, Total: 1,104
        Average Execution Time : 6ms
        metadata:
          version.major: 3
          version.minor: 5
          version.patch: 7
          version.raw: 3.5.7-f0fdd52973d373ffd9c86b81d99842dc2c7f660e
          version.release: f0fdd52973d373ffd9c86b81d99842dc2c7f660e
          version.scheme: semver

    ========
    JMXFetch
    ========

      Initialized checks
      ==================
        kafka
          instance_name : kafka-localhost-9999
          message :
          metric_count : 61
          service_check_count : 0
          status : OK

Enable the integration

Next, click the Kafka and ZooKeeper Install Integration buttons inside your Datadog account, under the Configuration tab in the Kafka integration settings and ZooKeeper integration settings.


Monitoring your Kafka deployment in Datadog

Once the Agent begins reporting metrics from your deployment, you will see a comprehensive Kafka dashboard among your list of available dashboards in Datadog.

The default Kafka dashboard, as seen at the top of this article, displays the key metrics highlighted in our introduction on how to monitor Kafka.

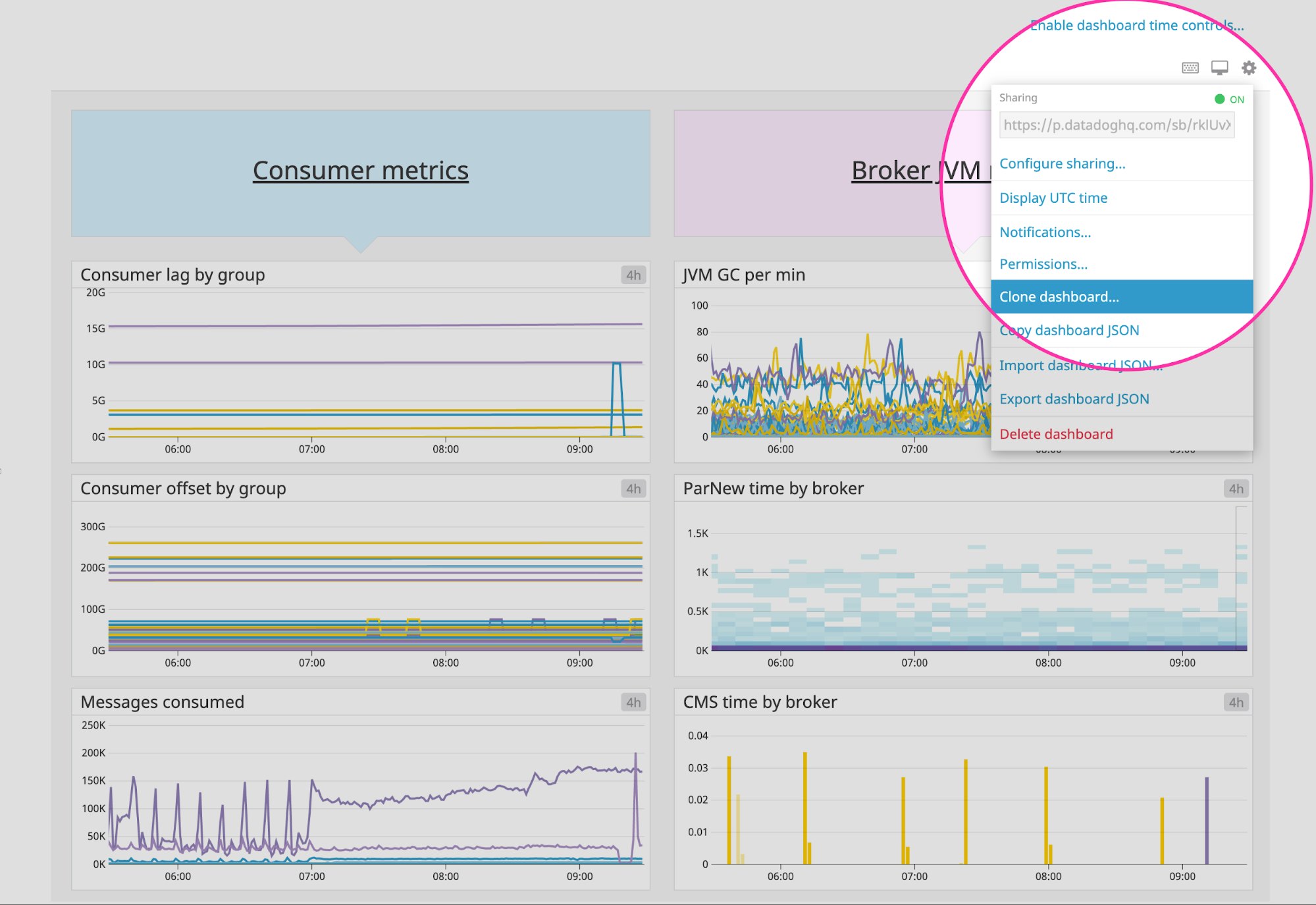

You can create a more comprehensive dashboard to monitor your entire web stack by adding graphs and metrics from your other systems. For example, you might want to graph Kafka metrics alongside metrics from HAProxy or host-level metrics such as memory usage. To start customizing this dashboard, clone it by clicking on the gear in the upper right and selecting Clone dashboard....

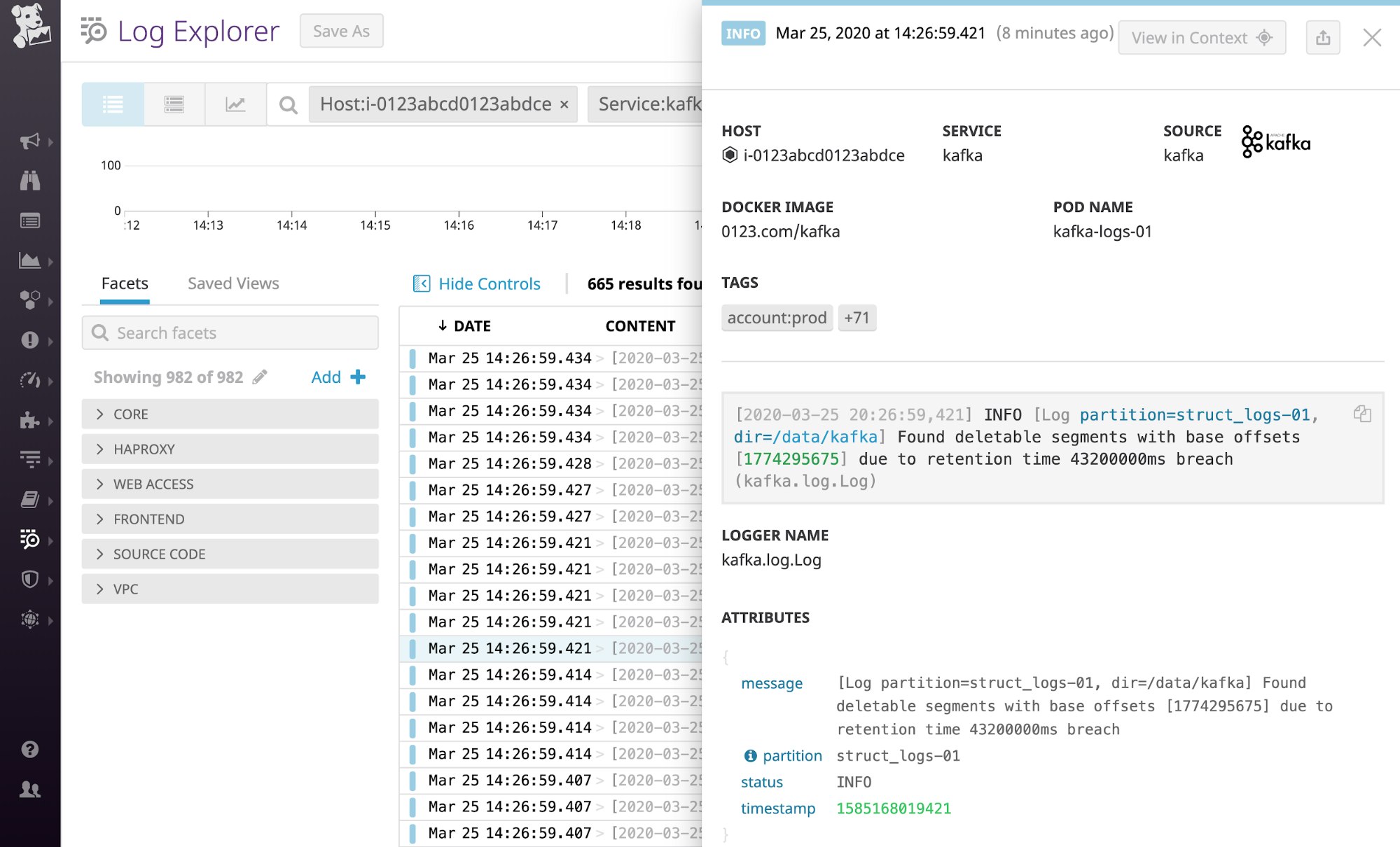

You can click on a graph in the dashboard to quickly view related logs or traces. Or you can navigate to the Log Explorer to search and filter your Kafka and ZooKeeper logs—along with logs from any other technologies you're monitoring with Datadog. The screenshot below shows a stream of logs from a Kafka deployment and highlights a log showing Kafka identifying a log segment to be deleted in accordance with its configured retention policy. You can use Datadog Log Analytics and create log-based metrics to gain insight into the performance of your entire technology stack.

Once Datadog is capturing and visualizing your metrics, logs, and APM data, you will likely want to set up some alerts to be automatically notified of potential issues.

With Datadog's outlier detection feature, you can be alerted when one member of a group starts behaving differently from its peers. For example, you can set an alert to notify you if a particular producer is experiencing an increase in latency while the others are operating normally.
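The idea behind this kind of alert can be sketched in a few lines of Python: flag any producer whose latency sits far from the group's median, measured in units of the median absolute deviation (MAD). This illustrates the general technique only and is not Datadog's implementation; the producer names, latency values, and tolerance of 3 are assumptions chosen for the example.

```python
from statistics import median
from typing import Dict, List


def find_outliers(latency_ms: Dict[str, float], tolerance: float = 3.0) -> List[str]:
    """Return names whose value is more than `tolerance` MADs from the group median."""
    values = list(latency_ms.values())
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # At least half the group sits exactly at the median; any deviation stands out.
        return [name for name, v in latency_ms.items() if v != center]
    return [name for name, v in latency_ms.items() if abs(v - center) / mad > tolerance]


# Hypothetical request latencies (ms) for four producers.
latencies = {"producer-a": 12.0, "producer-b": 11.5, "producer-c": 12.4, "producer-d": 48.0}
print(find_outliers(latencies))  # ['producer-d']
```

Comparing each producer to its peers, rather than to a fixed threshold, means the check still works when latency rises or falls for the whole group at once.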

Additionally, Data Streams Monitoring maps and monitors services and queues across your Kafka pipelines from end to end, helping you track and improve the performance of streaming data pipelines and event-driven applications that use Kafka. The deep visibility provided by DSM enables you to monitor latency between any two points within a pipeline, detect message delays, pinpoint the root cause of bottlenecks, and spot floods of backed-up messages.

Get started monitoring Kafka with Datadog

In this post, we’ve walked you through integrating Kafka with Datadog to monitor key metrics, logs, and traces from your environment. If you’ve followed along using your own Datadog account, you should now have improved visibility into Kafka health and performance, as well as the ability to create automated alerts tailored to your infrastructure, your usage patterns, and the data that is most valuable to your organization.

If you don’t yet have a Datadog account, you can sign up for a free trial and start to monitor Kafka right away.

Source Markdown for this post is available on GitHub. Questions, corrections, additions, etc.? Please let us know.