Mallory Mooney

The swift adoption of generative AI (GenAI) by the software industry has introduced a new area of focus for security engineers: threats targeting the various components of their AI applications. Understanding how these areas are vulnerable to attacks will become increasingly significant as the space evolves. In this series, we’ll look at common threats that target the following components of AI applications:

- Infrastructure: The underlying components that host and run AI applications, such as containers, databases, cloud storage buckets, and Model Context Protocol (MCP) servers

- Supply chain: The software and data that an AI application uses to operate, such as training datasets, pre-trained large language models (LLMs), and third-party AI libraries

- AI interfaces: The entry points and business logic that enable a user to interact with an AI application, such as chat interfaces and API endpoints for supporting services

We’ll cover AI infrastructure in this post, mapping threats that target infrastructure components to MITRE’s Adversarial Threat Landscape for Artificial Intelligence Systems (ATLAS) tactics and techniques, which summarize why and how attackers target AI components. We’ll also look at how to detect and minimize these threats.

Common attacks that target AI infrastructure

The infrastructure for generative AI applications serves as a highway that facilitates scalable, reliable usage. It combines established cloud services with emerging tools and frameworks, such as MCP servers and retrieval-augmented generation (RAG) pipelines. Just like any transportation system, this infrastructure is vulnerable to a variety of risks. Common cloud misconfigurations, such as overprivileged IAM roles, can be introduced at any time. And because the AI ecosystem is still maturing, its resources often launch without adequate controls for authentication, authorization, and logging.
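To make the overprivileged IAM role risk concrete, here is a minimal sketch of a check that flags policy statements pairing a wildcard action with a wildcard resource. It assumes the standard IAM JSON policy layout (`Statement`, `Effect`, `Action`, `Resource`). The function names and the example policy are hypothetical, and a real audit would also need to account for conditions, `NotAction`, and permission boundaries.

```python
import json


def as_list(value):
    """IAM accepts a single value or a list for Statement, Action, and Resource."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_wildcard_statements(policy):
    """Return Allow statements that pair a wildcard action with a wildcard resource."""
    findings = []
    for statement in as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow":
            continue
        actions = as_list(statement.get("Action"))
        resources = as_list(statement.get("Resource"))
        # "*" grants everything; "service:*" grants every action in one service.
        broad_actions = [a for a in actions if a == "*" or a.endswith(":*")]
        broad_resources = [r for r in resources if r == "*"]
        if broad_actions and broad_resources:
            findings.append({"sid": statement.get("Sid"), "actions": broad_actions})
    return findings


# Hypothetical policy attached to a role used by an AI application.
EXAMPLE_POLICY = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Sid": "BroadModelAccess", "Effect": "Allow",
     "Action": "bedrock:*", "Resource": "*"},
    {"Sid": "ReadTrainingData", "Effect": "Allow",
     "Action": ["s3:GetObject"],
     "Resource": "arn:aws:s3:::example-training-data/*"}
  ]
}
""")

for finding in find_wildcard_statements(EXAMPLE_POLICY):
    print(f"Overly broad statement {finding['sid']}: {finding['actions']}")
```

Only the first statement is reported here, because the second one is scoped to a single action and a single bucket path.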

Insufficient security controls and monitoring introduce vulnerabilities across an AI application’s various components. The following diagram shows where certain tactics can take advantage of those weaknesses. For example, attackers often target AI infrastructure, including cloud user and service accounts, databases, and compute instances, both in the initial stages of their attack and as they move laterally after a compromise. Visualizing these connections helps you trace the path of an attack from end to end, including the points where attackers acquire credentials and gather additional information about resources.

Gaining initial access

Initial access involves an attacker attempting to gain a foothold in a particular AI system, such as parts of its infrastructure. Attackers use several techniques to accomplish this, including using valid accounts and exploiting public-facing applications. These techniques are also part of the traditional MITRE ATT&CK framework and take advantage of compromised user account credentials, API keys, or vulnerable infrastructure.

Let’s look at an example of gaining initial access to infrastructure by exploiting public-facing applications and using prompt injection to poison an instance of M365 Copilot. In this example, the organization’s Copilot instance indexed all company emails in a RAG database.

As part of a red teaming exercise, security researchers sent an employee an email that contained a hidden, malicious prompt and fraudulent bank account details. This step successfully exploited the company’s public-facing email service to create a poisoned RAG entry. When certain employees queried the RAG database for financial information, Copilot retrieved the poisoned entry and responded with the incorrect bank information. In the real world, using this information for a wire transfer could result in serious consequences for the company and the individuals who sent the query.

RAG systems in particular introduce new security risks to environments that are already prone to secret sprawl. By design, these systems index organizational data, such as knowledge repositories and codebases that may include sensitive information. If secrets are improperly managed or simply overlooked, RAG systems can unintentionally expose them in their output.
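One way to reduce this exposure is to screen documents for secrets before they reach the index. The following is a rough sketch of that idea, not a complete scanner. The two patterns cover AWS access key IDs and PEM private key headers only, and the function names and document IDs are hypothetical. A production pipeline would typically rely on a dedicated secret-scanning tool with a much larger rule set.

```python
import re

# A deliberately small, illustrative rule set (assumption: real scanners use many more).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}


def find_secrets(text):
    """Return the sorted names of the patterns that match somewhere in the text."""
    return sorted(name for name, pattern in SECRET_PATTERNS.items() if pattern.search(text))


def split_for_indexing(documents):
    """Separate documents that look safe to index from those that need review."""
    clean, quarantined = [], {}
    for doc_id, text in documents.items():
        hits = find_secrets(text)
        if hits:
            quarantined[doc_id] = hits
        else:
            clean.append(doc_id)
    return clean, quarantined


# Hypothetical documents; the key below is the placeholder value from AWS documentation.
documents = {
    "wiki/onboarding": "Welcome to the team! Start with the handbook.",
    "repo/deploy.sh": "export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE",
}

clean, quarantined = split_for_indexing(documents)
print("index:", clean)
print("review first:", quarantined)
```

Quarantining a whole document is a conservative choice. Redacting only the matched span would keep more content searchable, at the cost of trusting the patterns to find every secret.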

Achieving credential access

Credential access is similar to initial access in that it uses credentials from legitimate user accounts to infiltrate an AI system. However, credential access involves actively attempting to find and steal credentials that are not stored securely within an environment.

Attackers were able to use this tactic with Ray, a Python framework that is used for scaling AI applications. They successfully found a public-facing Ray cluster, which included exposed SSH keys, tokens, Kubernetes secrets, and cloud environment keys. These credentials allowed them to steal data and compromise other levels of the AI application, including its supply chain.

Compromised credentials are the leading cause of cloud incidents. This risk grows as organizations integrate third-party libraries into their AI applications, which can be harder to audit than in-house code. If one of these libraries introduces a vulnerability, attackers can exploit it to find and compromise credentials before organizations can react.

Gathering information

Discovery involves an attacker using a variety of techniques to gather information about an AI system and its internal network after a compromise. Many discovery techniques target AI supply chains and integrations, but the cloud service discovery technique attempts to gather information about services running in cloud infrastructure. These can include enabled authentication, serverless, logging, and security services, as well as their associated policies and accounts.

LLM jacking is an example of how this technique shows up in an attack path. After attackers use stolen credentials to access a cloud environment, they look for available services that are running third-party LLMs, such as Amazon Bedrock. To do this, they execute legitimate API calls to these services to test their level of access without immediate detection. In documented cases, AWS CloudTrail logged these calls, which enabled researchers to identify atypical call parameters. In cases like these, where the discovery uses available APIs to probe a cloud environment, the value of logging service activity cannot be overstated. Without this visibility, attackers could have continued to exploit their access to the cloud-hosted LLMs.
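As a rough illustration of how logged API activity supports this kind of investigation, the sketch below summarizes Amazon Bedrock calls made by principals that are not on an expected list. It assumes records shaped like CloudTrail events (`eventSource`, `eventName`, `userIdentity.arn`, `errorCode`). The set of event names to watch, the allowlist, and the sample records are our own assumptions for the example, not a rule from any detection product.

```python
from collections import defaultdict

BEDROCK_SOURCE = "bedrock.amazonaws.com"

# Assumption: a small set of Bedrock API calls worth reviewing for probing behavior.
WATCHED_EVENTS = {
    "ListFoundationModels",
    "GetModelInvocationLoggingConfiguration",
    "InvokeModel",
}


def summarize_bedrock_probing(records, known_principals):
    """Group watched Bedrock calls by principal, skipping principals we expect."""
    summary = defaultdict(lambda: {"calls": 0, "errors": 0, "events": set()})
    for record in records:
        if record.get("eventSource") != BEDROCK_SOURCE:
            continue
        if record.get("eventName") not in WATCHED_EVENTS:
            continue
        principal = record.get("userIdentity", {}).get("arn", "unknown")
        if principal in known_principals:
            continue
        entry = summary[principal]
        entry["calls"] += 1
        entry["events"].add(record["eventName"])
        # CloudTrail sets errorCode only when a call fails (for example, AccessDenied).
        if record.get("errorCode"):
            entry["errors"] += 1
    return dict(summary)


# Hypothetical records; the ARNs and account ID are placeholders.
records = [
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:role/chat-backend"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "ListFoundationModels",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/ci-deploy"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/ci-deploy"},
     "errorCode": "AccessDenied"},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/ci-deploy"}},
]
known = {"arn:aws:iam::111122223333:role/chat-backend"}

for principal, stats in summarize_bedrock_probing(records, known).items():
    print(principal, stats["calls"], stats["errors"], sorted(stats["events"]))
```

A deployment account that suddenly lists foundation models and receives access errors on model invocations is the kind of pattern worth a closer look, even though each call is legitimate on its own.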

Other tactics and techniques

In addition to the three tactics that we covered, others can be mapped to AI infrastructure, including:

- Reconnaissance: Techniques such as active scanning enable an attacker to gather information about available infrastructure resources and networks.

- Resource development: With techniques such as Establish Accounts and Acquire Infrastructure, attackers create new cloud user or service accounts and obtain resources to accomplish their goals.

Detect and minimize the risks that lead to AI abuse

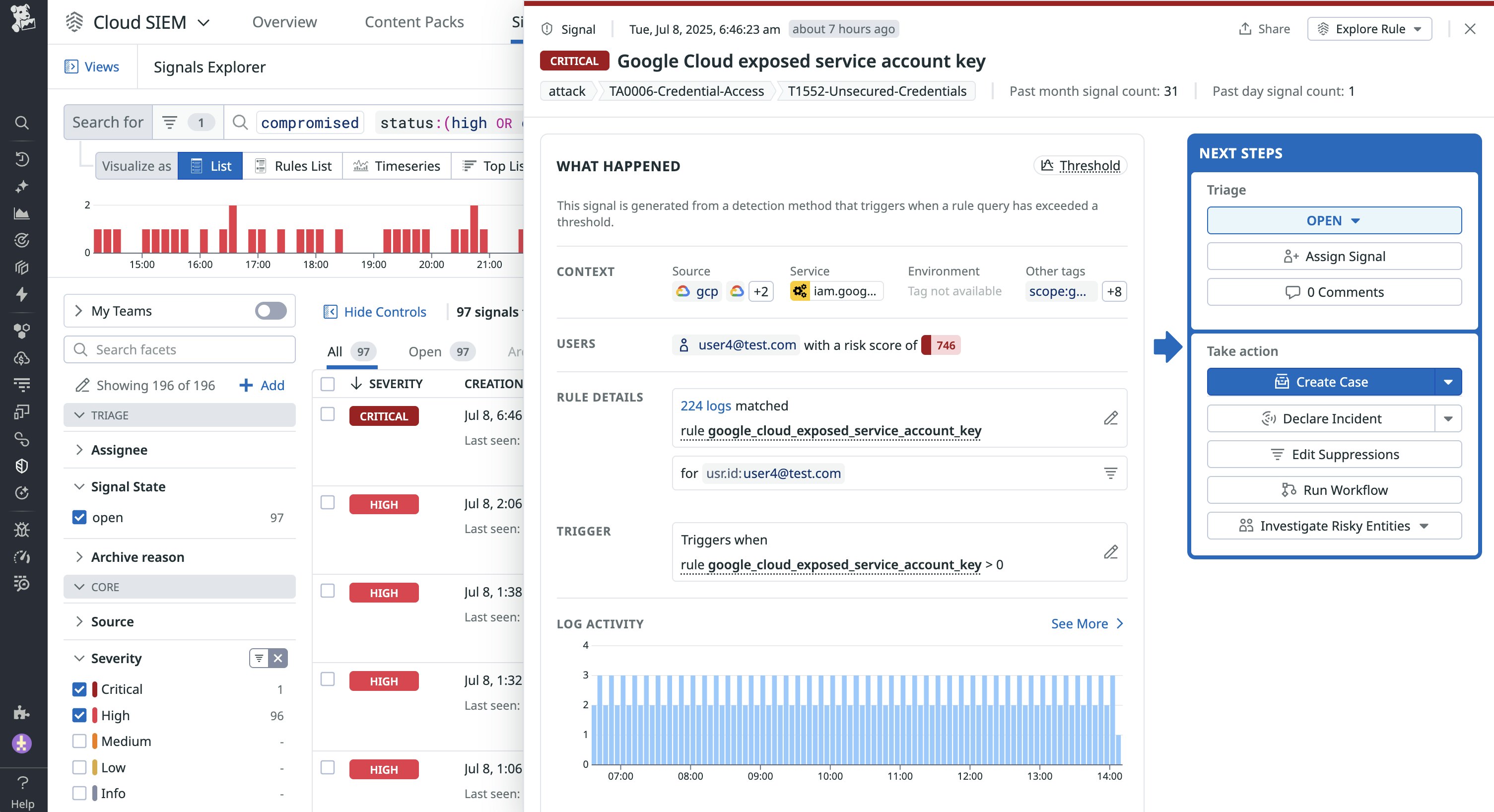

While these tactics are only a few of the ways attackers can target AI infrastructure, they highlight issues that are commonly seen in both cloud and AI security: misconfigured infrastructure and mismanaged credentials. Detecting these risks starts with the same strategies that are used for securing cloud environments. These include scanning environments for old or unused credentials and monitoring logs for issues, such as compromised account keys for cloud services, as seen in the following Datadog Cloud SIEM signal:

In cases like these, minimizing the use of long-lived credentials can help reduce the risk of compromised credentials.
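A simple audit for old or unused credentials can be sketched as follows. The record fields (`key_id`, `created_at`, `last_used_at`) and the 90-day and 30-day thresholds are assumptions chosen for the example. In practice, the metadata would come from your cloud provider’s credential reports, and the thresholds from your own rotation policy.

```python
from datetime import datetime, timedelta, timezone


def find_stale_keys(keys, now, max_age_days=90, max_idle_days=30):
    """Return (key_id, reasons) for keys that are too old, idle, or never used."""
    stale = []
    for key in keys:
        reasons = []
        if now - key["created_at"] > timedelta(days=max_age_days):
            reasons.append("older than rotation window")
        last_used = key.get("last_used_at")
        if last_used is None:
            reasons.append("never used")
        elif now - last_used > timedelta(days=max_idle_days):
            reasons.append("unused recently")
        if reasons:
            stale.append((key["key_id"], reasons))
    return stale


def utc(year, month, day):
    """Small helper for building timezone-aware example timestamps."""
    return datetime(year, month, day, tzinfo=timezone.utc)


# Hypothetical key inventory.
keys = [
    {"key_id": "key-a", "created_at": utc(2024, 1, 1), "last_used_at": utc(2024, 12, 30)},
    {"key_id": "key-b", "created_at": utc(2024, 12, 15), "last_used_at": None},
    {"key_id": "key-c", "created_at": utc(2024, 12, 1), "last_used_at": utc(2024, 12, 28)},
]

for key_id, reasons in find_stale_keys(keys, now=utc(2025, 1, 1)):
    print(key_id, "->", ", ".join(reasons))
```

Passing `now` in explicitly keeps the check deterministic and easy to test. A scheduled job would pass `datetime.now(timezone.utc)` instead.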

Unusual access patterns can also surface the early signs of an attacker attempting to abuse AI infrastructure via the initial access or credential access tactics. They can also reveal malicious activity post-compromise, such as the API calls described in the LLM jacking scenario above.

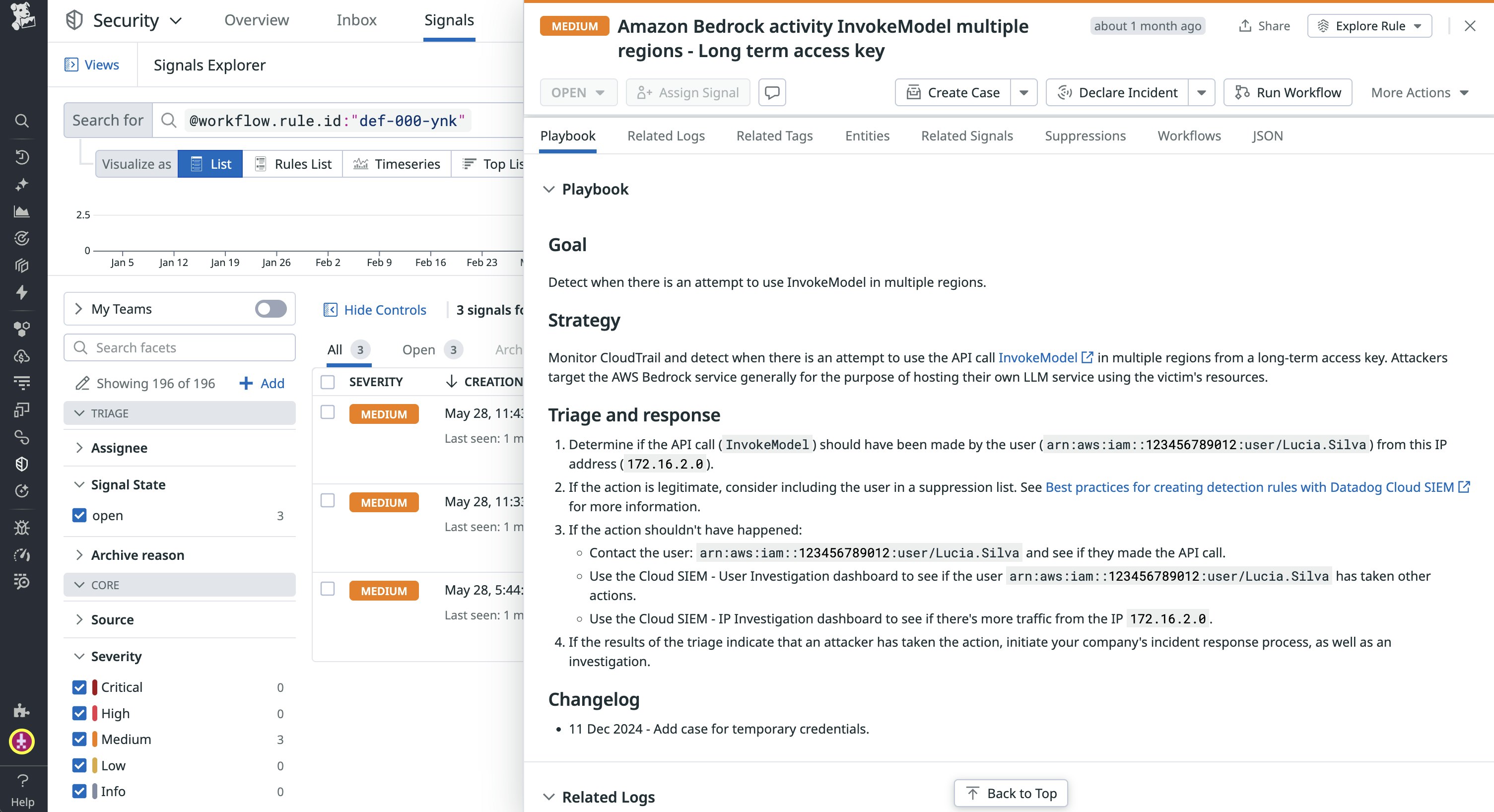

For example, if an attacker executes legitimate InvokeModel calls to Amazon Bedrock with unusual call parameters, Datadog Cloud SIEM will flag these calls and provide details about their source and parameters, as seen in the following screenshot:

Datadog Cloud SIEM will also automatically flag other calls to Amazon Bedrock that could indicate that an attacker is using the discovery tactic to find available LLMs.

Minimize threats to AI infrastructure

In this post, we looked at common threats targeting AI infrastructure and how to detect them in cloud environments. Datadog Cloud SIEM and Cloud Security can help you identify compromised credentials and infrastructure as well as abnormal activity. Check out our documentation to learn more about Datadog’s security offerings. Or, read on to learn how attackers abuse AI artifacts in supply chains. If you don’t already have a Datadog account, you can sign up for a free 14-day trial.