Mallory Mooney

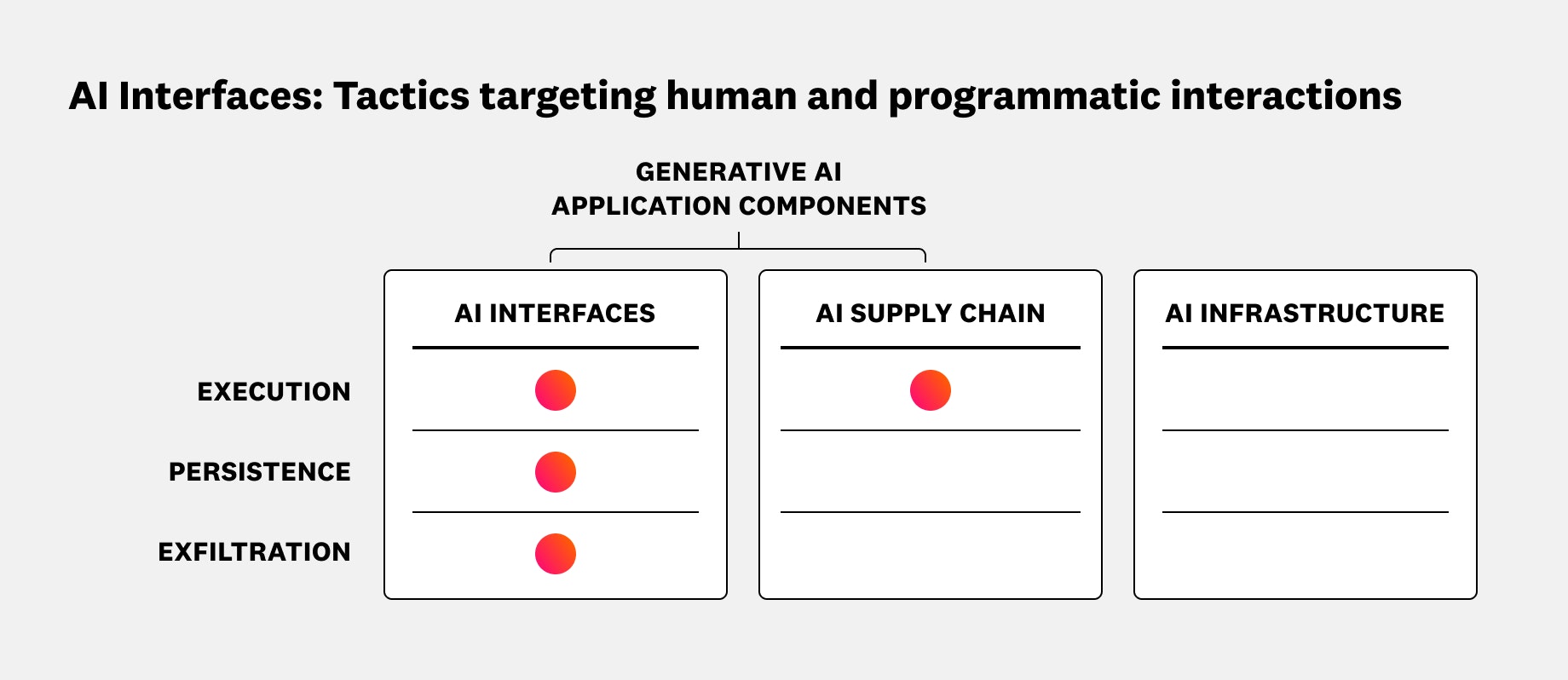

In Parts 1 and 2 of this series, we looked at how attackers get access to and take advantage of the infrastructure and supply chains that shape generative AI applications. In this post, we’ll discuss AI interfaces, which we define as the entry points and logic that determine how a user interacts with an AI application. These elements can include chat interfaces, such as AI assistants, and API endpoints for supporting services. We’ll map the threats that target AI interfaces to MITRE’s Adversarial Threat Landscape for Artificial Intelligence Systems (ATLAS) tactics and techniques, which summarize why and how attackers target various components of generative AI applications. We’ll also look at how to detect and minimize these threats.

Common attacks that target AI interfaces

Continuing with our analogy from Parts 1 and 2, if AI infrastructure serves as the highway and supply chains are the delivery systems, AI interfaces are the entrance ramps that enable users to access an application’s functionality. This layer relies on user input, which is impossible to fully predict. This means that attackers can take advantage of the flexibility and unpredictability of natural language in order to craft malicious prompts that blend in with real user interactions.

This variability makes AI interfaces a key target for many post-compromise tactics, such as hijacking LLM behavior, maintaining control over an interface, and leaking data. The following diagram shows how tactics like these concentrate at the AI interface, but they can also expand an attacker’s reach by exploiting interface dependencies, such as supply chain artifacts. By mapping where these attacks occur, you can identify gaps in security monitoring and response.

Embedding and executing harmful code

For user-facing interfaces, such as AI assistants, attackers may use execution to run harmful code embedded in AI artifacts, such as those used in supply chains. This tactic is typically used as part of a broader attack, supporting other tactics such as initial access and data exfiltration.

Commonly used techniques during this stage include both direct and indirect prompt injections, which attempt to hijack LLM behavior. In one instance, an attacker successfully leaked private SQL database tables via a prompt injection attack.

Researchers used a similar approach to the one in our previously mentioned M365 Copilot scenario, which combined several tactics, including initial access and execution. In this instance, they used an indirect prompt injection to change LLM behavior every time an employee used a poisoned RAG entry. These examples demonstrate how the execution tactic uses AI interfaces to gain access to other parts of the application’s infrastructure and supply chains. Detecting prompt injections can serve as a first line of defense and stop these attacks before they escalate.

Maintaining a foothold

Once attackers have access to an AI application or enough information about how to manipulate it, they can attempt to maintain a foothold via persistence. This enables attackers to continue their attacks against a particular application, even if an organization takes steps to limit their access. For AI interfaces, that can look like manipulating a model or its context. In one case, attackers manipulated a Twitter chatbot’s responses by using specific, coordinated prompts. Because the chatbot learned from user input, the prompts gradually degraded its responses.

This example highlights the complexity of managing user input, where organizations need the ability to reliably identify and prioritize harmful input. One toxic prompt on its own might not signal an attack, for example, but a pattern of that type of input is worth monitoring.

Exfiltrating data

One of the primary goals of an attacker is to find and steal sensitive data. This tactic often starts at the interfaces that enable attackers to interact with a model. LLMs in particular are prone to data leakage, especially if the application using them is deployed without appropriate guardrails for user input. One way that attackers target LLMs and their interfaces is through data leakage, which involves manipulating a model to reveal sensitive data that it has memorized or has access to via API services or other tools.

In one exercise, researchers were able to use a malicious, self-replicating prompt with a RAG-based assistant. The prompt injection, which was part of the execution tactic, included instructions for the assistant to provide sensitive user data in its response.

Detect and minimize the inherent risks in AI interfaces

We’ve covered only a few of the ways attackers exploit interfaces, but they highlight the fact that prompts are one of the primary security concerns for generative AI applications. Because prompts are a new and evolving attack surface, organizations need to create new detection patterns that focus on unpredictable user-to-model interactions and model output, such as sensitive data exposure. Tracking these patterns is especially critical as organizations scale their AI applications to handle more user requests.

Monitoring for toxic prompts, for example, can give organizations visibility into instances where attackers are attempting to maintain their influence over LLM behavior. In the following example evaluation, Datadog LLM Observability automatically flags toxic content submitted to a chatbot:

To help prevent an end user from succeeding in manipulating a model, organizations can sanitize prompt inputs before the model receives them. This can surface suspicious patterns more efficiently than allowing all input. In addition, rate limiting inputs throttles the volume of prompts that attackers may attempt to generate.

Monitoring for more direct attacks against AI interfaces, such as prompt injections, is also an important part of keeping generative AI applications and their data secure. Injection attacks can show up as unusual prompt patterns, such as prompts with long instructions, nested logic, and out-of-scope queries. When successful, these attacks can leak sensitive data, including credentials and personally identifiable information (PII), as output for the attacker.

In the following evaluation, Datadog LLM Observability automatically flags an attempt to exfiltrate data from an SQL database via a prompt injection:

In cases like these, controlling model permissions and which APIs, tools, and data they can call or access will help minimize the risk of successful prompt injections, such as those mentioned as part of the exfiltration tactic. Implementing output filtering can also remove sensitive data and clean degraded responses before a model generates output.

For an in-depth look at how to detect and respond to these tactics, check out our guide to monitoring for prompt injection attacks.

Protect your generative AI applications from abuse

In this post, we looked at common threats that target AI interfaces, such as chatbots and assistants, and how to detect and prevent them. Check out our documentation to learn more about Datadog’s security offerings, such as Datadog Code Security.

Datadog is also introducing real-time AI security guardrails through AI Guard, helping secure your AI apps and agents in real time against prompt injection, jailbreaking, tool misuse, and sensitive data exfiltration attacks. We’re building a suite of seven protection capabilities, including:

- Prompt protection

- Tool protection

- Sensitive data protection

- MCP protection

- Anomaly protection

- Alignment protection

Join the AI Guard Product Preview to learn more. If you don’t already have a Datadog account, you can sign up for a free 14-day trial.