Mallory Mooney

The AI ecosystem is rapidly changing, and with this growth comes unique challenges in securing the infrastructure and services that support it. In Part 1 of this series, we explored how attackers target the underlying resources that host and run AI applications, such as cloud infrastructure and storage. In this post, we’ll look at threats that affect AI-specific resources in supply chains, which are the software and data artifacts that determine how an AI service operates. Supply chain artifacts can include training datasets, pre-trained models, and third-party AI libraries. We’ll map the threats that target supply chain components to MITRE’s Adversarial Threat Landscape for Artificial Intelligence Systems (ATLAS) tactics and techniques, which summarize why and how attackers target AI components. We’ll also look at how to detect and minimize these threats.

Common attacks that target AI artifacts in supply chains

Revisiting our analogy from Part 1, if the infrastructure for generative AI is the highway, then supply chains provide the transportation and routes. They use the infrastructure (cloud storage, compute instances, and servers) to deliver functionality to users. In many cases, organizations integrate both in-house and third-party supply chain artifacts into their AI applications. This approach gives them greater flexibility in how and what they develop, but it also means they have less control over application security. Consequently, organizations may unknowingly introduce malicious third-party artifacts, such as poisoned training data or models, into their applications’ supply chains. These risks enable attackers to execute specific tactics, such as developing and staging attacks against supply chains, gaining initial access to AI artifacts, and avoiding detection. As seen in the following diagram, visualizing where these tactics occur can help you prioritize monitoring and implement security controls that cover the areas that attackers target the most.

Developing and staging an attack

The early stages of many attacks involve attackers building and testing tools that they will later use to compromise an application. Because this activity often occurs outside the organization’s visibility, it leaves no immediate signs of compromise. The value in understanding these early stages is that they offer insight into how attackers might repurpose available assets in ways you may not expect.

For example, resource development involves an attacker gathering the resources they need to execute later attack phases. During this stage, attackers may attempt to acquire public AI artifacts from organizations so that they can publish their own malicious artifacts, such as poisoned datasets and models, on popular repositories and package registries.

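In one case, security researchers were able to publish packages to the Python Package Index (PyPI) under hallucinated names, meaning names of nonexistent packages that AI coding tools had suggested in generated code. AI-generated code that references such packages is a growing risk to the supply chain, because an attacker who registers a hallucinated name first can lead teams to pull actively malicious dependencies into their applications.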
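One way to reduce this risk is to compare the dependencies in a requirements file against a list of packages your team has already vetted before anything is installed. The following is a minimal sketch under stated assumptions, not a complete control: `APPROVED_PACKAGES`, `requirement_name`, and `unvetted_packages` are hypothetical names introduced for illustration, and the parser only handles simple `name==version` style lines.

```python
import re
from typing import List, Optional, Set

# Hypothetical allowlist of vetted package names. In practice this might
# come from an internal registry or a reviewed lock file.
APPROVED_PACKAGES = {"numpy", "requests", "torch"}


def normalize(name: str) -> str:
    """Normalize a package name (lowercase; runs of '-', '_', '.' become '-')."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the package name from a simple requirements.txt line."""
    line = line.split("#", 1)[0].strip()  # drop trailing comments
    if not line or line.startswith("-"):  # skip blanks and pip options
        return None
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unvetted_packages(requirements_text: str, approved: Set[str]) -> List[str]:
    """Return sorted package names that are not on the approved list."""
    approved_normalized = {normalize(name) for name in approved}
    names = (requirement_name(line) for line in requirements_text.splitlines())
    return sorted({n for n in names if n and n not in approved_normalized})
```

Calling `unvetted_packages(open("requirements.txt").read(), APPROVED_PACKAGES)` returns the names that need a manual review. A flagged name is not necessarily malicious; it only means that nobody has confirmed the package is the one the team intended to use.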

In addition to developing resources in the early stages, attackers may prep and stage their attacks on target AI models to test their readiness. Examples include creating and training proxy models and crafting data to feed to them. This enables them to ensure that their attacks will work against legitimate models.

Because these tactics often occur offline before a direct attack is executed, it’s difficult to anticipate how an attacker will target a part of the supply chain. This gap in visibility into early-stage attacks highlights the need for a defense-in-depth approach to risk detection and remediation. This is especially important as organizations increasingly rely on AI coding tools but may not thoroughly vet the dependencies those tools suggest, which can later become attack vectors.

Gaining initial access

As with AI infrastructure, attackers will use initial access techniques to exploit the supply chain. This tactic builds on the resource development and staging phases, using the artifacts attackers have collected or prepared to gain entry.

One example of this attack path involved a researcher creating a believable but unverified organization account on a popular public model repository. Employees unknowingly joined this fake organization and uploaded private AI models, which the researcher then had full read and write access to. The researcher compromised an available model by embedding a command and control (C2) server and republishing it on the account. When an employee used the compromised model, the embedded malware enabled the researcher to control the victim’s machine via the established C2 channel.

In this example, the researcher used supply chain artifacts hosted on a public repository, where they blended in with legitimate packages and accounts, and an attacker could follow the same path. This highlights the need to maintain visibility into supply chain artifacts, especially as the AI ecosystem evolves.

Avoiding detection

Once an attacker has access to an environment, they will attempt to avoid detection by security monitoring. In supply chains, this can look like attackers publishing corrupted models that successfully evade available scanning tools, such as Hugging Face’s security scanner. In one incident, researchers discovered malicious AI models on the Hugging Face repository that had evaded detection by exploiting a known risk with Python’s pickle files.
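The pickle risk comes from the format itself: unpickling can import and call arbitrary Python objects. As an illustration, the sketch below uses the standard library's `pickletools.genops` to list the opcodes in a pickle stream without loading it, and reports the ones that can trigger imports or calls. The `SUSPICIOUS_OPCODES` set and the `suspicious_opcodes` function are hypothetical names chosen for this example, and the check is a triage signal under those assumptions rather than a replacement for a dedicated scanner.

```python
import pickle
import pickletools
from typing import List

# Opcodes that can import or call objects while a pickle is being loaded.
# This selection is an assumption made for illustration.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(data: bytes) -> List[str]:
    """Return sorted names of risky opcodes found, without unpickling."""
    found = set()
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.add(opcode.name)
    return sorted(found)


class CallsOnLoad:
    """Harmless stand-in for a payload: unpickling would call len("demo")."""

    def __reduce__(self):
        return (len, ("demo",))


plain = pickle.dumps({"weights": [0.1, 0.2]})
crafted = pickle.dumps(CallsOnLoad())

print(suspicious_opcodes(plain))
print(suspicious_opcodes(crafted))
```

Many legitimate model files also contain these opcodes, so a match should prompt a closer look at which objects are imported rather than an automatic rejection. As the incident above shows, attackers can also craft files that evade scanners, so this kind of check works best as one layer among several.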

Detect and minimize the inherent risks in supply chains

We’ve only covered a few of the ways attackers exploit supply chain vulnerabilities. These examples are part of a much larger and evolving set of tactics that target supply chain artifacts. Attackers are increasingly taking advantage of the complexity of the supply chain ecosystem by using both direct and indirect methods to manipulate models, their architecture, and their data.

Because these attacks span the entire supply chain, organizations need to be proactive in how they monitor the artifacts they use. This is especially true considering the risk of unknowingly pulling in potentially vulnerable third-party AI packages, such as the popular Python AI package Langflow.

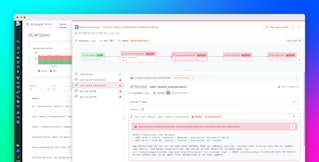

Understanding where vulnerabilities exist is a critical starting point for AI security monitoring. Datadog Security Research recently published an emerging vulnerability notice that highlights risks associated with the Langflow package. The notice also included information about where Datadog Code Security detected this risk within a particular environment:

Vulnerability scanning using tools like Code Security and GuardDog provides readily available visibility into security risks with third-party libraries, which attackers often take advantage of as part of their initial access and defense evasion tactics.

In addition to knowing where vulnerabilities exist in library code, proactive security monitoring requires an understanding of how attackers find and use AI libraries and data. This enables organizations to set guardrails around how both internal and external users access, share, maintain, and build supply chain artifacts. MITRE offers the following recommendations:

- Create authorization controls for using internal model registries, production models, and training data: This step limits an attacker’s capabilities if they gain access to an organization’s environment, such as through its AI infrastructure.

- Restrict which information about production model architecture and data is publicly available: Because attackers use this data to develop their resources and stage AI attacks, limiting what’s available makes it harder for them to take advantage of it.

- Regularly sanitize training data: Attackers attempt to manipulate models by publishing poisoned training data on public registries. Sanitizing any publicly available data or datasets before use reduces the likelihood of attackers poisoning internal models.

- Routinely check AI model behavior and data: As with sanitizing training data, validating that AI models are operating as expected can help surface signs of compromise, such as poisoning.

- Sign code and artifacts: This step ensures that supply chain artifacts are verified with digital signatures, which helps prevent organizations from integrating unauthorized models and other software into their applications.
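As a small illustration of the artifact verification idea in the last recommendation, the sketch below checks a downloaded file against a SHA-256 digest that was recorded when the artifact was reviewed. This is digest pinning, a simpler integrity check than code signing: it detects tampering after review but does not prove who published the artifact. The function names `sha256_of` and `artifact_matches` are hypothetical and chosen for this example.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def artifact_matches(path: Path, expected_sha256: str) -> bool:
    """Return True if the file's digest equals the pinned digest."""
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```

A pipeline could call `artifact_matches(Path("model.bin"), pinned_digest)` before loading a model or dataset and refuse to continue on a mismatch, where `model.bin` and `pinned_digest` stand in for your own artifact path and recorded digest.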

Minimize threats to AI artifacts in supply chains

In this post, we looked at common threats that target the supply chain and how to detect and mitigate them. Check out our documentation to learn more about Datadog’s security offerings, such as Datadog Code Security. Or, read on to explore the ways attackers abuse AI user interfaces in Part 3 of this series. If you don’t already have a Datadog account, you can sign up for a free 14-day trial.