Ben Cohen

Emaad Khwaja

Afshin Rostamizadeh

Ameet Talwalkar

Othmane Abou-Amal

We are excited to announce a new open-weights release of Toto, our state-of-the-art time series foundation model (TSFM), and BOOM, a new public observability benchmark that contains 350 million observations across 2,807 real-world time series. Both are open source under the Apache 2.0 license and available to use immediately.

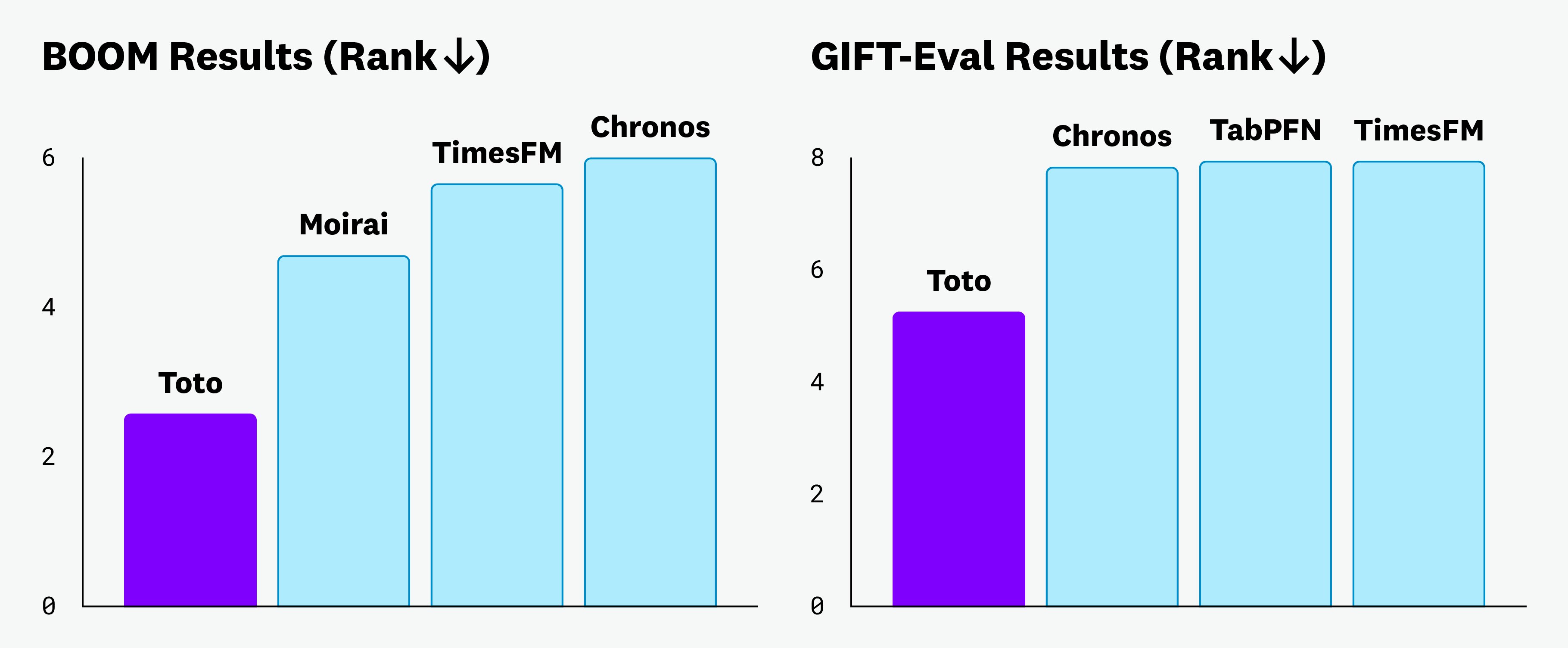

The open-weights Toto model, trained with observability data sourced exclusively from Datadog’s own internal telemetry metrics, achieves state-of-the-art performance, outperforming all other existing TSFMs by a wide margin. It does so not only on BOOM but also on the widely used general-purpose time series benchmarks GIFT-Eval and LSF (long sequence forecasting).

BOOM, meanwhile, is a time series (TS) benchmark that focuses specifically on observability metrics, which have challenging characteristics that set them apart from typical time series. As with Toto, BOOM’s data is sourced exclusively from our internal metrics, but from a separate environment to eliminate any cross-contamination with Toto’s training data. Further details can be found in our latest technical paper.

The challenges of observability

Observability metrics represent an important, unique, and challenging subset of TS data, raising the need for observability-focused models and benchmarks. Accurately modeling observability metrics is essential for critical operations like anomaly detection (e.g., identifying spikes in error rates), predictive forecasting (e.g., anticipating resource exhaustion or scaling needs), and dynamic autoscaling. However, observability time series exhibit challenging distributional properties such as sparsity, spikes, and noise; they are often contaminated by anomalies; and they form high-cardinality multivariate series with complex relationships among variates.

Real-world observability systems also routinely generate millions or even billions of distinct time series, making it infeasible to fine-tune or train a complex model for each series individually. Finally, many of these series are short-lived and correspond to ephemeral infrastructure, creating a “cold-start” problem. Together, these operational challenges call for a zero-shot TSFM that performs well on observability data specifically.

Open-weights Toto

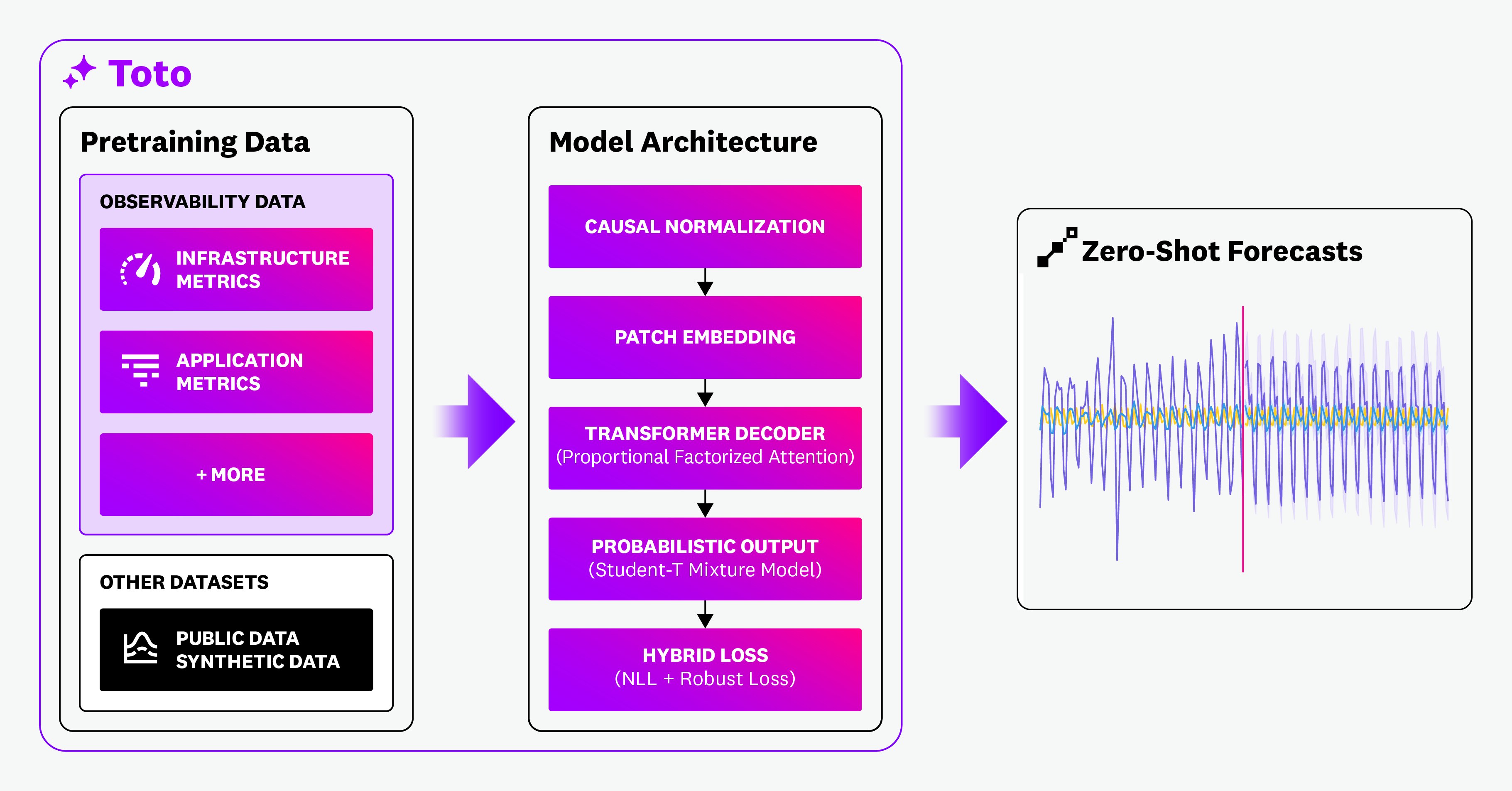

The new open-weights Toto model (151 million parameters; model card) builds on the architecture of our initial proprietary model, which paired a Student-t mixture model prediction head for modeling heavy-tailed observability time series with a proportional factorized attention mechanism that efficiently captures interactions among variates.
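To make these two architectural ideas concrete, here is a minimal sketch in Python with NumPy. It assumes a prediction head that outputs mixture weights, locations, scales, and degrees of freedom for each forecast step, and it simplifies attention to a single head with no learned query/key/value projections. The function names and the ratio of two time-wise layers per variate-wise layer are hypothetical illustrations, not Toto’s actual implementation.

```python
import math

import numpy as np


# --- Student-t mixture head: negative log-likelihood of one observation ---
def student_t_logpdf(x, loc, scale, df):
    """Log-density of a location-scale Student-t distribution."""
    z = (x - loc) / scale
    return (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
            - 0.5 * math.log(df * math.pi) - math.log(scale)
            - (df + 1) / 2 * math.log1p(z * z / df))


def smm_nll(x, weights, locs, scales, dfs):
    """NLL of x under a Student-t mixture, combined with a stable log-sum-exp."""
    log_terms = [math.log(w) + student_t_logpdf(x, m, s, v)
                 for w, m, s, v in zip(weights, locs, scales, dfs)]
    peak = max(log_terms)
    return -(peak + math.log(sum(math.exp(t - peak) for t in log_terms)))


# --- Factorized attention over a (variates, time, dim) tensor ---
def attend(x, causal):
    """Single-head self-attention along axis -2, with a residual connection."""
    seq, dim = x.shape[-2], x.shape[-1]
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(dim)
    if causal:
        # Block attention to future positions (column j > row i).
        future = np.triu(np.ones((seq, seq), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)
    probs = np.exp(scores)
    probs = probs / probs.sum(axis=-1, keepdims=True)
    return x + probs @ x


def factorized_block(x, time_layers=2):
    """Hypothetical pattern: several causal time-wise layers, then one variate-wise layer."""
    for _ in range(time_layers):
        x = attend(x, causal=True)  # mixes time steps within each variate
    # Swap to (time, variates, dim) so attention mixes variates at each time step.
    return np.swapaxes(attend(np.swapaxes(x, 0, 1), causal=False), 0, 1)
```

Because the Student-t density decays polynomially rather than exponentially, an outlying observation is penalized far less than it would be under a Gaussian. Factorizing attention means each layer attends over either the T time steps or the V variates, rather than over all V·T tokens jointly, and the time-wise layers stay causal.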

Our new model also introduces a novel patch-based causal normalization approach, which helps it generalize better to highly nonstationary data, and a composite robust loss that stabilizes training dynamics. Detailed descriptions of all of these features, as well as ablations, are presented in our paper.
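A minimal sketch of both ideas follows, in plain Python. It assumes each patch is scaled with the mean and standard deviation of all observations from the start of the series through the end of that patch, so no statistic depends on future values. For the loss, it mixes a precomputed negative log-likelihood with a Huber loss on a point forecast; the Huber term is a stand-in robust loss, and the function names and mixing weight `lam` are illustrative assumptions rather than the paper’s exact formulation.

```python
import math


def causal_patch_normalize(series, patch_size, eps=1e-5):
    """Normalize each patch with running statistics from the series start through that patch."""
    out, total, total_sq = [], 0.0, 0.0
    for start in range(0, len(series), patch_size):
        patch = series[start:start + patch_size]
        total += sum(patch)
        total_sq += sum(v * v for v in patch)
        n = start + len(patch)  # observations seen so far, including this patch
        mean = total / n
        std = math.sqrt(max(total_sq / n - mean * mean, 0.0)) + eps
        out.extend((v - mean) / std for v in patch)
    return out


def huber(error, delta=1.0):
    """Quadratic near zero and linear in the tails, so large errors have bounded gradients."""
    a = abs(error)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)


def composite_loss(nll, point_error, lam=0.5, delta=1.0):
    """Hypothetical composite objective: weighted NLL plus a robust point-forecast term."""
    return lam * nll + (1.0 - lam) * huber(point_error, delta)
```

Accumulating the statistics causally keeps the scaling of earlier patches independent of later values, which matters when a series’ level drifts over time.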

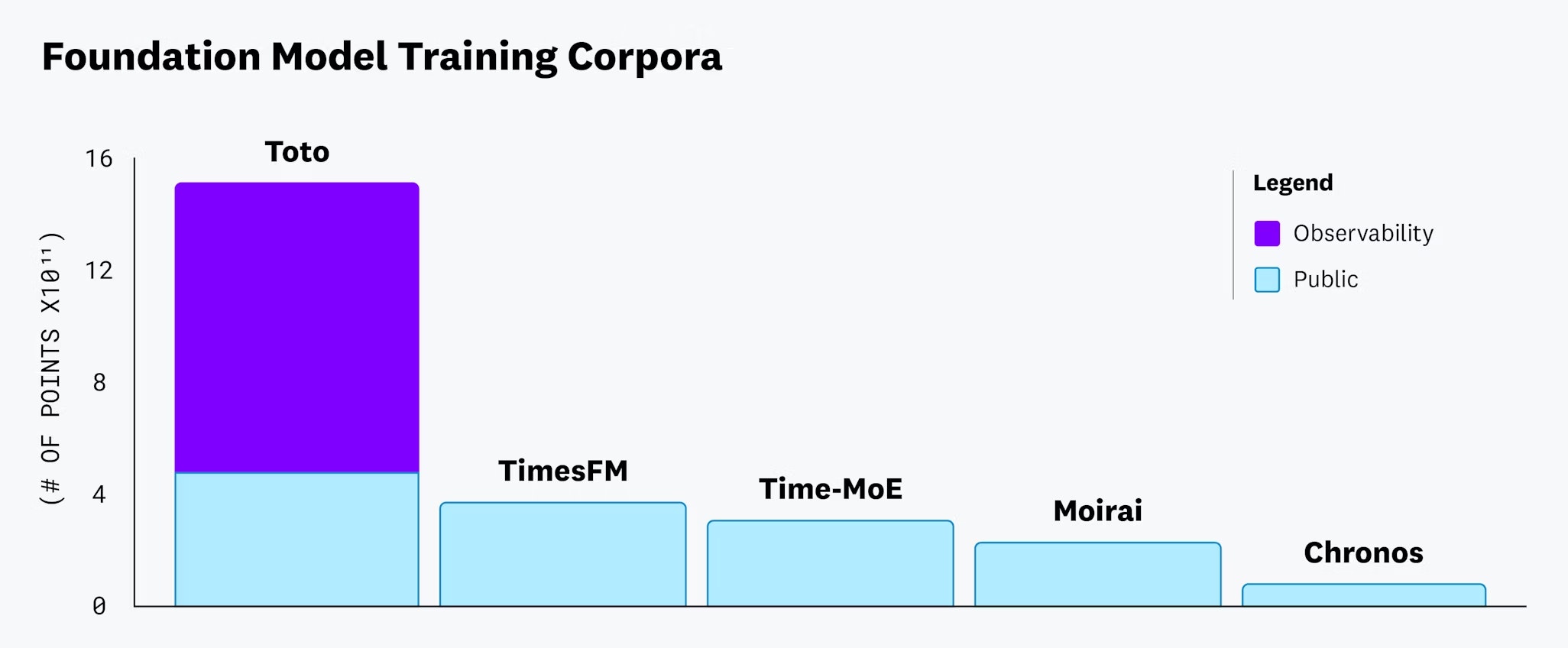

One of the biggest factors in Toto’s success is that it is trained on a large amount of real-world observability data. The bar chart shows the number of unique (non-synthetic) time series observations in the training corpora of several TSFMs, along with the fraction of Toto’s training data that consists of observability metrics specifically.

As shown in the bar plots at the top of this post, Toto achieves state-of-the-art performance, earning the best rank score by a significant margin on both the BOOM and GIFT-Eval benchmarks (as of May 19, 2025).

On the smaller LSF benchmark, we also measure the performance of a fine-tuned Toto model (TotoFT) and, again, find state-of-the-art performance. As is standard for this benchmark, we compute normalized mean squared error (MSE) and mean absolute error (MAE) for each of six different datasets within the LSF benchmark. Toto achieves the best mean performance across datasets, both in terms of MSE and MAE, in particular achieving a 5.8 percent improvement in MAE over the next best method.
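The aggregate numbers are straightforward to compute. The sketch below assumes the common LSF convention of standardizing each dataset with the mean and standard deviation of its training split before scoring; it then averages per-dataset errors with equal weight and reports relative improvement as (baseline - ours) / baseline. All function names are hypothetical.

```python
def standardize(values, train_mean, train_std):
    """Scale values with statistics from the training split (assumed LSF convention)."""
    return [(v - train_mean) / train_std for v in values]


def mse(y_true, y_pred):
    """Mean squared error over one forecast window."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error over one forecast window."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def mean_over_datasets(scores):
    """Unweighted mean of a dict mapping dataset name -> score."""
    return sum(scores.values()) / len(scores)


def relative_improvement(ours, baseline):
    """Fractional error reduction versus a baseline; 0.058 corresponds to 5.8 percent."""
    return (baseline - ours) / baseline
```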

BOOM evaluation framework

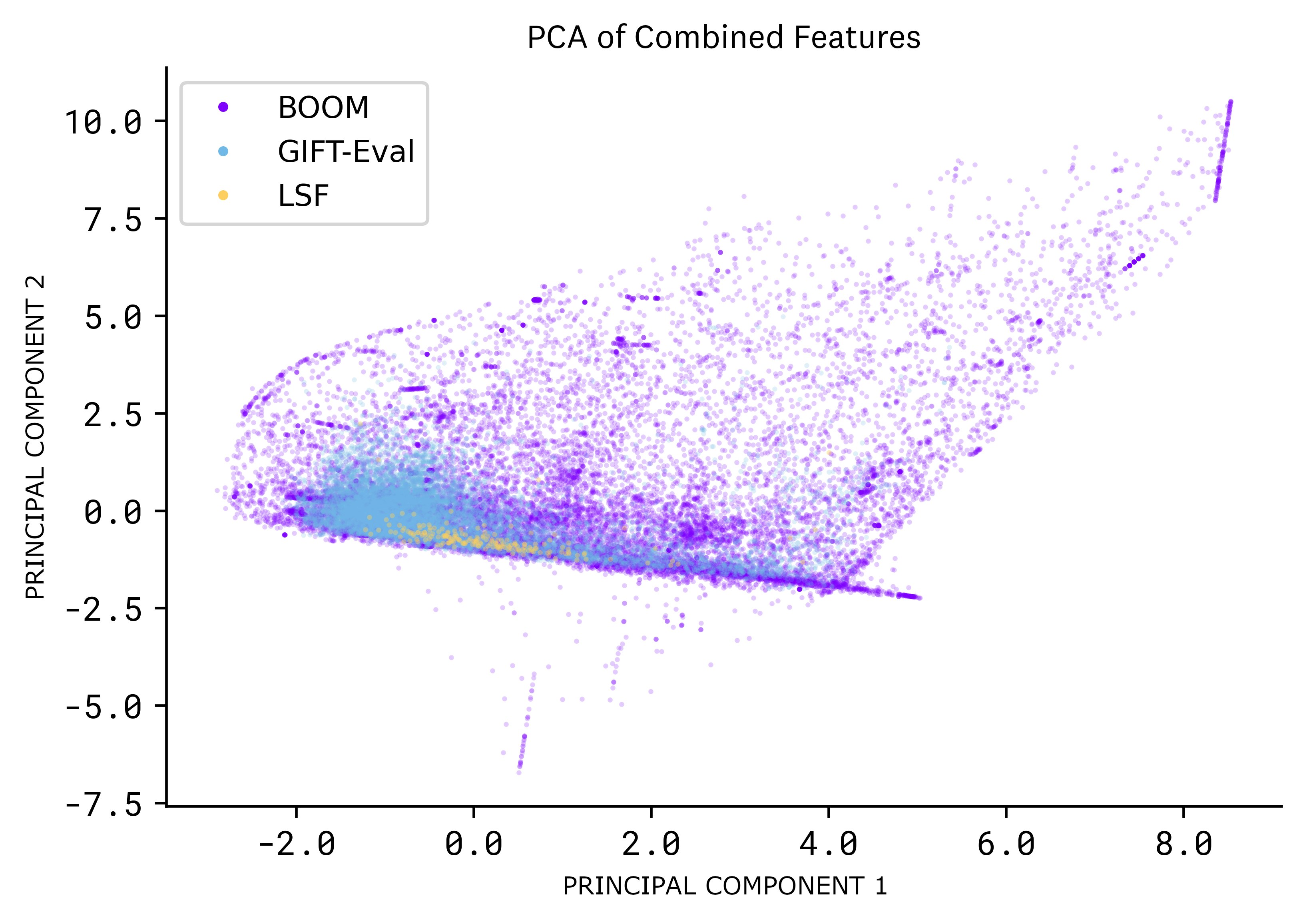

BOOM (Benchmark of Observability Metrics) (data card) contains 350 million observations across 2,807 real-world production series, where each series can contain multiple variates. To highlight BOOM’s diversity, we visualize a two-dimensional projection of each of its series alongside series from GIFT-Eval and LSF. The projection uses the first two principal components of six statistical features computed for each series.
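The projection step can be sketched with NumPy. The six features below (mean, standard deviation, minimum, maximum, lag-1 autocorrelation, and fraction of zeros) are hypothetical placeholders, since the exact feature set is described in the paper rather than here. The sketch standardizes the features and then takes the top two principal components via an SVD.

```python
import numpy as np


def series_features(x):
    """Six illustrative per-series statistics (hypothetical, not BOOM's actual feature set)."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    denom = (centered ** 2).sum()
    lag1 = (centered[:-1] * centered[1:]).sum() / denom if denom > 0 else 0.0
    return np.array([x.mean(), x.std(), x.min(), x.max(), lag1, np.mean(x == 0)])


def pca_2d(features):
    """Project rows of an (n_series, n_features) matrix onto the first two principal components."""
    X = np.asarray(features, dtype=float)
    X = X - X.mean(axis=0)
    std = X.std(axis=0)
    X = X / np.where(std > 0, std, 1.0)  # standardize; leave constant columns at zero
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    return X @ vt[:2].T
```

Standardizing first keeps features with large magnitudes, such as the raw mean of a high-volume metric, from dominating the components.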

The evaluation procedure for the benchmark closely follows the methodology established by the GIFT-Eval benchmark. In particular, we calculate MASE (mean absolute scaled error) and CRPS (continuous ranked probability score) for all zero-shot models at three forecast horizons: short, medium, and long (detailed numbers can be found in our paper). We then normalize these metrics by the performance of a seasonal naive forecast and rank the models by their normalized CRPS.
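A minimal sketch of these metrics for a single series and horizon follows. It assumes MASE is scaled by the in-sample MAE of a seasonal naive forecast, and it estimates CRPS from forecast samples with the identity CRPS = E|X - y| - 0.5 E|X - X'|. GIFT-Eval-style evaluation typically approximates CRPS with quantile losses instead, so treat this sample estimator as a swapped-in technique. Ranking here covers a single configuration, and all function names are hypothetical.

```python
def mase(y_true, y_pred, history, season):
    """Forecast MAE scaled by the in-sample MAE of a seasonal naive forecast."""
    naive_errors = [abs(history[t] - history[t - season]) for t in range(season, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    mae = sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)
    return mae / scale


def crps_from_samples(samples, y):
    """Sample estimate of CRPS for one scalar target: E|X - y| - 0.5 * E|X - X'|."""
    n = len(samples)
    spread_to_target = sum(abs(s - y) for s in samples) / n
    spread_between = sum(abs(a - b) for a in samples for b in samples) / (n * n)
    return spread_to_target - 0.5 * spread_between


def rank_by_normalized_crps(crps_by_model, seasonal_naive_crps):
    """Divide each model's CRPS by the seasonal naive CRPS, then rank (1 = best)."""
    normalized = {m: c / seasonal_naive_crps for m, c in crps_by_model.items()}
    ordered = sorted(normalized, key=normalized.get)
    return {m: i + 1 for i, m in enumerate(ordered)}, normalized
```

A normalized score below 1 means a model beats the seasonal naive baseline; with a single sample, the CRPS estimate reduces to the absolute error.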

Get started with Toto and BOOM

Toto

You can use our new Toto model now. The code needed to load the model and run inference is available in our GitHub repository, along with an example Python notebook to get you up and running quickly.

Downloading the model and generating your first forecasts takes only a few lines of code. Because Toto is a zero-shot model, it’s lightning fast: there’s no need to fit a model before getting predictions.

BOOM

The BOOM leaderboard and evaluation code are actively maintained resources that the TSFM community can use to keep pushing the state of the art. We encourage submissions of other models.

If this type of work intrigues you, consider applying to work at Datadog! We are looking to grow our AI research team in NYC and Paris!

Feature image AI-generated by ChatGPT.