Aaron Kaplan

Shri Subramanian

OpenAI is an AI research and development company whose products include the GPT family of large language models. Since the introduction of GPT-3 in 2020, these models’ fluent and adaptable processing of both natural language and code has propelled their rapid adoption across diverse fields. GPT-4, ChatGPT, and InstructGPT are now used extensively in software development, content creation, and more, and OpenAI’s API allows developers to access the company’s newest general-purpose AI models on demand.

We’re pleased to announce Datadog’s OpenAI integration, which you can use to monitor, assess, and optimize your organization’s usage of OpenAI’s API. In this post, we’ll guide you through using the integration to:

- Track OpenAI usage patterns

- Monitor and allocate costs based on token usage

- Analyze API response times to troubleshoot and optimize performance

Track OpenAI usage patterns

Usage of OpenAI’s products is expanding fast. As diverse teams and users experiment with and build upon the company’s models, it’s important for organizations to monitor and understand this usage.

OpenAI’s API accepts requests to a range of models and endpoints. Usage is billed by consumption of tokens, which are common sequences of characters, and per-token costs vary depending on the model queried. The tokens in a request include both its prompt (the textual input to the model) and its completion (the corresponding output). Models are distinguished by their specialization (such as generating or classifying text, writing code, or producing embeddings), by whether they can be fine-tuned, and by the sophistication and speed of their processing.

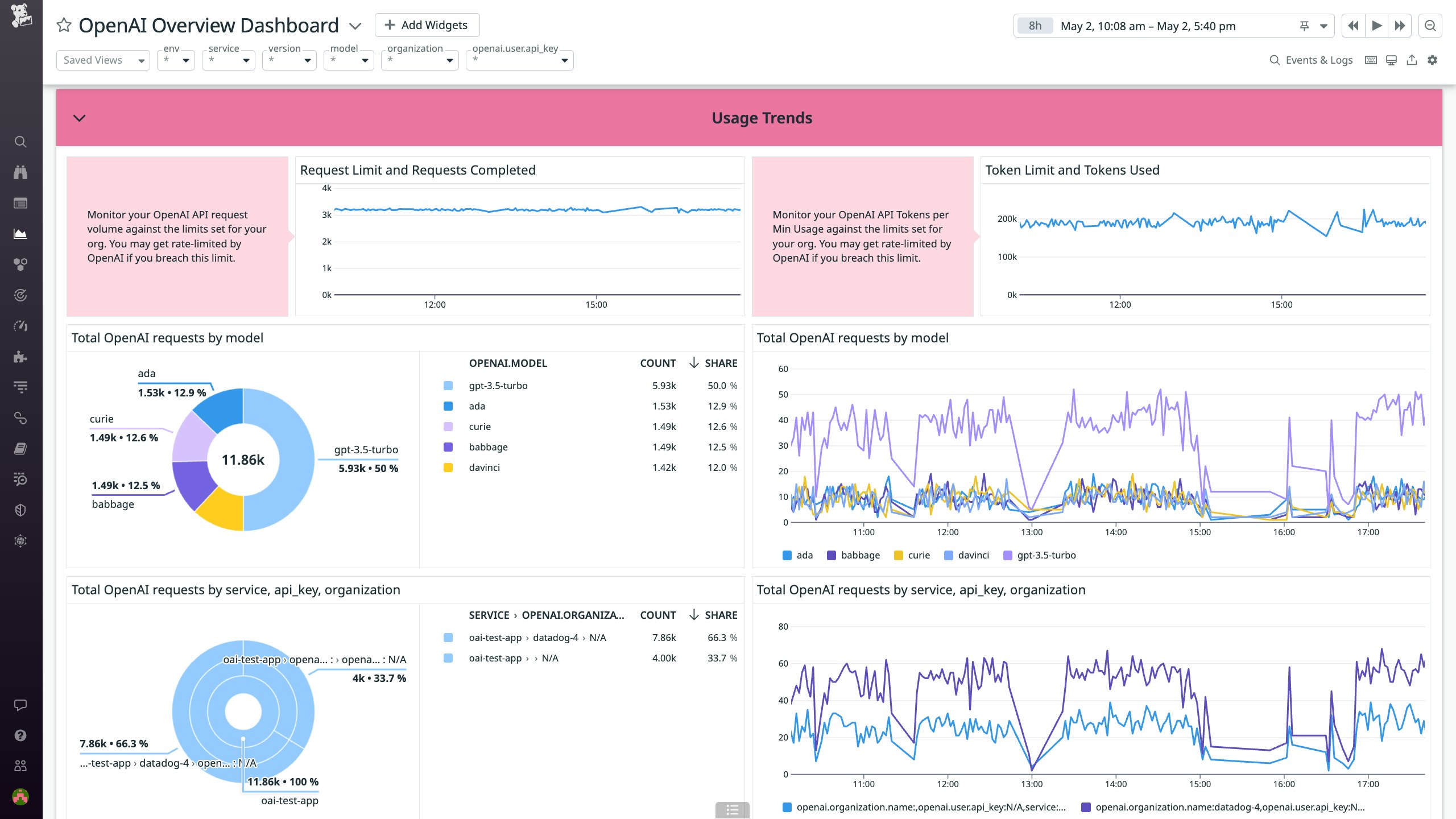

Datadog’s OpenAI integration comes with an out-of-the-box dashboard that helps you understand usage trends throughout your organization by breaking down API requests by OpenAI model, service, organization ID, and API key. Unique organization IDs can be assigned to individual teams, enabling you to track where and to what extent OpenAI’s models are used in your organization. Tracking by API key lets you break down usage by specific users, so you can attribute spikes in usage and costs to their source. It also enables you to trace unauthorized API access back to specific keys and users.
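To make the idea of breaking requests down by tag concrete, here is a minimal Python sketch that counts requests per value of a single tag. The record fields (`model`, `service`, `org_id`, `api_key`) and their values are hypothetical stand-ins chosen for illustration; they are not the integration’s actual schema, and the dashboard performs this aggregation for you.

```python
from collections import Counter
from typing import Iterable, Mapping

# Hypothetical request records; field names and values are illustrative only,
# not the schema the Datadog integration actually uses.
REQUESTS = [
    {"model": "gpt-4", "service": "support-bot", "org_id": "org-a", "api_key": "key-1"},
    {"model": "gpt-3.5-turbo", "service": "support-bot", "org_id": "org-a", "api_key": "key-2"},
    {"model": "gpt-4", "service": "code-review", "org_id": "org-b", "api_key": "key-3"},
    {"model": "gpt-4", "service": "support-bot", "org_id": "org-a", "api_key": "key-1"},
]


def requests_by(records: Iterable[Mapping[str, str]], tag: str) -> Counter:
    """Count requests per distinct value of one tag (e.g. model or org_id)."""
    return Counter(record[tag] for record in records)


if __name__ == "__main__":
    # Print one breakdown per dimension the dashboard groups by.
    for tag in ("model", "service", "org_id", "api_key"):
        print(tag, dict(requests_by(REQUESTS, tag)))
```

Grouping by `api_key` in the same way is what lets a sudden jump in request volume be traced to a single key.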

In addition to tracking usage patterns, it’s important to track the overall rate and volume of your OpenAI usage so that you don’t breach the API’s rate limits, which apply to both requests per minute and tokens per minute. The `openai.ratelimit.requests` and `openai.ratelimit.remaining.requests` metrics measure the rate of your requests, while `openai.ratelimit.tokens` and `openai.ratelimit.remaining.tokens` measure the rate of your token usage. Besides risking rate-limit breaches, high rates of token usage can increase latency. Our recommended monitors for this integration help you track these metrics proactively so that you can avoid rate-limit errors and excessive latencies.

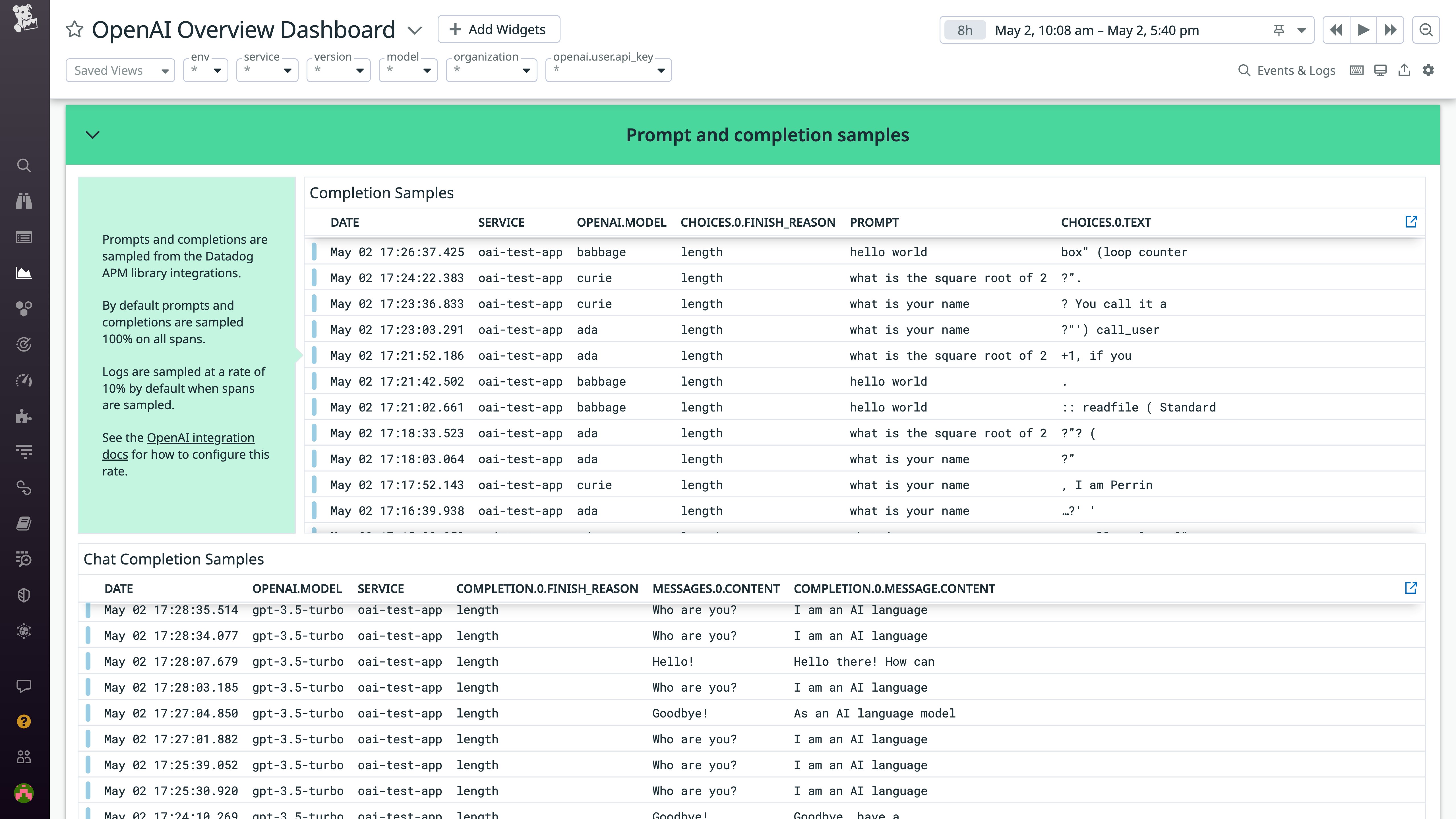
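As a rough illustration of what monitoring rate-limit headroom involves, the following Python sketch compares a per-minute limit with the amount remaining and flags readings that cross a warning threshold. It assumes you have paired limit and remaining values like those the rate-limit metrics report; the 80 percent threshold and the sample limits are hypothetical values, not OpenAI’s actual limits or Datadog’s monitor defaults.

```python
from dataclasses import dataclass


@dataclass
class RateLimitSnapshot:
    """One reading of a per-minute limit and how much of it remains."""
    limit: int      # e.g. a value of openai.ratelimit.requests or openai.ratelimit.tokens
    remaining: int  # e.g. the matching openai.ratelimit.remaining.* value

    def utilization(self) -> float:
        """Fraction of the per-minute limit already consumed (0.0 to 1.0)."""
        if self.limit <= 0:
            raise ValueError("limit must be positive")
        return (self.limit - self.remaining) / self.limit


# Hypothetical alert threshold; tune it to your own traffic.
WARN_THRESHOLD = 0.8


def near_limit(snapshot: RateLimitSnapshot, threshold: float = WARN_THRESHOLD) -> bool:
    """True when consumption has reached or crossed the warning threshold."""
    return snapshot.utilization() >= threshold


# Hypothetical readings for the request and token limits.
request_limit = RateLimitSnapshot(limit=3500, remaining=500)
token_limit = RateLimitSnapshot(limit=90000, remaining=60000)
print(f"requests: {request_limit.utilization():.0%} used, near limit: {near_limit(request_limit)}")
print(f"tokens: {token_limit.utilization():.0%} used, near limit: {near_limit(token_limit)}")
```

Alerting on utilization rather than on raw counts keeps the same threshold meaningful if your organization’s limits change.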

For even more granular visibility into OpenAI usage, you can instrument your Python applications to collect logs that capture the prompts submitted by users and applications in your organization, along with their corresponding completions. By default, 10 percent of traced requests emit logs containing prompts and completions.
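The default 10 percent figure describes rate-based sampling. The Python sketch below shows that general technique only: each traced request independently emits a log with probability equal to the sample rate. It is a conceptual illustration under that assumption, not Datadog’s tracer code; `should_log` is a hypothetical helper, and the RNG is seeded only so the run is reproducible.

```python
import random


def should_log(rng: random.Random, sample_rate: float = 0.1) -> bool:
    """Decide whether one traced request emits a prompt/completion log."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    # rng.random() is uniform on [0.0, 1.0), so this is true with probability sample_rate.
    return rng.random() < sample_rate


rng = random.Random(42)  # seeded so the run is reproducible
n_requests = 10_000
logged = sum(should_log(rng) for _ in range(n_requests))
print(f"logged {logged} of {n_requests} requests ({logged / n_requests:.1%})")
```

Over many requests the logged share converges on the sample rate, while any individual request may or may not be logged.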

Visibility into OpenAI prompts and completions helps you understand how users engage with your applications so that you can optimize them accordingly. For example, you may want to adjust the `max_tokens` parameter of your API requests to cap completion length and control costs, or the `temperature` parameter to tweak the “randomness” of the responses your users receive.
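`max_tokens` simply caps how many completion tokens a request can produce. The effect of `temperature` is less obvious, so here is a conceptual Python sketch of temperature scaling as it is commonly described for language models: the model’s scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into sampling probabilities. OpenAI does not publish its internal sampling code, so treat this as an illustration of the idea. The logits are made-up values, and the sketch rejects a temperature of 0 even though the API accepts one.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature almost all probability lands on the top-scoring token, so responses become more deterministic; at high temperature the distribution flattens and less likely tokens are sampled more often.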

Monitor and allocate costs based on token usage

Usage of the OpenAI API is billed according to token consumption, and token prices vary significantly from model to model. (For its latest models, including GPT-4, OpenAI sets distinct prices for prompt and completion tokens.) Because every request to the OpenAI API consumes tokens, usage costs can add up quickly.
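When prompt and completion tokens are priced separately, the cost of a request is each token count multiplied by its own per-token rate, summed. The Python sketch below shows that arithmetic. The model names `model-a` and `model-b` and all prices are placeholders invented for illustration, not OpenAI’s actual rates; check OpenAI’s pricing page for current figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-1,000-token prices in USD."""
    prompt_per_1k: float
    completion_per_1k: float


# Placeholder models and prices, NOT OpenAI's actual rates.
PRICES = {
    "model-a": Pricing(prompt_per_1k=0.03, completion_per_1k=0.06),
    "model-b": Pricing(prompt_per_1k=0.0015, completion_per_1k=0.002),
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of one request, pricing prompt and completion tokens separately."""
    p = PRICES[model]
    return (prompt_tokens * p.prompt_per_1k + completion_tokens * p.completion_per_1k) / 1000


cost = request_cost("model-a", prompt_tokens=1200, completion_tokens=400)
print(f"${cost:.4f}")  # $0.0600
```

Multiplying a per-request figure like this by daily request volume shows how quickly small per-token prices turn into significant spend.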

Datadog’s integration for OpenAI helps you understand the primary cost drivers for your OpenAI usage by tracking your total token consumption and breaking it down by prompt and completion tokens. The integration’s out-of-the-box dashboard also tracks the average total number of tokens per request, as well as the average numbers of prompt and completion tokens (i.e., the average prompt and completion lengths) per request.

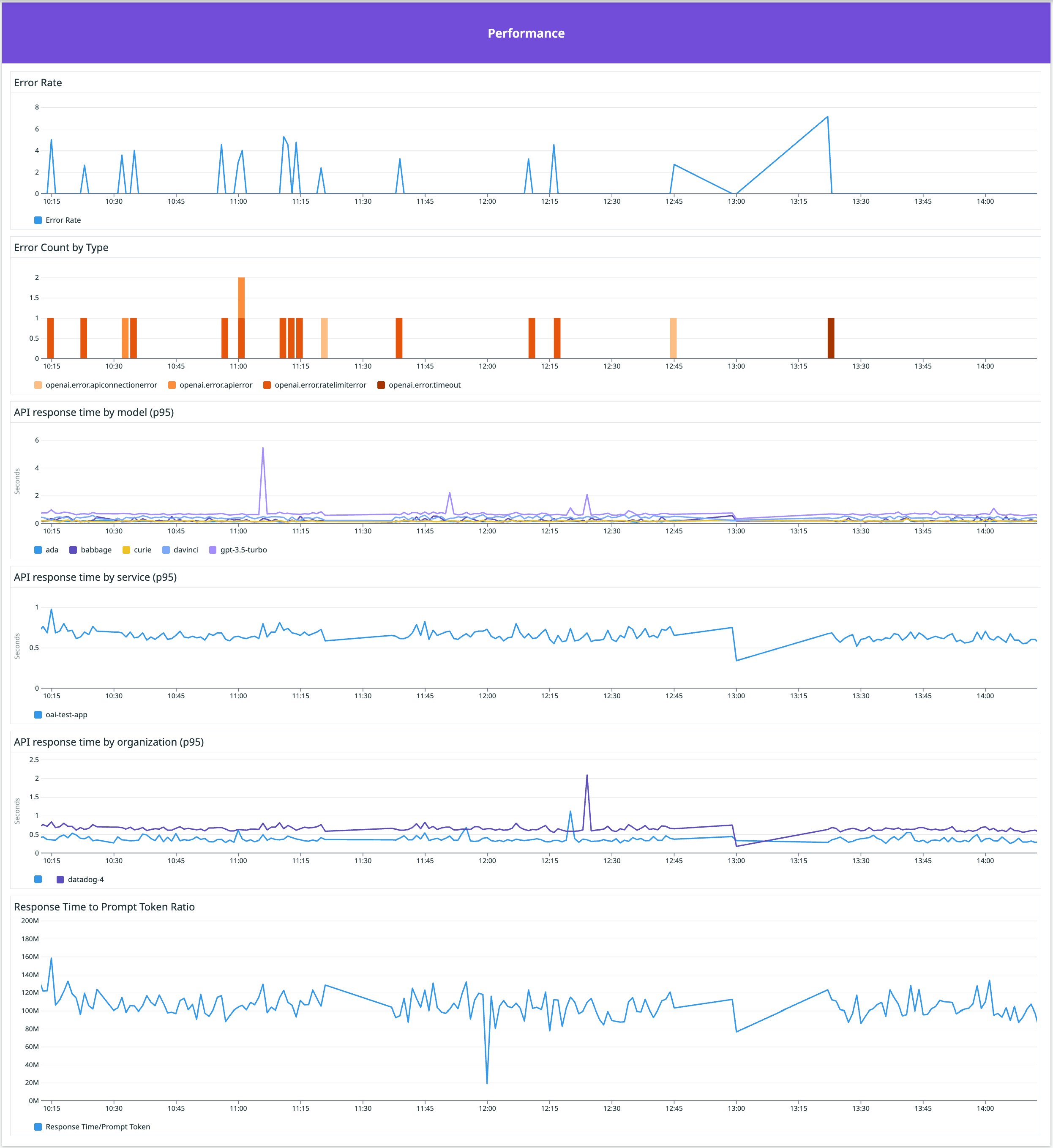
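The per-request averages on the dashboard are plain means over request-level token counts. This Python sketch computes the same three figures from a hypothetical list of `(prompt_tokens, completion_tokens)` pairs; the data and the `average_tokens` helper are illustrative, not part of the integration.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical (prompt_tokens, completion_tokens) pairs for recent requests.
USAGE: List[Tuple[int, int]] = [(120, 80), (300, 150), (60, 40), (520, 210)]


def average_tokens(usage: List[Tuple[int, int]]) -> Dict[str, float]:
    """Average prompt, completion, and total tokens per request."""
    if not usage:
        raise ValueError("need at least one request")
    return {
        "prompt": mean(p for p, _ in usage),
        "completion": mean(c for _, c in usage),
        "total": mean(p + c for p, c in usage),
    }


print(average_tokens(USAGE))
```

Comparing the prompt and completion averages shows which side of each request is driving token consumption, and therefore which price dominates your bill.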

Analyze API response times to troubleshoot and optimize performance

In addition to tracking internal usage patterns and costs, Datadog’s integration helps you monitor the performance of the OpenAI API. The integration collects metrics on API error rates and response times, and the out-of-the-box dashboard breaks this data down by model, as well as by the services and organizations using each model.
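To show what a per-model breakdown of errors and latency involves, here is a Python sketch that computes request count, error rate, and 95th-percentile latency for each model. The `Call` record shape and the sample data are hypothetical, and the nearest-rank percentile used here is one of several common percentile definitions, not necessarily the one Datadog uses.

```python
import math
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Call(NamedTuple):
    """One API call (hypothetical shape for illustration)."""
    model: str
    latency_ms: float
    ok: bool


def nearest_rank(sorted_values: List[float], pct: int) -> float:
    """Nearest-rank percentile of an already-sorted, non-empty list."""
    rank = math.ceil(pct * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def summarize(calls: List[Call]) -> Dict[str, Dict[str, float]]:
    """Request count, error rate, and p95 latency for each model."""
    by_model: Dict[str, List[Call]] = defaultdict(list)
    for call in calls:
        by_model[call.model].append(call)
    summary: Dict[str, Dict[str, float]] = {}
    for model, group in by_model.items():
        latencies = sorted(c.latency_ms for c in group)
        errors = sum(1 for c in group if not c.ok)
        summary[model] = {
            "requests": len(group),
            "error_rate": errors / len(group),
            "p95_ms": nearest_rank(latencies, 95),
        }
    return summary


CALLS = [
    Call("model-a", 400, True),
    Call("model-a", 420, True),
    Call("model-a", 450, True),
    Call("model-a", 2100, False),
    Call("model-b", 150, True),
    Call("model-b", 160, True),
]

for model, stats in summarize(CALLS).items():
    print(model, stats)
```

Looking at a high percentile rather than the mean keeps a few very slow calls from being hidden by many fast ones.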

The out-of-the-box dashboard also tracks the ratio of response times to the volume of prompt tokens, so that you can easily distinguish truly anomalous latencies from prolonged response times caused by spikes in requests.

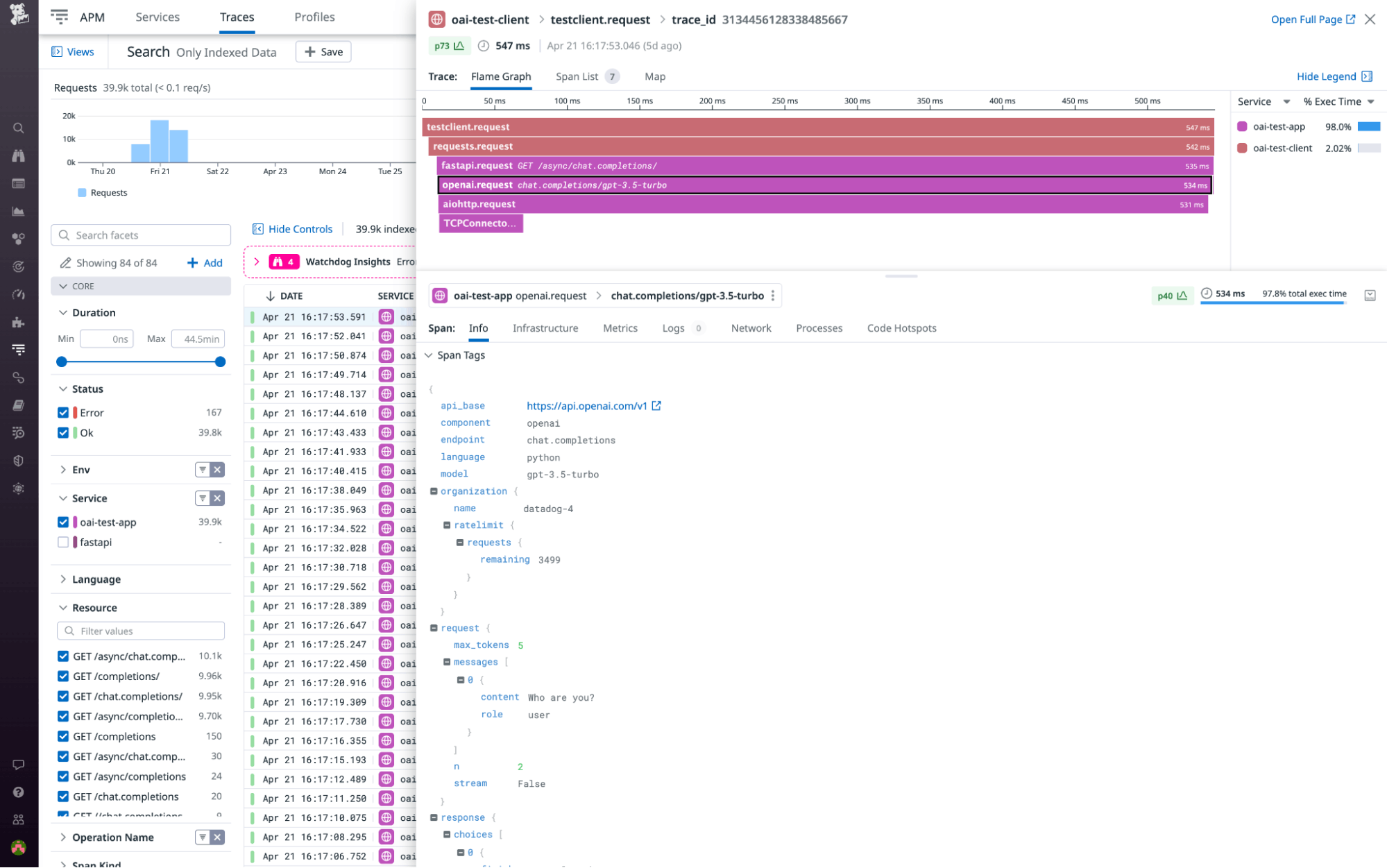
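The value of normalizing response time by prompt size is that a slow response to a very large prompt is expected, while the same delay on a small prompt is not. The Python sketch below illustrates that reasoning: it computes milliseconds per prompt token for each request and flags requests whose ratio exceeds a multiple of the median. The sample data and the 3x factor are hypothetical, and this is a simple heuristic for illustration, not the dashboard’s or Datadog’s anomaly detection.

```python
from statistics import median
from typing import List, Tuple

# Hypothetical (response_time_ms, prompt_tokens) pairs for recent requests.
SAMPLES: List[Tuple[float, int]] = [
    (800, 400),
    (1600, 820),
    (400, 190),
    (3000, 1500),  # slowest overall, but proportionate to its large prompt
    (2500, 300),   # slow relative to its small prompt
]


def ms_per_prompt_token(samples: List[Tuple[float, int]]) -> List[float]:
    """Response time divided by prompt token count for each request."""
    ratios = []
    for ms, tokens in samples:
        if tokens <= 0:
            raise ValueError("prompt token count must be positive")
        ratios.append(ms / tokens)
    return ratios


def flag_anomalies(samples: List[Tuple[float, int]], factor: float = 3.0) -> List[int]:
    """Indexes of requests whose ms-per-token exceeds factor times the median."""
    ratios = ms_per_prompt_token(samples)
    baseline = median(ratios)
    return [i for i, r in enumerate(ratios) if r > factor * baseline]


print(flag_anomalies(SAMPLES))  # [4]
```

Only the 2,500 ms request is flagged; the 3,000 ms request has the highest raw latency but a normal time per prompt token.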

For in-depth insight into performance issues, you can use traces. Traces and spans provide rich contextual information on individual requests, such as the specific models that were queried and the precise content of prompts and completions.

Understand and optimize your organization’s OpenAI usage

Datadog’s OpenAI integration provides critical insights into OpenAI usage patterns, costs, and performance. As the use of OpenAI continues to proliferate rapidly throughout organizations, our integration enables you to track that spread as well as understand and optimize the use of OpenAI models across various teams and within evolving applications. Check out our documentation to start monitoring OpenAI today. If you’re new to Datadog, sign up for a 14-day free trial.