Emily Chang

Editor’s note: Elasticsearch uses the term "master" to describe its architecture and certain metric names. Datadog does not use this term. In this blog post, we use "primary" in its place.

In Elasticsearch, a healthy cluster is a balanced cluster: primary and replica shards are distributed across all nodes for durable reliability in case of node failure.

But what should you do when you see shards lingering in an UNASSIGNED state?

Before we dive into some solutions, let's verify that the unassigned shards contain data that we need to preserve (if not, deleting these shards is the most straightforward way to resolve the issue). If you already know the data's worth saving, jump to the solutions:

- Shard allocation is purposefully delayed

- Too many shards, not enough nodes

- You need to re-enable shard allocation

- Shard data no longer exists in the cluster

- Low disk watermark

- Multiple Elasticsearch versions

The commands in this post are formatted under the assumption that you are running each Elasticsearch instance's HTTP service on the default port (9200). They are also directed to localhost, which assumes that you are submitting the request locally; otherwise, replace localhost with your node's IP address.


Pinpointing problematic shards

Elasticsearch's cat shards API will tell you which shards are unassigned, and why:

curl -XGET 'localhost:9200/_cat/shards?h=index,shard,prirep,state,unassigned.reason' | grep UNASSIGNED

Each row lists the name of the index, the shard number, whether it is a primary (p) or replica (r) shard, and the reason it is unassigned:

constant-updates 0 p UNASSIGNED NODE_LEFT node_left

If you're running version 5+ of Elasticsearch, you can also use the cluster allocation explain API to try to garner more information about shard allocation issues:

curl -XGET 'localhost:9200/_cluster/allocation/explain?pretty'

The resulting output will provide helpful details about why certain shards in your cluster remain unassigned:

{
  "index" : "testing",
  "shard" : 0,
  "primary" : false,
  "current_state" : "unassigned",
  "unassigned_info" : {
    "reason" : "INDEX_CREATED",
    "at" : "2018-04-09T21:48:23.293Z",
    "last_allocation_status" : "no_attempt"
  },
  "can_allocate" : "no",
  "allocate_explanation" : "cannot allocate because allocation is not permitted to any of the nodes",
  "node_allocation_decisions" : [
    {
      "node_id" : "t_DVRrfNS12IMhWvlvcfCQ",
      "node_name" : "t_DVRrf",
      "transport_address" : "127.0.0.1:9300",
      "node_decision" : "no",
      "weight_ranking" : 1,
      "deciders" : [
        {
          "decider" : "same_shard",
          "decision" : "NO",
          "explanation" : "the shard cannot be allocated to the same node on which a copy of the shard already exists"
        }
      ]
    }
  ]
}

In this case, the API clearly explains why the replica shard remains unassigned: "the shard cannot be allocated to the same node on which a copy of the shard already exists". To view more details about this particular issue and how to resolve it, skip ahead to "Reason 2: Too many shards, not enough nodes".
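
When many shards are unassigned, a tally of the reasons reported by the cat shards API can show at a glance whether you are dealing with one cause or several. The following is a minimal sketch, not an official tool: `count_unassigned_by_reason` is a hypothetical helper name, and it assumes the five-column layout requested earlier (index, shard, prirep, state, unassigned.reason).

```shell
#!/bin/sh
# Hypothetical helper: reads `_cat/shards` rows on stdin
# (columns: index shard prirep state unassigned.reason)
# and prints "<reason> <count>" for every UNASSIGNED shard.
count_unassigned_by_reason() {
  awk '$4 == "UNASSIGNED" { count[$5]++ }
       END { for (reason in count) print reason, count[reason] }' | sort
}

# Usage against a live cluster (assumes the default localhost:9200 endpoint):
#   curl -s 'localhost:9200/_cat/shards?h=index,shard,prirep,state,unassigned.reason' \
#     | count_unassigned_by_reason
```

A single dominant reason (for example, NODE_LEFT) usually points to one of the scenarios below, while a mix of reasons suggests working through them one at a time.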

If it looks like the unassigned shards belong to an index you thought you deleted already, or an outdated index that you don't need anymore, then you can delete the index to restore your cluster status to green:

curl -XDELETE 'localhost:9200/index_name/'

If that didn't solve the issue, read on to try other solutions.

Reason 1: Shard allocation is purposefully delayed

When a node leaves the cluster, the primary node temporarily delays shard reallocation to avoid needlessly wasting resources on rebalancing shards, in the event the original node is able to recover within a certain period of time (one minute, by default). If this is the case, your logs should look something like this:

[TIMESTAMP][INFO][cluster.routing] [PRIMARY NODE NAME] delaying allocation for [54] unassigned shards, next check in [1m]

You can dynamically modify the delay period like so:

curl -XPUT "localhost:9200/<INDEX_NAME>/_settings?pretty" -H 'Content-Type: application/json' -d'
{
  "settings": {
    "index.unassigned.node_left.delayed_timeout": "5m"
  }
}'

Replacing <INDEX_NAME> with _all will update the threshold for all indices in your cluster.

After the delay period is over, you should start seeing the primary assigning those shards. If not, keep reading to explore solutions to other potential causes.
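
One way to check whether unassigned shards are still inside the delay window is to compare two counters from the cluster health API, `unassigned_shards` and `delayed_unassigned_shards`. The sketch below is only an illustration: `delayed_shard_summary` is a hypothetical helper name, and it pulls the numbers out with sed instead of a real JSON parser, an assumption made to avoid extra dependencies.

```shell
#!/bin/sh
# Hypothetical helper: reads a `_cluster/health` JSON response on stdin and
# reports how many unassigned shards are waiting on the delay timeout.
# The sed-based extraction is a rough shortcut, not a general JSON parser.
delayed_shard_summary() {
  health=$(cat)
  unassigned=$(printf '%s\n' "$health" \
    | sed -n 's/.*"unassigned_shards" *: *\([0-9][0-9]*\).*/\1/p' | head -n 1)
  delayed=$(printf '%s\n' "$health" \
    | sed -n 's/.*"delayed_unassigned_shards" *: *\([0-9][0-9]*\).*/\1/p' | head -n 1)
  echo "${delayed:-0} of ${unassigned:-0} unassigned shards are delayed"
}

# Usage (assumes the default localhost:9200 endpoint):
#   curl -s 'localhost:9200/_cluster/health' | delayed_shard_summary
```

If the delayed count is zero while shards are still unassigned, the delay timeout is probably not the cause.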

Reason 2: Too many shards, not enough nodes

As nodes join and leave the cluster, the primary node reassigns shards automatically, ensuring that multiple copies of a shard aren't assigned to the same node. In other words, the primary node will not assign a primary shard to the same node as its replica, nor will it assign two replicas of the same shard to the same node. A shard may linger in an unassigned state if there are not enough nodes to distribute the shards accordingly.

To avoid this issue, make sure that every index in your cluster is initialized with fewer replicas per primary shard than the number of nodes in your cluster by following the formula below:

N >= R + 1

where N is the number of nodes in your cluster, and R is the largest shard replication factor across all indices in your cluster.
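
The formula can be checked against the replica counts reported by the cat indices API. This is a minimal sketch under stated assumptions: `over_replicated_indices` is a hypothetical helper name, the node count is passed in by hand, and the input is the two-column output of `_cat/indices?h=index,rep`.

```shell
#!/bin/sh
# Hypothetical helper: usage is `over_replicated_indices <node_count>`.
# Reads "index rep" rows on stdin and prints every index whose
# replica count R violates N >= R + 1 for the given node count N.
over_replicated_indices() {
  awk -v nodes="$1" '$2 + 1 > nodes {
    print $1, "needs", $2 + 1, "nodes, cluster has", nodes
  }'
}

# Usage (assumes the default localhost:9200 endpoint and a 3-node cluster):
#   curl -s 'localhost:9200/_cat/indices?h=index,rep' | over_replicated_indices 3
```

No output means every index satisfies the formula for that node count.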

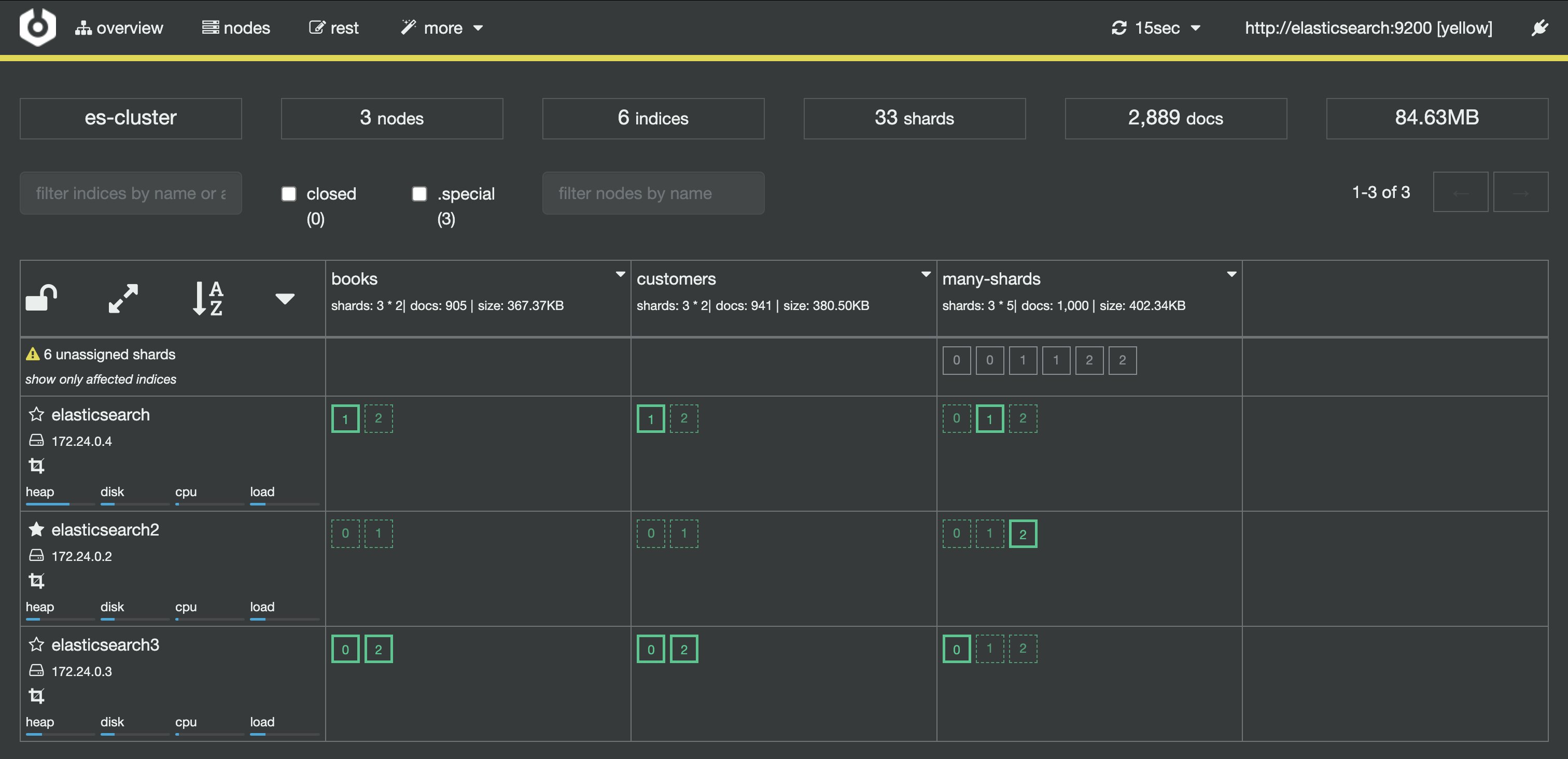

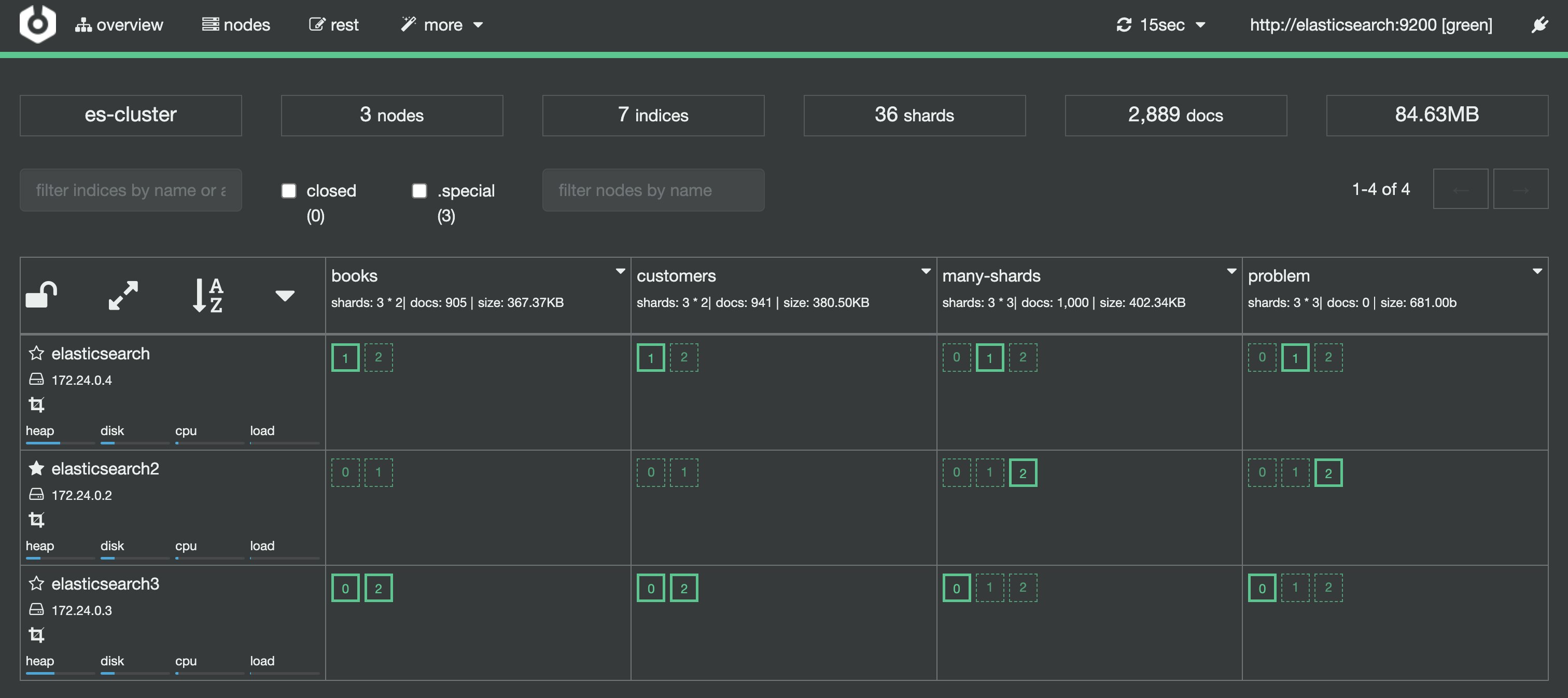

In the screenshot below, the many-shards index is stored on three primary shards and each primary has four replicas. Six of the index's 15 shards are unassigned because our cluster only contains three nodes. Two replicas of each primary shard haven't been assigned because each of the three nodes already contains a copy of that shard.
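
The arithmetic behind that count can be written out as a short calculation. It is a sketch that assumes the only constraint in play is "one copy of a shard per node"; `expected_unassigned` is a hypothetical helper name, and other allocation rules (disk watermarks, allocation filters) are ignored.

```shell
#!/bin/sh
# Hypothetical helper: usage is
#   expected_unassigned <primaries> <replicas_per_primary> <nodes>
# Each shard has (replicas + 1) copies, and at most one copy fits on a node,
# so any copies beyond the node count stay unassigned.
expected_unassigned() {
  primaries=$1
  replicas=$2
  nodes=$3
  copies=$((replicas + 1))
  missing=$((copies - nodes))
  if [ "$missing" -lt 0 ]; then
    missing=0
  fi
  echo $((primaries * missing))
}

# The many-shards example: 3 primaries, 4 replicas each, 3 nodes.
expected_unassigned 3 4 3   # prints 6
```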

To resolve this issue, you can either add more data nodes to the cluster or reduce the number of replicas. In our example, we either need to add at least two more nodes in the cluster or reduce the replication factor to two, like so:

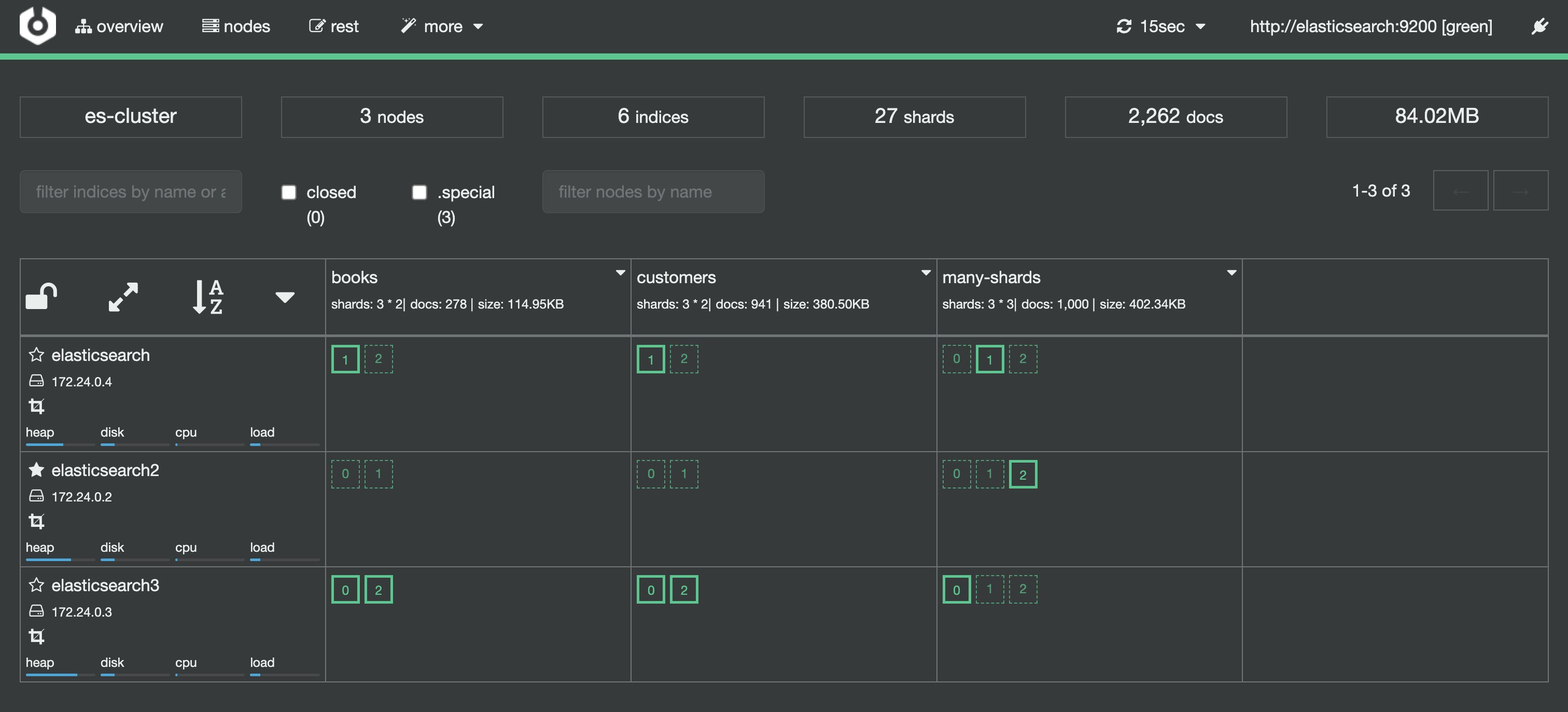

curl -XPUT "localhost:9200/<INDEX_NAME>/_settings?pretty" -H 'Content-Type: application/json' -d'
{
  "number_of_replicas": 2
}'

After reducing the number of replicas, take a peek at Cerebro to see if all shards have been assigned.

Reason 3: You need to re-enable shard allocation

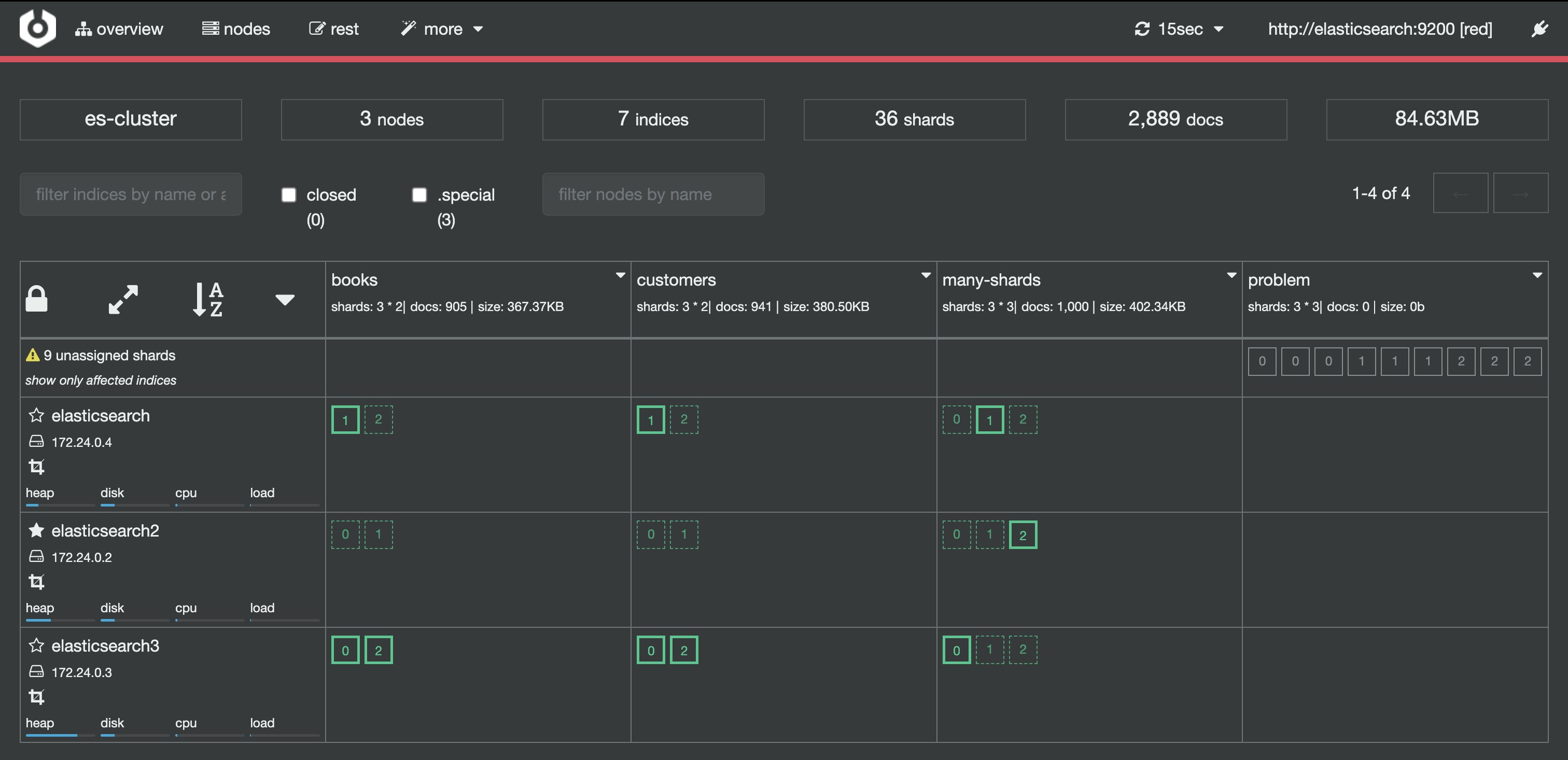

In the Cerebro screenshot below, an index has just been added to the cluster, but its shards haven't been assigned.

Shard allocation is enabled by default on all nodes, but you may have disabled shard allocation at some point (for example, in order to perform a rolling restart), and forgotten to re-enable it.

To enable shard allocation, use the Cluster Update Settings API:

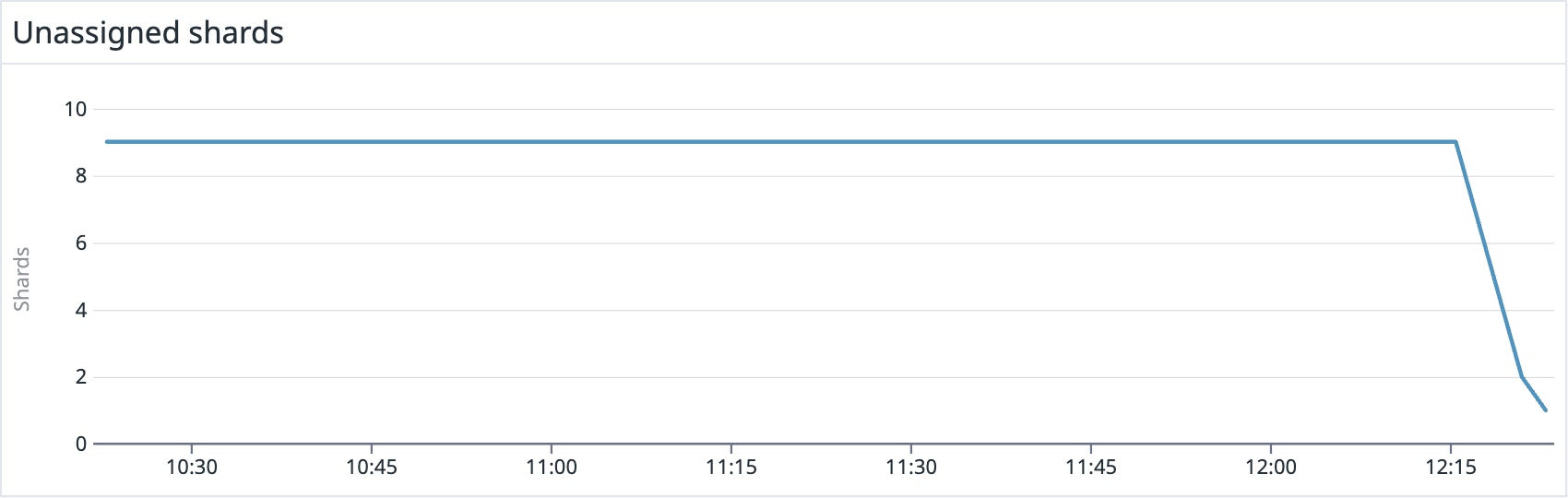

curl -XPUT "localhost:9200/_cluster/settings?pretty" -H 'Content-Type: application/json' -d'
{
  "transient" : {
    "cluster.routing.allocation.enable" : "all"
  }
}'

If this solved the problem, your Cerebro or Datadog dashboard should show the number of unassigned shards decreasing as they are successfully assigned to nodes.
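
To confirm what the setting is currently set to, you can read it back from the cluster settings API. The sketch below is a rough illustration: `current_allocation_setting` is a hypothetical helper name, it relies on the `flat_settings=true` query parameter so the key appears on one line, and it uses sed instead of a JSON parser. It also assumes that an absent key means the default of "all" applies, which will be wrong if the value was set in a node's configuration file.

```shell
#!/bin/sh
# Hypothetical helper: reads a `_cluster/settings?flat_settings=true` JSON
# response on stdin and prints the value of cluster.routing.allocation.enable.
# Falls back to "all" (the documented default) when the key is not set.
current_allocation_setting() {
  value=$(sed -n 's/.*"cluster\.routing\.allocation\.enable" *: *"\([a-z_]*\)".*/\1/p' \
    | tail -n 1)
  echo "${value:-all}"
}

# Usage (assumes the default localhost:9200 endpoint):
#   curl -s 'localhost:9200/_cluster/settings?flat_settings=true' \
#     | current_allocation_setting
```

A result of "none", "primaries", or "new_primaries" means that some or all shard allocation is switched off.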

It looks like this solved the issue for all of our unassigned shards, with one exception: shard 0 of the constant-updates index. Let's explore other possible reasons why the shard remains unassigned.

Reason 4: Shard data no longer exists in the cluster

In this case, primary shard 0 of the constant-updates index is unassigned. It may have been created on a node without any replicas (a technique used to speed up the initial indexing process), and the node left the cluster before the data could be replicated. The primary detects the shard in its global cluster state file, but can't locate the shard's data in the cluster.

Another possibility is that a node may have encountered an issue while rebooting. Normally, when a node resumes its connection to the cluster, it relays information about its on-disk shards to the primary node, which then transitions those shards from "unassigned" to "assigned/started". When this process fails for some reason (e.g. the node's storage has been damaged in some way), the shards may remain unassigned.

In this scenario, you have to decide how to proceed: try to get the original node to recover and rejoin the cluster (and do not force allocate the primary shard), or force allocate the shard using the Cluster Reroute API and reindex the missing data using the original data source, or from a backup.

If you decide to go with the latter (forcing allocation of a primary shard), the caveat is that you will be assigning an "empty" shard. If the node that contained the original primary shard data were to rejoin the cluster later, its data would be overwritten by the newly created (empty) primary shard, because it would be considered a "newer" version of the data. Before proceeding with this action, you may want to retry allocation instead, which would allow you to preserve the data stored on that shard.

If you understand the implications and still want to force allocate the unassigned primary shard, you can do so by using the allocate_empty_primary command of the Cluster Reroute API. The following request allocates primary shard 0 of the constant-updates index to a specific node:

curl -XPOST "localhost:9200/_cluster/reroute?pretty" -H 'Content-Type: application/json' -d'
{
  "commands" : [
    {
      "allocate_empty_primary" : {
        "index" : "constant-updates",
        "shard" : 0,
        "node" : "<NODE_NAME>",
        "accept_data_loss" : "true"
      }
    }
  ]
}'

Note that you'll need to specify "accept_data_loss" : "true" to confirm that you are prepared to lose the data on the shard. If you don't include this parameter, you will see an error like the one below:

{
  "error" : {
    "root_cause" : [
      {
        "type" : "remote_transport_exception",
        "reason" : "[NODE_NAME][127.0.0.1:9300][cluster:admin/reroute]"
      }
    ],
    "type" : "illegal_argument_exception",
    "reason" : "[allocate_empty_primary] allocating an empty primary for [constant-updates][0] can result in data loss. Please confirm by setting the accept_data_loss parameter to true"
  },
  "status" : 400
}

You will now need to reindex the missing data, or restore as much as you can from a backup snapshot using the Snapshot and Restore API.

Reason 5: Low disk watermark

The primary node may not be able to assign shards if there are not enough nodes with sufficient disk space (it will not assign shards to nodes that have over 85 percent disk in use). Once a node has reached this level of disk usage, or what Elasticsearch calls a "low disk watermark", it will not be assigned more shards.

You can check the disk space on each node in your cluster (and see which shards are stored on each of those nodes) by querying the cat API:

curl -s 'localhost:9200/_cat/allocation?v'

Consult this article for options on what to do if any particular node is running low on disk space (remove outdated data and store it off-cluster, add more nodes, upgrade your hardware, etc.).
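
To pick out only the nodes that have reached the watermark, the cat allocation output can be filtered on its `disk.percent` column. This is a minimal sketch: `nodes_over_watermark` is a hypothetical helper name, and it assumes the two-column layout `h=disk.percent,node` and node names without spaces. Because the reported percentage is rounded, the sketch flags nodes at or above the limit.

```shell
#!/bin/sh
# Hypothetical helper: usage is `nodes_over_watermark [limit_percent]`.
# Reads "disk.percent node" rows on stdin and prints the nodes whose disk
# usage is at or above the limit (85 by default). Rows without a numeric
# first column, such as the UNASSIGNED summary row, are skipped.
nodes_over_watermark() {
  awk -v limit="${1:-85}" '$1 ~ /^[0-9.]+$/ && $1 + 0 >= limit + 0 {
    print $2, $1 "%"
  }'
}

# Usage (assumes the default localhost:9200 endpoint):
#   curl -s 'localhost:9200/_cat/allocation?h=disk.percent,node' \
#     | nodes_over_watermark 85
```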

If your nodes have large disk capacities, the default low watermark (85 percent disk usage) may be too low. You can use the Cluster Update Settings API to change cluster.routing.allocation.disk.watermark.low and/or cluster.routing.allocation.disk.watermark.high. For example, this Stack Overflow thread points out that if your nodes have 5TB disk capacity, you can probably safely increase the low disk watermark to 90 percent:

curl -XPUT "localhost:9200/_cluster/settings" -H 'Content-Type: application/json' -d'
{
  "transient": {
    "cluster.routing.allocation.disk.watermark.low": "90%"
  }
}'

If you want your configuration changes to persist upon cluster restart, replace "transient" with "persistent", or update these values in your configuration file. You can choose to update these settings by either using byte or percentage values, but be sure to remember this important note from the Elasticsearch documentation: "Percentage values refer to used disk space, while byte values refer to free disk space."
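
Because the two forms point in opposite directions, it can help to convert a used-space percentage into the equivalent amount of free space. The calculation below is a small worked example: `free_gb_at_watermark` is a hypothetical helper name, and the 5TB disk from the example above is assumed to be 5120 GB.

```shell
#!/bin/sh
# Hypothetical helper: usage is `free_gb_at_watermark <total_gb> <used_percent>`.
# Prints how many GB are still free when the disk reaches the given
# used-space percentage (integer arithmetic, rounded down).
free_gb_at_watermark() {
  total_gb=$1
  used_percent=$2
  echo $(( total_gb * (100 - used_percent) / 100 ))
}

free_gb_at_watermark 5120 85   # prints 768
free_gb_at_watermark 5120 90   # prints 512
```

On a disk of this size, a low watermark of 90 percent still leaves roughly half a terabyte free, which is the reasoning behind raising the default on large disks.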

Reason 6: Multiple Elasticsearch versions

This problem only arises in clusters running more than one version of Elasticsearch (perhaps in the middle of a rolling upgrade). According to the Elasticsearch documentation, the primary node will not assign a primary shard’s replicas to any node running an older version. For example, if a primary shard is running on version 1.4, the primary node will not be able to assign that shard’s replicas to any node that is running any version prior to 1.4.

If you try to manually reroute a shard from a newer-version node to an older-version node, you will see an error like the one below:

[NO(target node version [XXX] is older than source node version [XXX])]

Elasticsearch does not support rollbacks to previous versions, only upgrades. Upgrading the nodes running the older version should solve the problem if this is indeed the issue at hand.
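
To confirm whether your cluster is actually running mixed versions, you can list the version reported by each node with the cat nodes API. The following is a minimal sketch: `versions_in_cluster` is a hypothetical helper name, and it assumes the single-column output of `_cat/nodes?h=version`.

```shell
#!/bin/sh
# Hypothetical helper: reads one Elasticsearch version per line on stdin
# and prints "<version> <node_count>" for each distinct version.
versions_in_cluster() {
  awk 'NF { print $1 }' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage (assumes the default localhost:9200 endpoint):
#   curl -s 'localhost:9200/_cat/nodes?h=version' | versions_in_cluster
```

More than one line of output means the cluster contains nodes on different versions.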

Have you tried turning it off and on again?

If none of the scenarios above apply to your situation, you still have the option of reindexing the missing data from the original data source, or restoring the affected index from an old snapshot, as explained here.

Monitoring for unassigned shards

It's important to fix unassigned shards as soon as possible, as they indicate that data is missing/unavailable, or that your cluster is not configured for optimal reliability. If you're already using Datadog, turn on the Elasticsearch integration and you'll immediately begin monitoring for unassigned shards and other key Elasticsearch performance and health metrics. If you don't use Datadog but would like to, sign up for a free trial.