Nimisha Saxena

Clement Gaboriau Couanau

Security teams often struggle to keep up with rapidly evolving threats, especially when they have to manually manage detection rules. Without automation or version control, it’s difficult to maintain consistency across environments, track changes, or deploy updates quickly.

Datadog Cloud SIEM supports detection as code, a structured approach to authoring, testing, deploying, and managing detection rules using code and infrastructure-as-code tools like Terraform. This enables teams to automate rule workflows, minimize human error, and integrate seamlessly with CI/CD pipelines.

In this post, we’ll walk through key aspects of implementing detection as code with Datadog Cloud SIEM:

- Validating rule format with linting

- Testing detection logic with mock and historical data

- Converting detection rules to Terraform

- Deploying rules via API or Terraform

- Tracking changes with rule versioning

- Scaling rule management through CI/CD

Validate rule format with linting

Before deploying a detection rule, it’s important to ensure that it adheres to Datadog’s expected structure. Linting enables you to catch format and schema issues early in the development process.

You can use the Datadog API to submit detection rules for validation. This step helps ensure that only well-formed rules progress through your pipeline. In the example below, we attempt to validate a rule to detect unexpected external access to Snowflake tables. The rule fails due to an invalid value for the duration after which a learned value is forgotten (options.newValueOptions.forgetAfter).

    curl https://api.datadoghq.com/api/v2/security_monitoring/rules/validation \
      -H "DD-API-KEY: $DD_API_KEY" \
      -H "DD-APPLICATION-KEY: $DD_APP_KEY" \
      -H "Content-Type: application/json" \
      --data '{
        "name": "Snowflake external access occurred",
        "isEnabled": true,
        "queries": [
          {
            "query": "source:snowflake snowflake.table:external_access",
            "groupByFields": [ "@network.client.ip" ],
            "hasOptionalGroupByFields": false,
            "distinctFields": [],
            "metric": "@network.client.ip",
            "metrics": [ "@network.client.ip" ],
            "aggregation": "new_value",
            "name": "",
            "dataSource": "logs"
          }
        ],
        "options": {
          "evaluationWindow": 0,
          "detectionMethod": "new_value",
          "maxSignalDuration": 86400,
          "keepAlive": 3600,
          "newValueOptions": {
            "forgetAfter": 34,
            "learningDuration": 7,
            "learningThreshold": 0,
            "learningMethod": "duration"
          },
          "decreaseCriticalityBasedOnEnv": true
        },
        "cases": [ { "name": "", "status": "medium", "notifications": [] } ],
        "message": "## Goal\n\nDetect when an external access event occurs in your Snowflake environment.\n",
        "tags": [
          "scope:snowflake",
          "technique:T1199-trusted-relationship",
          "mitre_platform:saas",
          "source:snowflake",
          "team:cloud-siem",
          "security:attack",
          "tactic:TA0001-initial-access",
          "mitre_platform:cloud"
        ],
        "hasExtendedTitle": true,
        "type": "log_detection",
        "filters": []
      }'

    {
      "error": {
        "code": "InvalidArgument",
        "message": "Invalid rule configuration",
        "details": [
          {
            "code": "InvalidArgument",
            "message": "forgetAfter value is invalid. Maximum allowed value (in days): 30",
            "target": "forgetAfter"
          }
        ]
      }
    }

Automated validation eliminates the need for manual checks, making your workflow more efficient and less error-prone.
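In a CI job, the validation call and its error handling could be wrapped in a small script. The following is a minimal Python sketch rather than an official client. It assumes the endpoint and headers from the curl example above, and that a failed validation returns an error body shaped like the response shown there. The function names are hypothetical.

```python
import json
import urllib.error
import urllib.request

VALIDATION_URL = "https://api.datadoghq.com/api/v2/security_monitoring/rules/validation"


def build_validation_request(rule, api_key, app_key):
    """Build (but do not send) the POST request that submits a rule for validation."""
    return urllib.request.Request(
        VALIDATION_URL,
        data=json.dumps(rule).encode("utf-8"),
        headers={
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def summarize_errors(body):
    """Turn an error body into readable 'target: message' lines."""
    error = body.get("error", {})
    details = error.get("details", [])
    if not details:
        # Fall back to the top-level message when no per-field details exist.
        return [error.get("message", "unknown validation error")]
    return [f"{d.get('target', '?')}: {d.get('message', '')}" for d in details]


def validate_rule(rule, api_key, app_key):
    """Return a list of problems; an empty list means the rule was accepted."""
    request = build_validation_request(rule, api_key, app_key)
    try:
        with urllib.request.urlopen(request) as response:
            response.read()
        return []
    except urllib.error.HTTPError as err:
        # Assumption: validation failures come back as a JSON error body.
        return summarize_errors(json.loads(err.read().decode("utf-8")))
```

Returning a list of problems instead of raising makes it straightforward to collect failures across many rules before failing the build.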

Test detection rules against mock and log data

Once rules are linted, testing them helps confirm that they behave as expected, without triggering false positives or missing actual threats. With the Datadog API, you can unit test rules with mock data, or run them against historical logs to test them in real-world situations.

Unit testing with mock data

You can use the test-a-rule API to validate a rule’s query logic (rather than the case logic) against mock data in isolation before using real log data. The response contains the assertion results, returned in the same order as the rule query payloads: true if a payload’s result matches its expectedResult value, and false otherwise.

In the example below, we send three query payloads to test query logic that looks for logs sent from the source my-source and that contain a host tag.

    curl https://api.datadoghq.com/api/v2/security_monitoring/rules/test \
      -H "DD-API-KEY: $DD_API_KEY" \
      -H "DD-APPLICATION-KEY: $DD_APP_KEY" \
      -H "Content-Type: application/json" \
      --data '{
        "rule": {
          "cases": [
            { "condition": "a > 5", "name": "High count", "notifications": [], "status": "high" }
          ],
          "isEnabled": true,
          "name": "Test Threshold Rule",
          "message": "Test Threshold Rule",
          "options": { "evaluationWindow": 900, "keepAlive": 3600, "maxSignalDuration": 86400 },
          "queries": [
            {
              "aggregation": "count",
              "distinctFields": [],
              "groupByFields": [ "host" ],
              "name": "a",
              "query": "source:my-source"
            }
          ],
          "tags": [],
          "type": "log_detection"
        },
        "ruleQueryPayloads": [
          {
            "expectedResult": true,
            "index": 0,
            "payload": { "ddsource": "my-source", "host": "i-9399", "tags": [] }
          },
          {
            "expectedResult": false,
            "index": 0,
            "payload": { "ddsource": "not-my-source", "host": "i-9399", "tags": [] }
          },
          {
            "expectedResult": false,
            "index": 0,
            "payload": { "ddsource": "my-source", "tags": [] }
          }
        ]
      }'

    {"results":[true,true,true]}

The first payload includes a host tag and comes from the correct my-source log source, so we expect it to match (true). The second payload has a host tag but comes from a different log source, so its expected result is false. The final payload has the correct source but no host tag, so it should not match either (false). As the results show (true, true, true), every payload produced its expected result.

Mock data enables rapid feedback cycles, helping you fix logic errors early in the rule development process.
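To turn the results array into actionable feedback, a test script can map each false entry back to the payload that produced it. The sketch below assumes, as described above, that results are returned in the same order as the entries in ruleQueryPayloads. The helper name is hypothetical.

```python
def failed_assertions(rule_query_payloads, results):
    """Return (position, payload) pairs whose assertion came back false.

    Assumes `results` is ordered like `rule_query_payloads`.
    """
    if len(rule_query_payloads) != len(results):
        raise ValueError("expected one result per rule query payload")
    return [
        (position, entry["payload"])
        for position, (entry, passed) in enumerate(zip(rule_query_payloads, results))
        if not passed
    ]


payloads = [
    {"expectedResult": True, "index": 0,
     "payload": {"ddsource": "my-source", "host": "i-9399", "tags": []}},
    {"expectedResult": False, "index": 0,
     "payload": {"ddsource": "not-my-source", "host": "i-9399", "tags": []}},
    {"expectedResult": False, "index": 0,
     "payload": {"ddsource": "my-source", "tags": []}},
]

# With the response from the example, no assertion fails.
print(failed_assertions(payloads, [True, True, True]))
```

A CI step could fail the build whenever the returned list is non-empty and print the offending payloads for debugging.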

Back testing with historical data

To understand how a rule performs under real-world conditions, you can back test it against historical logs using the run-a-historical-job API.

The command below runs a historical job on the rule with the ID nr5-nrp-9dm, using logs from the github-audit-logs index across a specified one-hour time range. It returns the ID of the newly created historical job, a9d2c2e5-cec4-466c-885e-d170a29ec94d.

    curl https://app.datadoghq.com/api/v2/siem-historical-detections/jobs \
      -H "DD-API-KEY: $DD_API_KEY" \
      -H "DD-APPLICATION-KEY: $DD_APP_KEY" \
      -H "Content-Type: application/json" \
      --data '{
        "data": {
          "type": "historicalDetectionsJobCreate",
          "attributes": {
            "fromRule": {
              "from": 1748002971780,
              "to": 1748006571827,
              "index": "github-audit-logs",
              "id": "nr5-nrp-9dm",
              "notifications": []
            }
          }
        }
      }'

    {"data":{"id":"a9d2c2e5-cec4-466c-885e-d170a29ec94d","type":"historicalDetectionsJob"}}

This approach surfaces insights about how a rule would have behaved during past incidents, helping you fine-tune its effectiveness and reduce noise.
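The from and to values in the request are epoch timestamps in milliseconds. As a rough sketch, a script could compute the window and assemble the same payload shape as the curl example. The function names and the hours parameter are illustrative assumptions, not part of the API.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_ms(moment):
    """Convert a timezone-aware datetime to integer epoch milliseconds."""
    return (moment - EPOCH) // timedelta(milliseconds=1)


def build_historical_job(rule_id, index, end, hours=1):
    """Build a job payload covering the `hours` leading up to `end`."""
    start = end - timedelta(hours=hours)
    return {
        "data": {
            "type": "historicalDetectionsJobCreate",
            "attributes": {
                "fromRule": {
                    "from": to_epoch_ms(start),
                    "to": to_epoch_ms(end),
                    "index": index,
                    "id": rule_id,
                    "notifications": [],
                }
            },
        }
    }


# Example: back test the last hour of logs for the rule used above.
job = build_historical_job("nr5-nrp-9dm", "github-audit-logs", datetime.now(timezone.utc))
```

Integer division by a one-millisecond timedelta avoids the rounding surprises that can come from multiplying a floating-point timestamp by 1000.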

Convert detection rules to Terraform

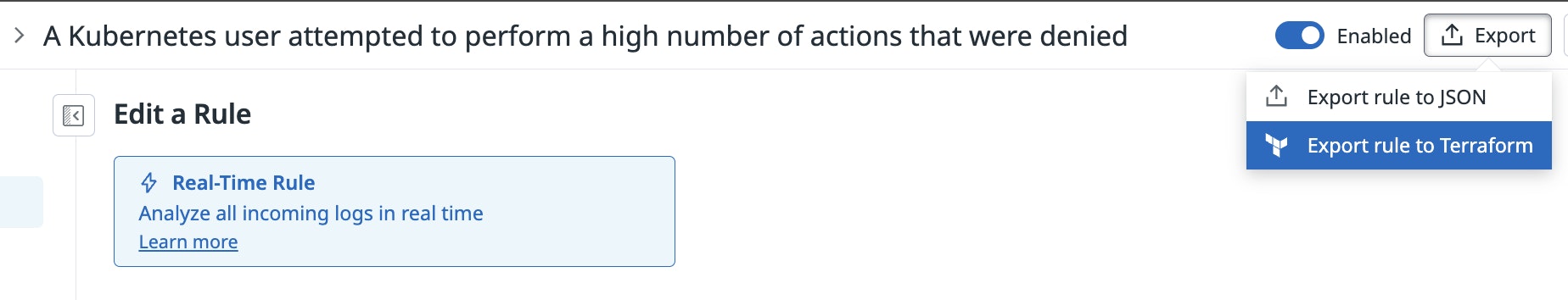

Datadog detection rules are defined in JSON, but managing them as Terraform code makes it easier to standardize deployments and track changes. You can easily export a rule to Terraform from the Datadog UI.

Alternatively, the convert-a-rule-from-json-to-terraform API transforms a JSON rule into Terraform syntax. In the following example, we’re converting our Snowflake external access detection rule (after having corrected the forgetAfter value).

    curl https://api.datadoghq.com/api/v2/security_monitoring/rules/convert \
      -H "DD-API-KEY: $DD_API_KEY" \
      -H "DD-APPLICATION-KEY: $DD_APP_KEY" \
      -H "Content-Type: application/json" \
      --data '{
        "name": "Snowflake external access occurred",
        "isEnabled": true,
        "queries": [
          {
            "query": "source:snowflake snowflake.table:external_access",
            "groupByFields": [ "@network.client.ip" ],
            "hasOptionalGroupByFields": false,
            "distinctFields": [],
            "metric": "@network.client.ip",
            "metrics": [ "@network.client.ip" ],
            "aggregation": "new_value",
            "name": "",
            "dataSource": "logs"
          }
        ],
        "options": {
          "evaluationWindow": 0,
          "detectionMethod": "new_value",
          "maxSignalDuration": 86400,
          "keepAlive": 3600,
          "newValueOptions": {
            "forgetAfter": 28,
            "learningDuration": 7,
            "learningThreshold": 0,
            "learningMethod": "duration"
          },
          "decreaseCriticalityBasedOnEnv": true
        },
        "cases": [ { "name": "", "status": "medium", "notifications": [] } ],
        "message": "## Goal\n\nDetect when an external access event occurs in your Snowflake environment.\n\n## Strategy\n\nThis rule allows you to detect when a new external access event occurs in Snowflake. Review any suspicious entries of external access performed by procedure or user-defined function (UDF) handler code within the last 365 days through the External Access History table. Unexpected use of external access for your environment is a potential indicator of compromise.\n\n## Triage and response\n\n1. Inspect the logs to identify the source cloud, source region, target cloud, target region, and query ID.\n2. Investigate whether the source and target cloud locations are expected.\n3. Using the query ID, correlate the behavior with Query History logs to determine the user, query, and other useful information.\n4. If there are signs of compromise, disable the user associated with the external access integration and rotate credentials.\n",
        "tags": [
          "scope:snowflake",
          "technique:T1199-trusted-relationship",
          "mitre_platform:saas",
          "source:snowflake",
          "team:cloud-siem",
          "security:attack",
          "tactic:TA0001-initial-access",
          "mitre_platform:cloud"
        ],
        "hasExtendedTitle": true,
        "type": "log_detection",
        "filters": []
      }' | jq -r '.terraformContent'

    resource "datadog_security_monitoring_rule" "snowflake_external_access_occurred" {
      name    = "Snowflake external access occurred"
      enabled = true

      query {
        query           = "source:snowflake snowflake.table:external_access"
        group_by_fields = ["@network.client.ip"]
        distinct_fields = []
        metric          = "@network.client.ip"
        metrics         = ["@network.client.ip"]
        aggregation     = "new_value"
        name            = ""
        data_source     = "logs"
      }

      options {
        keep_alive          = 3600
        max_signal_duration = 86400
        detection_method    = "new_value"
        evaluation_window   = 0

        new_value_options {
          forget_after       = 28
          learning_duration  = 7
          learning_threshold = 0
          learning_method    = "duration"
        }

        decrease_criticality_based_on_env = true
      }

      case {
        name          = ""
        status        = "medium"
        notifications = []
      }

      message = "## Goal\\n\\nDetect when an external access event occurs in your Snowflake environment.\\n\\n## Strategy\\n\\nThis rule allows you to detect when a new external access event occurs in Snowflake. Review any suspicious entries of external access performed by procedure or user-defined function (UDF) handler code within the last 365 days through the External Access History table. Unexpected use of external access for your environment is a potential indicator of compromise.\\n\\n## Triage and response\\n\\n1. Inspect the logs to identify the source cloud, source region, target cloud, target region, and query ID.\\n2. Investigate whether the source and target cloud locations are expected.\\n3. Using the query ID, correlate the behavior with Query History logs to determine the user, query, and other useful information.\\n4. If there are signs of compromise, disable the user associated with the external access integration and rotate credentials.\\n"

      tags = ["scope:snowflake","technique:T1199-trusted-relationship","mitre_platform:saas","source:snowflake","team:cloud-siem","security:attack","tactic:TA0001-initial-access","mitre_platform:cloud"]

      has_extended_title = true
      type               = "log_detection"
    }

Storing detection rules as code enables you to integrate with source control, review changes via pull requests, and deploy consistently across environments.
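Once you have the terraformContent string, you will probably want to commit it to a repository. The sketch below writes the converted rule to a .tf file. The file-naming scheme is our own assumption: it mirrors the resource label in the example output (the rule name lowercased, with spaces replaced by underscores), but the API does not prescribe any file layout.

```python
import re
from pathlib import Path


def resource_label(rule_name):
    """Lowercase the name and collapse runs of other characters into underscores."""
    return re.sub(r"[^a-z0-9]+", "_", rule_name.lower()).strip("_")


def write_terraform(rule_name, terraform_content, directory):
    """Write converted Terraform to <directory>/<label>.tf and return the path."""
    target = Path(directory) / f"{resource_label(rule_name)}.tf"
    target.parent.mkdir(parents=True, exist_ok=True)
    # Normalize to exactly one trailing newline so diffs stay clean.
    target.write_text(terraform_content.rstrip("\n") + "\n", encoding="utf-8")
    return target
```

For example, `write_terraform("Snowflake external access occurred", content, "rules")` would create `rules/snowflake_external_access_occurred.tf`, ready to be reviewed in a pull request.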

Deploy rules via API or Terraform

After you validate and test your rules, you can deploy them in two ways: direct API submission or Terraform.

Use the create-a-detection-rule API for dynamic deployments, or manage rules as infrastructure as code with the datadog_security_monitoring_rule Terraform resource.

Both methods support automation and can be integrated into CI/CD pipelines, ensuring fast, repeatable deployments with minimal manual steps.
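For the API path, a deployment script might look like the following sketch. It assumes the create endpoint is a POST to /api/v2/security_monitoring/rules with the same headers as the earlier examples. The enabled override is an illustrative convenience (for instance, to ship a rule disabled to a staging organization), not a feature of the API itself.

```python
import json
import urllib.request

CREATE_URL = "https://api.datadoghq.com/api/v2/security_monitoring/rules"


def build_create_request(rule, api_key, app_key, enabled=None):
    """Build the POST request that creates a rule, optionally overriding isEnabled."""
    payload = dict(rule)  # shallow copy so the caller's rule is not modified
    if enabled is not None:
        payload["isEnabled"] = enabled
    return urllib.request.Request(
        CREATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_rule(rule, api_key, app_key, enabled=None):
    """Send the request and return the parsed JSON response body."""
    request = build_create_request(rule, api_key, app_key, enabled)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from sending keeps the payload logic easy to test without network access.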

Track and manage rule changes with versioning

As detection rules evolve, it’s critical to maintain visibility into how and when they change. Datadog’s rule versioning API lets you audit the history of a rule, including its updates and authors.

    curl https://app.datadoghq.com/api/v2/security_monitoring/rules/el5-khj-p4i/version_history\?page%5Bsize%5D\=10\&page%5Bnumber%5D\=0 \
      -H "DD-API-KEY: $DD_API_KEY" \
      -H "DD-APPLICATION-KEY: $DD_APP_KEY" \
      -H "Content-Type: application/json"

    {
      "data": {
        "id": "el5-khj-p4i",
        "type": "GetRuleVersionHistoryResponse",
        "attributes": {
          "count": 2,
          "data": {
            "1": {
              "rule": {
                "name": "[CGC] High number of operation on security assets",
                "createdAt": 1745075545230,
                "isDefault": false,
                "isPartner": false,
                "isEnabled": true,
                "isBeta": false,
                "isDeleted": false,
                "isDeprecated": false,
                "queries": [
                  {
                    "query": "@asset.type:security_rule @usr.email:*@datadoghq.com",
                    "groupByFields": [ "@usr.id" ],
                    "hasOptionalGroupByFields": false,
                    "distinctFields": [],
                    "aggregation": "count",
                    "name": "",
                    "dataSource": "audit"
                  }
                ],
                "options": {
                  "evaluationWindow": 900,
                  "detectionMethod": "threshold",
                  "maxSignalDuration": 86400,
                  "keepAlive": 3600
                },
                "cases": [
                  { "name": "Condition 1", "status": "medium", "notifications": [], "condition": "a> 15" },
                  { "name": "Condition 2", "status": "low", "notifications": [], "condition": "a> 10" },
                  { "name": "Condition 3", "status": "info", "notifications": [], "condition": "a> 5" }
                ],
                "message": "High number of operation on security assets",
                "tags": [],
                "hasExtendedTitle": true,
                "type": "log_detection",
                "filters": [],
                "version": 1,
                "id": "el5-khj-p4i",
                "blocking": false,
                "metadata": { "entities": null, "sources": null },
                "creationAuthorId": 3746826,
                "creator": { "handle": "clement.gaboriaucouanau", "name": "Clément Gaboriau Couanau" },
                "updater": { "handle": "", "name": "" }
              },
              "changes": []
            },
            "2": {
              "rule": {
                "name": "[CGC] High number of operation on security assets",
                "createdAt": 1745075545230,
                "isDefault": false,
                "isPartner": false,
                "isEnabled": true,
                "isBeta": false,
                "isDeleted": false,
                "isDeprecated": false,
                "queries": [
                  {
                    "query": "@asset.type:security_rule @usr.email:*@datadoghq.com",
                    "groupByFields": [ "@usr.id" ],
                    "hasOptionalGroupByFields": false,
                    "distinctFields": [],
                    "aggregation": "count",
                    "name": "",
                    "dataSource": "audit"
                  }
                ],
                "options": {
                  "evaluationWindow": 900,
                  "detectionMethod": "threshold",
                  "maxSignalDuration": 86400,
                  "keepAlive": 3600
                },
                "cases": [
                  { "name": "Condition 1", "status": "medium", "notifications": [], "condition": "a> 15" },
                  { "name": "Condition 2", "status": "low", "notifications": [], "condition": "a> 10" },
                  { "name": "Condition 3", "status": "info", "notifications": [], "condition": "a> 5" }
                ],
                "message": "High number of operation on security assets",
                "tags": [ "team:cloud-siem", "source:audit-trail" ],
                "hasExtendedTitle": true,
                "type": "log_detection",
                "filters": [],
                "version": 2,
                "id": "el5-khj-p4i",
                "updatedAt": 1747782170877,
                "blocking": false,
                "metadata": { "entities": null, "sources": null },
                "creationAuthorId": 3746826,
                "updateAuthorId": 3746826,
                "creator": { "handle": "clement.gaboriaucouanau", "name": "Clément Gaboriau Couanau" },
                "updater": { "handle": "clement.gaboriaucouanau", "name": "Clément Gaboriau Couanau" }
              },
              "changes": [
                { "type": "CREATE", "field": "Tags", "change": "source:audit-trail" },
                { "type": "CREATE", "field": "Tags", "change": "team:cloud-siem" }
              ]
            }
          }
        }
      }
    }

Versioning supports compliance, debugging, and collaboration, especially in environments where multiple teams contribute to rule development.
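The full response is verbose, so for audits it can help to condense it into one line per change. The sketch below assumes the response shape shown in the example (versions keyed by number under data.attributes.data, each with a rule and a changes list). The function name and output format are our own.

```python
def summarize_history(response):
    """Return one human-readable line per recorded change, oldest version first."""
    versions = response["data"]["attributes"]["data"]
    lines = []
    for number in sorted(versions, key=int):
        entry = versions[number]
        rule = entry["rule"]
        # Prefer the updater; the first version only has a creator.
        author = rule.get("updater", {}).get("handle") or rule.get("creator", {}).get("handle", "unknown")
        changes = entry.get("changes", [])
        if not changes:
            lines.append(f"v{number} by {author}: no recorded changes")
        for change in changes:
            lines.append(f"v{number} by {author}: {change['type']} {change['field']} {change['change']}")
    return lines


# Trimmed version of the response above, keeping only the fields the summary uses.
history = {"data": {"attributes": {"count": 2, "data": {
    "1": {"rule": {"creator": {"handle": "clement.gaboriaucouanau"},
                   "updater": {"handle": ""}},
          "changes": []},
    "2": {"rule": {"creator": {"handle": "clement.gaboriaucouanau"},
                   "updater": {"handle": "clement.gaboriaucouanau"}},
          "changes": [{"type": "CREATE", "field": "Tags", "change": "source:audit-trail"},
                      {"type": "CREATE", "field": "Tags", "change": "team:cloud-siem"}]},
}}}}

for line in summarize_history(history):
    print(line)
```

Sorting the keys numerically matters because the version numbers arrive as strings, and a plain string sort would place "10" before "2".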

Manage detection at scale with CI/CD

Scaling detection across environments requires consistent processes and automated tooling. Datadog enables this with robust API support, a Terraform integration, and compatibility with CI/CD systems.

This approach allows large teams to operate efficiently and with confidence, so that security coverage keeps pace with evolving infrastructure.
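As one possible shape for a pipeline gate, the sketch below checks every rule file in a repository directory and returns a non-zero exit code if any rule fails. The directory layout (one JSON file per rule) and the injected validate callable are assumptions for illustration. In practice, validate could wrap a call to the rule validation API.

```python
import json
import sys
from pathlib import Path


def check_rules(rules_dir, validate):
    """Validate every *.json rule under rules_dir; return {path: problems} for failures."""
    failures = {}
    for path in sorted(Path(rules_dir).rglob("*.json")):
        try:
            rule = json.loads(path.read_text(encoding="utf-8"))
        except json.JSONDecodeError as err:
            failures[str(path)] = [f"invalid JSON: {err}"]
            continue
        problems = validate(rule)
        if problems:
            failures[str(path)] = problems
    return failures


def main(rules_dir, validate):
    """Print each problem to stderr and return a CI-friendly exit code."""
    failures = check_rules(rules_dir, validate)
    for path, problems in failures.items():
        for problem in problems:
            print(f"{path}: {problem}", file=sys.stderr)
    return 1 if failures else 0
```

Passing the validator in as a function keeps the gate independent of any one API client and lets you swap in a local stub during tests.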

Start managing detection as code with Datadog Cloud SIEM

Detection as code helps security teams move faster, reduce errors, and maintain clarity over their detection rules. With Datadog Cloud SIEM, you can build, test, and scale your detection strategy using automation and infrastructure as code.

To learn more, explore our API documentation and Terraform resources.

Ready to get started? Sign up for a 14-day free trial.