Kent Shultz

What is Terraform?

Terraform is an increasingly popular infrastructure-as-code tool for teams that manage cloud environments spanning many service providers. New users are often drawn to Terraform’s ability to quickly provision compute instances and similar resources from infrastructure providers, but Terraform can also manage platform-as-a-service and software-as-a-service resources.

What Datadog calls integrations, Terraform calls providers; Terraform’s Heroku provider, for example, is all the code within Terraform that interacts with Heroku. The Datadog provider enables you to build Terraform configurations to manage your dashboards, monitors, cloud integrations, Synthetic browser or API tests, and more. The provider also offers data sources that retrieve up-to-date information from your Datadog environment, so you don't have to hardcode environment values in your Terraform configurations. For example, you can use the Synthetic locations data source to automatically pull in new testing locations for your Terraform-configured tests as Datadog adds them.
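As a rough sketch of the data source idea, the configuration below reads the available Synthetic locations and exposes their IDs as an output. It assumes the provider's `datadog_synthetics_locations` data source and its `locations` map attribute; the output name `synthetic_location_ids` is hypothetical, and attribute names may differ between provider versions.

```hcl
# Read the Synthetic testing locations currently offered by Datadog.
data "datadog_synthetics_locations" "all" {}

# Hypothetical output name; `locations` is assumed to be a map of
# location ID => display name, so keys() yields the IDs.
output "synthetic_location_ids" {
  value = keys(data.datadog_synthetics_locations.all.locations)
}
```

A test resource could then reference the same expression instead of a hardcoded list of locations.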

In this post, we'll look at:

- how Terraform and the Datadog provider help you automate your environment

- creating new Datadog resources to instantly monitor your systems

Why Terraform?

Maybe you're the type who feels a twinge of guilt when you've spent too much time fumbling around in your favorite cloud provider's web console. You're keen on automating your environment as much as possible, and you're familiar with Datadog's API. Datadog's Terraform provider is built into the Terraform package and aims to offer full feature parity with Datadog's existing API library. With the provider, you can implement monitoring as code, which enables you to instantly set up monitoring for your containers, clusters, instances, and more as you create them.

If you have only a small cloud footprint, rarely provision new services, or you're a one-person team, interacting with our API from ad hoc scripts and shell one-liners may be as much automation as you need. Or, if you're already using the Datadog API alongside those of other cloud providers to develop in-house tooling that fits neatly into your team's workflow, great! We won't try to convince you to ditch your own tooling. But if you're a growing team without a well-established workflow, or your scale has outgrown your existing tooling, Terraform is worth a look.

Its design principles will be familiar to users of configuration management tools like Puppet. You'll model your environment with declarative templates. Repeated application of the templates is idempotent (i.e., a template calling for three DNS records will not, when applied twice, create six records). And of course, though Terraform doesn't require it, you will collaborate with teammates on your templates using a version control system like Git. Once you have adopted it, you should use Terraform—and only Terraform—to manage your entire cloud environment. Any scaling up, down, or out should start with a pull request and team review. No more tribal knowledge or out-of-date runbooks. It's infrastructure as code.

If you haven't already installed Terraform, you can download the Terraform package for your system directly from HashiCorp and follow their instructions to complete the install.

Creating new Datadog resources

Terraform's Datadog provider offers a large variety of resources for implementing monitoring as code for your infrastructure—from creating a new monitor to setting up an AWS integration. We'll walk through a few of their available resources next:

- set up and edit a Datadog monitor

- create a new dashboard

- configure your AWS integration

We'll also show how you can use Terraform to create a resource that automatically spins up an AWS EC2 instance and an associated Datadog monitor with a single command.

Instantly deploy new monitors for your environment

Datadog monitors actively check the status of your environments, including metrics, integration availability, TCP/HTTP endpoints, logs, and more. You can use the datadog_monitor resource to deploy new monitors for your environment. Let's create a simple monitor resource to set an alert condition in Datadog. In any directory, create a file main.tf with the following contents:

    resource "datadog_monitor" "cpumonitor" {
      name    = "cpu monitor"
      type    = "metric alert"
      message = "CPU usage alert"
      query   = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"
    }

The monitor resource requires four fields, as seen above: name, type, message, and query. If you've ever created a monitor via Datadog's API, this won't be news to you. Terraform resource types always follow the pattern "<provider_name>_<resource>". In this case, the type is "datadog_monitor". The resource name is up to us, and we've chosen "cpumonitor". Resource names only serve to uniquely identify the resource within Terraform templates.

Before you apply resources, you will need to add your Datadog API and application keys. You can enter your Datadog credentials via CLI, but obviously you won't want to do this every time you run Terraform. There are two options: set them within a "datadog" provider block, or in shell environment variables. You should never set secrets directly in a provider block, since they would get checked into source control, but there's another way. HashiCorp recommends using a provider block which, for credentials and other secrets, references variables you've added in a separate file, terraform.tfvars, that is excluded from source control. We won't get into using input variables here, so let's just set credentials in the Datadog provider's special environment variables:

    $ export DATADOG_API_KEY="<your_api_key>"
    $ export DATADOG_APP_KEY="<your_app_key>"

See your Datadog account settings if you don't know your API key, and create an application key at the same page if you don't already have one.
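For reference, the variable-based approach mentioned above might look like the following sketch. The variable names `datadog_api_key` and `datadog_app_key` are our own choice, not something the provider mandates. The `required_providers` block and the `sensitive` flag assume a reasonably recent Terraform release (roughly 0.13 and 0.14 onward, respectively).

```hcl
terraform {
  required_providers {
    datadog = {
      source = "DataDog/datadog"
    }
  }
}

# Hypothetical variable names; their values would live in terraform.tfvars,
# which stays out of source control.
variable "datadog_api_key" {
  type      = string
  sensitive = true
}

variable "datadog_app_key" {
  type      = string
  sensitive = true
}

provider "datadog" {
  api_key = var.datadog_api_key
  app_key = var.datadog_app_key
}
```

With this in place, terraform.tfvars would contain two lines of the form `datadog_api_key = "<your_api_key>"` and `datadog_app_key = "<your_app_key>"`.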

Let's apply the template now:

    $ terraform apply
    Terraform will perform the following actions:

      # datadog_monitor.cpumonitor will be created
      + resource "datadog_monitor" "cpumonitor" {
          + evaluation_delay    = (known after apply)
          + id                  = (known after apply)
          + include_tags        = true
          + message             = "CPU usage alert"
          + name                = "cpu monitor"
          + new_host_delay      = 300
          + notify_no_data      = false
          + query               = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"
          + require_full_window = true
          + type                = "metric alert"
        }

    Plan: 1 to add, 0 to change, 0 to destroy.

    …
    datadog_monitor.cpumonitor: Creating...
    datadog_monitor.cpumonitor: Creation complete after 2s [id=23098507]

    Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Success! Terraform created the metric monitor and didn't touch anything else. Every terraform apply compares your template to the state of the same resources, if any, that Terraform knows it has managed previously. Since this is your first apply, Terraform couldn't have modified any of your existing monitors (unless you had first imported them).
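If you do want Terraform to take over a monitor that already exists, one option in newer Terraform releases (1.5 and later, as far as we know) is a declarative `import` block. The sketch below assumes a matching `datadog_monitor.cpumonitor` resource block is already present in the template; the monitor ID is a placeholder you would replace with a real one.

```hcl
# Adopt an existing Datadog monitor into Terraform's state on the next apply.
import {
  to = datadog_monitor.cpumonitor
  id = "<existing_monitor_id>" # placeholder, not a real ID
}
```

After the import, running terraform plan shows any differences between the existing monitor and the template, so you can reconcile them before applying.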

Terraform knows which resources fall within its purview by keeping its own copy of the state of the infrastructure it manages. Notice that your first apply created a new file, terraform.tfstate. Terraform uses this file to know which resources in your templates map to which resources in reality. If you’ll be collaborating with teammates on your templates, you should store state in a remote backend rather than having each collaborator use his or her own local terraform.tfstate.
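A remote backend is configured in a `terraform` block. The sketch below uses the S3 backend as one example; the bucket name and key are hypothetical, and the bucket must already exist and be reachable with your AWS credentials.

```hcl
terraform {
  backend "s3" {
    bucket = "example-terraform-state"   # hypothetical bucket name
    key    = "datadog/terraform.tfstate" # hypothetical path within the bucket
    region = "us-west-2"
  }
}
```

After adding or changing a backend, run terraform init so Terraform can set it up and offer to migrate any existing local state.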

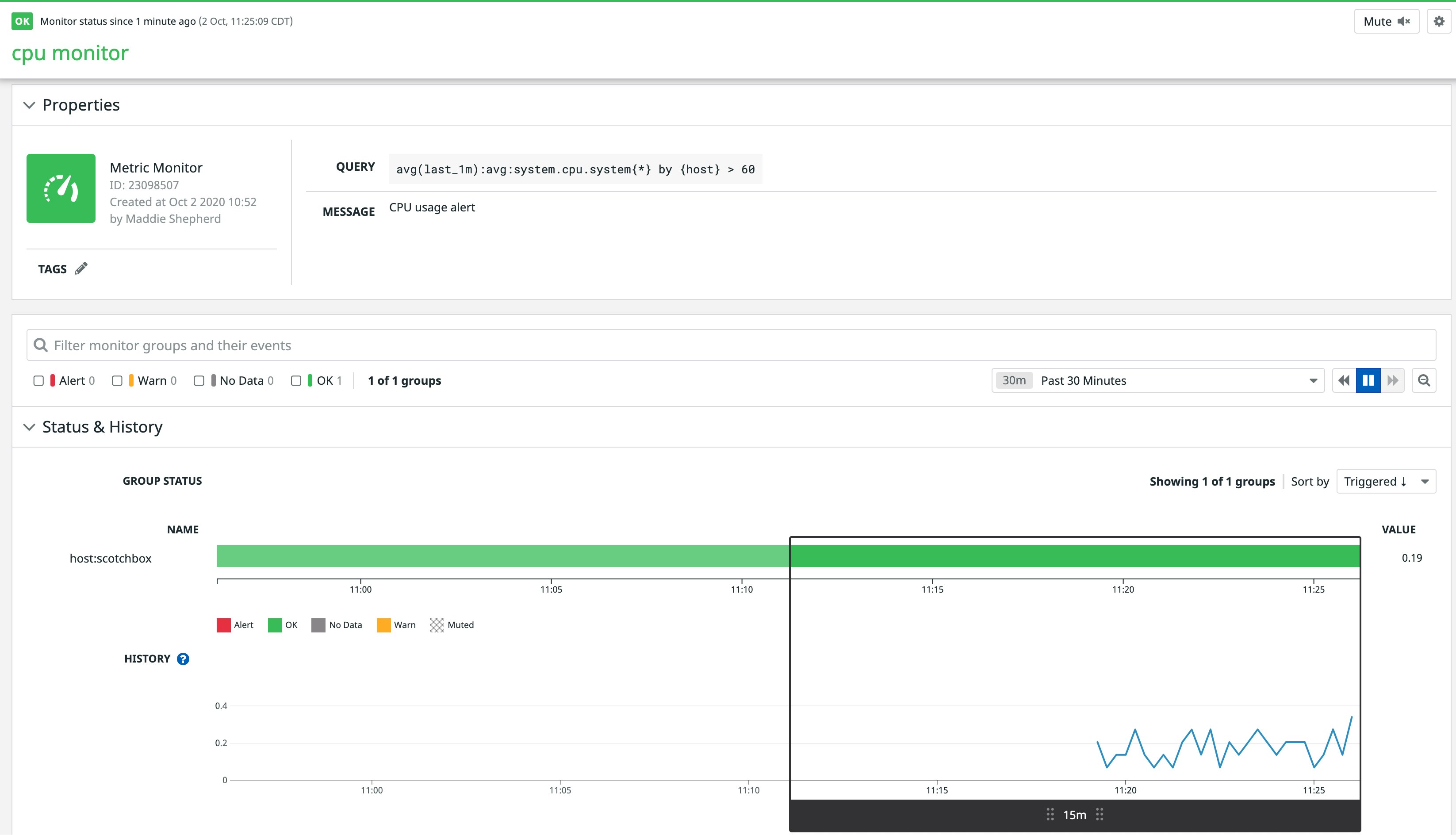

Check out the new monitor in Datadog:

If some of your hosts are busy right now (i.e., hovering above 60 percent system CPU usage), the new monitor may start flapping between OK and ALERT. You won't get spammed with alerts, though, since we didn't add a notification channel to the monitor's message.

As mentioned earlier, Terraform operations are idempotent; if we immediately apply the same template, Terraform ought to read the attributes of the new monitor, see that they still match those specified in the template, and do nothing:

    $ terraform apply
    datadog_monitor.cpumonitor: Refreshing state... [id=23098507]

    Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

Now let's add some optional fields to the monitor to illustrate how Terraform updates resources. It's common to set tiered thresholds on metric monitors, so add some thresholds to the resource in a block-style field:

    resource "datadog_monitor" "cpumonitor" {
      name    = "cpu monitor"
      type    = "metric alert"
      message = "CPU usage alert"
      query   = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"

      thresholds = {
        ok       = 20
        warning  = 50
        critical = 60
      }
    }

This time, let's not be so quick to apply the change. We're about to modify an existing resource, and that can be nerve-racking, especially in a production environment. Fortunately, Terraform allows you to plan changes and review them before making them live:

    $ terraform plan
    ...
    datadog_monitor.cpumonitor: Refreshing state... [id=23098507]

    ------------------------------------------------------------------------

    An execution plan has been generated and is shown below.
    Resource actions are indicated with the following symbols:
      ~ update in-place

    Terraform will perform the following actions:

      # datadog_monitor.cpumonitor will be updated in-place
      ~ resource "datadog_monitor" "cpumonitor" {
            evaluation_delay    = 0
            id                  = "23098507"
            include_tags        = true
            locked              = false
            message             = "CPU usage alert"
            name                = "cpu monitor"
            new_host_delay      = 300
            notify_audit        = false
            notify_no_data      = false
            query               = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"
            renotify_interval   = 0
            require_full_window = true
            silenced            = {}
            tags                = []
            threshold_windows   = {}
          ~ thresholds          = {
              + "critical" = "60"
              + "ok"       = "20"
              + "warning"  = "50"
            }
            timeout_h           = 0
            type                = "metric alert"
        }

    Plan: 0 to add, 1 to change, 0 to destroy.

    ------------------------------------------------------------------------

Near the end of the plan output, we're shown how Terraform will change the monitor if we run terraform apply. It looks how we expect, so go ahead and apply the template to add the thresholds. You can check out Terraform's documentation to learn more about the plan command and its usage.
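Note that the threshold syntax depends on your provider version. Newer releases of the Datadog provider, as far as we know, express thresholds with a nested `monitor_thresholds` block rather than a `thresholds` map. A sketch of the same monitor under that assumption:

```hcl
resource "datadog_monitor" "cpumonitor" {
  name    = "cpu monitor"
  type    = "metric alert"
  message = "CPU usage alert"
  query   = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"

  # Assumed block name for newer provider versions; check the provider
  # documentation for the version you have installed.
  monitor_thresholds {
    ok       = 20
    warning  = 50
    critical = 60 # should match the threshold in the query
  }
}
```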

You'll see the new thresholds in the monitor's page in Datadog, of course, but let's inspect the monitor with Terraform using the show command:

    $ terraform show
    # datadog_monitor.cpumonitor:
    resource "datadog_monitor" "cpumonitor" {
        evaluation_delay    = 0
        id                  = "23098507"
        include_tags        = true
        locked              = false
        message             = "CPU usage alert"
        name                = "cpu monitor"
        new_host_delay      = 300
        notify_audit        = false
        notify_no_data      = false
        query               = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"
        renotify_interval   = 0
        require_full_window = true
        silenced            = {}
        tags                = []
        threshold_windows   = {}
        thresholds          = {
            "critical" = "60"
            "ok"       = "20"
            "warning"  = "50"
        }
        timeout_h           = 0
        type                = "metric alert"
    }

Here we see a full list of the monitor's attributes, including those we didn't set. They've been set to default values, but note that these defaults were applied by the Terraform provider, not by the Datadog API. For any optional fields missing from a resource declaration, Terraform inserts those fields, using its own defaults, into its request to the Datadog API, so be mindful that Terraform's defaults may occasionally differ from the cloud providers' defaults.
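One way to avoid surprises from provider defaults is to spell out the optional fields you care about. The sketch below pins three of the attributes from the output above to the values shown there; which fields are worth pinning is a judgment call, and the available fields vary by provider version.

```hcl
resource "datadog_monitor" "cpumonitor" {
  name    = "cpu monitor"
  type    = "metric alert"
  message = "CPU usage alert"
  query   = "avg(last_1m):avg:system.cpu.system{*} by {host} > 60"

  # Explicit values, so a change in provider defaults shows up in a plan
  # rather than silently altering the monitor.
  include_tags        = true
  notify_no_data      = false
  require_full_window = true
}
```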

Before moving on to a more interesting example, let's finish our tour of your first Terraform Datadog resource with the last CRUD operation, delete:

    $ terraform destroy
    datadog_monitor.cpumonitor: Refreshing state... [id=23098507]

    ...

    Plan: 0 to add, 0 to change, 1 to destroy.

    Do you really want to destroy all resources?
      Terraform will destroy all your managed infrastructure, as shown above.
      There is no undo. Only 'yes' will be accepted to confirm.

      Enter a value: yes

    datadog_monitor.cpumonitor: Destroying... [id=23098507]
    datadog_monitor.cpumonitor: Destruction complete after 1s

    Destroy complete! Resources: 1 destroyed.

Any time you're squeamish about destroying resources, first run terraform plan -destroy to confirm which resources will be destroyed.

In the next few sections, we'll briefly show a few more examples of the types of resources you can use in your environments.

Create a dashboard for your newly deployed infrastructure

Dashboards are another important piece of implementing monitoring as code, as they provide a real-time view into the state of your environments. They also enable you to visualize metrics used in your existing monitors and correlate that data with other key system metrics.

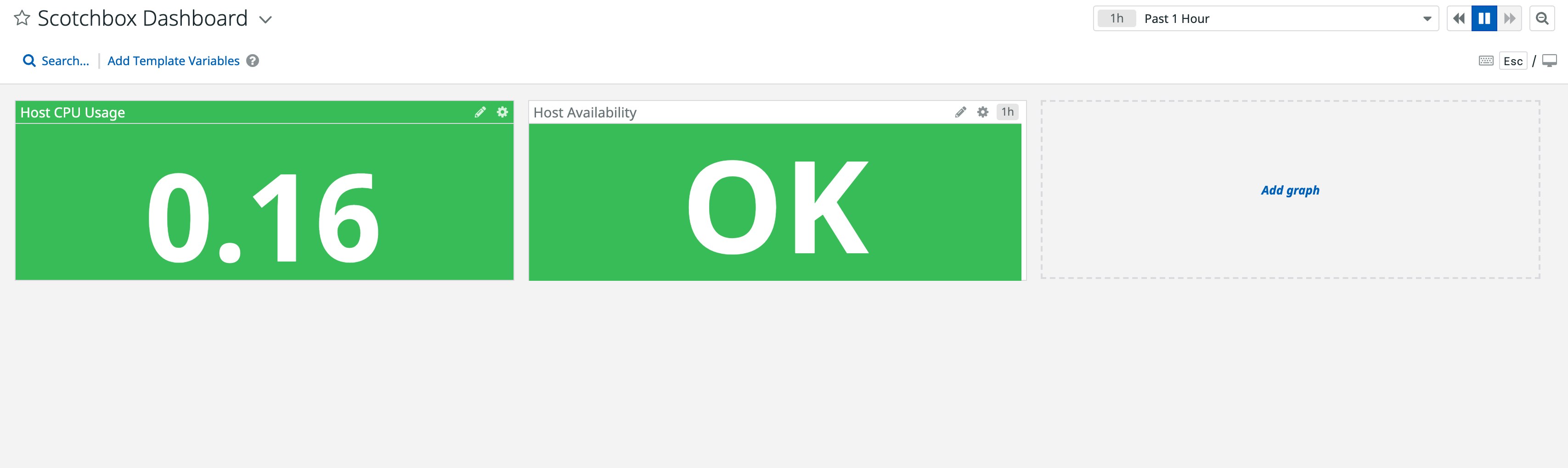

Let's create a simple dashboard with two widgets: alert value and check status. The alert value widget displays the current value of a metric used in any monitor, such as the one we created earlier. The check status widget shows host availability.

    resource "datadog_dashboard" "scotchbox_dashboard" {
      title        = "Scotchbox Dashboard"
      description  = "Created using the Datadog provider in Terraform"
      layout_type  = "ordered"
      is_read_only = true

      widget {
        alert_value_definition {
          alert_id   = "23100499" # the ID of our recently created monitor
          precision  = 3
          unit       = "b"
          text_align = "center"
          title      = "Host CPU Usage"
        }
      }

      widget {
        check_status_definition {
          check    = "datadog.agent.up"
          grouping = "check"
          group    = "host:scotchbox"
          title    = "Host Availability"
          time = {
            live_span = "1h"
          }
        }
      }
    }

Run terraform apply again to create the new dashboard, which you will see in the dashboard list of your Datadog account.

Check out Terraform's documentation for more examples of the different types of widgets you can add to your dashboard. You can also check out Datadog's API documentation for more details about the available fields that you can incorporate in your Terraform resource.
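If the monitor and the dashboard live in the same template, you can reference the monitor's ID instead of hardcoding it. The sketch below is a variation of the alert value widget from the dashboard above; it assumes a `datadog_monitor.cpumonitor` resource is declared in the same configuration, and the resource name `cpu_dashboard` is hypothetical.

```hcl
resource "datadog_dashboard" "cpu_dashboard" {
  title       = "CPU Dashboard"
  description = "Created using the Datadog provider in Terraform"
  layout_type = "ordered"

  widget {
    alert_value_definition {
      # Resolved after the monitor is created, so the dashboard always
      # points at the monitor that Terraform manages.
      alert_id = datadog_monitor.cpumonitor.id
      title    = "Host CPU Usage"
    }
  }
}
```

Because of the reference, Terraform creates the monitor first and the dashboard second.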

Set up your AWS integration

If you use a cloud provider like AWS, you can create a resource that automatically configures Datadog's built-in AWS integration. This enables you to start collecting key metrics, such as those emitted by your Amazon EC2 instances.

To set up the integration, add the following resource to your main.tf configuration file:

    resource "datadog_integration_aws" "sandbox" {
      account_id  = "1234567890" # your Amazon account ID
      role_name   = "DatadogAWSIntegrationRole"
      filter_tags = ["key:value"]
      host_tags   = ["key:value", "key2:value2"]
      account_specific_namespace_rules = {
        auto_scaling = false
        opsworks     = false
      }
      excluded_regions = ["us-east-1", "us-west-2"]
    }

You can also use the provider to manage the Datadog forwarder, which enables you to easily capture AWS service logs via a Lambda function.

Once you set up the integration and forwarder, AWS metrics and logs will start flowing into Datadog.
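As a hedged sketch of the forwarder setup, the resources below register an existing forwarder Lambda with the integration and enable log collection for one service. They assume the provider's `datadog_integration_aws_lambda_arn` and `datadog_integration_aws_log_collection` resources, which may be deprecated or renamed in newer provider versions. The Lambda ARN is a placeholder, and the forwarder function itself must be deployed separately.

```hcl
# Tell Datadog which Lambda function forwards logs for this account.
resource "datadog_integration_aws_lambda_arn" "forwarder" {
  account_id = "1234567890"            # same placeholder account ID as above
  lambda_arn = "<your_forwarder_lambda_arn>" # placeholder, not a real ARN
}

# Enable automatic log collection for selected AWS services.
resource "datadog_integration_aws_log_collection" "sandbox" {
  account_id = "1234567890"
  services   = ["lambda"]

  # Log collection needs the forwarder to be registered first.
  depends_on = [datadog_integration_aws_lambda_arn.forwarder]
}
```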

Monitor more with your resources

We've looked at some simple examples to get started, but the Datadog provider offers several different types of resources to expand your monitoring capabilities, including:

- creating new Synthetic tests to automatically verify application behavior in new environments

- setting service level objectives for newly deployed applications

- setting up integrations for other cloud providers such as Azure or GCP
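As one example from the list above, a monitor-based service level objective might be sketched as follows. It assumes the provider's `datadog_service_level_objective` resource and a `datadog_monitor.cpumonitor` resource in the same template; the name, targets, and timeframe are illustrative only, and the schema may differ between provider versions.

```hcl
resource "datadog_service_level_objective" "cpu_slo" {
  name        = "CPU monitor SLO" # hypothetical name
  type        = "monitor"
  description = "Share of time the CPU monitor is not alerting"

  # Tie the SLO to the monitor that Terraform manages.
  monitor_ids = [datadog_monitor.cpumonitor.id]

  thresholds {
    timeframe = "7d"
    target    = 99.9
    warning   = 99.95
  }
}
```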

You can link any of the available Datadog resources to other Terraform providers, such as AWS, and easily create dashboards, monitors, and more that are tailored to your complex environments. We'll show you how next.

Linking Datadog resources to other providers

Terraform’s value becomes especially obvious when you start to snap resources together, particularly those from different providers. You could have easily created the monitor in the previous section with the Datadog API, and you could further recruit the AWS CLI to create a new instance, but with Terraform, you can get it all done with one power tool.

Let’s create a datadog_monitor alongside one of the most time-tested and popular resource types, aws_instance. If you have an AWS account and don't mind potentially spending a few cents, feel free to follow along. Otherwise, the example is still illuminating to read.

Wipe out the template from the previous section and start a new main.tf:

    provider "aws" {
      region = "us-west-2" # or your favorite region
    }

    resource "aws_instance" "base" {
      ami           = "ami-ff7cade9" # choose your own AMI; this one isn't real
      instance_type = "t2.micro"
    }

    resource "datadog_monitor" "cpumonitor" {
      name           = "cpu monitor ${aws_instance.base.id}"
      type           = "metric alert"
      message        = "CPU usage alert"
      query          = "avg(last_1m):avg:system.cpu.system{host:${aws_instance.base.id}} by {host} > 10"
      new_host_delay = 30 # just so we can generate an alert quickly
    }

Let's unpack this block by block.

This time we are using a provider block, but to configure a region, not account credentials. You can provide AWS credentials in this block, too, or in environment variables as we did earlier for the Datadog provider. Terraform will examine the provider block and environment variables to find everything it needs to manage AWS resources. See the AWS provider reference to find the field names for credentials.

Our aws_instance resource minimally provides an AMI ID and an instance size. Pick any AMI that’s available in your AWS account in us-west-2, preferably one that has the Datadog Agent pre-baked in. If you don’t have such an AMI handy, no problem, but if you’d like to actually test alerting for this instance later on, you’ll need to configure SSH access to it so you can install the Agent. See the aws_instance reference for a full list of optional fields, including those related to SSH access (security_groups and key_name).
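If you need SSH access to install the Agent, a version of the instance with a key pair and a security group might look like the sketch below. The key pair name and the CIDR range are hypothetical. We use `vpc_security_group_ids` here, which is the usual choice for instances in a VPC; `security_groups` takes group names instead.

```hcl
resource "aws_security_group" "ssh" {
  name = "allow-ssh" # hypothetical name

  # Inbound SSH from a trusted range only (example range, replace with yours).
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["203.0.113.0/24"]
  }

  # Outbound access so the instance can download the Agent and report metrics.
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_instance" "base" {
  ami                    = "ami-ff7cade9" # choose your own AMI; this one isn't real
  instance_type          = "t2.micro"
  key_name               = "my-key-pair" # hypothetical, must already exist in AWS
  vpc_security_group_ids = [aws_security_group.ssh.id]
}
```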

Finally, although the datadog_monitor above resembles the one from earlier, there's a crucial difference here: it references an attribute from the aws_instance. This is a powerful feature in Terraform. The attribute aws_instance.base.id is called a computed attribute because AWS chose it for us. It's an output of the instance resource, not an input, but once it's known (i.e., once it's been "computed") it can become an input for any other resource. Since the datadog_monitor references it, Terraform cannot create the monitor until the instance is done provisioning and its ID is known. Terraform implicitly orders the creation of all resources in your templates based on these resource-to-resource references (though you can also make resource ordering explicit by using the depends_on metaparameter with any resource). Resources that don't depend on one another will be provisioned concurrently, each in its own goroutine.
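Explicit ordering with depends_on might look like the following sketch. This hypothetical monitor does not reference any attribute of the instance, so without depends_on Terraform would be free to create it concurrently with the instance. The monitor name, metric, and threshold are illustrative only, and the `aws_instance.base` resource is assumed to be in the same template.

```hcl
resource "datadog_monitor" "loadmonitor" {
  name    = "load monitor" # hypothetical monitor
  type    = "metric alert"
  message = "Load average alert"
  query   = "avg(last_5m):avg:system.load.1{*} > 4"

  # No attribute of the instance is used above, so the ordering is
  # stated explicitly: create the instance first, then this monitor.
  depends_on = [aws_instance.base]
}
```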

After you've set your AWS credentials, chosen an AMI, and optionally configured a security group and/or SSH key for the instance, apply the template:

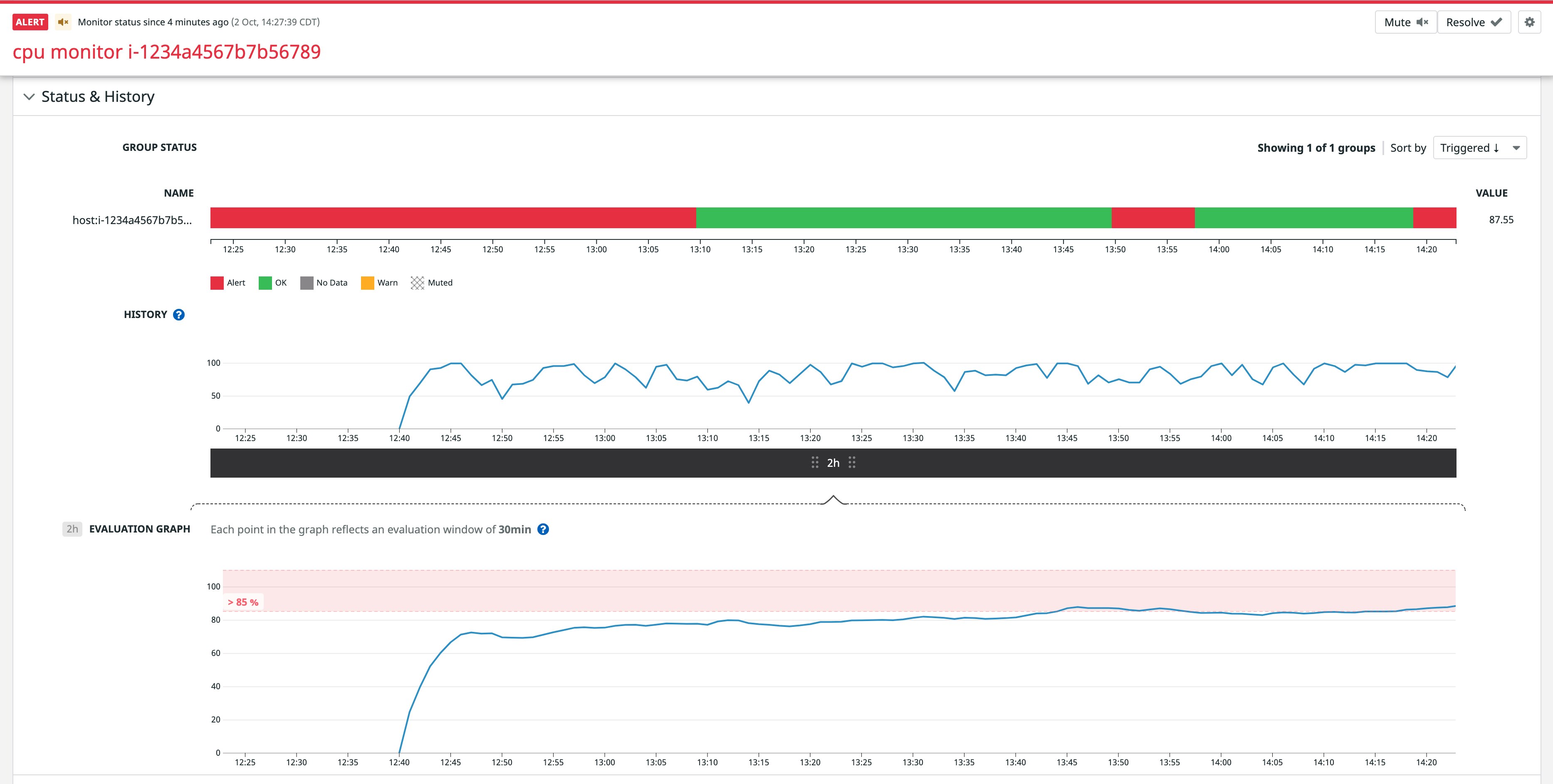

    $ terraform apply
    aws_instance.base: Creating...
      ami:                         "" => "ami-ff7cade9"
      associate_public_ip_address: "" => "<computed>"
      availability_zone:           "" => "<computed>"
      ebs_block_device.#:          "" => "<computed>"
      ephemeral_block_device.#:    "" => "<computed>"
      instance_state:              "" => "<computed>"
      instance_type:               "" => "t2.micro"
      ipv6_addresses.#:            "" => "<computed>"
      key_name:                    "" => "<computed>"
      network_interface_id:        "" => "<computed>"
      placement_group:             "" => "<computed>"
      private_dns:                 "" => "<computed>"
      private_ip:                  "" => "<computed>"
      public_dns:                  "" => "<computed>"
      public_ip:                   "" => "<computed>"
      root_block_device.#:         "" => "<computed>"
      security_groups.#:           "" => "<computed>"
      source_dest_check:           "" => "true"
      subnet_id:                   "" => "<computed>"
      tenancy:                     "" => "<computed>"
      vpc_security_group_ids.#:    "" => "<computed>"
    aws_instance.base: Still creating... (10s elapsed)
    aws_instance.base: Still creating... (20s elapsed)
    aws_instance.base: Creation complete (ID: i-1234a4567b7b56789)
    datadog_monitor.cpumonitor: Creating...
      include_tags:        "" => "true"
      message:             "" => "CPU usage alert"
      name:                "" => "cpu monitor i-1234a4567b7b56789"
      new_host_delay:      "" => "30"
      notify_no_data:      "" => "false"
      query:               "" => "avg(last_1m):avg:system.cpu.system{host:i-1234a4567b7b56789} by {host} > 10"
      require_full_window: "" => "true"
      type:                "" => "metric alert"
    datadog_monitor.cpumonitor: Creation complete (ID: 1853221)

    Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

Observe the instance's ID within the name and query fields of the monitor. As intended, the monitor will only apply to this one host.

Before testing the new monitor, append an email address (e.g., "@you@example.com") or other notification channel to the monitor's message field and apply again:

    $ terraform apply
    aws_instance.base: Refreshing state... (ID: i-1234a4567b7b56789)
    datadog_monitor.cpumonitor: Refreshing state... (ID: 1853221)
    datadog_monitor.cpumonitor: Modifying... (ID: 1853221)
      message: "CPU usage alert" => "CPU usage alert @you@example.com"
    datadog_monitor.cpumonitor: Modifications complete (ID: 1853221)

    Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Finally, log in to the instance and run any command that will push CPU usage above 10 percent (e.g., while true; do lsof; done). Brace yourself for an alert!
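For reference, the monitor resource after the message change would look roughly like this in main.tf. The email address is the same example placeholder used above, and the `aws_instance.base` resource is assumed to be unchanged in the same template.

```hcl
resource "datadog_monitor" "cpumonitor" {
  name = "cpu monitor ${aws_instance.base.id}"
  type = "metric alert"

  # The @-mention at the end is the notification channel.
  message = "CPU usage alert @you@example.com"

  query          = "avg(last_1m):avg:system.cpu.system{host:${aws_instance.base.id}} by {host} > 10"
  new_host_delay = 30 # just so we can generate an alert quickly
}
```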

Don't forget to clean up with a terraform destroy when you're finished.

Deploy Datadog with Terraform today

The previous examples, though simple, should give you an idea of what's possible with Terraform. It's capable of managing extremely large and complex infrastructure; it doesn't matter how many instances, resource types, providers, or regions make up your environment. Datadog is an important piece of your environment, and now you can manage it alongside the rest of your cloud services with this increasingly adopted and thoughtfully designed DevOps tool.

The Datadog provider (and Terraform in general) is under very active development and already supports several Datadog API endpoints. If you’re a Gopher, we encourage you to contribute!

If you’re already a Datadog customer, you can start managing your alerts, timeboards, and more with Terraform today. Otherwise, you’re welcome to sign up for a free trial of Datadog.

Happy Terraforming!

Acknowledgments

Many thanks to Otto Jongerius for contributing the Datadog provider to Terraform, and to Seth Vargo from HashiCorp for providing feedback on a draft of this article.