Candace Shamieh

Maxime Visonneau

Anton Ippolitov

Operating Cilium at a small scale is straightforward. You install the Helm chart, choose a routing mode, and apply a few network policies. Day 1 is about getting packets to flow. Day 2 is about keeping them boring.

At Datadog, we run Cilium across hundreds of Kubernetes clusters, tens of thousands of nodes, and hundreds of thousands of pods in multiple clouds. When operating at this scale, small configuration choices stop being minor details and start becoming risk multipliers. A single misconfigured feature flag can show up as pod start delays, throttled cloud APIs, or subtle datapath drops that are extremely difficult to reproduce.

In this post, we’ll share what mattered most for reliability at our scale. We’ll discuss the defaults we kept, what we changed, and the guardrails we rely on to keep Cilium predictable in a multi-cluster and multi-cloud environment. We’ll cover:

- IPAM choices that avoid waste without slowing scheduling

- Upgrade gates that catch regressions before they impact users

- Health signals that detect control‑plane limits early

- Datapath tuning that prevents silent drops

Avoid IPAM errors at scale

IP allocation is one of the first places where scale pressure becomes visible. Small inefficiencies compound when they happen in parallel across thousands of nodes, and cloud-provider APIs can introduce bottlenecks that are easy to underestimate. In practice, IP address management (IPAM), routing mode, and identity management all shape how consistently Cilium behaves in production at enterprise scale.

Establish a standard connectivity pattern with native routing

From the start, we adopted a flat, VPC-routable network that uses native routing instead of overlays. This proved to be the right long-term choice for large, multi-cloud clusters for three reasons: less overhead, a simpler datapath, and easier large-scale ingress. Native routing minimizes overhead because overlays require encapsulation, which increases packet size and consumes CPU. Because we run in cloud environments that already provide virtual networking, adding a second virtual layer offered little benefit at significant cost.

Native routing also reduces per-packet work in the datapath and spares us from troubleshooting a second layer of maximum transmission unit (MTU) settings, tunneling, and offload capabilities. Routable pod IPs also simplify large-scale ingress: load balancers can target backends directly instead of fanning out through NodePorts, which removes additional hops and node-local load.

Native routing also works well with cross-cluster and hybrid connectivity. Non-Kubernetes systems can reach pods directly, and clusters in different regions or clouds can communicate over our existing VPN links and VPC/VNet peering without adding an overlay network on top. By using native routing, we designed a standard connectivity pattern that we could implement everywhere, eliminating the need to maintain multiple models.

If you frequently notice MTU-related inconsistencies across layers, excessive overhead from load balancers when routing through NodePorts, or elevated CPU usage from overlay networking, it may be time to consider switching to native routing.

Standardize the operator/agent split

At scale, how you “talk” to the cloud matters just as much as what you configure. For example, on AWS, we rely heavily on the operator/agent split in Cilium’s AWS ENI IPAM mode. The operator is the component that communicates with cloud APIs and manages both Elastic Network Interfaces (ENIs) and prefixes. Agents consume the resulting pod IPs through Kubernetes resources (the CiliumNode custom resource) rather than calling cloud APIs themselves.

Standardizing this behavior enables us to:

- Apply consistent client-side rate limits to all cloud API calls, instead of letting each node compete for quotas.

- Use common pre-allocate, min-allocate, and max-allocate conventions for per-node pools, which keeps behavior uniform across clusters.

- Define logging and metric collection requirements for ENI and IP operations, making it easier to correlate operator behavior with pod scheduling and churn.

While this sounds like plumbing detail, it’s exactly what keeps bursty node churn from turning into a fleet-wide, API-throttling event.
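A centralized client-side limit is usually some form of token bucket shared by every caller, so a burst of node churn drains a bounded budget instead of each node competing for cloud quota. The sketch below is a generic token bucket in Python, not Cilium's implementation; the qps and burst values are hypothetical, and the injectable clock exists only so the behavior can be checked without sleeping.

```python
import time
from typing import Callable


class TokenBucket:
    """Generic token-bucket limiter: holds up to `burst` tokens, refilled at `qps` per second."""

    def __init__(self, qps: float, burst: int, clock: Callable[[], float] = time.monotonic):
        if qps <= 0 or burst < 1:
            raise ValueError("qps must be > 0 and burst must be >= 1")
        self.qps = qps
        self.burst = burst
        self.clock = clock
        self.tokens = float(burst)  # start full so an initial burst is allowed
        self.last = clock()

    def try_acquire(self) -> bool:
        """Return True if a cloud API call may proceed now, False if the caller should back off."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.qps)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One limiter shared by all IPAM calls from a cluster, driven by a fake clock.
fake_now = [0.0]
limiter = TokenBucket(qps=2, burst=3, clock=lambda: fake_now[0])
print([limiter.try_acquire() for _ in range(5)])  # → [True, True, True, False, False]
fake_now[0] += 1.0  # one second later, two tokens have been refilled
print([limiter.try_acquire() for _ in range(3)])  # → [True, True, False]
```

The burst absorbs short spikes while the refill rate caps sustained load, which is the property that keeps a fleet-wide rollout from turning into throttling.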

Tune IPAM for large clusters

With the right routing in place, we can fine-tune IPAM parameters to optimize for large clusters. On AWS and Azure, we use pre-allocate=1 to limit IP waste, especially on high-density nodes. This helps ensure that we don’t hoard unused addresses and keeps per-node pools minimal.

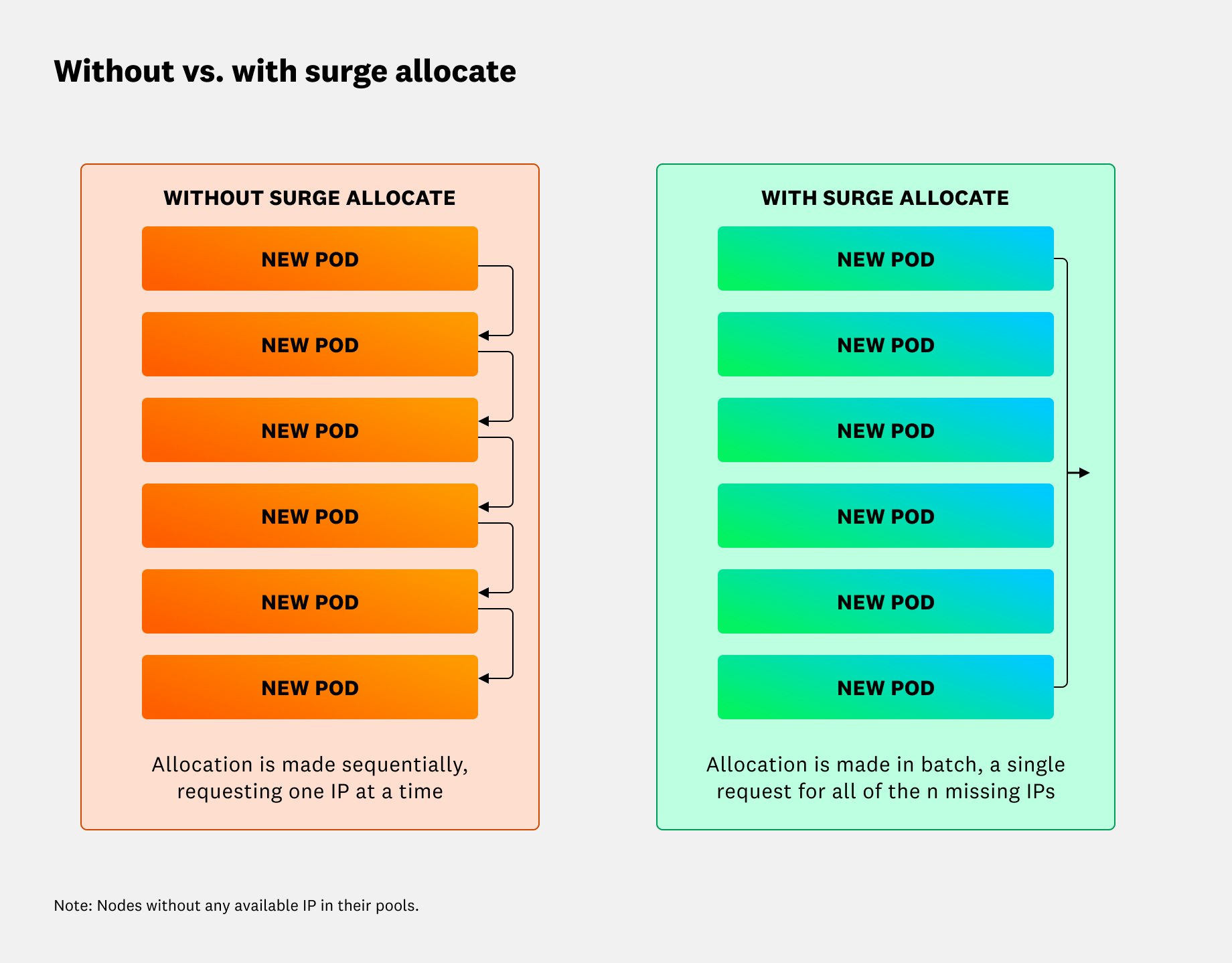

In exchange, we accept that when nodes churn, pods might have to wait while extra IPs are allocated. To address this, we implemented surge allocation, a change that makes the operator aware of the backlog of pending pods. Once the operator is backlog-aware, it allocates IPs for all pending pods in a single pass whenever it detects pressure. Because surge allocation lets us pay the allocation cost once instead of per pod, pod start delays are significantly reduced during large rescheduling events.

The diagram above compares IP allocation for nodes with empty IP pools. Without surge allocation, Cilium allocates IPs in small increments as each new pod arrives. With surge allocation enabled, the operator allocates enough IPs in a single pass to cover all pending pods, reducing repeated allocation cycles and minimizing pod start delays.
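To make the difference concrete, here is a deliberately simplified model of an empty node's pool with pre-allocate=1. It assumes one cloud API round trip per operator pass and that every pod that gets an IP starts immediately; `allocation_cycles` is a hypothetical helper, and this is an illustration of the idea, not the operator's actual algorithm.

```python
def allocation_cycles(pending_pods: int, pre_allocate: int, surge: bool) -> int:
    """Count operator passes needed to start `pending_pods` pods on an empty node (toy model)."""
    if pre_allocate < 1:
        raise ValueError("pre_allocate must be >= 1 for this model to make progress")
    allocated = used = cycles = 0
    while used < pending_pods:
        backlog = pending_pods - used
        # Without surge, each pass only tops up the spare buffer; with surge, it covers the backlog.
        spare = max(pre_allocate, backlog) if surge else pre_allocate
        allocated = max(allocated, used + spare)  # one allocation round trip per pass
        cycles += 1
        used += min(backlog, allocated - used)  # pods that now have an IP start
    return cycles


print(allocation_cycles(20, pre_allocate=1, surge=False))  # → 20
print(allocation_cycles(20, pre_allocate=1, surge=True))   # → 1
```

In this model, a small pre-allocate value keeps idle pools lean, and surge allocation removes the per-pod round trips that a small buffer would otherwise impose during rescheduling.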

If you notice signs of IP pressure even when nodes have available CPU and memory, it might be time to adjust your IPAM parameters. For example, you may benefit from tuning pre-allocate and enabling surge allocation if you experience the following:

- Pods remain in a Pending state due to IP exhaustion

- Spikes in IP allocation latency or ENI operations during node rollouts or autoscaling events

- Pod start latency that correlates strongly with node replacements or disruptive upgrades

Configure AWS IPv4 prefix delegation to avoid rate-limiting constraints

Even with tuned per-node pools, large AWS clusters can hit ENI and IP scaling limits when workloads churn quickly. This is where Day 2 IPAM work shifts from node-level settings to cloud-level behavior.

On AWS, we enable IPv4 prefix delegation so that ENIs receive /28 prefixes instead of individual IPs. Each prefix covers 16 addresses, which increases pod density per ENI while reducing VPC-wide address pressure. The trade-off is that fragmented prefixes or frequent node turnover can strand unused addresses inside partially used prefixes, and aggressive scale-up events can trigger a burst of prefix or ENI operations. This is where cloud API rate limits become a practical constraint.
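The stranded-capacity effect is simple arithmetic: a node that needs one address more than a multiple of 16 pays for a whole extra prefix. The sketch below assumes every node receives whole /28 prefixes and ignores pre-allocation and the node's own primary IPs; the fleet snapshot and `prefix_usage` helper are hypothetical.

```python
import math

PREFIX_LEN = 28
ADDRS_PER_PREFIX = 2 ** (32 - PREFIX_LEN)  # a /28 holds 16 IPv4 addresses


def prefix_usage(pods_per_node: list[int]) -> dict:
    """Prefixes needed and addresses stranded, assuming each node gets whole /28 prefixes."""
    prefixes = sum(math.ceil(pods / ADDRS_PER_PREFIX) for pods in pods_per_node)
    capacity = prefixes * ADDRS_PER_PREFIX
    used = sum(pods_per_node)
    return {
        "prefixes": prefixes,
        "stranded_ips": capacity - used,
        "utilization": used / capacity if capacity else 0.0,
    }


# Hypothetical snapshot after churn: three nodes with uneven pod counts.
print(prefix_usage([16, 17, 1]))  # → {'prefixes': 4, 'stranded_ips': 30, 'utilization': 0.53125}
```

Tracking a number like `stranded_ips` alongside prefix churn is one way to quantify the "distribution of unused IPs per prefix" signal.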

To keep this under control, we monitor:

- Prefix churn and the distribution of unused IPs per prefix

- ENI and prefix API request rates and non-OK responses

- Time from pod scheduling to IP assignment during churn

If any of these trends drift, we adjust pre-allocate, min-allocate, and max-allocate. We also edit our scaling policies so that pod start latency doesn’t start to impact users.

Consider using AWS prefix delegation along with more efficient IPAM tuning if your nodes frequently hit ENI or IP-per-ENI limits. These issues can occur even when your VPC still has address space available because many ENIs carry only a small number of pods. Additionally, if you observe cloud API throttling or elevated error rates during large rollouts, it could indicate that your current IP allocation strategy isn’t scaling effectively.

Keep identities stable

High-cardinality identities can cause large clusters to behave unexpectedly. Every label that participates in identity calculation can increase the number of distinct identities that Cilium needs to track, which drives up memory usage and map pressure on each node. We combat this by:

- Maintaining a compact allowlist of identity-relevant labels, focusing on labels that actually drive policy decisions.

- Excluding high-cardinality prefixes, like pod-specific or deployment-specific labels, from identity calculation.

- Watching identity map size and garbage-collection behavior during churn.

The goal isn’t to minimize the number of identities at all costs, but instead to keep the set of identities bounded, stable, and aligned with how policies are written.
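Pods that share the same set of identity-relevant labels share an identity, so the identity count is the number of distinct filtered label sets. The sketch below models that rule to show why one high-cardinality label multiplies identities; the label keys, the allowlist, and `identity_count` are hypothetical, and this is not Cilium's label-filter syntax.

```python
from typing import Callable, Iterable, Mapping


def identity_count(pods: Iterable[Mapping[str, str]], is_relevant: Callable[[str], bool]) -> int:
    """Distinct identities = distinct sets of identity-relevant labels across pods."""
    return len({
        frozenset((key, value) for key, value in labels.items() if is_relevant(key))
        for labels in pods
    })


# Hypothetical allowlist: only labels that policies actually select on.
ALLOWLIST = {"namespace", "app", "team"}

# 50 pods of one service, each with a unique per-run label (high cardinality).
pods = [
    {"namespace": "web", "app": "frontend", "team": "edge", "run-id": f"run-{i}"}
    for i in range(50)
]

print(identity_count(pods, lambda key: True))              # every label counts → 50
print(identity_count(pods, lambda key: key in ALLOWLIST))  # allowlisted labels only → 1
```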

Elevated agent memory usage on nodes with many workloads, frequent identity garbage-collection cycles accompanied by allocation-pressure warnings, and slowdowns in policy evaluation after introducing new labels or tenants are common indicators of label-related identity churn. These patterns suggest that your labeling strategy may need refinement in order to keep identity allocation and policy behavior predictable at scale.

Maintain consistent MTU baselines

Finally, we size MTUs deliberately. On Day 2, intermittent connectivity issues that only affect some paths or payload sizes can often be traced back to inconsistent MTU settings. We derive the pod MTU from the underlying network MTU, subtracting any encapsulation overhead. If packets fragment or drop, we check that MTU values are consistent along the affected path. MTU mismatches can trigger silent, hard-to-reproduce drops because the failures usually don’t look like a clear denial or error in Cilium.
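The derivation and the consistency check can be written down directly. This sketch assumes an IPv4 underlay with no encryption; the overhead figures are the standard header sizes for VXLAN and option-free Geneve, while the path names and their MTUs (9001 for a jumbo-frame VPC, 1500 for a VPN link) are illustrative, as are both helper functions.

```python
# Per-packet overhead added by common IPv4 encapsulations, in bytes.
ENCAP_OVERHEAD = {
    "native": 0,   # native routing: no encapsulation
    "vxlan": 50,   # outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14)
    "geneve": 50,  # Geneve's base header is also 8 bytes when no options are set
}


def pod_mtu(underlying_mtu: int, mode: str) -> int:
    """Pod MTU = underlying network MTU minus encapsulation overhead."""
    return underlying_mtu - ENCAP_OVERHEAD[mode]


def mtu_mismatches(mtu: int, path_mtus: dict[str, int]) -> list[str]:
    """Paths whose MTU is below the pod MTU; large packets there may be fragmented or dropped."""
    return sorted(name for name, path_mtu in path_mtus.items() if path_mtu < mtu)


mtu = pod_mtu(9001, "native")
print(mtu)                       # → 9001
print(pod_mtu(1500, "vxlan"))    # → 1450
print(mtu_mismatches(mtu, {"same-vpc": 9001, "peered-vpc": 9001, "vpn": 1500}))  # → ['vpn']
```

The VPN result mirrors the symptom pattern described below: same-VPC traffic works, while only cross-link traffic with large payloads fails.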

By standardizing MTU settings per environment in this way, we avoid mismatches in cross-cluster and cross-VPC communication. When we enable optimizations like XDP or kube-proxy replacement later in the process, having a consistent baseline MTU helps prevent confusion.

You may be experiencing MTU issues if you observe intermittent timeouts or connection resets when sending larger payloads, even though smaller requests succeed. You might also see spikes in TCP retransmits or an increase in Fragmentation Needed or Packet Too Big messages along specific network paths. If connectivity problems only appear for cross-region, VPN, or peered-VPC traffic, that is another strong indicator of MTU mismatches.

Upgrade practices that keep deployments safe

Version upgrades can introduce behavioral changes that aren’t immediately obvious without validation. A new field in a NetworkPolicy resource may be syntactically valid, but it can also change how traffic is handled. Features like deny policies, including ingressDeny, can change the effective behavior of existing policies even when validation passes. To avoid unintended behavior when upgrades introduce new features, we treat Cilium upgrades the same way we handle other high-impact changes, meaning they go through validation, gating, and canary rollouts before reaching full production.

Before each upgrade, we run cilium preflight validate-cnp with the in-pod CLI. On newer versions, where the in-pod CLI binary is named cilium-dbg, we run the following:

```shell
kubectl -n kube-system exec deploy/cilium-operator -- \
  cilium-dbg preflight validate-cnp
```

This tool scans all of the CiliumNetworkPolicy and CiliumClusterwideNetworkPolicy resources in a cluster and verifies that they’re valid for the target version. If any are malformed or no longer valid, it exits with a non-zero status so that the upgrade can be blocked. This step has repeatedly caught policies that older versions accepted but newer versions reject or interpret differently. It doesn’t eliminate all upgrade risk, but it does ensure that we don’t start from a broken baseline.

We’ve also learned to pay close attention to warnings. Some preflight checks emit warnings about policies that might behave differently after an upgrade while still reporting “all valid.” We treat those warnings as action items, not as success messages.
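One way to wire the preflight command shown earlier into a pipeline is a small wrapper that blocks on a non-zero exit and surfaces warnings for review even when the exit status is zero. This is a sketch: the three status strings and `preflight_gate` are hypothetical, and matching lines that contain "warn" is an assumption about the output format that you would adjust to what your Cilium version actually prints.

```python
import subprocess
from typing import Callable

# The preflight command used in the upgrade procedure.
PREFLIGHT_CMD = [
    "kubectl", "-n", "kube-system", "exec", "deploy/cilium-operator", "--",
    "cilium-dbg", "preflight", "validate-cnp",
]

Runner = Callable[[list[str]], subprocess.CompletedProcess]


def run_command(cmd: list[str]) -> subprocess.CompletedProcess:
    return subprocess.run(cmd, capture_output=True, text=True)


def preflight_gate(run: Runner = run_command) -> tuple[str, list[str]]:
    """Return ("blocked" | "review" | "pass", warning lines)."""
    result = run(PREFLIGHT_CMD)
    output = (result.stdout or "") + (result.stderr or "")
    # Assumption: warnings are identifiable by a case-insensitive "warn" substring.
    warnings = [line for line in output.splitlines() if "warn" in line.lower()]
    if result.returncode != 0:
        return "blocked", warnings
    if warnings:
        return "review", warnings  # "all valid" plus warnings is an action item, not a success
    return "pass", warnings


# Example with a fake runner instead of a live cluster.
fake = lambda cmd: subprocess.CompletedProcess(cmd, 0, stdout="WARNING: example\nall valid\n", stderr="")
print(preflight_gate(fake))  # → ('review', ['WARNING: example'])
```

Returning a distinct "review" state keeps warnings from being silently treated as a pass.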

If upgrades have altered effective policy behavior in the past, or if policy validation still occurs manually in staging, integrating preflight validation into every upgrade can help reduce risk.

Use connectivity tests as a behavioral gate

Preflight validation ensures our policies are structurally sound, but it doesn’t guarantee that traffic will still flow the way we expect after an upgrade. We run the cilium connectivity test as a Helm post-upgrade hook. This end-to-end test suite exercises pod-to-pod, pod-to-service, pod-to-node, and pod-to-external connectivity and verifies that policies and service load balancing behave as expected in the datapath.

If the connectivity tests fail, the rollout is blocked and the cluster remains on the previous version. This gives us a single, consistent signal of whether a release is safe to promote.

If upgrades lead to changes in connectivity, especially when they only affect specific service topologies or external paths, integrating the cilium connectivity test into the rollout pipeline can help reveal compatibility issues early.

Standardize upgrades across clusters

Because we manage hundreds of clusters, consistency is crucial. We maintain a Helm chart derived from the upstream Cilium chart and keep it closely in sync with upstream releases, while enabling the features we rely on and reviewing new image defaults carefully. This helps us apply Cilium’s upgrade guidance consistently across clusters and avoid surprises when defaults change.

We stagger rollouts across groups of clusters, beginning with the canary clusters that most closely match production characteristics. By pre-pulling images and synchronizing Kubernetes and kernel versions in our canary environments, we reduce environmental differences during each rollout. If a release introduces a regression, this combination of preflight validation, connectivity testing, and staggered rollouts ensures that we can address issues long before they impact the majority of clusters.
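The control flow of a staggered rollout with both gates fits in a few lines. In this sketch, `preflight`, `upgrade`, and `connectivity` are hypothetical callbacks standing in for real tooling. In the setup described here, a failed post-upgrade connectivity hook leaves the cluster on its previous version, so the model simply stops promoting at the first failure.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Gate = Callable[[str], bool]


@dataclass
class RolloutResult:
    upgraded: list[str] = field(default_factory=list)
    halted_at: Optional[str] = None
    reason: Optional[str] = None


def staggered_rollout(waves: list[list[str]], preflight: Gate,
                      upgrade: Callable[[str], None], connectivity: Gate) -> RolloutResult:
    """Upgrade clusters wave by wave (canaries first) and stop at the first failed gate."""
    result = RolloutResult()
    for wave in waves:
        for cluster in wave:
            if not preflight(cluster):  # structural gate before touching the cluster
                result.halted_at, result.reason = cluster, "preflight"
                return result
            upgrade(cluster)
            if not connectivity(cluster):  # behavioral gate after the upgrade
                result.halted_at, result.reason = cluster, "connectivity"
                return result
            result.upgraded.append(cluster)
    return result


waves = [["canary-1"], ["prod-a", "prod-b"], ["prod-c"]]
res = staggered_rollout(waves, preflight=lambda c: True, upgrade=lambda c: None,
                        connectivity=lambda c: c != "prod-b")
print(res)  # → RolloutResult(upgraded=['canary-1', 'prod-a'], halted_at='prod-b', reason='connectivity')
```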

Monitor control plane and datapath signals to catch issues early

Cilium exports rich signals at the control plane and datapath levels. Watching these signals closely, especially around upgrades and periods of high churn, enables us to detect issues before they cause disruptions.

Watch cloud APIs like any other dependency

During node churn, the Cilium operator can generate a burst of ENI- and IP-related API calls. In one incident, calls to the AWS ENI API spiked to nearly 1,000 requests per second and correlated with an increase in non-OK responses. The throttling slowed IP allocation across multiple clusters and resulted in pod scheduling delays.

We now treat cloud APIs as first-class dependencies. We track request rate, error rate, and latency per API operation. We correlate operator logs with cloud API metrics so that throttling patterns are easy to identify.
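Tracking these numbers per operation, rather than as one aggregate, is what makes throttling on a single call type stand out. The sketch below keeps per-operation counts for one observation window and flags operations whose error rate exceeds a threshold. The class, the 5% threshold, and the sample traffic are hypothetical; the operation names are real EC2 API actions used only as labels.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class OpStats:
    requests: int = 0
    errors: int = 0
    latencies: list[float] = field(default_factory=list)


class CloudAPIMetrics:
    """Per-operation request rate, error rate, and worst latency over one window."""

    def __init__(self) -> None:
        self.ops: dict[str, OpStats] = defaultdict(OpStats)

    def record(self, op: str, ok: bool, latency_s: float) -> None:
        stats = self.ops[op]
        stats.requests += 1
        stats.errors += 0 if ok else 1
        stats.latencies.append(latency_s)

    def summary(self, window_s: float) -> dict[str, dict[str, float]]:
        return {
            op: {
                "rps": s.requests / window_s,
                "error_rate": s.errors / s.requests,
                "max_latency_s": max(s.latencies),
            }
            for op, s in self.ops.items()
        }

    def throttling_suspects(self, window_s: float, max_error_rate: float = 0.05) -> list[str]:
        summary = self.summary(window_s)
        return sorted(op for op, m in summary.items() if m["error_rate"] > max_error_rate)


m = CloudAPIMetrics()
for i in range(100):
    m.record("DescribeNetworkInterfaces", ok=i % 10 != 0, latency_s=0.2)  # 10% non-OK
for _ in range(50):
    m.record("AssignPrivateIpAddresses", ok=True, latency_s=0.1)
print(m.throttling_suspects(window_s=10))  # → ['DescribeNetworkInterfaces']
```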

Additionally, we use centralized client-side rate limits to prevent individual clusters from overwhelming shared quotas. When client-side rate limits are bypassed, whether through misconfiguration or a new path, we’ve seen it show up as identity churn and backoff behavior that is very complex to debug without these metrics.

You may need to adjust configuration parameters if you notice spikes in ENI- or IP-related API calls during autoscaling or node upgrades. Throttling errors that coincide with pods getting stuck in Pending due to IP allocation are another strong indicator. If operator logs are showing repeated retries or backoff behavior when interacting with cloud APIs, it likely means that your current settings aren’t keeping up with demand.

Make KVstore allocation mode observable

Some of our largest clusters run in key-value store (KVstore) allocation mode (we use etcd). In those clusters, Cilium relies on the KVstore for distributing identities and other control-plane state, so we treat it as a critical shared dependency and monitor the following metrics:

- Etcd operation latency and error rates

- Etcd queries per second (QPS), especially during upgrades and leader changes

- Cilium identity garbage-collection latency and behavior

At one point, a strict QPS limit introduced during an upgrade increased identity garbage-collection latency enough to cause backpressure. Our collected metrics made the relationship clear and let us relax the limit before it impacted users. We also alert on agent and operator restarts, controller failure counts, and health-check failures from agents to the KVstore.

We’ve also experienced a KVstore availability issue that led to a deadlock, resulting in health-check failures and widespread pod restarts. Having these alerts in place enabled us to detect and remediate the issues quickly.

In clusters that use KVstore allocation mode, symptoms such as delayed identity updates, elevated etcd latency, or periodic agent restarts may indicate that the store—and its QPS limits—should be included in your Cilium-related SLOs.

Tune Hubble for high-traffic clusters

To minimize resource contention and reduce latency, we tuned Hubble for operation in high-traffic clusters. This includes increasing event-queue sizes and buffer limits where necessary, and applying rate limits to flow events so that high-volume traffic cannot starve other signal types. We also monitor and alert on backpressure, queue drops, and repeated processing failures. By managing Hubble’s performance in the same way that we manage any other production-critical component, we maintain full visibility without introducing instability into the observability pipeline itself.
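The two mechanisms named here, a bounded buffer and per-type rate limits, can be modeled together. The sketch below is conceptually similar to a fixed-size ring buffer that overwrites its oldest events, with a hypothetical per-type admission cap per interval layered on top. It is not Hubble's code; the class, event type names, and limits are illustrative.

```python
from collections import Counter, deque


class FlowBuffer:
    """Bounded event buffer with per-type admission limits (a model, not Hubble's code).

    Each event type may admit at most `per_type_limit` events per interval, so one
    noisy type cannot crowd out the others. When the ring is full, the oldest
    event is overwritten and counted as lost.
    """

    def __init__(self, capacity: int, per_type_limit: int) -> None:
        self.ring: deque = deque(maxlen=capacity)
        self.per_type_limit = per_type_limit
        self.admitted: Counter = Counter()
        self.rate_limited: Counter = Counter()
        self.lost = 0

    def push(self, event_type: str, payload: object) -> None:
        if self.admitted[event_type] >= self.per_type_limit:
            self.rate_limited[event_type] += 1
            return
        if len(self.ring) == self.ring.maxlen:
            self.lost += 1  # the deque drops its oldest entry on the append below
        self.ring.append((event_type, payload))
        self.admitted[event_type] += 1

    def next_interval(self) -> None:
        self.admitted.clear()


buf = FlowBuffer(capacity=4, per_type_limit=3)
for i in range(5):
    buf.push("flow", i)
buf.push("agent-event", "restart")
print(len(buf.ring), buf.lost, dict(buf.rate_limited))  # → 4 0 {'flow': 2}
```

Exporting `lost` and `rate_limited` as metrics is what turns backpressure and queue drops into something you can alert on.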

If you notice Hubble queues or buffers filling during peak traffic, or if flow visibility in the UI or CLI becomes delayed or incomplete, you may be approaching the limits of the current configuration. If Cilium agent CPU usage increases during flow capture while overall datapath traffic remains unchanged, Hubble likely requires additional tuning to support your cluster’s throughput.

Configure your datapath to be reliable at scale

Datapath issues can appear as silent drops or rare edge-case bugs. By the time users notice them, the evidence you need to diagnose them is usually gone. We rely heavily on BPF map metrics, kernel-level tools, and conservative sizing to keep datapath behavior stable.

Use map pressure as an early warning signal

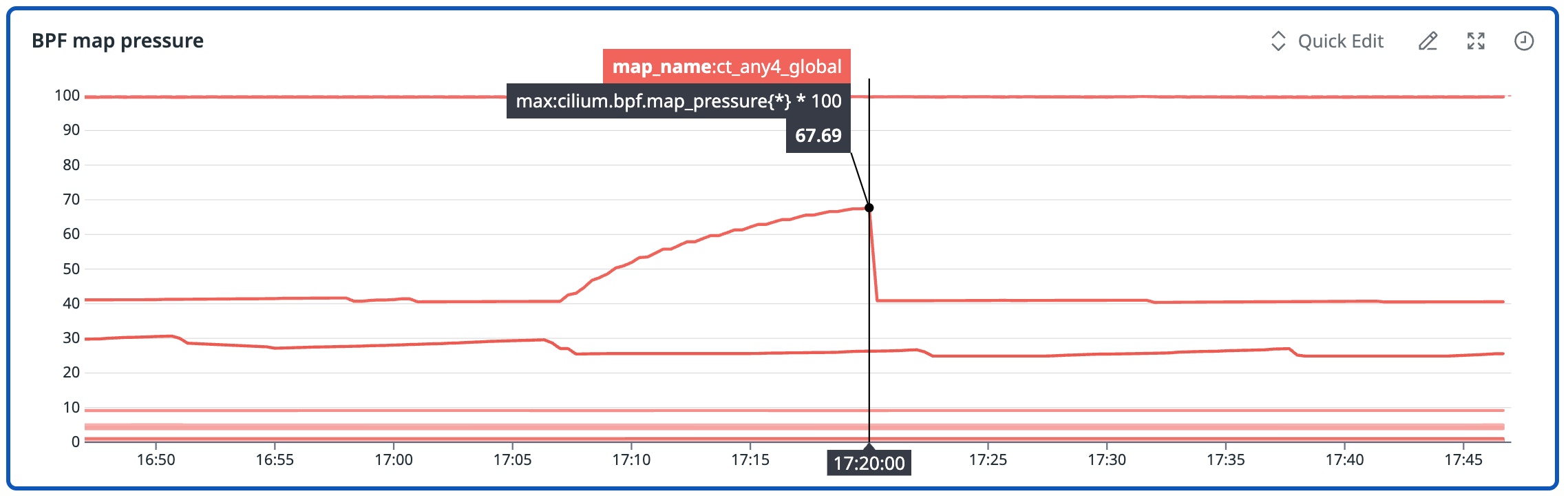

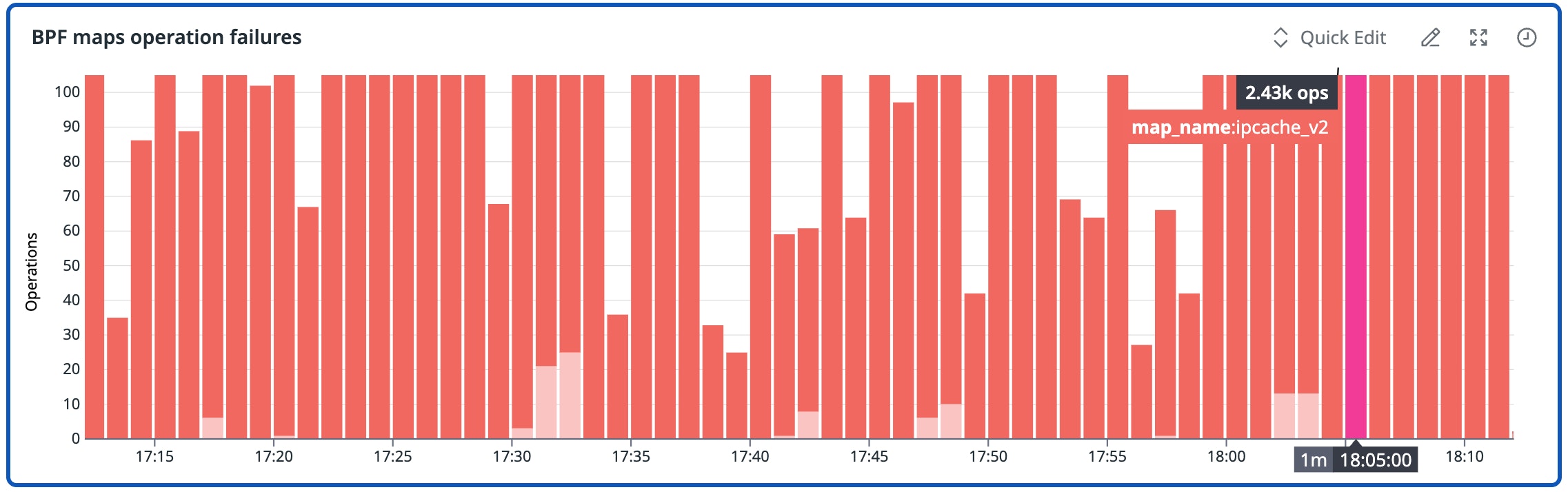

Cilium exposes a cilium_bpf_map_pressure metric that reports on how full datapath maps are, including policy maps, connection-tracking (conntrack) maps, and load-balancing maps. We enable these metrics and alert on pressure for critical maps. Map pressure can be the most reliable early indicator of impending failures. When maps approach capacity, insertion attempts start to fail. Some maps, like least recently used (LRU) conntrack maps, can’t provide perfectly accurate counts with simple counters, making pressure easier to monitor than raw operations.

The first chart above shows how BPF map pressure can reveal datapath stress long before workloads feel it. In this cluster, several maps began approaching pressure limits well before any user-facing symptoms appeared.

The second chart above illustrates how repeated BPF map operation failures can accompany rising map pressure. These failures arose quietly during churn and served as a clear early signal of a datapath bottleneck.

We’ve also seen this manifest as specific drop reasons, such as CT: Map insertion failed, in high-throughput workloads like DNS. These drops also appear well before customers experience any broader impact.

Consider investigating BPF map sizing and policy shape if you notice the following:

- Sustained high cilium_bpf_map_pressure values on critical maps

- Non-zero or rising map insertion failure counters

- Drop reasons associated with map capacity, even when overall traffic volume hasn’t changed dramatically
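"Sustained" is the key word in the first signal: a single spike is noise, while a run of high samples is a trend. The sketch below flags maps whose pressure ratio stays at or above a threshold for several consecutive samples. The 0.9 threshold, the three-sample window, and the sample values are hypothetical; the map names are examples of Cilium's conntrack, SNAT, and load-balancing maps.

```python
def sustained_pressure(samples: dict[str, list[float]], threshold: float = 0.9,
                       min_consecutive: int = 3) -> list[str]:
    """Maps whose pressure stayed >= `threshold` for `min_consecutive` samples in a row."""
    alerts = []
    for map_name, values in samples.items():
        streak = 0
        for value in values:
            streak = streak + 1 if value >= threshold else 0
            if streak >= min_consecutive:
                alerts.append(map_name)
                break
    return sorted(alerts)


samples = {
    "cilium_ct4_global": [0.81, 0.92, 0.94, 0.95, 0.97],        # climbing and staying high
    "cilium_snat_v4_external": [0.40, 0.95, 0.42, 0.96, 0.41],  # spiky, not sustained
    "cilium_lb4_services_v2": [0.10, 0.11, 0.10, 0.12, 0.11],
}
print(sustained_pressure(samples))  # → ['cilium_ct4_global']
```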

Rightsize and plan around kernel limits

Rather than relying solely on compatibility matrices, we evaluate Cilium’s datapath requirements as part of our Kubernetes and kernel upgrade planning. We size memory-intensive maps by using --bpf-map-dynamic-size-ratio. This enables us to allocate a fixed percentage of node memory (for example, 0.25%, expressed as a ratio of 0.0025) to high-volume maps, such as the global conntrack, SNAT, NodePort neighbor, and selected load-balancing maps.
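Because the setting is a ratio of node memory, the resulting budget scales with instance size. The arithmetic below shows the total budget only. How Cilium divides that budget into per-map entry counts is not modeled, and `map_memory_budget` plus the node sizes are hypothetical.

```python
GIB = 2 ** 30
MIB = 2 ** 20


def map_memory_budget(node_memory_bytes: int, ratio: float = 0.0025) -> int:
    """Bytes set aside for dynamically sized maps: node memory times the ratio (0.25% -> 0.0025)."""
    if not 0 < ratio <= 1:
        raise ValueError("ratio is a fraction of node memory, e.g. 0.0025 for 0.25%")
    return int(node_memory_bytes * ratio)


for mem_gib in (16, 64, 256):
    budget = map_memory_budget(mem_gib * GIB)
    print(f"{mem_gib:>3} GiB node -> {budget / MIB:.2f} MiB for dynamically sized maps")
```

A 64 GiB node at 0.0025 gets roughly 164 MiB, so moving a workload to larger instances also raises its map capacity, and changing the ratio changes it for every node at once. That is one reason to plan such changes rather than tune them during peak traffic.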

We avoid changing BPF map size settings and restarting agents during peak traffic, because recreating and repopulating maps under load can cause transient packet drops. Additionally, we plan kernel versions around newer batch map APIs that make inspecting LRU maps and conntrack state more accurate and less costly.

If you regularly increase map sizes to handle load, or notice intermittent drops during agent restarts or rollouts, it may indicate that your memory ratios and kernel capabilities require more explicit planning instead of being tuned reactively.

Investigate with bpftrace and bpftool

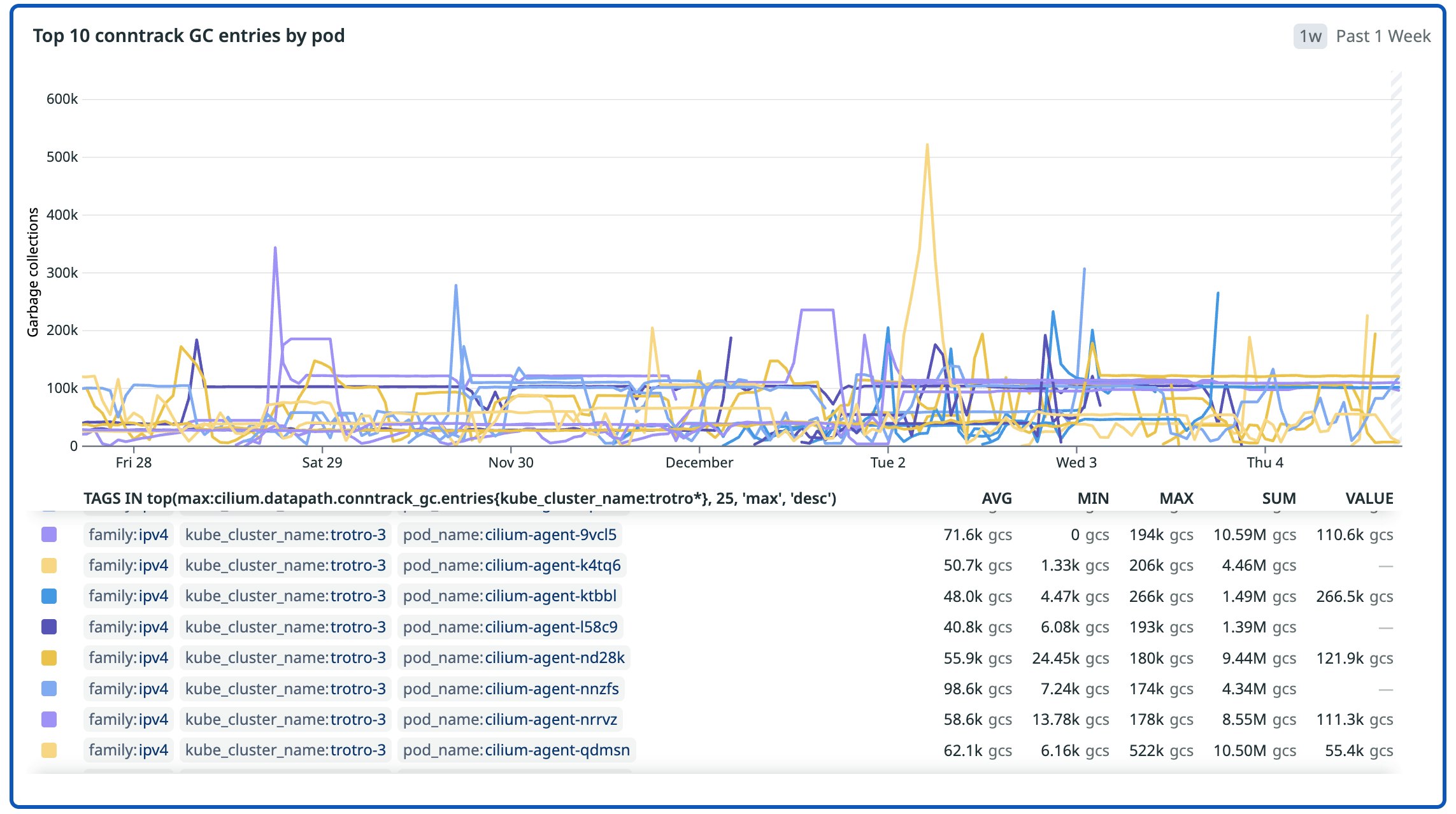

When metrics alone aren’t enough, we turn to kernel-level tools like bpftrace and bpftool.

These tools provide visibility into the datapath to validate hypotheses without permanently increasing overhead. For example, we once traced skb->mark through the datapath to debug a case where identity bits became corrupted. In our Cluster Mesh setup, each cluster had a numeric ID in the documented 1–255 range, but using a value in the upper half of that range (greater than 128) exposed a bug in how we encoded the cluster ID into skb->mark in ENI/NodePort mode. Tracing made the collision visible, enabling us to fix the encoding and contribute an upstream improvement.

The chart at the top of this section highlights how conntrack garbage-collection spikes vary across nodes. Only a handful of agents showed large bursts, which helped us identify the localized datapath stress that cluster-level averages would have hidden.

We also used BPF map inspection to track down a service-backend leak. The issue occurred when a service was deleted while one of its backend pods was still terminating. Inspecting the relevant maps made the underlying problem clear, and adding tests upstream helped prevent recurrence.

When path-specific or node-specific failures occur even though Cilium and node-level metrics appear normal, using bpftrace or bpftool can help validate hypotheses about datapath behavior.

Make failures explicit instead of silent

Some of our most effective changes are the ones that users never notice directly. We use enable-unreachable-routes=true (the --enable-unreachable-routes config option) so the kernel returns “ICMP host unreachable” for reclaimed pod IPs. This stops blind retransmits from clients that would otherwise keep sending traffic to dead addresses and reduces “martian source” noise in logs. In our environment, this correlated with a visible drop in failed retransmits after pod churn events.

Bursts of retransmits toward reclaimed pod IPs, or persistent martian source logs after churn events, suggest that enabling unreachable routes would help turn these silent failures into explicit ones.

Scale kube-proxy replacement reliably

To obtain measurable performance improvements and reduce reliance on iptables, we use Cilium’s kube-proxy replacement to offload service load balancing into eBPF. As with other Day 2 optimizations, it depends on careful configuration to stay predictable under heavy traffic. To ensure Cilium behaves as expected, we perform the following actions:

- Enable Maglev consistent hashing so backend selection remains stable as backends are added or removed.

- Use XDP acceleration where hardware and kernels permit, to reduce latency for service traffic.

- Rely on eBPF masquerading and well-defined native-routing CIDRs so east-west traffic is not accidentally NATed.

- Use Local Redirect Policies to keep hot paths such as DNS on-node, avoiding extra hops and cross-node dependencies.

Combined with the IPAM and observability practices previously mentioned, these settings give us a service datapath that scales with traffic while reducing unpredictable behavior.
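The stability property behind the Maglev bullet is easy to check in a model. The sketch below is a generic Python rendering of the lookup-table population step from Google's Maglev paper, not Cilium's eBPF implementation. The hash construction (salted SHA-256), the prime table size of 65537, and the backend addresses are assumptions chosen for illustration.

```python
import hashlib


def _hash(value: str, salt: str) -> int:
    return int.from_bytes(hashlib.sha256(f"{salt}:{value}".encode()).digest()[:8], "big")


def maglev_table(backends: list[str], size: int = 65537) -> list[str]:
    """Populate a Maglev lookup table; `size` must be prime so each permutation covers every slot."""
    if not backends:
        raise ValueError("need at least one backend")
    offsets = [_hash(b, "offset") % size for b in backends]
    skips = [_hash(b, "skip") % (size - 1) + 1 for b in backends]
    next_idx = [0] * len(backends)
    table: list = [None] * size
    filled = 0
    while True:
        # Round robin: each backend claims its next preferred free slot in turn.
        for i, backend in enumerate(backends):
            slot = (offsets[i] + next_idx[i] * skips[i]) % size
            while table[slot] is not None:
                next_idx[i] += 1
                slot = (offsets[i] + next_idx[i] * skips[i]) % size
            table[slot] = backend
            next_idx[i] += 1
            filled += 1
            if filled == size:
                return table


backends = [f"10.0.0.{i}" for i in range(1, 6)]
before = maglev_table(backends)
after = maglev_table([b for b in backends if b != "10.0.0.3"])
moved = sum(1 for old, new in zip(before, after) if old != new)
print(f"{moved / len(before):.1%} of slots changed after removing one of five backends")
```

The changed share stays close to the removed backend's own share of slots, whereas naive `hash % N` selection would remap most connections when N drops from 5 to 4. That difference is what keeps backend selection stable as backends come and go.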

Uneven backend utilization, connection stickiness issues under load, and latency or jitter that increases as you add more backends or services are all signals that your kube-proxy replacement may require adjustment.

Lessons learned from running Cilium at scale

Running Cilium at Datadog scale reinforces a simple lesson: Small operational decisions matter, and their effects accumulate quickly. Choices about routing, IPAM, labels, upgrade validation, and datapath observability all contribute to how a cluster behaves in real-world conditions.

By standardizing these practices, we keep even our largest clusters quiet enough to feel boring, which is often the highest compliment that an engineer can give to a system. To learn more, you can visit the Cilium project in the Datadog Open Source Hub, listen to the Day 2 with Cilium talk, and review the Cilium integration documentation. If you’re new to Datadog, you can sign up for a 14-day free trial now.