Mallory Mooney

Usman Khan

Modern applications log vast amounts of personal and business information that should not be accessible to external sources. Organizations face the difficult task of securing and storing this sensitive data in order to protect their customers and remain compliant. But there is often a lack of visibility into the sensitive data that application services are logging, especially in large-scale environments, and the requirements for handling it can vary across industries and regions. For example, many healthcare organizations in the US need to ensure that they comply with HIPAA, the US federal law that regulates protected health information, while companies operating in the EU need to comply with Europe’s GDPR when processing certain personal data.

Additionally, certain categories of sensitive data may not require the same level of protection as others, or they may have different retention policies. As such, creating processes that account for variation in the types of data your services log enables you to focus on the information that is most important to protect. For example, data breaches that expose personally identifiable information (PII) are expensive to resolve and put customers at risk, so your environment should have stringent controls around any PII it stores.

In this post, we’ll look at how you can address these challenges by breaking down sensitive data management into the following stages:

- identifying confidential information logged by application services

- categorizing flagged data based on dimensions such as severity and sensitivity

- taking appropriate action based on your classifications

We’ll also show how Datadog Sensitive Data Scanner can help you implement these steps at scale so you always have complete visibility and control over any sensitive data logged by your services.

Take inventory of application services and their data

Having a better understanding of the types of information your services log is a critical first step to building an effective data compliance strategy. This process involves auditing application services in order to identify and classify their data. But this step is often the most difficult one, as it requires teams to develop a unified approach to data discovery that can be applied to different types of data and data sources. In this section, we’ll look at how you can systematically inventory your application services and efficiently search for exposed sensitive data.

Leverage frameworks and visualization tools to start mapping data

Sensitive data in your environment may vary depending on your organization’s region and industry. The following security and compliance frameworks and regulations can serve as reference points for determining which data to look for:

| Framework or Regulation | Data Type | Examples |

|---|---|---|

| HIPAA | Protected health information | An individual’s full name or social security number coupled with information about their health |

| PCI DSS | Payment information | Credit card numbers and verification codes |

| GDPR | Personal data | Full name, identification numbers, and online identifiers (like an IP address) |

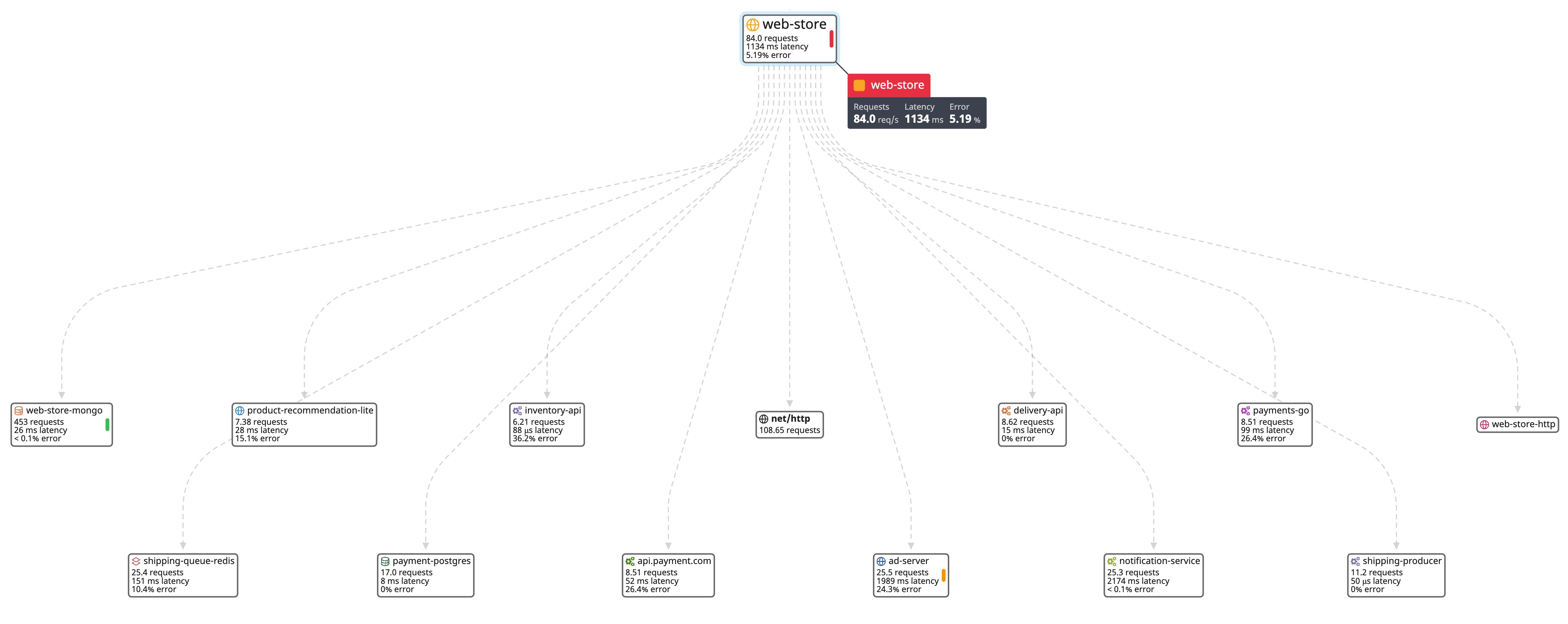

Next, you can target the most common sources of leaks in your applications, such as data storage and API services. For example, an organization that runs an e-commerce application may start the data discovery process by auditing their application’s customer databases and payment processing services. Service or network maps can also give you better visibility into the flow of data through your applications and how each service is connected, so you can track data that is being stored (at rest), regularly updated (in use), and transmitted to other sources (in motion).

The example service map above shows data transmitting from the web-store service to several database and API endpoints, including two that are dedicated to processing payments (payment-postgres and api.payment.com). As previously mentioned, these two sources can be good places to start the data discovery process as their logs may store payment information, which is considered high risk for data compliance.

Add granularity to your search via data filters

Once you identify the different sources and types of data in your environment, you will need to ensure that you are capturing the right information. Methods like machine learning models can automatically match patterns of data in your logs, but leveraging filters like regular expressions gives you greater control over your search. Regular expressions are strings of characters used to search for and match specific patterns in data, such as any financial information captured in your logs. They enable you to fine-tune the information you search for as part of your data discovery journey and ensure that you are only flagging sensitive data based on necessary regulatory and compliance standards. They are also a key element in search mechanisms for log management and data discovery solutions, so building efficient queries helps you get the most out of the tools you use.

When customizing regular expressions for your services, you can ask yourself the following questions to help you target the appropriate data types:

- Customer database: How are customer or user IDs structured in your application? (e.g., character length, special symbols, alphanumeric, case sensitive)

- Customer database: Are any services logging EU identifiers? (e.g., VAT codes, national identification numbers, license plate numbers)

- Payment database: What is the word order for key phrases that need to be matched? (e.g., Discover credit card)

- Payment API service: What is the date format for timestamps? (e.g., MM/DD/YYYY)

Using our previous payment database and API endpoint examples, you can also leverage the following expressions to capture these sources’ common data types:

- Email addresses: `^[\w\.=-]+@[\w\.-]+\.[\w]{2,3}$`

- American Express numbers: `^3[47][0-9]{13}$`

- MM/DD/YYYY formatted dates: `^(1[0-2]|0?[1-9])[\/-]([3][01]|[12]\d|[0]?[1-9])[\/-](\d{4}|\d{2})$`
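As a rough illustration of how expressions like these could be applied, the Python sketch below runs them against the fields of an already-parsed log record. The helper names (`classify_value`, `scan_record`) and the record layout are assumptions made for this example, not part of any particular tool. The month group in the date pattern is written as `1[0-2]` so that October is matched as well.

```python
import re

# Patterns from the list above, compiled once. Each is anchored with ^ and $,
# so it matches a whole field value rather than text embedded in a longer line.
PATTERNS = {
    "email": re.compile(r"^[\w\.=-]+@[\w\.-]+\.[\w]{2,3}$"),
    "amex": re.compile(r"^3[47][0-9]{13}$"),
    # Month group written as 1[0-2] so that October (10) is matched too.
    "date_mdy": re.compile(
        r"^(1[0-2]|0?[1-9])[\/-]([3][01]|[12]\d|[0]?[1-9])[\/-](\d{4}|\d{2})$"
    ),
}


def classify_value(value):
    """Return the names of every pattern that matches the whole value."""
    return [name for name, pattern in PATTERNS.items() if pattern.match(value)]


def scan_record(record):
    """Map each field of a parsed log record to the data types it matched."""
    hits = {}
    for field, value in record.items():
        matched = classify_value(value)
        if matched:
            hits[field] = matched
    return hits
```

Because the patterns are anchored, this approach suits structured logs whose attributes have already been parsed out. Scanning free-text messages would need unanchored variants.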

These types of regular expressions give you greater control over the data classification process, which we’ll talk about next.

Group sensitive data into meaningful categories

After you’ve identified the different types and sources of sensitive data in your environment, you will need to categorize the data you surface in order to appropriately manage it. While classifications vary depending on the industry and organizational goals, you can start by grouping your application’s data into manageable categories based on its level of severity and sensitivity. This high-level process can help you develop a framework for classification, such as:

| Severity | Sensitivity | Description | Examples |

|---|---|---|---|

| Low | Unrestricted | Data that can be shared openly with the public and does not require any security controls when used or stored | Press releases, announcements |

| Moderate | Confidential | Data that can be used within an organization but is subject to certain compliance restrictions | Customer lists, first and last names, email addresses, order history, transaction dates |

| High | Restricted | Data that requires strict security controls for use and access | Banking information, authentication tokens, health data |

The levels seen above are based on common compliance and regulation examples and guide how you should prioritize data compliance and remediation efforts. You can use these levels to add tags to incoming logs via your log management or other observability tools. For example, you can apply the following tags to logs that contain credit card numbers:

- `severity:high`: sets the level of severity

- `sensitivity:restricted`: categorizes this data as “restricted”

- `requirement:pci`: references the relevant compliance framework or regulation

- `service:payment-processing`: references the service generating the data

- `team:payment-service-compliance`: specifies which team owns the associated service
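The tagging step can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the log is a dictionary with `message` and `tags` keys, the function names are hypothetical, and the card pattern only covers 15-digit American Express numbers. A log management tool would normally apply tags through its own pipeline configuration rather than through code like this.

```python
import re

# Tags taken from the credit card example above.
CREDIT_CARD_TAGS = [
    "severity:high",
    "sensitivity:restricted",
    "requirement:pci",
    "service:payment-processing",
    "team:payment-service-compliance",
]

# Illustrative pattern only: a 15-digit American Express number
# appearing anywhere in the log message.
CARD_PATTERN = re.compile(r"\b3[47][0-9]{13}\b")


def tag_log(log):
    """Return a copy of the log with compliance tags added when a card number is found."""
    tagged = dict(log)
    tags = list(log.get("tags", []))
    if CARD_PATTERN.search(log.get("message", "")):
        tags.extend(t for t in CREDIT_CARD_TAGS if t not in tags)
    tagged["tags"] = tags
    return tagged


def parse_tag(tag):
    """Split a key:value tag into its key and value."""
    key, _, value = tag.partition(":")
    return key, value
```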

As seen in the examples above, tags are key-value pairs that provide more context to sensitive data, which can be critical for audits and security investigations. Labeling sensitive data also helps you create guidelines for access control, data management, and more, which we’ll look at in the next section.

Take appropriate action on flagged data

Once you’ve developed a robust strategy for data discovery and classification, the final step is establishing processes for handling sensitive information in logs. Remediation can entail:

- adjusting retention policies for different data classifications

- masking flagged data in your logs based on your policies

- restricting access so that unauthorized users in your organization cannot view sensitive data

- monitoring data over time to identify trends

Each of these steps, which we will explore in more detail in this section, can strengthen your data compliance strategy and give you insight into how effective your policies are over time.

Develop retention policies for compliance purposes

Data retention policies play a key role in how you manage sensitive data. They enable you to define how long data should be stored in your environment and what happens to it when it is no longer needed. Most compliance frameworks and regulations have their own set of requirements for how long you should retain data. For instance, PCI DSS and GDPR state that data should be kept only for as long as it is needed for processing, while the Bank Secrecy Act specifies that data should be stored for a minimum of five years.

As such, you will need to develop retention policies based on the types of data you collect and how you use it. For example, a policy for managing payment information may look like the following:

| Data | Classification | Sensitivity | Storage Location | Retention Period | Action |

|---|---|---|---|---|---|

| Credit Card Numbers | Confidential | High | payments-postgres | One month | Scrub |

| Routing and Account Numbers | Confidential | High | payments-postgres | One month | Scrub |

| Customer IDs | Confidential | High | customers-postgres | 12 months | Obfuscate |

Well-defined retention policies like the one above provide multiple benefits. First, they enable you to create clear rules that meet compliance and regulation standards and organize your data in order to expedite the archival process. Data retention policies can also help you reduce storage costs and minimize the volume of data in your environment by clearly defining when it should be deleted. Finally, retention policies help strengthen data compliance by limiting the amount of sensitive information that an attacker can access or intercept.
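One way to make a policy like this enforceable is to encode it as data that a scheduled job can consult. The sketch below is illustrative only: the `RetentionRule` class, the dictionary keys, and the `action_due` helper are hypothetical names, and "one month" and "12 months" are approximated as 30 and 365 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class RetentionRule:
    storage_location: str
    retention: timedelta
    action: str  # "scrub" or "obfuscate"


# Rows from the example policy above. "One month" is approximated as 30 days
# and "12 months" as 365 days; both are assumptions for this sketch.
POLICY = {
    "credit_card_number": RetentionRule("payments-postgres", timedelta(days=30), "scrub"),
    "routing_and_account_number": RetentionRule("payments-postgres", timedelta(days=30), "scrub"),
    "customer_id": RetentionRule("customers-postgres", timedelta(days=365), "obfuscate"),
}


def action_due(data_type: str, created_at: datetime, now: datetime) -> Optional[str]:
    """Return the policy action if the record has outlived its retention period."""
    rule = POLICY[data_type]
    if now - created_at >= rule.retention:
        return rule.action
    return None
```

For example, a credit card number logged 60 days ago would be due for scrubbing, while a customer ID of the same age would not yet require any action.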

Obfuscate or scrub data from logs based on your policies

Most compliance frameworks and regulations stress the importance of de-identification, which is the process of removing identifying information from data, such as email addresses or customer IDs. Different types of sensitive data will require different approaches to de-identification, which should be defined in your retention policy (as shown above). For example, high-risk or restricted data may need to be redacted after a period of time in order to maintain compliance. On the other hand, you may need to obfuscate other types of high-risk data like customer IDs while retaining access to the original values for troubleshooting purposes. This strategy enables you to retain uniqueness for information that’s critical for security audits while still protecting customer information from a data breach.

Redacting data from logs is a fairly straightforward process because it involves replacing a matched pattern with placeholder text, as seen in the example log entry below:

`Payment rejected for credit card number: [redacted]`

Data masking techniques like hashing, on the other hand, may require more planning before implementation. This technique replaces sensitive data values with generated tokens, which greatly reduces the risk of exposing data in use while maintaining its integrity through unique identifiers. For example, the log below leverages hashing to substitute a customer ID with a token.

`Payment rejected for customer ID: F1DC2A5FAB886DEE5BEE`

Generally, data masking techniques should be:

- a flexible, repeatable process, accounting for data growth and changes over time

- difficult for attackers to reverse engineer

- able to preserve data integrity as teams copy databases for use in other environments
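Redaction and hashing as described in this section might look like the following in Python. This is a sketch under several assumptions: the card pattern covers only American Express numbers, the `customer ID: <value>` log format is hypothetical, and a keyed hash (HMAC-SHA256) is used in place of a plain hash so that tokens are harder to reverse without the secret key. The 20-character truncation simply mirrors the length of the example token and reduces collision resistance.

```python
import hashlib
import hmac
import re

# Illustrative pattern: a 15-digit American Express number anywhere in a message.
CARD_PATTERN = re.compile(r"\b3[47][0-9]{13}\b")
# Hypothetical log format: "customer ID: <value>".
CUSTOMER_ID_PATTERN = re.compile(r"(customer ID: )(\S+)")


def redact_cards(message):
    """Replace every matched card number with placeholder text."""
    return CARD_PATTERN.sub("[redacted]", message)


def tokenize(value, key):
    """Derive a stable token from a value with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:20].upper()  # truncated to 20 hex characters for readability


def mask_customer_ids(message, key):
    """Swap each customer ID for its token, leaving the rest of the message intact."""
    return CUSTOMER_ID_PATTERN.sub(
        lambda m: m.group(1) + tokenize(m.group(2), key), message
    )
```

Because the same input and key always produce the same token, masked logs can still be grouped by customer, which preserves the uniqueness needed for audits.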

Use RBAC controls to protect data from unauthorized access

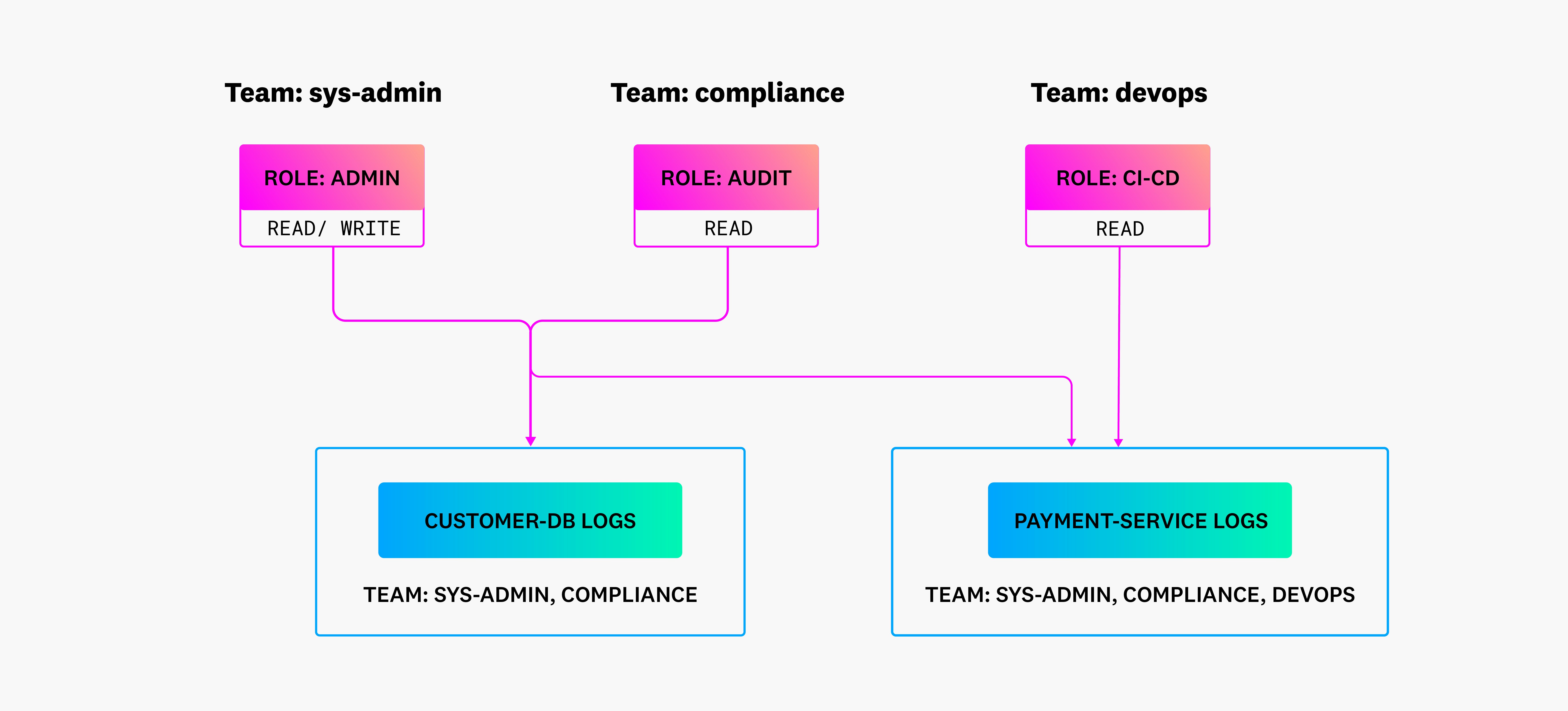

Another key step in protecting data is limiting access to log data in your environment through role-based access control (RBAC), which is required by certain compliance frameworks like PCI DSS. RBAC is a mechanism that grants the appropriate combinations of read and write permissions to employees based on their role in your organization. For example, a system administrator should have read access for customer database logs, while a regular user should not. RBAC can help reduce the risk of sharing sensitive data with unauthorized employees—and determine which users may have disclosed information in the event of a breach.

The diagram above is an example of an RBAC policy that grants the appropriate levels of access to users in your log management tool. There are three defined teams and roles for this environment, all of which have various levels of access to service and database logs based on their responsibilities. For example, the compliance team needs read permissions to logs from both payment-service and customer-db in order to conduct audits, so they are assigned the audit role and granted access to those services. DevOps, however, only needs to troubleshoot deployment issues in payment-service, so they are added with the CI-CD role, which has read-only privileges.
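The policy described here can be modeled as a small lookup, shown below as a Python sketch. The dictionary layout and the `can_access` helper are illustrative assumptions rather than how any specific log management tool stores its roles, and the third team and role are omitted because the text does not name them.

```python
# Roles and grants from the example above. The third team and role are not
# named in the text, so they are left out of this sketch.
ROLE_GRANTS = {
    "audit": {"payment-service": {"read"}, "customer-db": {"read"}},
    "CI-CD": {"payment-service": {"read"}},
}

# Team-to-role assignments from the example; the key names are illustrative.
TEAM_ROLES = {
    "compliance": "audit",
    "devops": "CI-CD",
}


def can_access(team, source, permission="read"):
    """Return True if the team's role grants the permission on the log source."""
    role = TEAM_ROLES.get(team)
    grants = ROLE_GRANTS.get(role, {})
    return permission in grants.get(source, set())
```

Access is denied by default: an unknown team, an unlisted log source, or a permission that was never granted all return `False`.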

Monitor sensitive data in your environment to visualize trends

Monitoring your logs enables you to track trends over time and identify gaps in your discovery and classification policies so that you can create measurable goals for improving your strategy. There are a few datapoints you can focus on to help you get started, such as:

- which users have accessed sources of sensitive data over a certain period of time

- the average number of logs that contain sensitive data per environment, service, and source

- the volume of different types of sensitive data in your environment over time

- the percentage of logs that contain highly restricted data, based on your sensitivity and severity levels

Monitoring user access, for example, can help ensure that your RBAC policies are up to date and applied appropriately. It also creates an audit trail of users who access high-risk sources, which can be crucial when investigating data breaches.
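Two of the datapoints listed above can be computed directly from tagged logs. The Python sketch below assumes each log is a dictionary with a `service` key and a list of `key:value` tags using the `sensitivity` levels from the classification table. The function names are hypothetical, and a monitoring platform would typically calculate these figures for you.

```python
from collections import Counter


def has_tag(log, tag):
    """Return True if the log carries the given key:value tag."""
    return tag in log.get("tags", [])


def restricted_percentage(logs):
    """Percentage of logs tagged sensitivity:restricted."""
    if not logs:
        return 0.0
    restricted = sum(1 for log in logs if has_tag(log, "sensitivity:restricted"))
    return 100.0 * restricted / len(logs)


def sensitive_logs_per_service(logs):
    """Count logs per service that carry a sensitivity tag other than unrestricted."""
    counts = Counter()
    for log in logs:
        tags = log.get("tags", [])
        if any(
            t.startswith("sensitivity:") and t != "sensitivity:unrestricted"
            for t in tags
        ):
            counts[log.get("service", "unknown")] += 1
    return counts
```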

Enhance your data compliance strategy with Datadog

A key part of any data compliance strategy is finding the right solution with which to apply all of your policies. Datadog provides a comprehensive suite of tools that enable you to create the appropriate scope for discovering, classifying, and protecting sensitive data in your logs. For example, you can use Datadog’s built-in network and service maps to identify high-risk application services and dependencies, which is the first step in your data discovery journey.

With Datadog Sensitive Data Scanner, you can search for and classify sensitive data from identified services through customizable rules, which you can configure to automatically mask, redact, or partially redact certain information based on your retention policies. Datadog offers a library of over fifty scanning rules, all of which are pre-configured to match on common types of sensitive data. These rules enable you to kickstart the data discovery process and help reduce the need for creating and managing a large database of custom regular expressions.

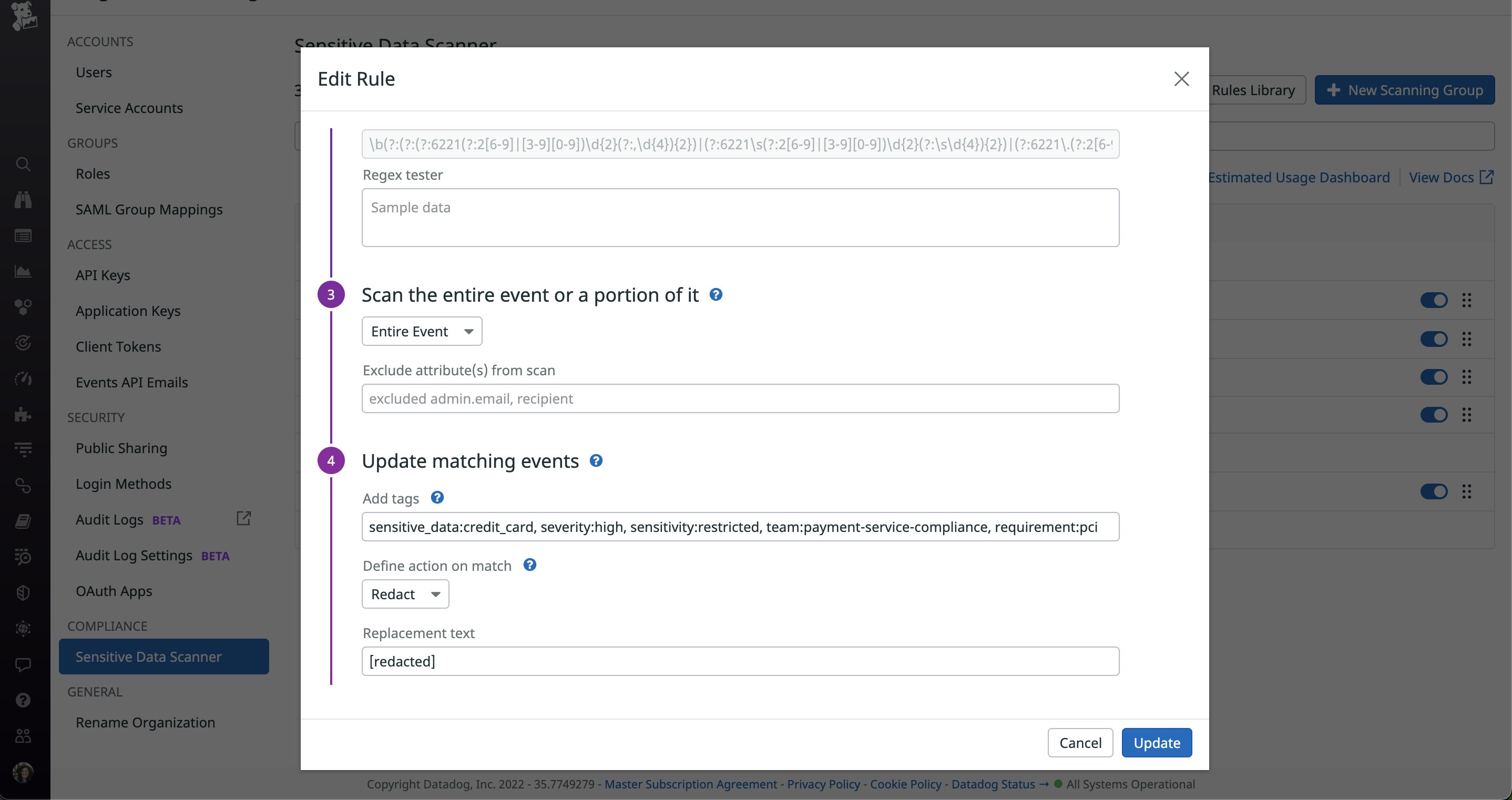

With the example rule below, data that matches the defined regular expression will automatically be scrubbed, and its associated log will be tagged with the appropriate labels.

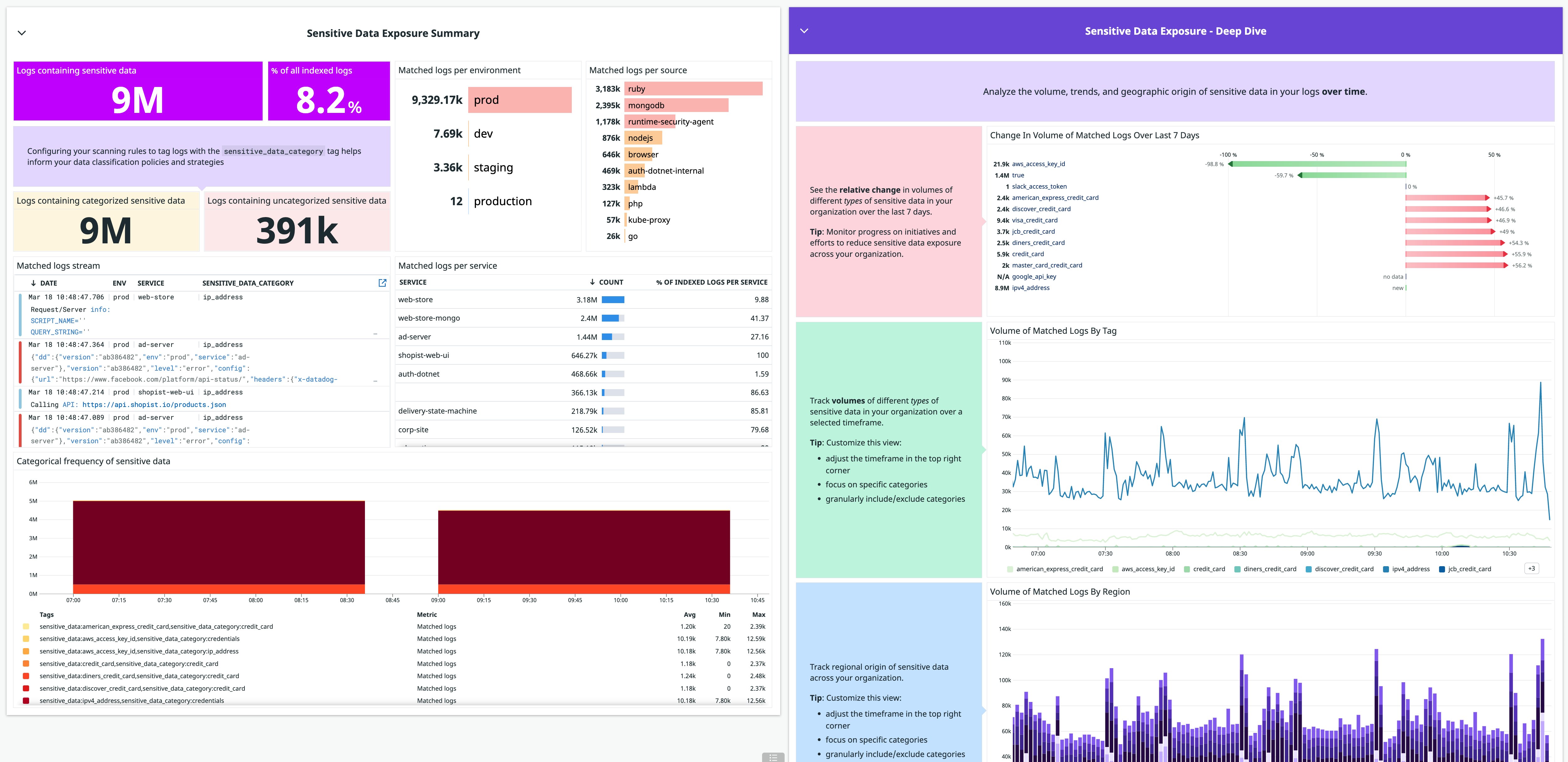

Datadog also offers an out-of-the-box dashboard for deeper insight into your environment’s sensitive data exposure, such as details about the volume and geographic origin of a specific classification of information.

The dashboard can be customized to fit your needs and data strategy goals. For example, you can leverage the tags in your scanner rules to visualize the breakdown of sensitive data by level of severity, so you can prioritize the areas of your application that need tighter security controls. You can also add custom metrics generated from sensitive data in your logs in order to track activity over longer periods of time—log-based metrics are retained for 15 months.

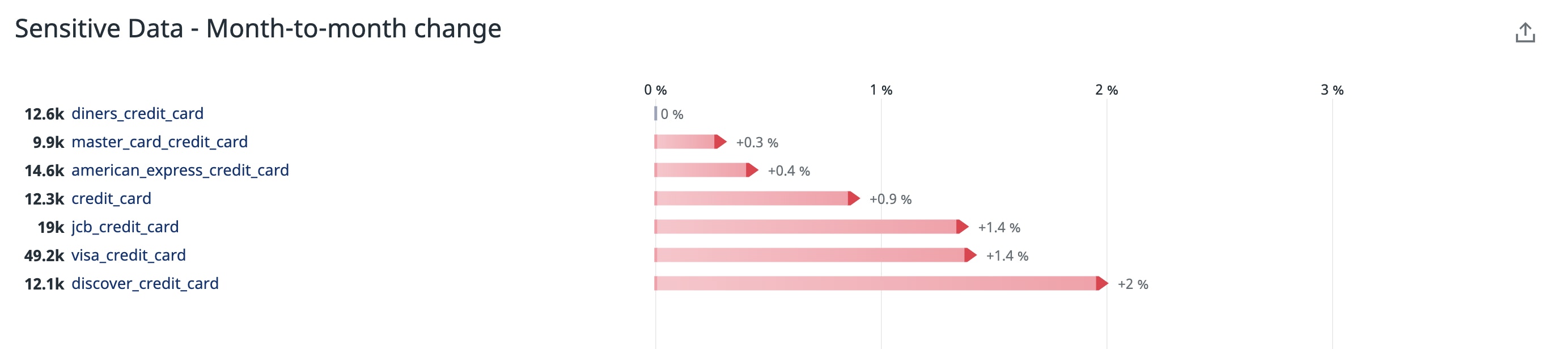

The example screenshot above shows a significant month-to-month increase in the volume of credit card numbers logged by a payment processing service. An uptick in this area could be indicative of an unexpected change in how the service logs payment information, which would require further investigation.

Finally, Datadog Log Management offers powerful controls to help you enforce and manage your RBAC and retention policies. Together, these tools enable you to build a modern data compliance strategy that fits your organizational goals.

Start your data discovery and classification journey

In this post, we explored some best practices for flagging and categorizing sensitive data in logs so that you can protect customers and employees from costly data breaches. We also looked at how Datadog Sensitive Data Scanner enables you to build a complete data compliance strategy for your environment. Check out our documentation to learn more about the Sensitive Data Scanner. If you don’t already have an account, you can sign up for a free 14-day trial.