Zara Boddula

Pranay Kamat

Security and observability teams generate terabytes of log data every day: firewall, identity, and cloud infrastructure logs, plus application and access logs. To control SIEM costs and meet long-term retention requirements, many organizations archive a significant portion of this data in cost-optimized object storage such as Amazon S3, Google Cloud Storage, or Azure Blob Storage.

These archives help reduce ingestion spend and meet compliance mandates, but archived data can be difficult and costly to retrieve. Pulling logs back from cold storage can take hours or even days, and investigations, audits, and tests of new routing or enrichment logic stall in the meantime.

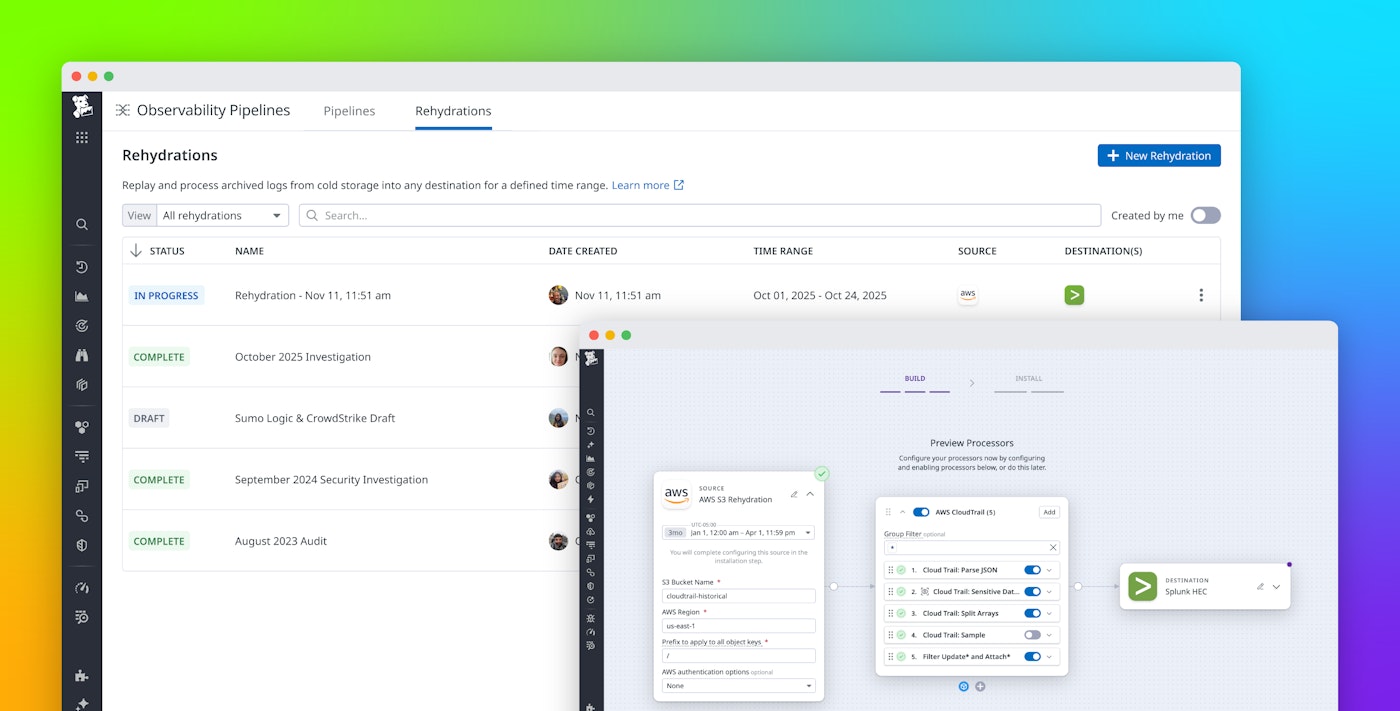

Rehydration for Observability Pipelines, now available in Preview, bridges this gap. It enables teams to quickly retrieve archived logs from external storage and replay them through existing Observability Pipelines using the same parsing, enrichment, and routing logic applied to live data.

In this post, we’ll explain how Observability Pipelines and Rehydration help you:

- Store logs cost-effectively in object storage such as S3, GCS, or Azure Blob Storage

- Retrieve archived logs on demand to support investigations, audits, or testing

- Apply parsing, enrichment, and routing logic to rehydrated logs

Store logs cost-effectively in object storage such as S3, GCS, or Azure Blob Storage

As log volumes continue to grow, teams need to balance visibility with cost. Security engineers often face ingestion limits in their SIEM, while DevOps teams need to preserve full-fidelity data for investigations and compliance.

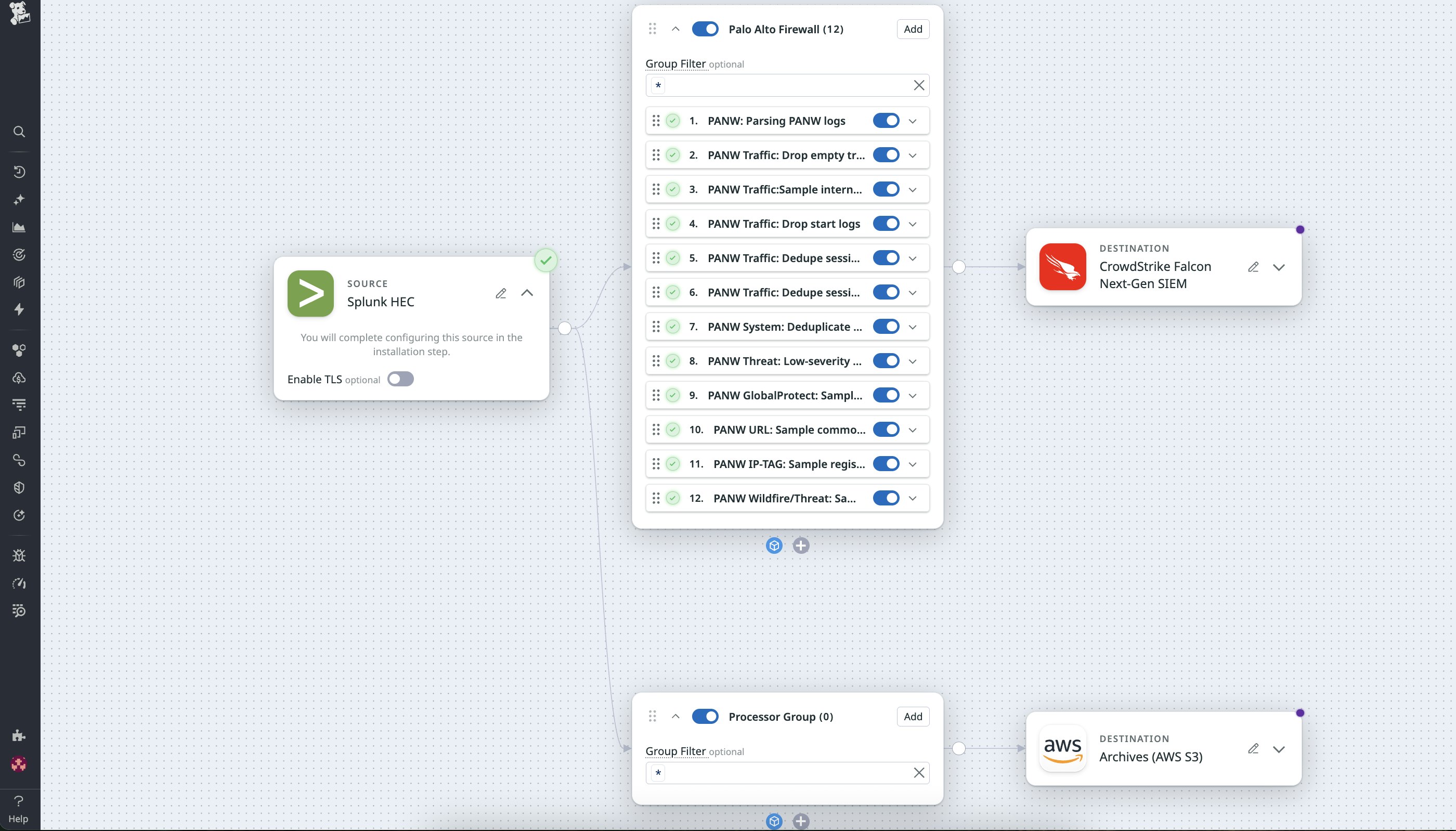

With Observability Pipelines and ready-to-use Packs, you can control which data stays in your analytics tools and which is archived in low-cost object storage. Packs are ready-made, source-specific configurations for Observability Pipelines that apply Datadog-recommended best practices: filtering low-value events, parsing important fields, normalizing formats, and routing logs consistently. Teams don’t have to recreate this logic from scratch.

For example, a network security team managing Palo Alto firewalls can start from the Palo Alto Firewall Pack, which parses firewall logs, deduplicates and filters low-value logs, and extracts key attributes such as src_ip, dest_ip, action, and rule. From there, the team can add two routes:

- Filtered logs (e.g., critical or denied actions) flow into their SIEM tool for immediate analysis.

- Full-fidelity logs are archived in Amazon S3 for long-term retention and compliance.
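These two routes amount to a simple fan-out: every log is archived, and only a filtered subset is also forwarded to the SIEM. The sketch below is plain Python, not Observability Pipelines configuration. The `severity` field and the specific action and severity values are assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

# Simplified firewall log: the Pack-extracted fields (src_ip, dest_ip,
# action, rule) plus a "severity" field assumed for illustration.
LogRecord = Dict[str, str]

# Hypothetical SIEM criteria: denied/dropped traffic or high-severity events.
SIEM_ACTIONS = {"deny", "drop"}
SIEM_SEVERITIES = {"critical", "high"}


def route(logs: List[LogRecord]) -> Tuple[List[LogRecord], List[LogRecord]]:
    """Return (siem, archive). Every log is archived at full fidelity;
    only logs matching the SIEM criteria are also forwarded to the SIEM."""
    siem: List[LogRecord] = []
    archive: List[LogRecord] = []
    for log in logs:
        archive.append(log)
        if log.get("action") in SIEM_ACTIONS or log.get("severity") in SIEM_SEVERITIES:
            siem.append(log)
    return siem, archive


logs = [
    {"src_ip": "10.0.0.5", "dest_ip": "192.0.2.10", "action": "allow", "rule": "web", "severity": "informational"},
    {"src_ip": "10.0.0.9", "dest_ip": "203.0.113.7", "action": "deny", "rule": "block-ext", "severity": "low"},
]
siem, archive = route(logs)
print(len(siem), len(archive))  # prints: 1 2
```

Because the archive route applies no filter, anything dropped from the SIEM route can still be rehydrated later.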

This dual-destination setup helps teams stay under ingestion quotas while keeping a complete record of events. When new threats emerge or an audit requires historical context, the archived logs in S3, Google Cloud Storage, or Azure Blob Storage can be rehydrated through Observability Pipelines without reconfiguring sources or risking compliance violations.

Retrieve archived logs on demand to support investigations, audits, and testing

Teams archive data for many reasons: to meet retention policies, control storage costs, or preserve long-term historical context. But when an incident, audit, or test arises, those archives often contain the critical details that active systems no longer retain.

Rehydration for Observability Pipelines gives you an automated way to access that historical data when you need it. Rehydration pulls logs directly from object storage and lets you target the exact time range of the events you want to analyze. Once retrieved, the logs flow through the same parsing, enrichment, normalization, and routing logic you use for live data, including any Packs. This maintains consistent formatting and context whether you’re debugging an outage, investigating suspicious activity, validating new configurations, or preparing for an audit. You can then route the processed historical data to your preferred destinations, such as Splunk, CrowdStrike, Sumo Logic, data lakes, and more.
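Targeting a time range generally means reading only the archive objects whose partitions overlap that window. The sketch below assumes a hypothetical hour-partitioned key layout (`dt=YYYYMMDD/hour=HH/`). It illustrates the selection idea only and does not describe how Rehydration locates objects internally.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional

# Hypothetical key layout: "<prefix>/dt=YYYYMMDD/hour=HH/<file>".
KEY_PATTERN = re.compile(r"dt=(\d{8})/hour=(\d{2})/")


def partition_start(key: str) -> Optional[datetime]:
    """Return the UTC start of the hour encoded in an archive key, or None."""
    match = KEY_PATTERN.search(key)
    if match is None:
        return None
    day, hour = match.groups()
    return datetime.strptime(day + hour, "%Y%m%d%H").replace(tzinfo=timezone.utc)


def keys_in_range(keys: Iterable[str], start: datetime, end: datetime) -> List[str]:
    """Keep keys whose one-hour partition overlaps the half-open window [start, end)."""
    selected = []
    for key in keys:
        hour_start = partition_start(key)
        if hour_start is None:
            continue  # skip objects that don't follow the assumed layout
        hour_end = hour_start + timedelta(hours=1)
        if hour_start < end and hour_end > start:
            selected.append(key)
    return selected


keys = [
    "okta/dt=20240501/hour=23/a.json.gz",
    "okta/dt=20240502/hour=00/b.json.gz",
    "okta/dt=20240502/hour=01/c.json.gz",
]
start = datetime(2024, 5, 1, 23, 30, tzinfo=timezone.utc)
end = datetime(2024, 5, 2, 1, 0, tzinfo=timezone.utc)
selected = keys_in_range(keys, start, end)
print(selected)
```

Using a half-open window means an object whose hour begins exactly at `end` is not read.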

Let’s look at how different teams use Rehydration for Observability Pipelines in practice.

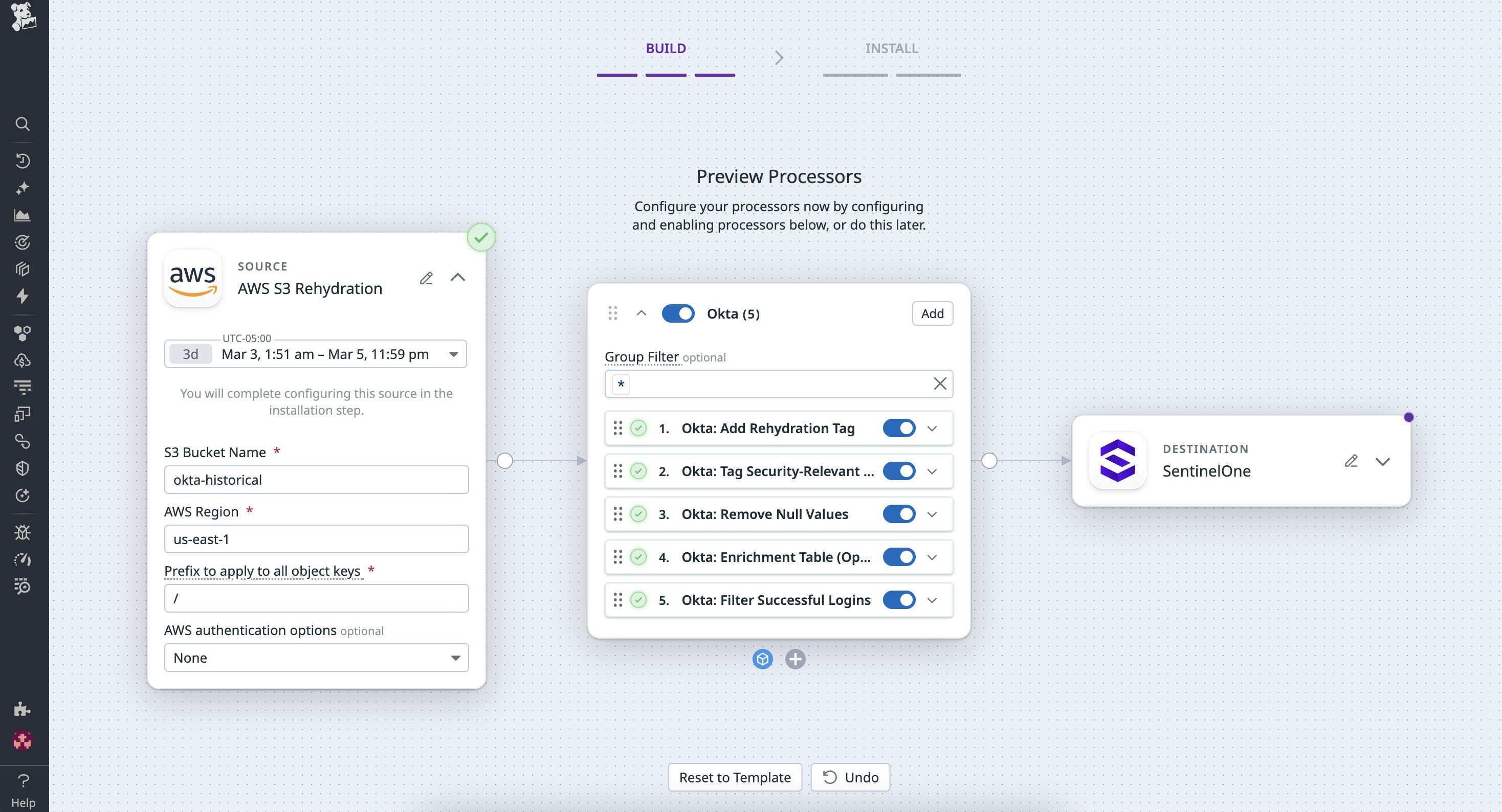

Identity and access analysis with Okta logs

A security team monitoring authentication activity uses Observability Pipelines to collect logs from Okta. With the Okta Pack, the team reduces its indexed log volume by keeping only high-value logs in its SIEM tool, such as failed login attempts, policy violations, and a small sample of successful logins. All other successful login events are routed to long-term storage in Amazon S3.
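The Okta split can be sketched as a filter with deterministic sampling. The field names (`eventType`, `outcome`, `uuid`) are flattened, simplified stand-ins for Okta System Log fields, and the 5% rate is hypothetical. Treating every event other than a successful login as high-value is a simplification; the Okta Pack's actual rules may differ.

```python
import hashlib
from typing import Dict, List, Tuple

# Simplified, flattened Okta-style event; field names are assumptions.
Event = Dict[str, str]

SAMPLE_RATE = 0.05  # hypothetical: keep about 5% of successful logins in the SIEM


def keep_sample(event_id: str, rate: float) -> bool:
    """Deterministic sampling: hash the event ID into [0, 1) and compare to rate."""
    digest = hashlib.sha256(event_id.encode("utf-8")).digest()
    position = int.from_bytes(digest[:8], "big") / 2**64
    return position < rate


def split_okta(events: List[Event], rate: float = SAMPLE_RATE) -> Tuple[List[Event], List[Event]]:
    """Return (siem, archive). Sampled successful logins go to the SIEM,
    the remaining successful logins go to the archive, and everything
    else (failed logins, policy violations, ...) stays in the SIEM."""
    siem: List[Event] = []
    archive: List[Event] = []
    for event in events:
        is_success_login = (
            event["eventType"] == "user.session.start" and event["outcome"] == "SUCCESS"
        )
        if is_success_login:
            (siem if keep_sample(event["uuid"], rate) else archive).append(event)
        else:
            siem.append(event)
    return siem, archive
```

Hashing the event ID rather than drawing a random number makes the routing decision reproducible: the same event always lands in the same place.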

When an alert flags suspicious logins from an overseas location, the analyst opens the Rehydration tab in Observability Pipelines, selects the S3 bucket where the Okta logs are archived, and rehydrates three days’ worth of successful login events for the affected users into the SIEM tool.

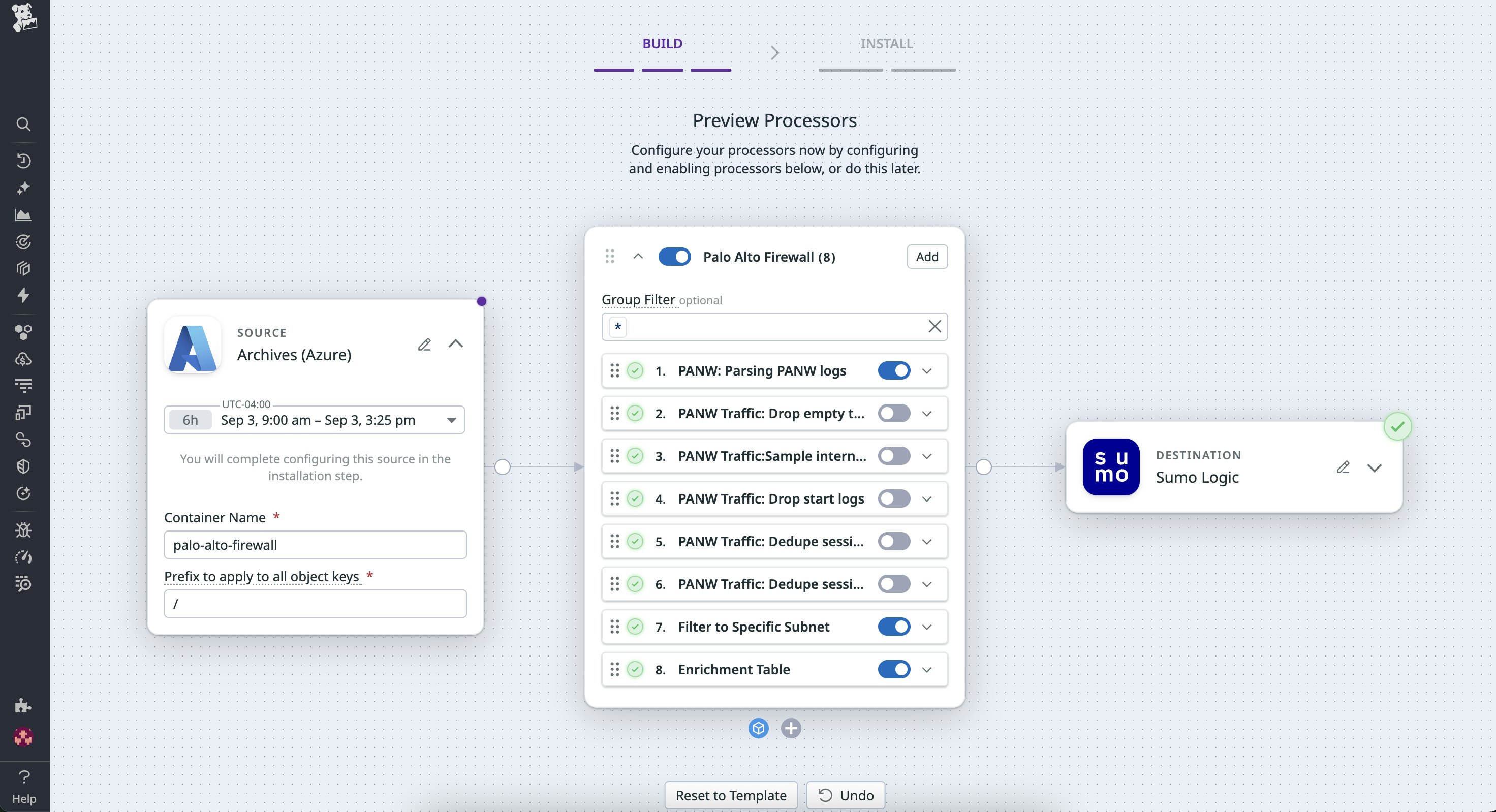

Network troubleshooting with Palo Alto Firewall logs

A network operations team uses the Palo Alto Firewall Pack to parse and enrich firewall logs. To reduce noise, they filter and deduplicate high-frequency TRAFFIC events on the live route while sending full-fidelity copies to Azure Blob Storage for retention. THREAT and SYSTEM logs stay in their observability tool for real-time visibility.
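Deduplicating high-frequency TRAFFIC events on the live route can be approximated with a time-windowed key check, while the archive route still receives every record. The dedup key fields and the 60-second window below are hypothetical; the Pack's own deduplication settings may use different fields.

```python
from typing import Any, Dict, Iterable, List, Tuple

Record = Dict[str, Any]

# Hypothetical dedup key and window; tune these to your own traffic.
DEDUP_FIELDS = ("src_ip", "dest_ip", "dest_port", "action")
WINDOW_SECONDS = 60


def dedupe(records: Iterable[Record], window: int = WINDOW_SECONDS) -> List[Record]:
    """Drop a record if a record with the same key was kept less than
    `window` seconds earlier. Assumes records arrive in timestamp order,
    with epoch seconds in "ts"."""
    last_kept: Dict[Tuple[Any, ...], int] = {}
    kept: List[Record] = []
    for record in records:
        key = tuple(record.get(field) for field in DEDUP_FIELDS)
        ts = int(record["ts"])
        previous = last_kept.get(key)
        if previous is None or ts - previous >= window:
            kept.append(record)
            last_kept[key] = ts
    return kept


def route_traffic(records: List[Record]) -> Tuple[List[Record], List[Record]]:
    """Return (live, archive): a deduplicated live stream and a full-fidelity archive."""
    return dedupe(records), list(records)
```

The window is measured from the last *kept* record for each key, so a steady stream of identical events yields roughly one live record per window.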

When users in one data center report latency, the team opens the Rehydration tab in Observability Pipelines, selects the bucket that the TRAFFIC logs are stored in, and retrieves six hours’ worth of archived TRAFFIC logs for the affected subnet. These logs flow through the same parsing and enrichment logic used for live data, which helps engineers confirm that the issue was caused by a misconfigured NAT rule.
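Narrowing rehydrated TRAFFIC logs to the affected subnet is a CIDR membership check. This sketch uses Python's standard `ipaddress` module; the subnet `10.20.0.0/16` and the `src_ip`/`dest_ip` field names are placeholders.

```python
import ipaddress
from typing import Dict, Iterable, List

# Hypothetical affected subnet; replace with the subnet under investigation.
AFFECTED_SUBNET = ipaddress.ip_network("10.20.0.0/16")


def in_subnet(records: Iterable[Dict[str, str]], subnet=AFFECTED_SUBNET) -> List[Dict[str, str]]:
    """Keep TRAFFIC records whose source or destination IP falls inside `subnet`."""
    selected = []
    for record in records:
        for field in ("src_ip", "dest_ip"):
            value = record.get(field)
            if value is None:
                continue
            try:
                address = ipaddress.ip_address(value)
            except ValueError:
                continue  # skip malformed addresses instead of failing the batch
            if address in subnet:
                selected.append(record)
                break  # one matching side is enough
    return selected
```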

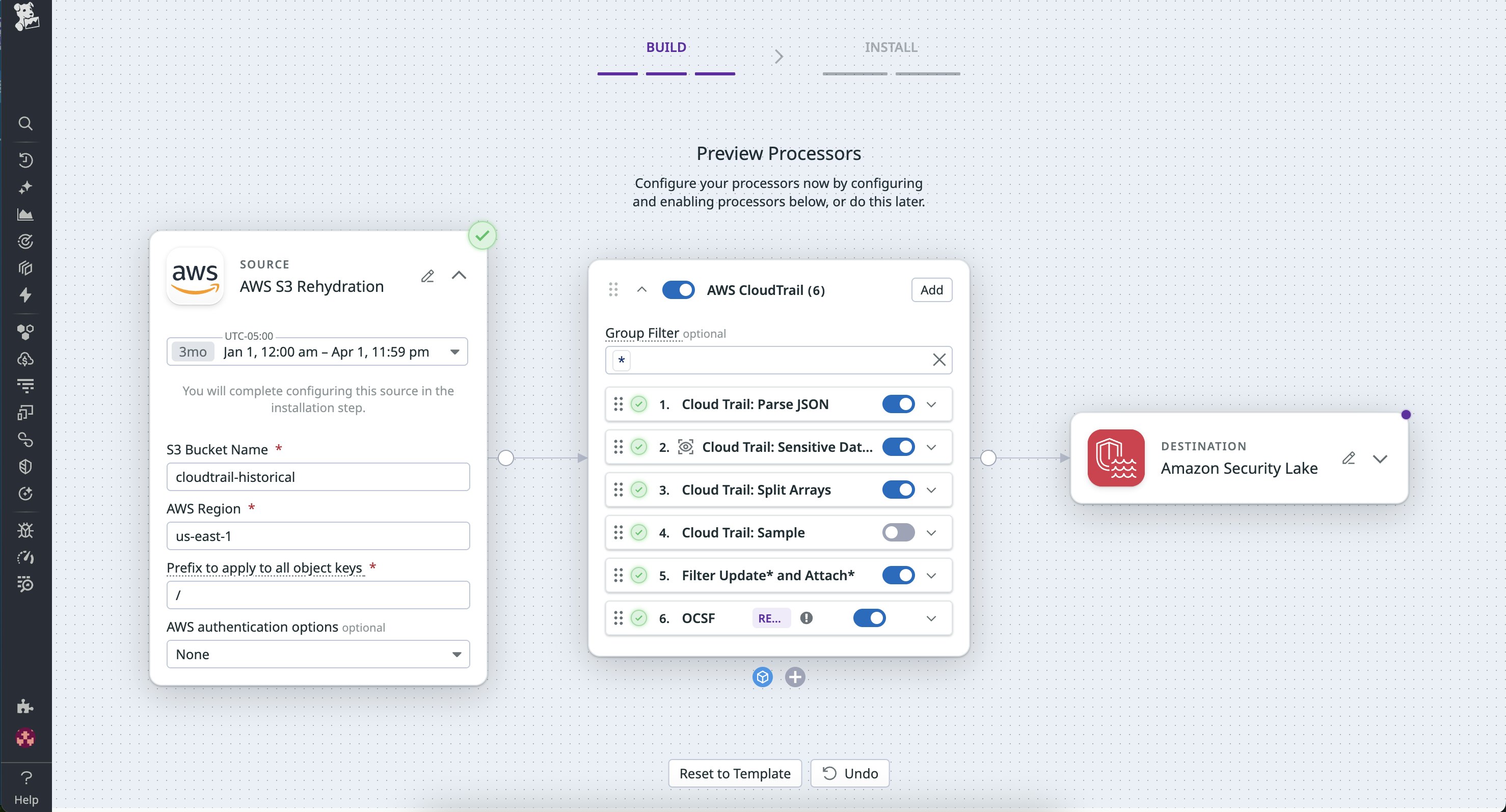

Compliance and configuration audits with CloudTrail

A DevOps team relies on AWS CloudTrail archives to maintain a full record of infrastructure changes. Routine Get* and Describe* events are filtered out of live pipelines, but all events are stored in Amazon S3 and can be retrieved through rehydration. When a quarterly compliance review requires evidence of IAM policy changes, the team rehydrates only the relevant Update* and Attach* events from the past quarter. Observability Pipelines processes these logs and routes them to the team’s SIEM for review, omitting all other logs from that time frame.

Whether it’s used for performance investigations or compliance validation, Rehydration lets teams retrieve the specific data they need from cold storage without scripts, decompression, or manual file handling.
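The two CloudTrail filters in the compliance example (drop routine reads from the live route, select policy changes for the audit) can be sketched with glob matching on `eventName`. `eventName`, `eventSource`, and `eventTime` are standard CloudTrail record fields; restricting the audit to `iam.amazonaws.com` and the quarter boundaries used here are assumptions for illustration.

```python
from datetime import datetime, timezone
from fnmatch import fnmatchcase
from typing import Dict, Iterable, List

Event = Dict[str, str]

LIVE_DROP_PATTERNS = ("Get*", "Describe*")  # routine reads filtered from live pipelines
AUDIT_PATTERNS = ("Update*", "Attach*")     # policy changes selected for rehydration


def matches(name: str, patterns: Iterable[str]) -> bool:
    """Case-sensitive glob match against any of the patterns."""
    return any(fnmatchcase(name, pattern) for pattern in patterns)


def keep_live(event: Event) -> bool:
    """Live route: drop routine Get*/Describe* calls."""
    return not matches(event["eventName"], LIVE_DROP_PATTERNS)


def select_for_audit(events: Iterable[Event], start: datetime, end: datetime) -> List[Event]:
    """Rehydration filter: IAM Update*/Attach* events with eventTime in [start, end)."""
    selected = []
    for event in events:
        when = datetime.strptime(event["eventTime"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
        if (
            event.get("eventSource") == "iam.amazonaws.com"
            and matches(event["eventName"], AUDIT_PATTERNS)
            and start <= when < end
        ):
            selected.append(event)
    return selected
```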

Apply parsing, enrichment, and routing logic to rehydrated logs

As the examples above show, once you’ve selected your archived data and time frame, Observability Pipelines handles the rest. Rehydration automatically streams logs from storage through a pipeline, so you can use Observability Pipelines processors to parse, enrich, and route rehydrated logs just as you would live data. Building on the previous examples, your engineering teams can process archived logs as follows:

- Security teams can apply field extractions and tagging from the Okta Pack to enrich rehydrated authentication logs with user and geo-IP context before sending them to their investigation workspace.

- Network engineers can run rehydrated Palo Alto Firewall TRAFFIC logs through the same Pack parsing and enrichment rules used for live data, so historical logs share the schema and context of real-time logs.

- DevOps teams can validate CloudTrail policy-change events by routing rehydrated logs through their enrichment processors before pushing them into their observability tool.
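What these examples share is that live and rehydrated logs pass through the same ordered list of processors. A minimal sketch of that idea, assuming hypothetical `parse_kv` and `enrich_geo` processors and a small lookup table in place of a real geo-IP database (this is not the Observability Pipelines processor API):

```python
from typing import Callable, Dict, Iterable, List, Optional

Log = Dict[str, str]
# A processor returns a (possibly modified) log, or None to drop it.
Processor = Callable[[Log], Optional[Log]]

# Hypothetical lookup table standing in for a geo-IP enrichment source.
GEO_TABLE = {"203.0.113.7": "NL", "198.51.100.4": "US"}


def parse_kv(log: Log) -> Optional[Log]:
    """Lift 'key=value' tokens from the raw message into top-level fields."""
    parsed = dict(log)
    for token in log.get("message", "").split():
        key, sep, value = token.partition("=")
        if sep:
            parsed[key] = value
    return parsed


def enrich_geo(log: Log) -> Optional[Log]:
    """Add a country field when the client IP is in the lookup table."""
    country = GEO_TABLE.get(log.get("client_ip", ""))
    return {**log, "geo_country": country} if country else log


def run(logs: Iterable[Log], processors: List[Processor]) -> List[Log]:
    """Apply the processors in order to every log, dropping any that return None."""
    out: List[Log] = []
    for log in logs:
        current: Optional[Log] = log
        for processor in processors:
            if current is None:
                break
            current = processor(current)
        if current is not None:
            out.append(current)
    return out


# One shared chain for both sources keeps schema and context identical.
PROCESSORS: List[Processor] = [parse_kv, enrich_geo]
live = run([{"message": "user=alice client_ip=198.51.100.4"}], PROCESSORS)
rehydrated = run([{"message": "user=bob client_ip=203.0.113.7", "source": "rehydration"}], PROCESSORS)
```

Keeping a single `PROCESSORS` list, rather than a separate chain for archived data, is what guarantees that historical and real-time logs come out with the same fields.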

Try Rehydration in Preview

Rehydration for Observability Pipelines is now available in Preview. Whether you’re responding to an incident or performing a configuration audit, Rehydration gives you on-demand access to archived logs with the same consistency and control as live data.

To learn more, visit the Observability Pipelines product page. If you’re not a Datadog customer, sign up for a 14-day free trial to experience how Datadog helps you manage log retention and reprocessing.