Nina Rei

Event-driven systems are great at decoupling services, but they also make incidents harder to untangle. A single user request can turn into dozens (or thousands) of messages, multiple consumers, retries, and delayed acknowledgments. If your tracing only tells you that a message was sent or received, you still have to guess which upstream request produced the message, whether a batch publish fanned out cleanly, and where queue time is accumulating.

Datadog APM now provides producer-aware distributed tracing for Google Cloud Pub/Sub, giving teams enhanced visibility into patterns that show up in real Pub/Sub applications: batch publishing, serverless push subscriptions to Cloud Run, and acknowledgments that happen asynchronously after downstream work completes. With Datadog’s Cloud Run integration and a small Pub/Sub configuration change for push deliveries, you can follow the full message life cycle and keep traces connected across producers and consumers.

In this post, we’ll show you how Datadog APM enables you to:

- Keep producer and consumer traces connected from end to end

- Debug batch publishing fan-out with span links

- Identify Pub/Sub push deliveries in Cloud Run

- Preserve trace context across async acknowledgments

- Configure push subscriptions and Eventarc for full trace visibility

Keep producer and consumer traces connected from end to end

Native approaches for monitoring Pub/Sub messages, such as Cloud Trace, typically generate separate traces for producers and consumers. This makes it difficult to answer questions like “which API request triggered this message?” or “why did this consumer start timing out after a deployment?”

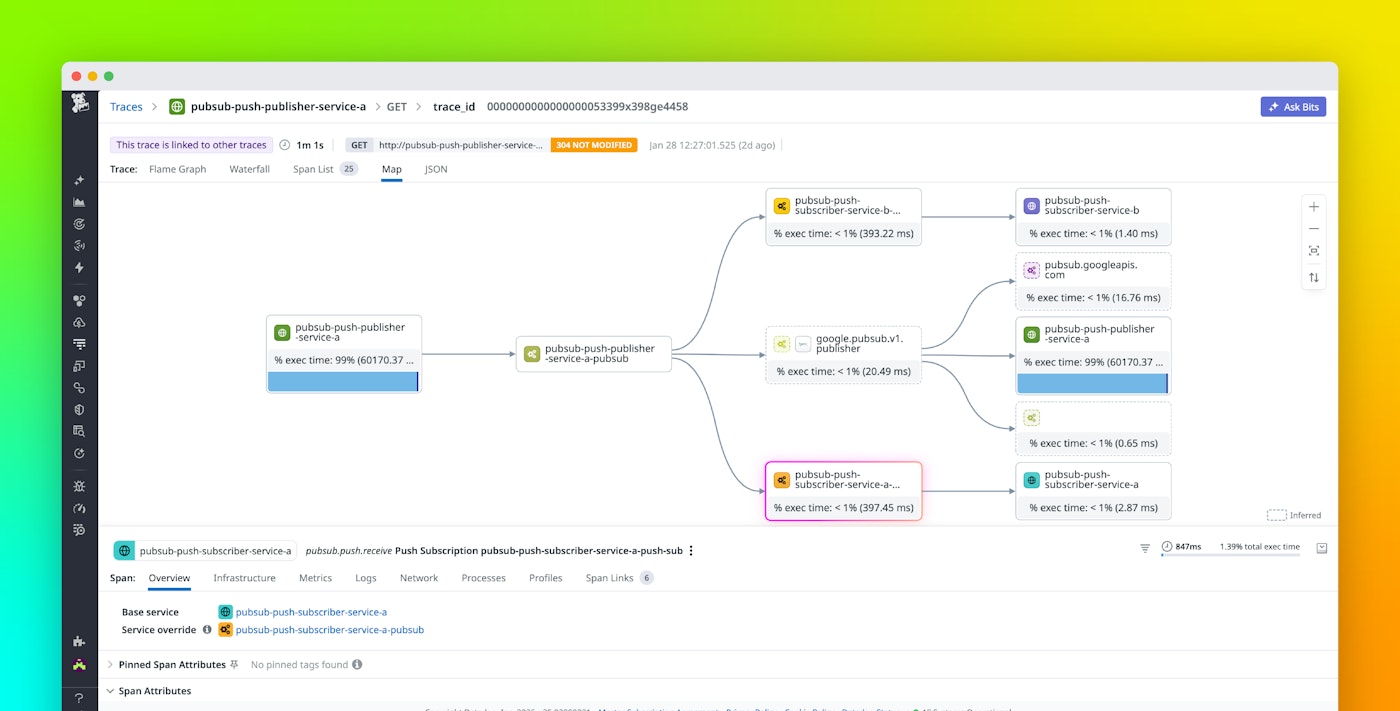

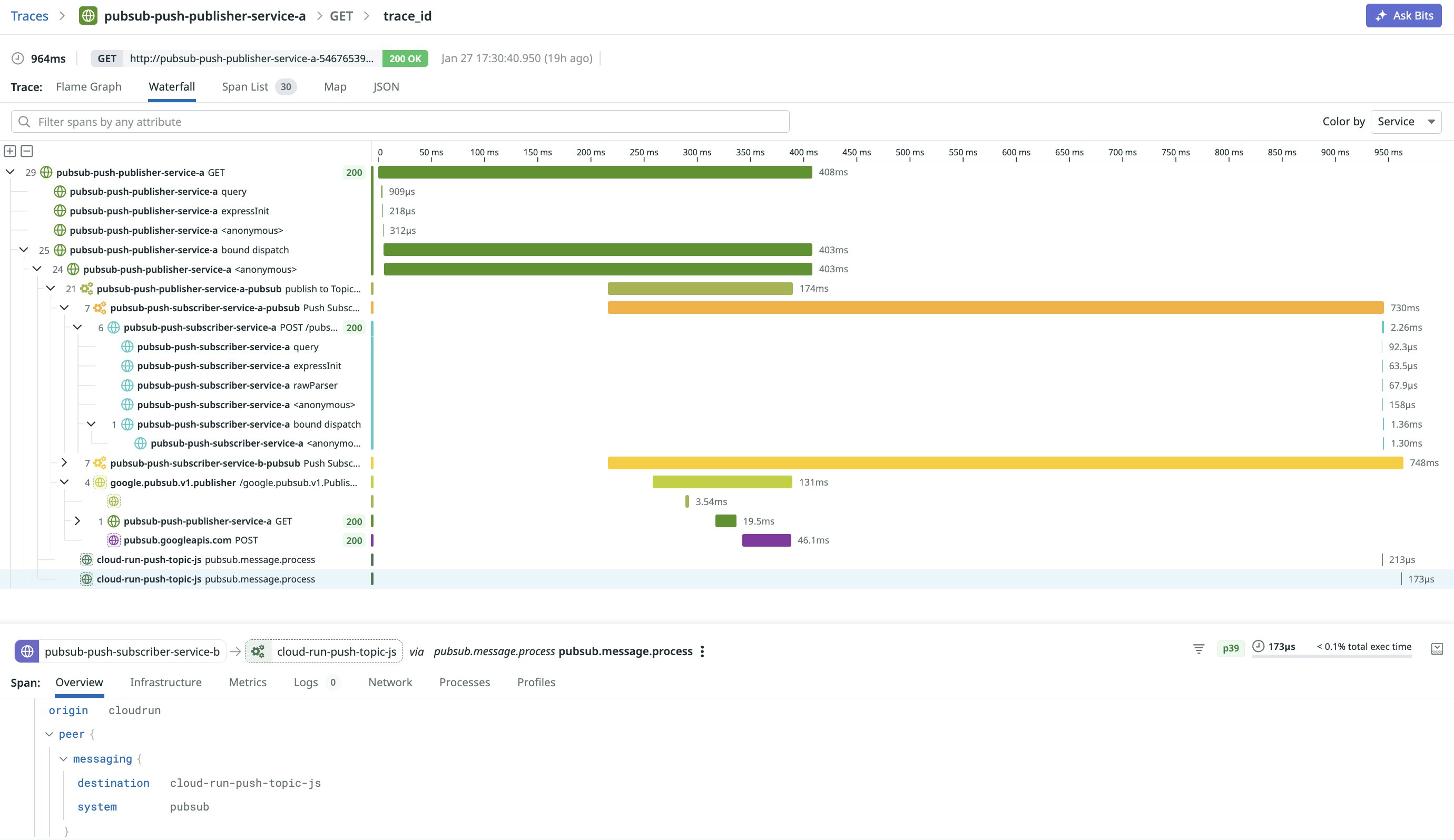

With Datadog APM, published messages automatically carry trace context in message attributes. When the consumer receives the message, Datadog extracts that context and continues the trace, so consumer work appears in the same distributed trace as the producer.

In practice, this means a single user request that produces messages can stay “whole” in APM: The producer’s http.request span can parent the Pub/Sub publish span (e.g., pubsub.request), and each consumer receive span (e.g., pubsub.receive for pull, or pubsub.push.receive for push) can continue from that same trace context.
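To make the mechanism concrete, here's a minimal sketch of how trace context can travel in message attributes. It is not Datadog's implementation. It assumes W3C `traceparent`-style formatting, uses hypothetical helper names (`inject_trace_context`, `extract_trace_context`), and uses a plain `dict` in place of a real Pub/Sub message's attributes.

```python
import secrets
from dataclasses import dataclass
from typing import Optional

# Hypothetical attribute key; the key names Datadog actually uses may differ.
TRACEPARENT_KEY = "traceparent"


@dataclass
class SpanContext:
    trace_id: str  # 32 hex chars
    span_id: str   # 16 hex chars


def new_span_context(parent: Optional[SpanContext] = None) -> SpanContext:
    """Start a child span in the parent's trace, or a new trace if there is no parent."""
    trace_id = parent.trace_id if parent else secrets.token_hex(16)
    return SpanContext(trace_id=trace_id, span_id=secrets.token_hex(8))


def inject_trace_context(ctx: SpanContext, attributes: dict[str, str]) -> dict[str, str]:
    """Producer side: copy the publish span's context into the message attributes."""
    # Pub/Sub attributes are string key/value pairs, so encode the context as one string.
    out = dict(attributes)
    out[TRACEPARENT_KEY] = f"00-{ctx.trace_id}-{ctx.span_id}-01"
    return out


def extract_trace_context(attributes: dict[str, str]) -> Optional[SpanContext]:
    """Consumer side: recover the producer's context, or None if missing or malformed."""
    value = attributes.get(TRACEPARENT_KEY)
    if value is None:
        return None
    parts = value.split("-")
    if len(parts) != 4 or len(parts[1]) != 32 or len(parts[2]) != 16:
        return None
    return SpanContext(trace_id=parts[1], span_id=parts[2])


# http.request -> publish span -> (message attributes) -> receive span
http_request = new_span_context()
publish = new_span_context(parent=http_request)
attrs = inject_trace_context(publish, {"order_id": "1234"})

upstream = extract_trace_context(attrs)
receive = new_span_context(parent=upstream)
assert receive.trace_id == http_request.trace_id  # one distributed trace end to end
```

The receive span reuses the producer's trace ID, so it lands in the same trace as the originating `http.request`. The publish span's ID becomes its parent.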

Measure queue time with producer-injected timestamps

Trace continuity is useful on its own, but it becomes much more actionable when you can separate time spent waiting from time spent processing. Datadog adds a producer-side publish timestamp to each message and calculates delivery latency on the consumer side, surfacing a queue-time signal directly on consumer spans (e.g., pubsub.delivery_duration_ms).

This helps you distinguish between a slow consumer (processing time is high) and an overloaded pipeline (delivery latency is high, indicating backlog or insufficient consumer capacity).
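As a rough illustration, the consumer-side arithmetic might look like the sketch below. The attribute name `publish_ts_ms` and the budget values in `diagnose` are hypothetical. The sketch only shows how a producer-injected timestamp lets you split end-to-end time into queue time and processing time.

```python
import time
from typing import Optional

# Hypothetical attribute key for the producer-injected publish timestamp.
PUBLISH_TS_KEY = "publish_ts_ms"


def stamp_publish_time(attributes: dict[str, str], now_ms: Optional[int] = None) -> dict[str, str]:
    """Producer side: record when the message was published (epoch milliseconds)."""
    now_ms = int(time.time() * 1000) if now_ms is None else now_ms
    return {**attributes, PUBLISH_TS_KEY: str(now_ms)}


def delivery_duration_ms(attributes: dict[str, str], received_ms: int) -> Optional[int]:
    """Consumer side: time the message spent between publish and receipt."""
    raw = attributes.get(PUBLISH_TS_KEY)
    if raw is None:
        return None
    # Clamp at zero, because producer and consumer clocks can drift slightly.
    return max(0, received_ms - int(raw))


def diagnose(delivery_ms: int, processing_ms: int,
             delivery_budget_ms: int = 1_000, processing_budget_ms: int = 500) -> str:
    """Illustrative triage with made-up budgets; queue time is checked first."""
    if delivery_ms > delivery_budget_ms:
        return "backlog: messages are waiting in the pipeline"
    if processing_ms > processing_budget_ms:
        return "slow consumer: handler work dominates"
    return "healthy"
```

For example, a message stamped at 1,000 ms and received at 4,500 ms has a delivery duration of 3,500 ms. With these budgets, that points to backlog rather than a slow handler.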

Debug batch publishing fan-out with span links

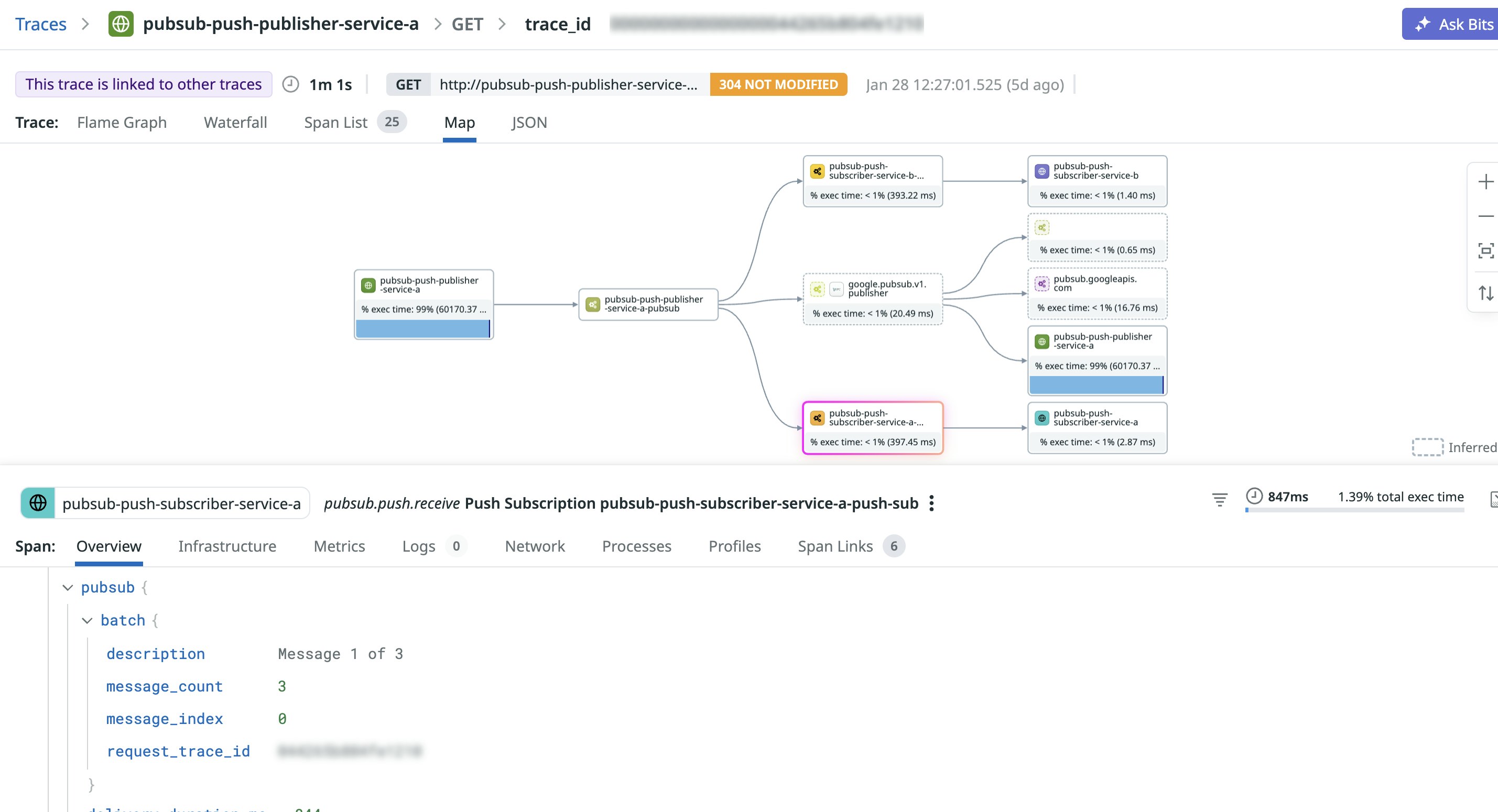

Most Pub/Sub producers don’t publish one message at a time. Client libraries batch publishes under the hood and flush batches based on timing and size thresholds. That batching behavior is great for throughput, but it introduces a visibility problem during incidents: You may see many consumer messages, but you can’t reliably connect them back to the producer’s batch publish operation.

Datadog APM addresses this by detecting batch publishes and representing them as a single publish span, while also attaching a span link from each consumer span back to the originating batch publish span. This creates a many-to-one relationship, in which multiple consumer traces can all be linked back to one batch. This setup is particularly helpful for production debugging because you can pivot from the producer-side batch to all messages in that fan-out, and then group and filter those consumer traces by timing or metadata.

To make this usable during real investigations, Datadog enriches consumer spans with batch context, such as the batch size and the message's index within the batch. Instead of treating each message as an isolated unit, you can identify important patterns: for example, whether messages after a particular index experienced higher delivery latency, or whether only a subset of a batch appears to have been processed.
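The following toy model shows the shape of that data. It is not Datadog's internal representation. It has one publish span per flushed batch and a span link from every consumer span back to that publish span. Each message also carries its batch size and index, which you can group on. The attribute and tag names (`batch_span_id`, `batch_size`, `batch_index`) are illustrative assumptions.

```python
import statistics
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    span_id: str
    tags: dict = field(default_factory=dict)
    links: list = field(default_factory=list)  # span IDs this span links to


def publish_batch(batch_id: str, payloads: list[str]) -> tuple[Span, list[dict]]:
    """Producer side: one publish span per flushed batch; each message records its position."""
    publish_span = Span(name="pubsub.request", span_id=batch_id,
                        tags={"batch_size": len(payloads)})
    messages = [
        {"data": p,
         # Pub/Sub attribute values are strings, so numbers are stringified.
         "attributes": {"batch_span_id": batch_id,
                        "batch_size": str(len(payloads)),
                        "batch_index": str(i)}}
        for i, p in enumerate(payloads)
    ]
    return publish_span, messages


def receive(message: dict, delivery_ms: int) -> Span:
    """Consumer side: link back to the batch publish span (many-to-one) and copy batch context."""
    attrs = message["attributes"]
    return Span(
        name="pubsub.receive",
        span_id=f"{attrs['batch_span_id']}-{attrs['batch_index']}",
        tags={"batch_index": int(attrs["batch_index"]),
              "batch_size": int(attrs["batch_size"]),
              "delivery_duration_ms": delivery_ms},
        links=[attrs["batch_span_id"]],
    )


def latency_by_index_bucket(spans: list[Span], bucket: int) -> dict[int, float]:
    """Mean delivery latency per bucket of batch positions, to spot index-dependent slowdowns."""
    groups = defaultdict(list)
    for s in spans:
        groups[s.tags["batch_index"] // bucket].append(s.tags["delivery_duration_ms"])
    return {b: statistics.mean(v) for b, v in sorted(groups.items())}
```

Suppose you publish four messages as one batch and they arrive with delivery latencies of 10, 12, 80, and 95 ms. Bucketing by pairs then shows the second half of the batch averaging 87.5 ms, against 11 ms for the first half. All four consumer spans still link to the same publish span.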

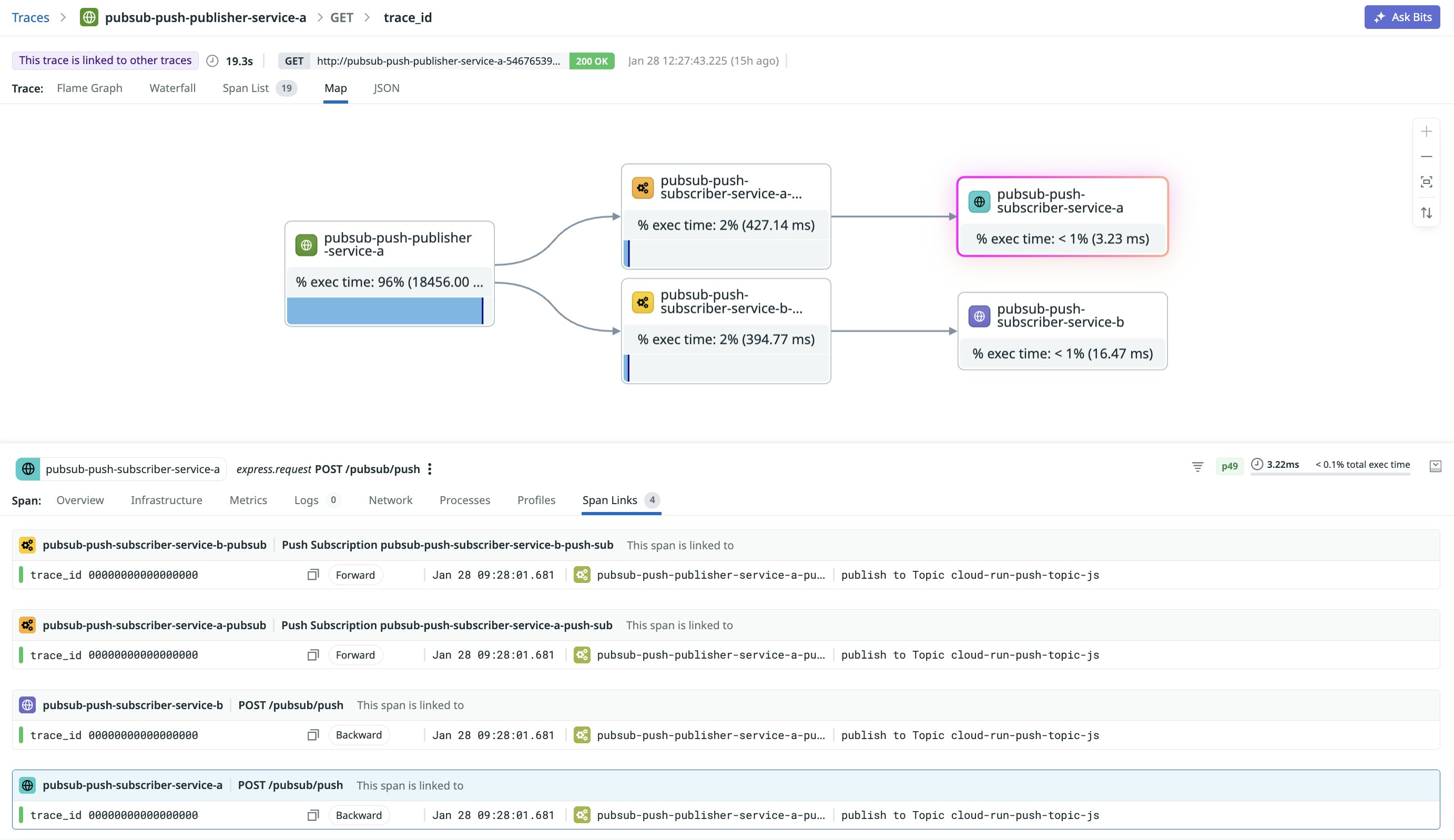

Identify Pub/Sub push deliveries in Cloud Run

Push subscriptions introduce another common tracing gap: The consumer side looks like a generic HTTP request. In Cloud Run, a Pub/Sub push delivery arrives as an HTTP POST, so without Pub/Sub-aware tracing, you’ll see an inbound http.request span with no clear indication that it was triggered by Pub/Sub, which topic delivered it, or which producer trace it should connect to.

Datadog detects Pub/Sub push deliveries and creates a dedicated pubsub.push.receive span that represents time spent in Pub/Sub infrastructure and scheduling prior to delivery. Datadog also reparents the generic consumer HTTP request span under that pubsub.push.receive span, making the trace hierarchy reflect reality: The HTTP request happened because Pub/Sub delivered a message, not because an external client called your service directly.

This is especially useful when Cloud Run services have mixed traffic—direct requests and Pub/Sub-delivered invocations—because it gives you a clear way to separate and analyze those two sources of work.
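Here is a toy sketch of that classification and reparenting. It assumes the subscription writes metadata to headers and that a Pub/Sub delivery can be recognized by an `x-goog-pubsub-message-id` header. Verify the exact header names against Google's payload unwrapping documentation. The `Span` type and `handle_request` function are hypothetical stand-ins for what an APM tracer does automatically. For brevity, the producer span is passed in rather than extracted from headers.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumed header written by Pub/Sub when push metadata is enabled; check the docs.
MESSAGE_ID_HEADER = "x-goog-pubsub-message-id"


@dataclass
class Span:
    name: str
    parent: Optional["Span"] = None
    tags: Optional[dict] = None


def is_pubsub_push(headers: Mapping[str, str]) -> bool:
    # HTTP header names are case-insensitive, so normalize before checking.
    return MESSAGE_ID_HEADER in {k.lower() for k in headers}


def handle_request(headers: Mapping[str, str], producer_span: Optional[Span]) -> Span:
    """Return the http.request span, reparented under a push-receive span for Pub/Sub deliveries."""
    if not is_pubsub_push(headers):
        # Direct traffic: the HTTP span is the root of this service's work.
        return Span(name="http.request")
    lowered = {k.lower(): v for k, v in headers.items()}
    push_receive = Span(
        name="pubsub.push.receive",
        parent=producer_span,  # continues the producer trace when context was found
        tags={"message_id": lowered[MESSAGE_ID_HEADER]},
    )
    return Span(name="http.request", parent=push_receive)
```

Classification happens before any body parsing, so mixed traffic splits cleanly into two shapes. Direct calls produce a root `http.request` span. Pub/Sub deliveries produce `http.request` under `pubsub.push.receive`, which sits under the producer's span.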

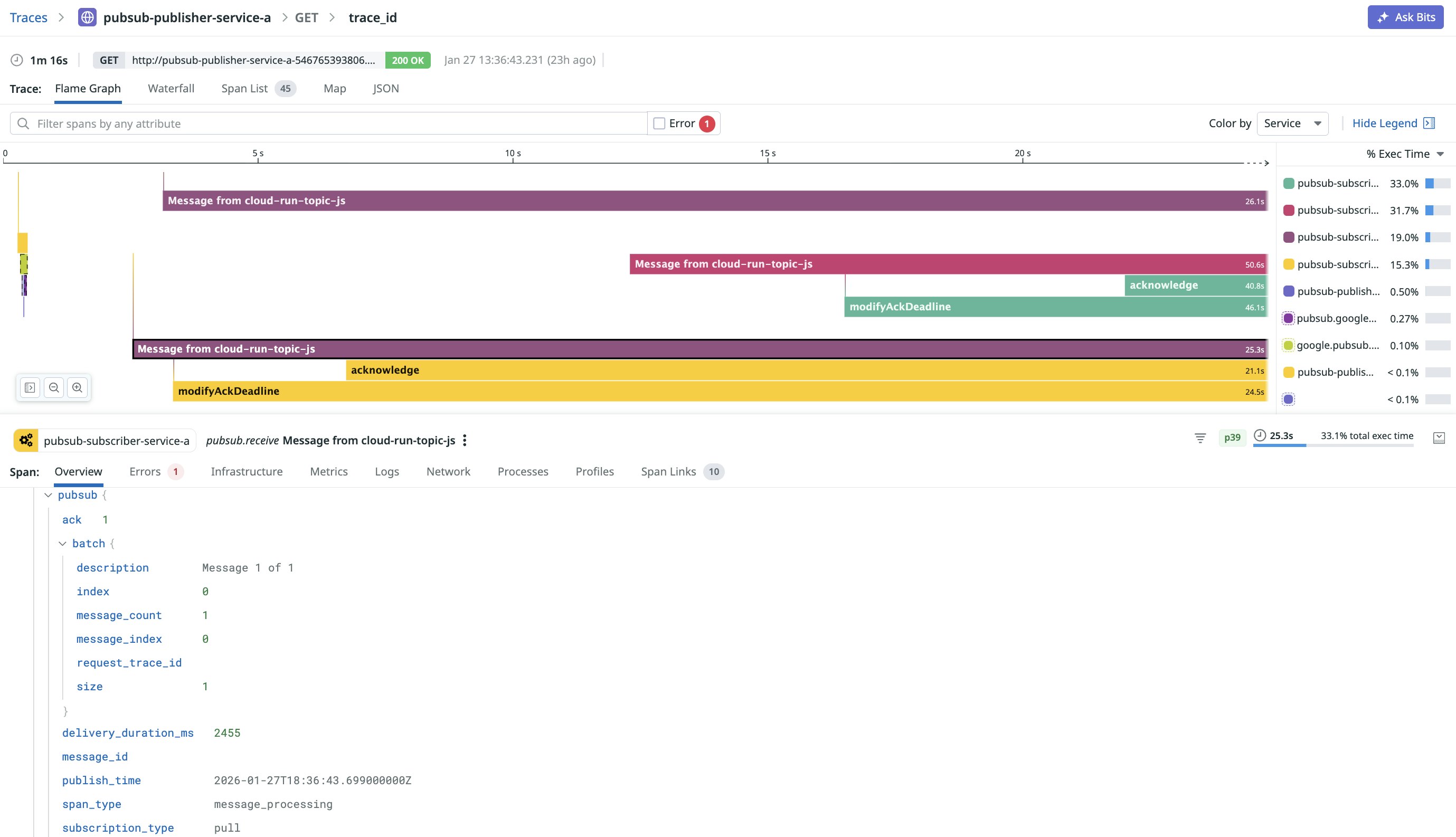

Preserve trace context across async acknowledgments

Acknowledgments in Pub/Sub rarely happen immediately at the end of a message handler. In many applications, the ack is intentionally delayed until a database transaction commits, an external API confirms a change, or a batch processing step completes. From a tracing perspective, that delay is a problem: If the ack happens after the handler returns (or in a different async context), the ack span can become an orphan, or disappear entirely.

Datadog preserves trace context across these async boundaries so that acknowledgments still attach to the original receive span, even when the application calls message.ack() minutes later and the Pub/Sub client batches the underlying acknowledge(ackIds) calls.

When ack spans stay connected, you can:

- Measure the full receive-to-ack duration (i.e., how long a message stayed in-flight)

- Separate application processing time from Pub/Sub acknowledgment latency

- Avoid misleading traces where acknowledgments appear unrelated to the work they finalize
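You can sketch the core idea without any tracing library. Capture the receive span when the message arrives and keep it with the message, so an acknowledgment that runs later on another task can still find its parent. This is a simplified model built on assumptions: a hypothetical `TracedMessage` wrapper, plus a plain list standing in for the client's batched acknowledge calls. It is not how Datadog or the Pub/Sub client library implement this.

```python
import asyncio
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    start: float
    parent: Optional["Span"] = None
    end: Optional[float] = None


class TracedMessage:
    """Hypothetical wrapper that remembers the receive span captured at delivery time."""

    def __init__(self, ack_id: str, pending_acks: list):
        self.ack_id = ack_id
        self.receive_span = Span("pubsub.receive", start=time.monotonic())
        self.ack_span: Optional[Span] = None
        self._pending_acks = pending_acks

    def ack(self) -> None:
        # Parent the ack span on the stored receive span, not on whatever
        # context happens to be active when ack() is finally called.
        now = time.monotonic()
        self.ack_span = Span("pubsub.ack", start=now, parent=self.receive_span, end=now)
        # Queue the ack ID; a real client would flush these in a batched acknowledge call.
        self._pending_acks.append(self.ack_id)

    def in_flight_seconds(self) -> float:
        """Receive-to-ack duration, i.e., how long the message stayed in flight."""
        assert self.ack_span is not None, "message not acked yet"
        return self.ack_span.start - self.receive_span.start


async def commit_then_ack(msg: TracedMessage, delay_s: float) -> None:
    await asyncio.sleep(delay_s)  # stand-in for a database commit or external API call
    msg.ack()


async def main() -> TracedMessage:
    pending: list[str] = []
    msg = TracedMessage("ack-1", pending)
    # The handler could return here; the ack happens later on a separate task.
    await asyncio.create_task(commit_then_ack(msg, 0.05))
    return msg


msg = asyncio.run(main())
print(msg.ack_span.parent.name, round(msg.in_flight_seconds(), 3))
```

Storing the parent on the message, rather than relying on ambient context, keeps the ack span connected even when it runs on a different task.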

Configure push subscriptions and Eventarc for full trace visibility

For pull subscriptions, trace propagation can work with no Pub/Sub-side changes because the consumer library can read message attributes directly. Push subscriptions are different: The message arrives over HTTP, and depending on configuration, message attributes may be nested in a wrapper payload that is only parsed after HTTP instrumentation has already created the request span. If the producer trace context isn’t available early enough, you can’t reliably connect the consumer back to the producer.

To unlock full producer-aware tracing for push subscriptions, configure your push subscription to write metadata to HTTP headers by using the --push-no-wrapper-write-metadata flag. With that configuration, Pub/Sub places message attributes into headers, which makes trace context available at the start of request handling, early enough for Datadog to create the pubsub.push.receive span and continue the producer trace cleanly.

Note: With payload unwrapping enabled, Pub/Sub delivers raw message data directly as the HTTP request body, instead of the default wrapped JSON that contains metadata and a base64-encoded data field. This means you no longer need to parse nested JSON or base64-decode the message; req.body becomes the original payload sent by the publisher.
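To see what changes for a handler, compare the two request shapes. This minimal sketch assumes a framework-agnostic handler that receives raw headers and body bytes; `read_push_message` is a hypothetical name. The wrapped branch follows Pub/Sub's default push JSON, in which a `message` object holds `attributes` and base64-encoded `data`. The unwrapped branch treats headers as attributes, because write-metadata places message attributes into headers.

```python
import base64
import json
from typing import Mapping


def read_push_message(headers: Mapping[str, str], body: bytes,
                      wrapped: bool) -> tuple[dict[str, str], bytes]:
    """Return (attributes, payload) for a Pub/Sub push request."""
    if wrapped:
        # Default push format: a JSON envelope with attributes nested inside and
        # base64-encoded data, so trace context is only visible after parsing the body.
        envelope = json.loads(body)
        message = envelope["message"]
        attributes = message.get("attributes", {})
        payload = base64.b64decode(message.get("data", ""))
        return attributes, payload
    # --push-no-wrapper: the body is the publisher's original bytes.
    # With --push-no-wrapper-write-metadata, attributes arrive as HTTP headers, so
    # trace context is readable before the body is touched. Header names are
    # lowercased here, and ordinary HTTP headers are included too; filter as needed.
    attributes = {k.lower(): v for k, v in headers.items()}
    return attributes, body
```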

Configure a standard push subscription

You can create a new push subscription with the flag enabled:

```shell
gcloud pubsub subscriptions create order-processor-sub \
  --topic=orders \
  --push-endpoint=https://order-processor-xyz.run.app/pubsub \
  --push-no-wrapper \
  --push-no-wrapper-write-metadata
```

Or update an existing subscription:

```shell
gcloud pubsub subscriptions update order-processor-sub \
  --push-no-wrapper-write-metadata
```

Eventarc-backed Pub/Sub triggers

Eventarc Pub/Sub triggers use push subscriptions under the hood. When you create an Eventarc trigger, Google Cloud creates a managed push subscription automatically, but the trigger creation flow does not expose --push-no-wrapper-write-metadata. If you want producer-aware tracing for Eventarc deliveries to Cloud Run, you’ll need to update the auto-created subscription after the trigger exists.

A typical workflow looks like this:

- Create the Eventarc trigger as you normally would.

- Identify the auto-created subscription associated with your topic.

- Update that subscription with --push-no-wrapper-write-metadata.
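For the second step, one option is to export your subscriptions with `gcloud pubsub subscriptions list --format=json` and filter the result. The sketch below makes two assumptions to check against your own output. First, it assumes the JSON uses the Pub/Sub REST field names (`name`, `topic`, `pushConfig.pushEndpoint`, `pushConfig.noWrapper.writeMetadata`). Second, it assumes Eventarc-managed subscription IDs start with `eventarc-`, as in the example command. The function names are hypothetical.

```python
def needs_write_metadata(sub: dict) -> bool:
    """True for push subscriptions that don't yet write metadata to HTTP headers."""
    push = sub.get("pushConfig") or {}
    if not push.get("pushEndpoint"):
        return False  # pull subscription: no Pub/Sub-side change needed
    return not (push.get("noWrapper") or {}).get("writeMetadata", False)


def eventarc_candidates(subs: list[dict], topic_id: str) -> list[str]:
    """Eventarc-managed push subscriptions on a topic that still need updating."""
    return [
        s["name"] for s in subs
        if s.get("topic", "").endswith(f"/topics/{topic_id}")
        and s["name"].rsplit("/", 1)[-1].startswith("eventarc-")
        and needs_write_metadata(s)
    ]
```

To use it, pipe the JSON into a small script that calls `eventarc_candidates(json.load(sys.stdin), "orders")`. Then update each subscription it reports: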

```shell
gcloud pubsub subscriptions update eventarc-...-sub-... \
  --push-no-wrapper-write-metadata
```

Get end-to-end Pub/Sub traces in Datadog

Producer-aware tracing for Pub/Sub is most valuable when you’re debugging the failures that only show up in production: partial batch fan-out, queue backlogs that ripple into downstream latency, and delayed acknowledgments that obscure true end-to-end timing. By keeping producer and consumer traces connected, adding batch-aware span links, detecting push deliveries in Cloud Run, and preserving async ack context, Datadog helps you investigate these issues with fewer blind spots.

To get started, install and configure Datadog APM for your Pub/Sub producer and consumer services, then make sure your push subscriptions are configured with --push-no-wrapper-write-metadata. Learn more in our APM documentation. If you’re new to Datadog, sign up for a 14-day free trial.