Micah Kim

Chris Kelner

JC Mackin

Managed security service providers (MSSPs) deliver 24/7 monitoring and incident response for hundreds of customers across large, hybrid environments. As they add more customers and ingest more logs, MSSPs face mounting difficulties in collecting and processing that data before routing it to downstream security tools. Doing this reliably at petabyte scale while accounting for complex, customer-specific taxonomy and compliance requirements is a major challenge. This is especially true when it isn’t possible to use installed agents or forwarders because of resource or routing limitations.

Datadog Observability Pipelines provides a lightweight, self-hosted, centralized pipeline for ingesting logs across diverse customer environments. It enables MSSPs to receive data locally from any source and apply parsing, enrichment, and tagging rules before routing it to security platforms such as Microsoft Sentinel, CrowdStrike, Datadog Cloud SIEM, and ClickHouse.

In this post, we’ll show how Observability Pipelines helps MSSPs:

- Deploy lightweight, centralized collection across diverse customer environments

- Standardize and enrich logs to support consistent downstream security workflows

- Support smooth migrations from legacy SIEMs to modern platforms

Deploy lightweight, centralized log collection across diverse customer environments

Collecting petabyte-scale log volumes across hundreds of customer environments is difficult, particularly when installing a forwarder or proprietary agent on each device may not be feasible. Many MSSPs support environments where resources are constrained or legacy systems cannot accommodate additional software. These limitations can increase operational overhead and make it harder to collect data consistently, leading to observability blind spots.

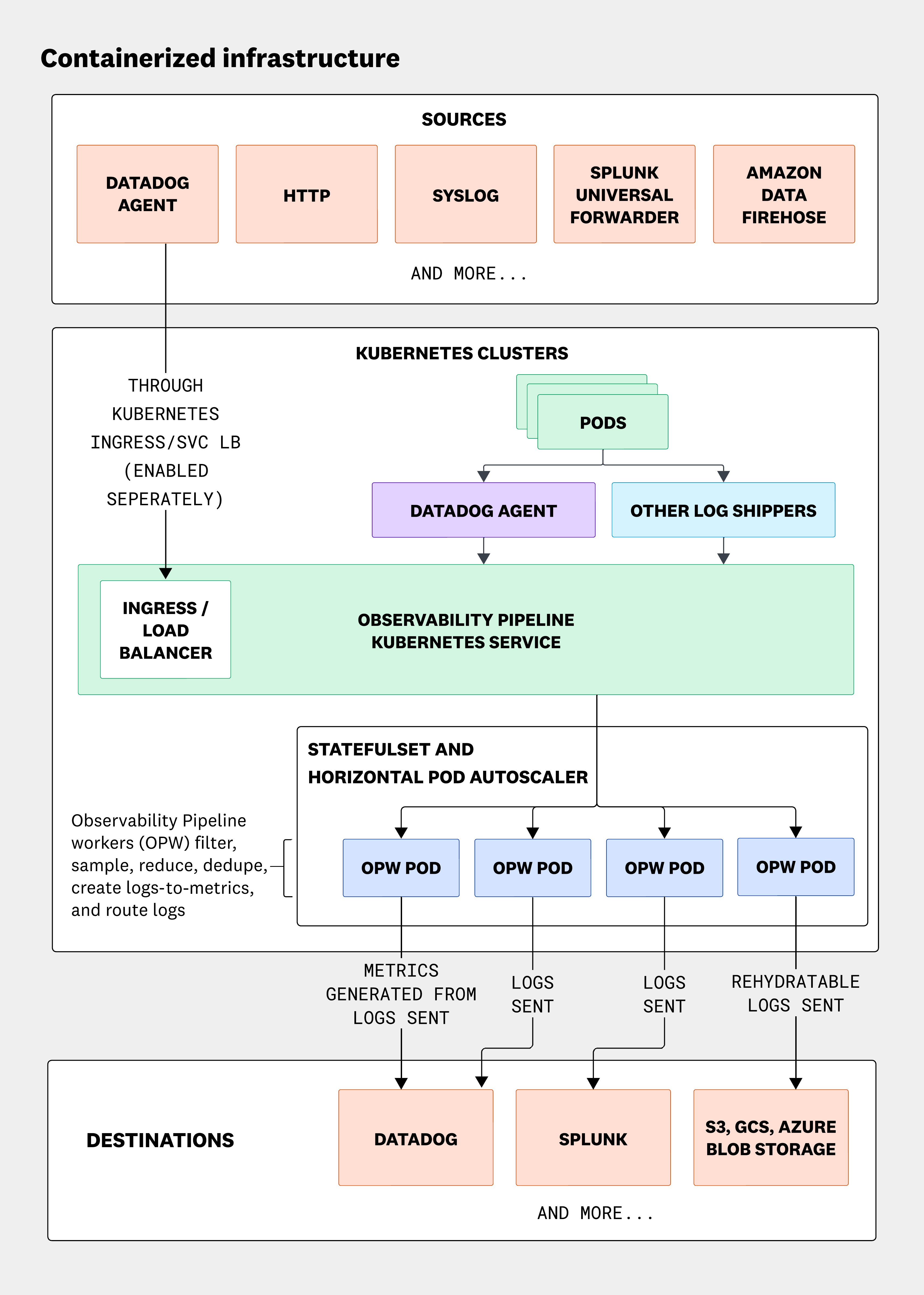

Observability Pipelines enables MSSPs to shift from per-endpoint agents to a central aggregation layer for all their log sources. Instead of installing heavy forwarding agents across every Windows server, Linux host, or network device, MSSPs can deploy VMs or containerized collectors per customer environment. Windows logs can be aggregated through tools like NXLog or Windows Event Forwarding; Linux environments can use rsyslog or syslog-ng; and network appliances can forward syslog directly. Every environment can use the same architectural pattern, significantly reducing overhead while increasing reliability.
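To make the central aggregation pattern more concrete, here is a minimal Python sketch of what a collector might do when a network appliance forwards syslog directly: decode the standard syslog priority value (facility × 8 + severity) and attach customer context at the point of collection. This is plain Python for illustration, not Observability Pipelines configuration. The function name `tag_syslog_event` and the `customer_id` and `source_type` fields are assumptions made for this example.

```python
import re

# Severity names defined by the syslog standard, indexed by numeric code.
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# Matches the "<PRI>" prefix that starts a syslog message.
PRI_PATTERN = re.compile(r"^<(\d{1,3})>(.*)$", re.DOTALL)


def tag_syslog_event(raw: str, customer_id: str, source_type: str) -> dict:
    """Decode the syslog priority and attach collector-level context.

    customer_id and source_type are hypothetical fields for this sketch.
    """
    event = {"customer_id": customer_id, "source_type": source_type, "message": raw}
    match = PRI_PATTERN.match(raw)
    if match:
        pri = int(match.group(1))
        if pri <= 191:  # highest valid value: facility 23, severity 7
            event["facility"] = pri // 8
            event["severity"] = SEVERITIES[pri % 8]
            event["message"] = match.group(2)
    return event


event = tag_syslog_event(
    "<34>Oct 11 22:14:15 fw01 sshd[411]: Failed password for root",
    customer_id="acme",
    source_type="firewall",
)
print(event["facility"], event["severity"])  # prints: 4 crit
```

Because the customer and source context is attached once, at the aggregation layer, every downstream rule can rely on those fields regardless of which device produced the log.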

Beyond migrating to modern SIEMs, security teams are also increasingly adopting data lakes that provide self-hosted storage and analytics capabilities at petabyte scale. By shipping high-volume logs to a data lake such as Datadog CloudPrem, MSSPs can ensure that enriched, normalized logs are available for fast investigation and querying. This approach lets teams troubleshoot by analyzing data that stays within the customer’s own environment.

Standardize, parse, and enrich logs for consistent security workflows

Before logs can support investigations, detections, or retention policies, they must be normalized into structured, interpretable formats. MSSPs serving hundreds of tenants need each customer’s logs to conform to a predictable taxonomy so that detection rules are consistent and security teams spend less time correcting malformed fields.

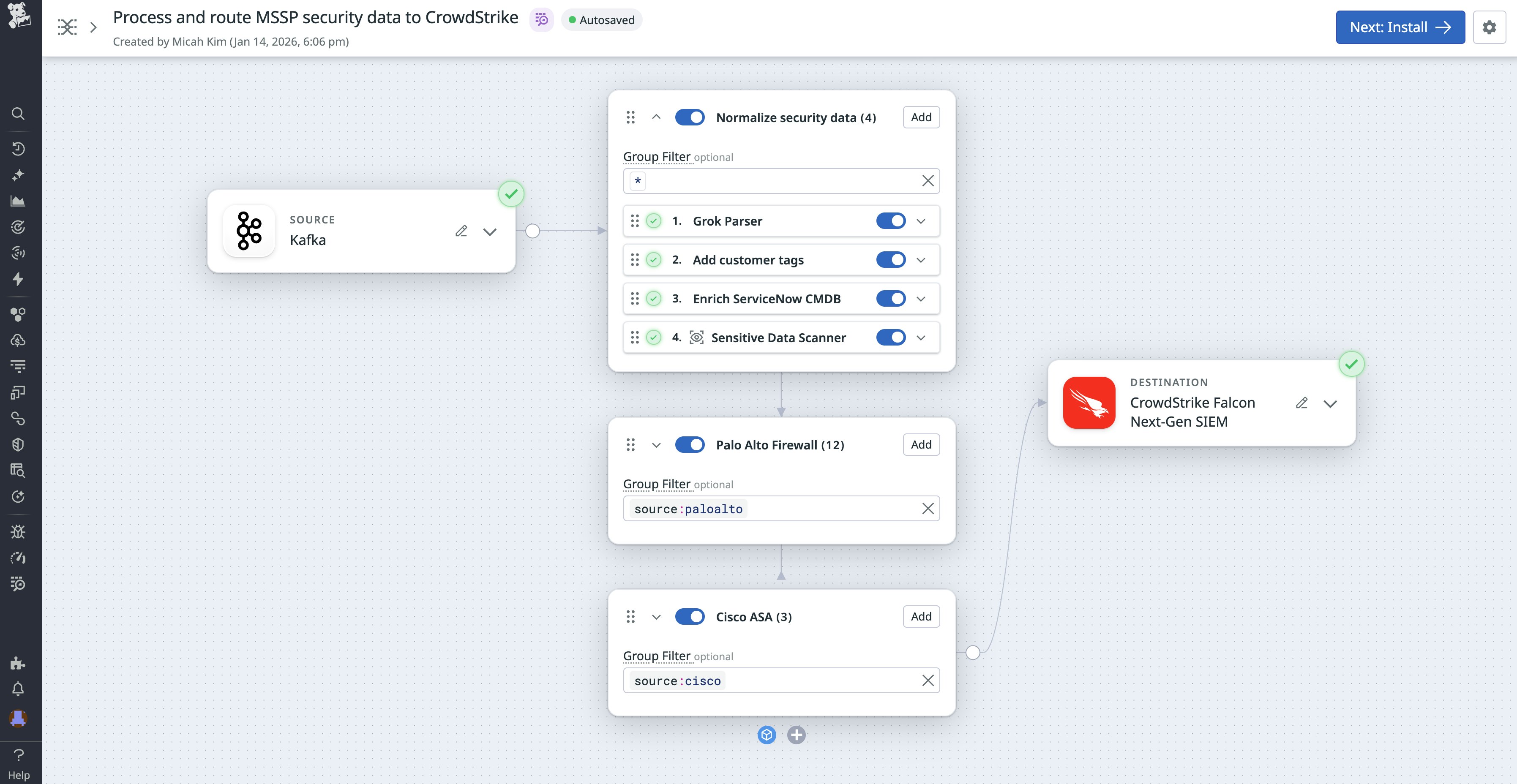

Observability Pipelines includes a broad suite of processors for parsing, standardizing, and enriching logs before they reach any SIEM or data lake. MSSPs can use Grok, JSON, or XML parsers to structure unformatted logs; apply hostname or service tagging to restore missing context; redact sensitive data with Sensitive Data Scanner; and enrich logs on-stream with SaaS-based Reference Tables from systems like ServiceNow CMDB, Snowflake, and S3 to add business context.
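The sequence described above (parse, tag, redact, enrich) can be sketched in a few lines of Python. This is an illustration of the processing steps only, not the Observability Pipelines processors themselves. The log layout, the `ASSET_TABLE` lookup (standing in for a reference table), and the field names are all assumptions for this example, and the email pattern is deliberately simplified.

```python
import re

# Hypothetical log layout: "<timestamp> <host> <service>: <message>"
LINE_PATTERN = re.compile(
    r"^(?P<timestamp>\S+) (?P<host>\S+) (?P<service>[\w\-]+): (?P<message>.*)$"
)

# Simplified pattern for email addresses; real scanners use broader rule sets.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Stand-in for a reference table keyed by hostname (illustrative data).
ASSET_TABLE = {"web-01": {"owner": "payments-team", "criticality": "high"}}


def process(raw: str, customer_id: str) -> dict:
    """Parse a raw line, tag it, redact emails, and add business context."""
    match = LINE_PATTERN.match(raw)
    if match is None:
        # Keep unparsed lines, but still redact and tag them.
        return {
            "customer_id": customer_id,
            "message": EMAIL_PATTERN.sub("[REDACTED_EMAIL]", raw),
            "parse_error": True,
        }
    event = match.groupdict()                      # parse into named fields
    event["customer_id"] = customer_id             # tag with tenant context
    event["message"] = EMAIL_PATTERN.sub("[REDACTED_EMAIL]", event["message"])
    event.update(ASSET_TABLE.get(event["host"], {}))  # enrich if host is known
    return event


result = process(
    "2024-05-01T12:00:00Z web-01 sshd: login failed for alice@example.com",
    customer_id="acme",
)
print(result["message"])  # prints: login failed for [REDACTED_EMAIL]
```

Running every tenant's logs through the same ordered steps is what yields a predictable taxonomy: detection rules can assume that `host`, `service`, and `customer_id` exist and that sensitive values were removed before the data left the pipeline.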

Support smooth migrations from legacy SIEMs to modern platforms

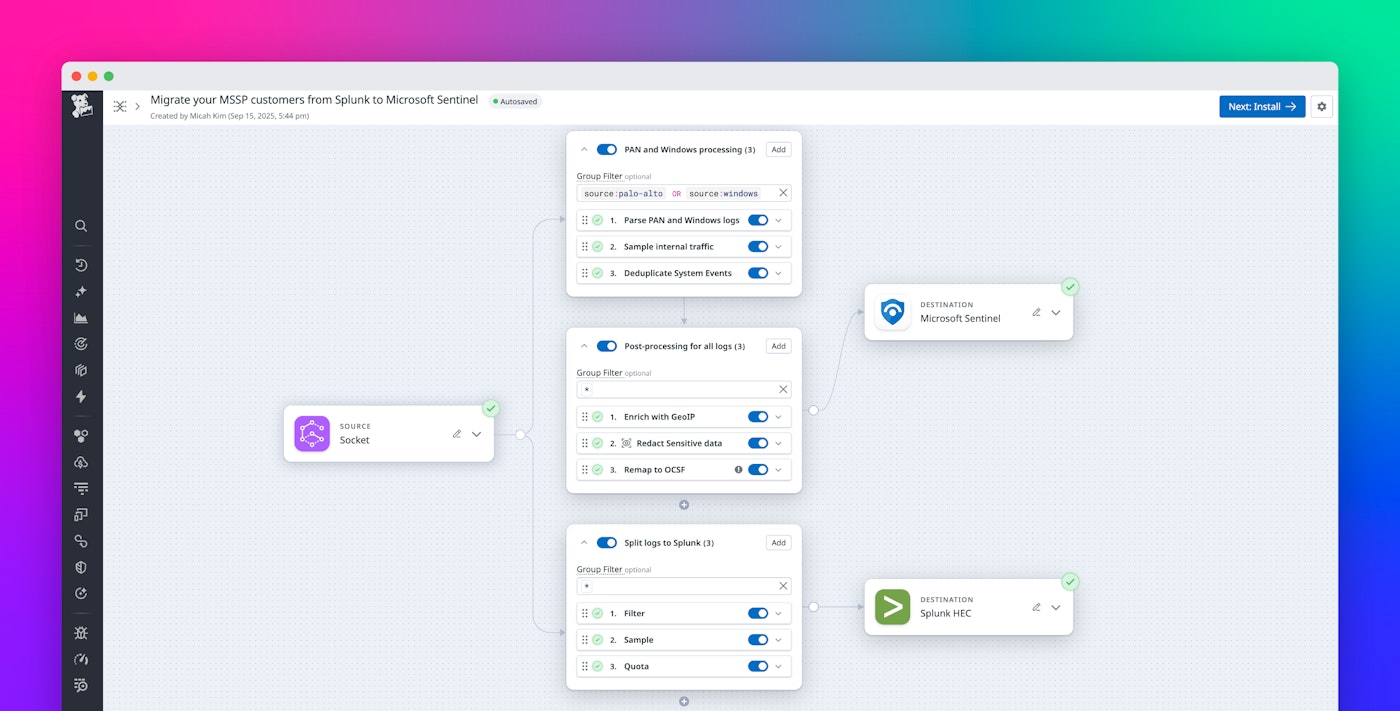

Many MSSPs maintain legacy SIEM deployments like Exabeam or Securonix while onboarding new customers onto a modern platform such as Google SecOps or CrowdStrike. Migrating all data for all customers simultaneously is rarely feasible, because detection rules need to be tailored, retention requirements vary, and SOC workflows need time to adapt. During this transition, MSSPs usually split data between the old and new SIEM platforms while validating and tuning the new environment.

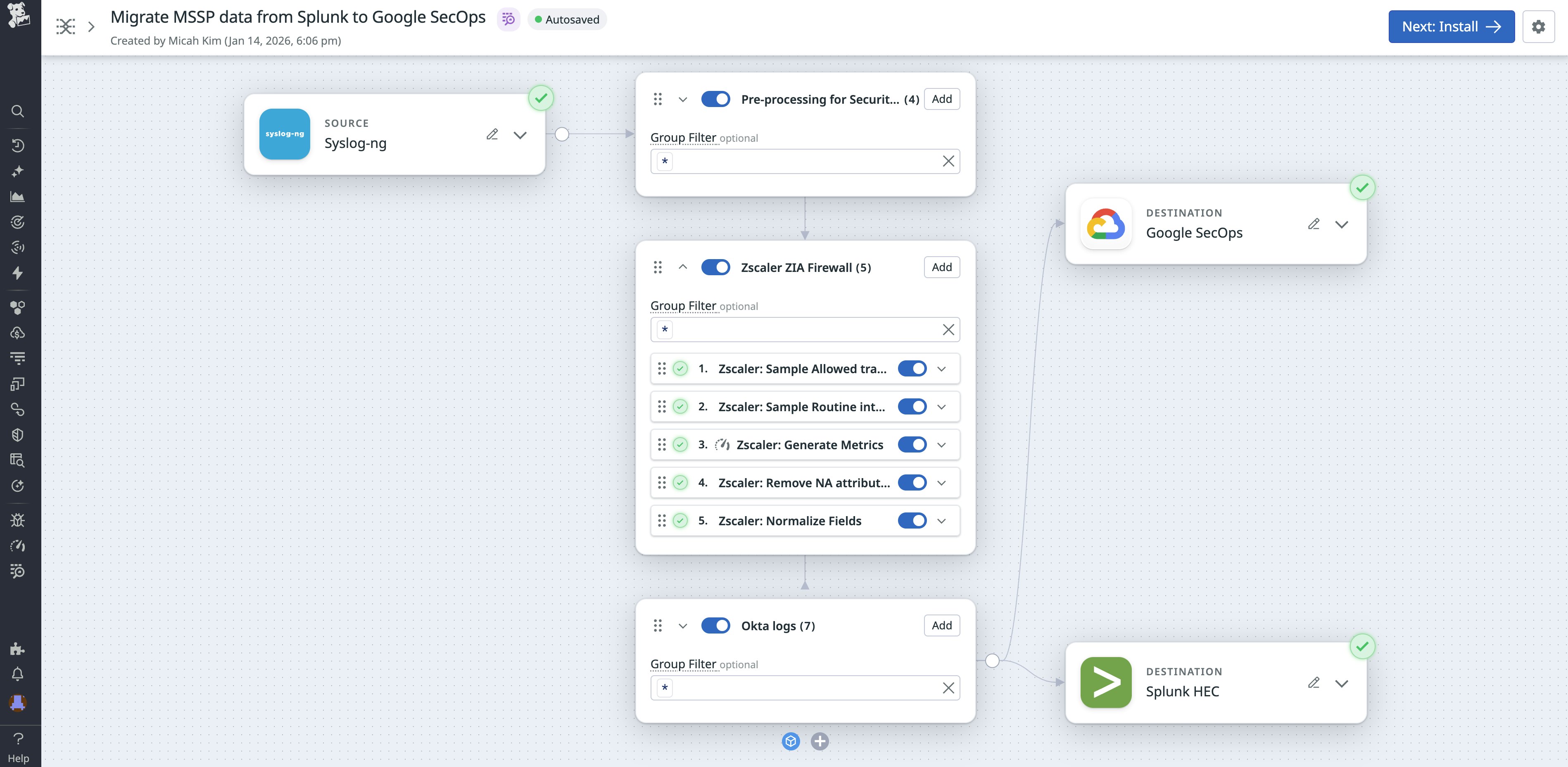

Observability Pipelines makes these migrations far more manageable by enabling dual-shipping and phased transitions. MSSPs can route logs to both their legacy SIEM and a modern SIEM from the same pipeline, without duplicating collection. As each customer is ready to migrate, the processing and routing rules can be updated in one place, with no endpoint reconfiguration required. This reduces the risk and operational burden of large-scale SIEM modernization projects and allows MSSPs to validate detections, retention strategies, and dashboards before cutover.

For example, if you’re leading a migration from Splunk to Google SecOps, you can configure Observability Pipelines to dual-ship logs to both destinations. This lets your teams start migrating scoped sets of data and detections from your legacy tooling to your preferred destination.
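The phased, per-customer routing described in this section can be pictured as a small lookup from migration phase to destinations. The Python sketch below is an illustration of that logic under assumed names. `Phase`, `CUSTOMER_PHASE`, and the destination labels `legacy_siem` and `modern_siem` are hypothetical and do not correspond to Observability Pipelines settings.

```python
from enum import Enum


class Phase(Enum):
    """Hypothetical migration phases for a customer."""
    LEGACY_ONLY = "legacy_only"
    DUAL_SHIP = "dual_ship"
    MIGRATED = "migrated"


# Which destinations receive logs in each phase (labels are placeholders).
DESTINATIONS = {
    Phase.LEGACY_ONLY: ["legacy_siem"],
    Phase.DUAL_SHIP: ["legacy_siem", "modern_siem"],
    Phase.MIGRATED: ["modern_siem"],
}

# Per-customer state, kept in one place so a cutover is a single change.
CUSTOMER_PHASE = {"acme": Phase.DUAL_SHIP, "globex": Phase.MIGRATED}


def route(event: dict) -> list[str]:
    """Return the destinations for an event based on its customer's phase."""
    # Customers without an entry stay on the legacy SIEM by default.
    phase = CUSTOMER_PHASE.get(event.get("customer_id"), Phase.LEGACY_ONLY)
    return list(DESTINATIONS[phase])


print(route({"customer_id": "acme"}))    # prints: ['legacy_siem', 'modern_siem']
print(route({"customer_id": "globex"}))  # prints: ['modern_siem']
```

Moving a customer from dual-shipping to full cutover means changing one entry in `CUSTOMER_PHASE`. Nothing on the customer's endpoints changes, which is the property that keeps phased migrations low risk.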

Move toward unified, scalable log operations for MSSPs

Observability Pipelines gives MSSPs a foundation for consistent, reliable, and scalable log collection across customer environments. By centralizing ingestion, supporting high-volume and diverse sources, enforcing consistent parsing and enrichment, and enabling smooth SIEM transitions, MSSPs can modernize their data aggregation, processing, and routing workflows without sacrificing control or increasing overhead.

To learn more about implementing Observability Pipelines, visit our documentation. And if you’re new to Datadog, sign up for a 14-day free trial.