Eric Metaj

Moving quickly is essential for modern engineering teams, but speed without guardrails can introduce hidden risks in testing. As organizations scale, teams often define and apply coverage standards inconsistently across services and repositories, so what qualifies as “acceptable coverage” in one project may be completely different in another. Without automated enforcement, untested code can slip through reviews. Over time, those gaps can surface as reliability issues that are hard to trace back to the changes that introduced them.

With Datadog Code Coverage, teams can move fast without breaking things across their codebases. Code Coverage surfaces test coverage insights directly in CI pipelines and pull requests (PRs), so platform engineers can enforce consistent testing standards across repositories. Instead of relying on manual checks, institutional knowledge, or one-off scripts, teams get automated controls that apply the same coverage criteria to every change before it’s merged. These controls evaluate both the overall coverage of a service and the coverage of the code changed in the current diff, and they report results directly in the PR so developers and reviewers can address gaps while the changes are still under review.

This post shows how Code Coverage puts those controls into practice through shared coverage thresholds, automated gates, and PR-native feedback that help teams maintain consistent testing standards as they scale.

Unified standards across teams and repositories

Most high-velocity organizations struggle to ensure that every team evaluates test coverage consistently. With decentralized tooling and diverse repositories, thresholds drift and testing expectations become unclear. Datadog Code Coverage reduces this ambiguity by letting teams standardize coverage criteria across all repositories, so that every service and team works from a unified definition of quality.

This consistency builds a shared understanding of what “good testing” looks like, regardless of tech stack, repository provider, or team structure.

Automated gates for untested code

Even when teams agree on testing standards, enforcing them is another challenge entirely. Manual reviews are slow and error-prone, and context switching between tools leads to oversights.

Coverage checks that aren’t automated can unintentionally allow untested code to be merged into main branches. Over time, ad hoc exceptions and local workarounds make it more difficult to apply coverage expectations consistently across services and repositories.

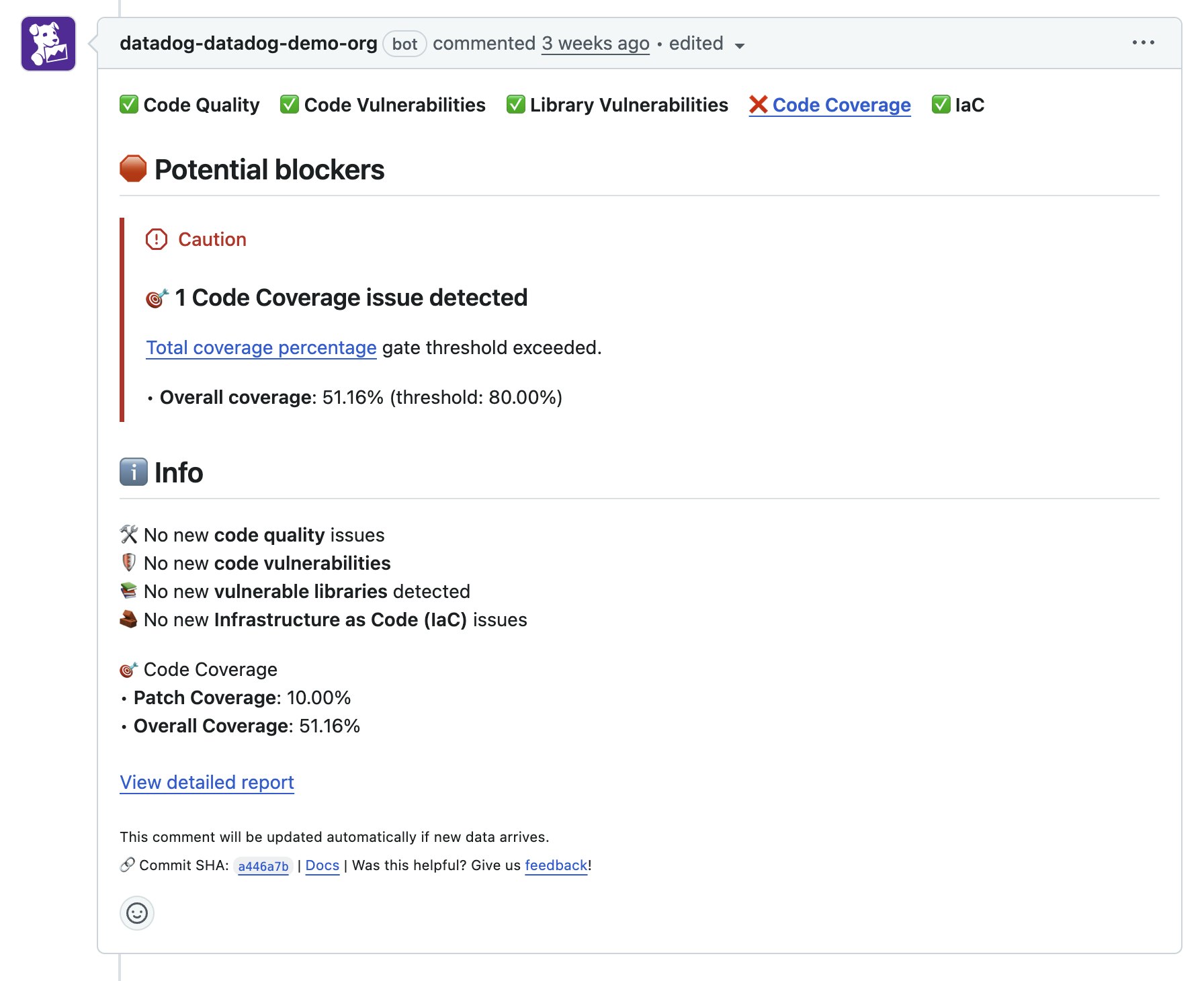

Datadog Code Coverage enables teams to apply quality gates for overall and patch coverage, automatically blocking merges that don’t meet defined thresholds. These PR gates consistently enforce testing standards on every pull request in every repository, without requiring manual review. Because the same rules apply wherever code is hosted, engineers work from a single definition of acceptable coverage rather than a patchwork of team conventions.
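To make the difference between overall and patch coverage concrete, here is a minimal Python sketch of how a gate might evaluate both, assuming line-level coverage data and a set of changed lines per file. The names (`FileCoverage`, `Thresholds`, `evaluate_gate`) and the 80% thresholds are hypothetical illustrations, not Datadog’s API or configuration format, and the product may compute these metrics differently.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical sketch: these types and functions are illustrative only and
# are not part of any Datadog API or configuration format.
@dataclass(frozen=True)
class FileCoverage:
    """Line-level coverage for one source file."""
    executable_lines: frozenset[int]  # lines a test could execute
    covered_lines: frozenset[int]     # lines executed by at least one test


@dataclass(frozen=True)
class Thresholds:
    """Assumed shared thresholds, in percent, applied to every repository."""
    overall_min: float
    patch_min: float


def percent(covered: int, total: int) -> float:
    # Assumption: a diff with no executable lines counts as fully covered,
    # so docs-only or config-only changes are not blocked.
    return 100.0 if total == 0 else 100.0 * covered / total


def overall_coverage(files: dict[str, FileCoverage]) -> float:
    """Coverage of the whole service: covered / executable lines across all files."""
    total = sum(len(f.executable_lines) for f in files.values())
    covered = sum(len(f.covered_lines & f.executable_lines) for f in files.values())
    return percent(covered, total)


def patch_coverage(files: dict[str, FileCoverage], changed: dict[str, set[int]]) -> float:
    """Coverage of only the executable lines touched by the diff."""
    total = covered = 0
    for path, lines in changed.items():
        fc = files.get(path)
        if fc is None:
            continue  # file has no coverage data (for example, a README)
        relevant = lines & fc.executable_lines
        total += len(relevant)
        covered += len(relevant & fc.covered_lines)
    return percent(covered, total)


def evaluate_gate(
    files: dict[str, FileCoverage],
    changed: dict[str, set[int]],
    thresholds: Thresholds,
) -> tuple[bool, list[str]]:
    """Return (passed, reasons); the merge would be blocked if passed is False."""
    failures: list[str] = []
    overall = overall_coverage(files)
    patch = patch_coverage(files, changed)
    if overall < thresholds.overall_min:
        failures.append(
            f"overall coverage {overall:.1f}% is below the {thresholds.overall_min:.1f}% threshold"
        )
    if patch < thresholds.patch_min:
        failures.append(
            f"patch coverage {patch:.1f}% is below the {thresholds.patch_min:.1f}% threshold"
        )
    return not failures, failures


if __name__ == "__main__":
    files = {
        "app/api.py": FileCoverage(frozenset(range(1, 11)), frozenset(range(1, 9))),
        "app/util.py": FileCoverage(frozenset(range(1, 11)), frozenset(range(1, 11))),
    }
    # The PR touches lines 7 to 10 of app/api.py; only 7 and 8 are covered.
    changed = {"app/api.py": {7, 8, 9, 10}, "README.md": {1, 2}}
    passed, reasons = evaluate_gate(files, changed, Thresholds(overall_min=80.0, patch_min=80.0))
    print(passed, reasons)
    # prints: False ['patch coverage 50.0% is below the 80.0% threshold']
```

In this example the service as a whole sits at 90% and clears the bar, but the new lines in `app/api.py` are only half covered, so a patch gate would block the merge even though overall coverage looks healthy. Counting only executable lines in the diff also means that edits to non-code files, such as the README here, neither raise nor lower patch coverage.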

By preventing untested changes from merging, teams protect their codebase from the reliability risks that coverage decay introduces. This reduces the chance that coverage gaps accumulate unnoticed, so teams can maintain development velocity while still meeting organizational testing standards.

Instant feedback where developers already work

Developers work more efficiently when they can see whether their code meets testing standards without leaving a pull request. Datadog surfaces coverage insights and gate results directly in pull request comments, giving engineers fast, clear feedback. When a PR fails a coverage gate, developers can quickly see what happened and why, which makes it easier to add the right tests before the code merges.

This tight feedback loop strengthens testing discipline and helps teams maintain momentum without slowing down development.

Faster delivery without sacrificing quality

Standardized thresholds, automated gating, and PR-native feedback in Code Coverage give teams a reliable and consistent way to enforce testing standards across services, teams, and repositories. This approach can support faster development with fewer surprises because every code change is evaluated using consistent rules, with clear feedback at the right moment.

With Code Coverage, teams no longer need to choose between speed and quality. They can move quickly and confidently without breaking things.

Datadog Code Coverage is now generally available. To learn more, read our documentation and blog post describing its capabilities. If you’re not already a Datadog customer, sign up for a free 14-day trial today.