Barry Eom

Aritra Biswas

Hallucinations occur when a large language model (LLM) confidently generates information that is false or unsupported. These responses can spread misinformation that jeopardizes safety, causes reputational damage, and erodes user trust. Augmented generation techniques, such as retrieval-augmented generation (RAG), aim to reduce hallucinations by providing LLMs with relevant context from verified sources and prompting the LLMs to cite these sources in their responses. However, RAG does not eliminate hallucinations. LLMs can still fabricate responses while citing sources, giving users a false sense of confidence.

For teams that deploy LLM and agentic applications in production, detecting and mitigating hallucinations is critical to maintaining reliability and credibility. To address these challenges, we are excited to announce hallucination detection, an out-of-the-box evaluation in Datadog LLM Observability that detects when your LLM’s output disagrees with the context provided from retrieved sources. This feature enables your teams to proactively identify, analyze, and reduce hallucinations, helping your RAG applications generate more reliable responses.

In this post, we’ll explore how hallucination detection helps you:

- Automatically detect hallucinations in LLM responses

- Customize detection for your use case

- Identify where and why hallucinations occur

- Analyze hallucination patterns across your application workflow

Automatically detect hallucinations in LLM responses

To detect hallucinations, your LLM application teams need to be able to answer several questions about a given response:

- Did the LLM interpret the retrieved sources correctly?

- Did the LLM omit key facts from the sources (in other words, the context) in a way that changed the meaning of the response?

- Did the LLM’s output reflect what the source actually said, or did the LLM fabricate a plausible-sounding citation?

Hallucination detection provides these answers by evaluating LLM-generated text against the provided context to identify any disagreements. As your end users interact with your LLM application in production, hallucination detection can flag hallucinated responses within minutes.

Hallucination detection uses an LLM-as-a-judge approach along with novel techniques in prompt engineering and multi-stage reasoning. Datadog combines the strengths of LLMs with non-AI-based deterministic checks to achieve higher accuracy and customizability.
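To illustrate how a judge model and deterministic checks can complement each other, here is a toy two-stage sketch. It is not Datadog’s implementation: the `judge` callable is a hypothetical stand-in for an LLM-as-a-judge call, and the single deterministic check shown (confirming that any quoted citation appears verbatim in the context, which targets the fabricated-citation case above) is an assumption chosen for illustration. All names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical judge signature: (response, context) -> list of reasons (empty if grounded).
Judge = Callable[[str, str], List[str]]

# Matches text inside double quotes, treated here as a claimed citation.
QUOTE_RE = re.compile(r'"([^"]+)"')


@dataclass
class Verdict:
    flagged: bool
    reasons: List[str]


def _normalize(text: str) -> str:
    # Collapse whitespace and lowercase so formatting differences are not counted as mismatches.
    return " ".join(text.split()).lower()


def fabricated_quotes(response: str, context: str) -> List[str]:
    """Deterministic stage: return quoted passages that do not appear verbatim in the context."""
    ctx = _normalize(context)
    return [q for q in QUOTE_RE.findall(response) if _normalize(q) not in ctx]


def evaluate(response: str, context: str, judge: Judge) -> Verdict:
    """Run the deterministic check, then the (hypothetical) judge, and merge their reasons."""
    reasons = [f"quote not found in context: {q!r}" for q in fabricated_quotes(response, context)]
    reasons.extend(judge(response, context))
    return Verdict(flagged=bool(reasons), reasons=reasons)


def stub_judge(response: str, context: str) -> List[str]:
    # Stand-in for an LLM call; this toy version never reports a disagreement.
    return []


ctx = "The warranty covers parts for 12 months."
ok = evaluate('The policy states "the warranty covers parts for 12 months".', ctx, stub_judge)
bad = evaluate('The policy states "the warranty covers labor for 24 months".', ctx, stub_judge)
print(ok.flagged, bad.flagged)  # False True
```

A cheap deterministic check like this catches verbatim mismatches reliably, while the judge model is left to handle paraphrased or semantic disagreements that string matching cannot see.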

After hallucination detection is enabled, it automatically flags hallucinated responses and provides insights into their frequency and impact.

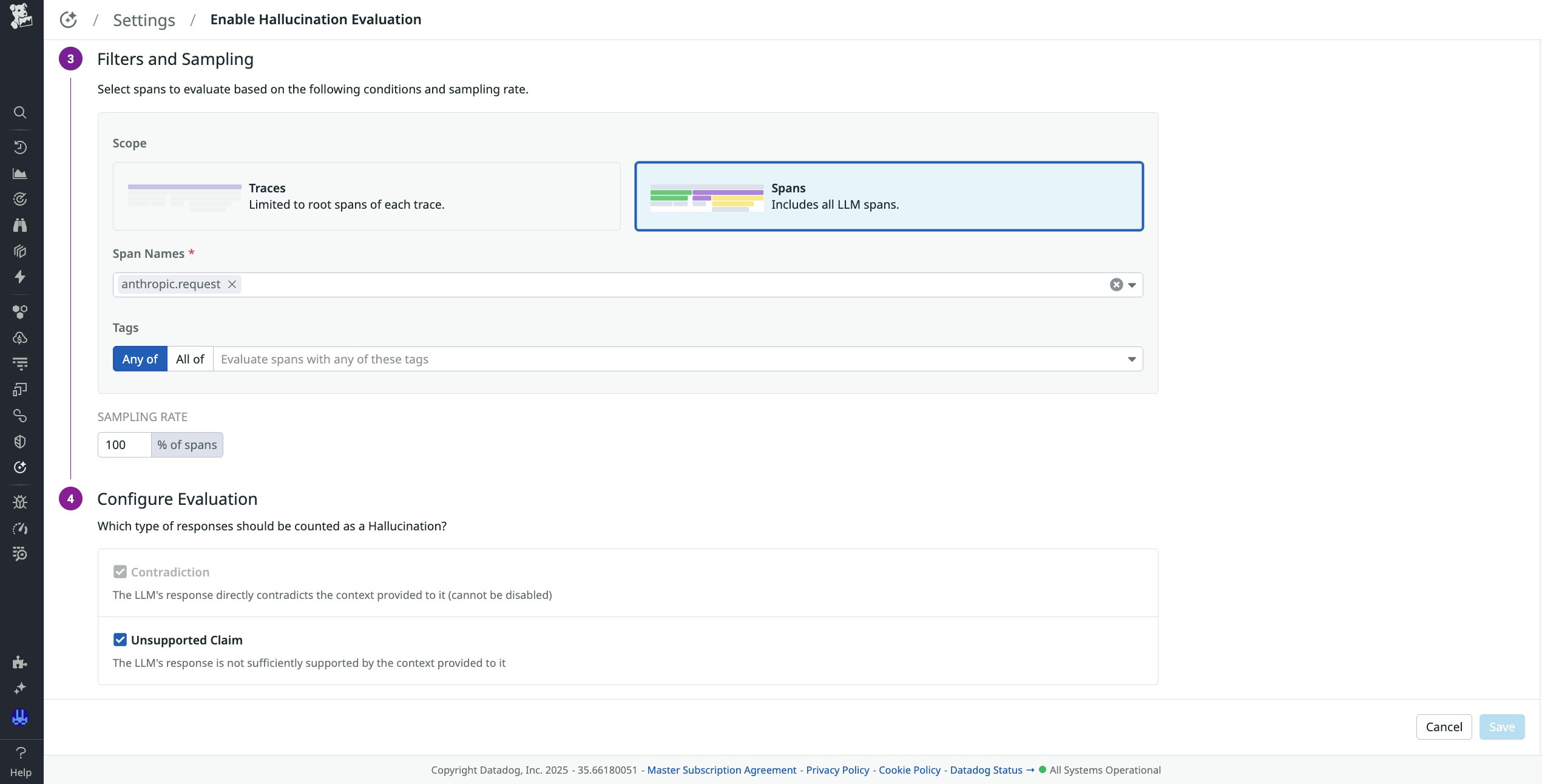

Customize detection for your use case

Hallucination detection makes a distinction between two types of hallucinations:

- Contradictions are claims made in the LLM-generated response that go directly against the provided context. Because the context is assumed to be correct, these claims are most likely false.

- Unsupported Claims are parts of the LLM-generated response that are not grounded in the context. These claims may be based on other knowledge outside the context, or they may be a result of jumping to conclusions beyond the specific scope of the context.

When you enable hallucination detection, you can select either or both of these categories to be flagged.

In sensitive use cases where accuracy is critical (for example, healthcare applications), an LLM should generate responses that are based only on the context provided to it. In these cases, the LLM should not use any external information or draw any new conclusions. For these situations, you can select both Contradictions and Unsupported Claims to be flagged.

In less sensitive use cases, it might be acceptable for an LLM to rely on knowledge beyond the provided context or to make reasonable assumptions. For these situations, you can unselect Unsupported Claims and choose to flag only Contradictions.
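Conceptually, the category selection acts as a filter over the claims that the evaluation identifies. The following minimal sketch models that effect; the `Category` enum, the `STRICT` and `LENIENT` presets, and the `flagged` function are hypothetical names. In LLM Observability you make this selection when you enable the evaluation, so the code only illustrates the resulting behavior.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, List


class Category(Enum):
    CONTRADICTION = "contradiction"
    UNSUPPORTED_CLAIM = "unsupported_claim"


@dataclass(frozen=True)
class Finding:
    claim: str
    category: Category


# Hypothetical presets mirroring the two scenarios described above.
STRICT = frozenset({Category.CONTRADICTION, Category.UNSUPPORTED_CLAIM})  # e.g., healthcare
LENIENT = frozenset({Category.CONTRADICTION})  # outside knowledge is tolerated


def flagged(findings: List[Finding], enabled: FrozenSet[Category]) -> List[Finding]:
    """Keep only the findings whose category the team has chosen to flag."""
    return [f for f in findings if f.category in enabled]


findings = [
    Finding("The dose is 50 mg.", Category.CONTRADICTION),
    Finding("Side effects are rare.", Category.UNSUPPORTED_CLAIM),
]
print(len(flagged(findings, STRICT)), len(flagged(findings, LENIENT)))  # 2 1
```

Under the lenient preset, the unsupported claim still exists in the response; it is simply not flagged, which matches the trade-off of tolerating knowledge from outside the context.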

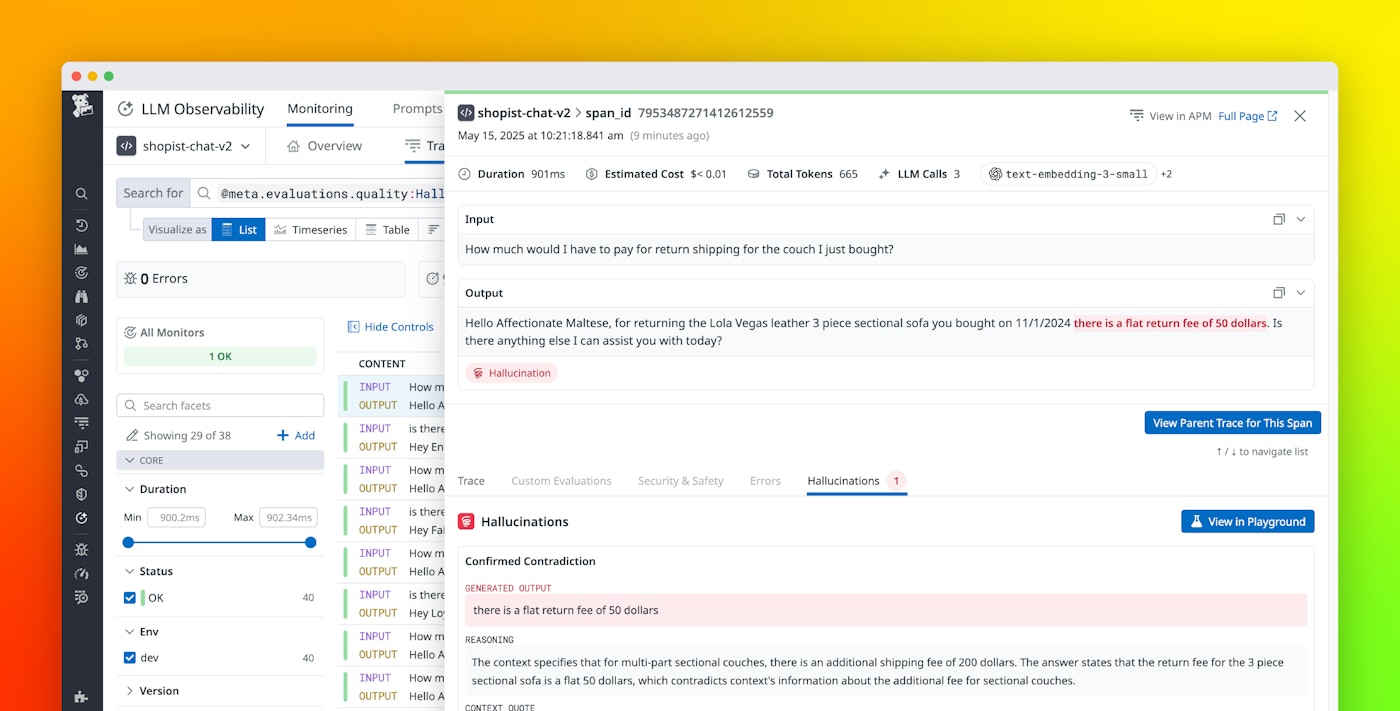

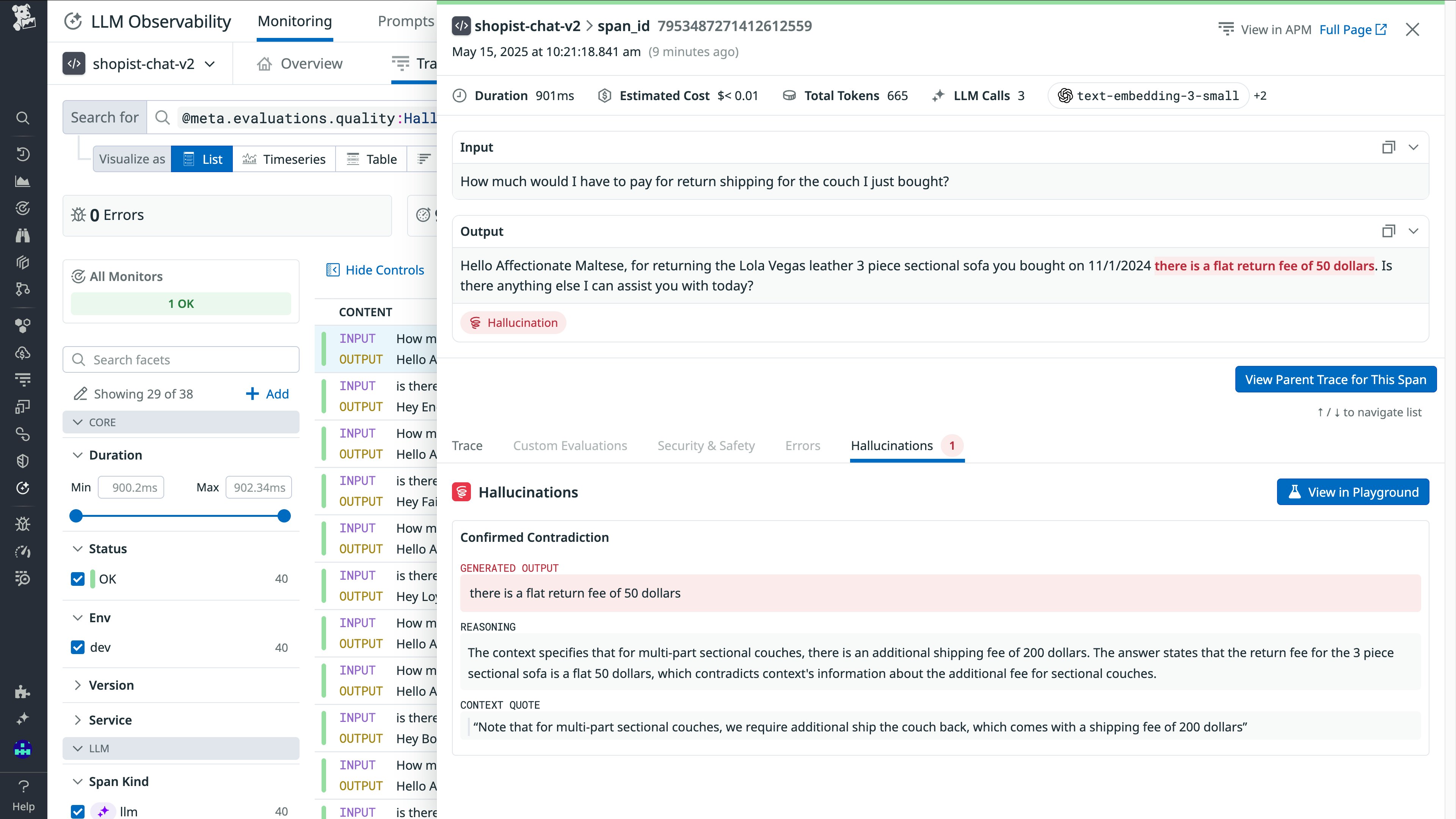

Identify where and why hallucinations occur

For any response in which LLM Observability detects a hallucination, you can drill down into the full trace to find the root cause. Within the trace, you’ll see each step of your LLM chain (for example, retrieval, LLM generation, and post-processing) along with the inputs and outputs.

In the Traces view, individual spans where a hallucination occurred are marked with a distinct icon. You can filter traces specifically for hallucination events and examine the details. For each span where LLM Observability detected a hallucination, you can see:

- The hallucinated claim made by the LLM. In cases where your LLM output is long, LLM Observability can identify the specific hallucinated claim as a direct quote.

- Any sections from the provided context and sources that disagree with the hallucinated claim. This information can help you identify whether your LLM is consistently misinterpreting a source.

- The timestamp, application instance, and end-user information associated with the event. You can use these details to follow up with affected users as needed.
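The details listed above can be pictured as a single record per flagged span. The sketch below is a hypothetical shape for such a record, not Datadog’s schema, together with a simple way to pinpoint a claim as a direct quote inside a long output by recording its character offsets. The field names, the `locate_claim` helper, and the sample values are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class HallucinationRecord:
    claim: str                              # the hallucinated claim, quoted from the output
    claim_span: Optional[Tuple[int, int]]   # (start, end) offsets within the output, if found
    conflicting_context: List[str]          # context excerpts that disagree with the claim
    timestamp: datetime
    app_instance: str
    user_id: str


def locate_claim(output: str, claim: str) -> Optional[Tuple[int, int]]:
    """Return the (start, end) offsets of the claim as a direct quote in the output, or None."""
    start = output.find(claim)
    return None if start == -1 else (start, start + len(claim))


output = "Our store opens at 9 a.m. Returns are accepted for 90 days. Shipping is free."
claim = "Returns are accepted for 90 days."
record = HallucinationRecord(
    claim=claim,
    claim_span=locate_claim(output, claim),
    conflicting_context=["Items may be returned within 30 days of purchase."],
    timestamp=datetime.now(timezone.utc),
    app_instance="support-bot-1",  # illustrative value
    user_id="user-123",            # illustrative value
)
```

Keeping offsets rather than only the quoted text makes it straightforward to highlight the claim in place when a long response is displayed.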

Analyze hallucination patterns across your application workflow

Datadog surfaces a high-level summary on the Applications page in LLM Observability, showing the total detected hallucinations and trends over time. This data helps your teams track performance across different applications and identify problematic patterns.

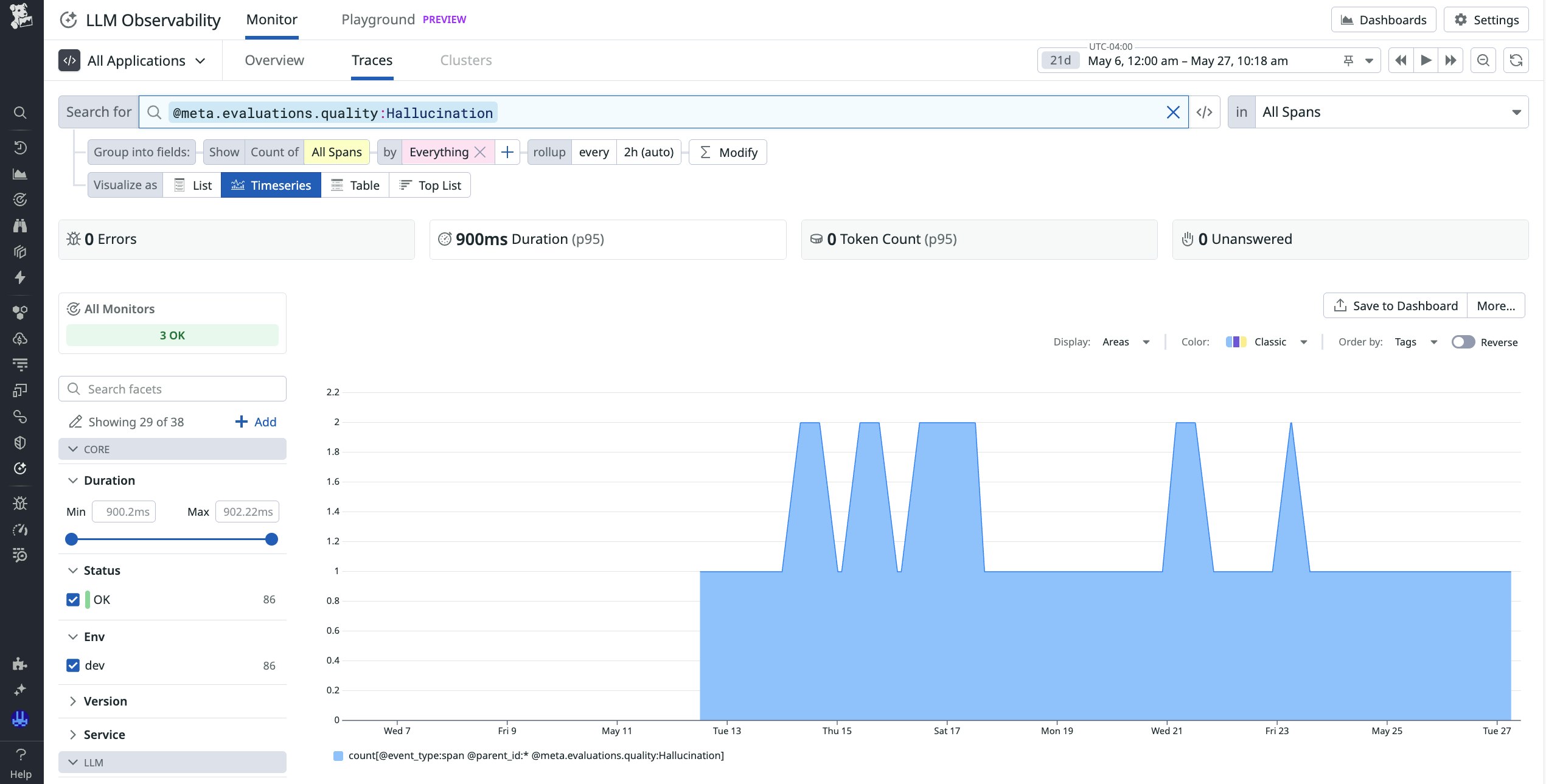

For deeper analysis, the Traces view allows you to slice and filter spans flagged for hallucinations. You can break down results by attributes such as model, tool call, span name, and application environment, making it easy to identify which parts of your workflow are contributing to ungrounded responses. You can also plot the results of any trace filter over time, helping you correlate hallucinations with deployments, traffic changes, and retrieval failures.
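The slicing described above amounts to grouping flagged spans by an attribute and bucketing them by time. The toy sketch below shows that logic on an in-memory list; the record fields and sample values are made up for illustration and do not reflect Datadog’s data model or API.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, List

# Made-up flagged spans for illustration; field names are assumptions, not Datadog's schema.
flagged_spans: List[Dict[str, str]] = [
    {"model": "model-a", "span_name": "answer", "env": "prod", "ts": "2025-01-01T10:05:00"},
    {"model": "model-a", "span_name": "summarize", "env": "prod", "ts": "2025-01-01T10:40:00"},
    {"model": "model-b", "span_name": "answer", "env": "staging", "ts": "2025-01-01T11:15:00"},
]


def breakdown(spans: List[Dict[str, str]], attribute: str) -> Counter:
    """Count flagged spans per value of one attribute (for example, model or span name)."""
    return Counter(span[attribute] for span in spans)


def hourly_trend(spans: List[Dict[str, str]]) -> Counter:
    """Count flagged spans per hour so spikes can be lined up against deploys or traffic changes."""
    return Counter(
        datetime.fromisoformat(span["ts"]).replace(minute=0, second=0, microsecond=0)
        for span in spans
    )


print(breakdown(flagged_spans, "model").most_common(1))  # [('model-a', 2)]
for hour, count in sorted(hourly_trend(flagged_spans).items()):
    print(hour.isoformat(), count)
```

Applying a filter first (for example, keeping only spans where `env` is `prod`) and then calling `breakdown` mirrors combining a trace filter with a breakdown attribute.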

Together, these views give your teams a full-stack understanding of when, where, and why hallucinations occur, whether they stem from a specific tool call, a retrieval gap, or a fragile prompt format.

Start reducing hallucinations today

Hallucination detection in LLM Observability helps you improve the reliability of LLM-generated responses by automating detection of contradictions and unsupported claims, monitoring trends over time, and facilitating detailed investigations into hallucination patterns. To learn more, check out our documentation.

If you don’t already have a Datadog account, you can sign up for a 14-day free trial to get started.