Aaron Kaplan

Harel Shein

Jonathan Morin

Data lineage is the evolutionary history of datasets. More concretely, lineage is metadata that captures the flow and transformation of data in data pipelines, also called the data lifecycle. (Data pipelines, broadly defined, are any sequential data collection, transformation, and delivery processes.) By describing where our data is coming from, where it is going, and what has happened to it along the way, lineage helps us understand the nature of our datasets, enabling us to ensure and troubleshoot data quality, security, and compliance. This visibility is essential to data engineering, governance, business intelligence, and analytics in general. And it is particularly critical given the complexity of modern data pipelines, which are often collaboratively managed by diverse teams with limited knowledge of each other’s operations.

In this post, we’ll cover the fundamentals of data lineage and its role in fostering healthy and secure data ecosystems. In the process, we’ll also cover the basics of OpenLineage, the open source, industry-standard framework for lineage collection.

The importance of lineage

By helping us better understand the abundance of data in our pipelines, lineage helps us foster healthy data ecosystems and strengthen our analytics. The ability to understand and track lineage plays a key role in improving reliability, facilitating data provenance and discovery, and ensuring compliance.

Improving reliability

Ultimately, the reliability of data pipelines comes down to the quality and availability of the data within them: Is the data you need readily accessible? Is it accurate and up to date? These are the basic questions of reliability—questions that many teams must answer on a continual basis.

When it comes to availability, the issue is timely, dependable throughput from data producers. By contrast, how data quality is measured will vary from organization to organization and from team to team. Generally speaking, data quality tests check for things like:

- Is the volume of our data what we expect it to be?

- Do the values in each column fall within expected ranges?

- Are our distributions correct?

- Do our schemas look right? Are there unexpected or missing columns?

- Are there higher-than-expected proportions of null values?
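
As a rough illustration, several of these checks can be expressed in a few lines of plain Python. This is a minimal sketch, not a reference to any particular data quality tool: the function name `run_quality_checks`, its parameters, and the sample thresholds are all hypothetical, and the distribution check is omitted because it depends heavily on the dataset.

```python
from typing import Any


def run_quality_checks(
    rows: list[dict[str, Any]],
    expected_columns: set[str],
    min_rows: int,
    ranges: dict[str, tuple[float, float]],
    max_null_fraction: float,
) -> list[str]:
    """Return human-readable failures; an empty list means every check passed."""
    failures: list[str] = []

    # Volume: is the row count what we expect?
    if len(rows) < min_rows:
        failures.append(f"volume: {len(rows)} rows, expected at least {min_rows}")

    # Schema: are there missing or unexpected columns?
    seen = set().union(*(row.keys() for row in rows))
    for col in sorted(expected_columns - seen):
        failures.append(f"schema: missing column {col!r}")
    for col in sorted(seen - expected_columns):
        failures.append(f"schema: unexpected column {col!r}")

    for col in sorted(expected_columns & seen):
        values = [row.get(col) for row in rows]

        # Nulls: is the proportion of null values higher than expected?
        nulls = sum(v is None for v in values)
        if rows and nulls / len(rows) > max_null_fraction:
            failures.append(f"nulls: {col!r} is {nulls / len(rows):.0%} null")

        # Ranges: do non-null values fall within the expected bounds?
        if col in ranges:
            low, high = ranges[col]
            bad = sum(1 for v in values if v is not None and not low <= v <= high)
            if bad:
                failures.append(f"range: {bad} value(s) in {col!r} outside [{low}, {high}]")

    return failures
```

A scheduler could run a function like this after each load and raise an alert whenever the returned list is non-empty.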

When the quality or availability of our data is compromised, we can use lineage to trace the issue to its causes upstream. For example, lineage can help data engineers conduct root cause analysis for pipeline health and performance issues: if you’re alerted to a job failure in your pipeline, you can use lineage to quickly trace it to its source, whether that’s an upstream schema change or faulty aggregation logic. Data analysts, likewise, can use lineage to verify the accuracy and freshness of their data. If a misconfiguration in your data platform’s ingest layer duplicates records and skews your metrics, lineage can help you find where the duplication was introduced.

Lineage also helps us stay ahead of such issues by tracking the flow of our data downstream. In other words, lineage enables effective impact analysis: it shows how changes we make to our datasets will affect downstream dependencies, so that we can prevent adverse effects on data consumers. This makes lineage essential to change management in pipelines at scale.
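
Both directions of analysis amount to walking a graph. The sketch below assumes lineage is available as a simple mapping from each job to its input and output datasets. The job and dataset names are hypothetical, and a real lineage backend would expose this through its own API.

```python
from collections import defaultdict, deque

# Hypothetical lineage: each job maps to (input datasets, output datasets).
JOBS = {
    "ingest_orders": (["raw.orders"], ["staging.orders"]),
    "dedupe_orders": (["staging.orders"], ["clean.orders"]),
    "ingest_customers": (["raw.customers"], ["clean.customers"]),
    "daily_revenue": (["clean.orders", "clean.customers"], ["bi.daily_revenue"]),
}


def build_edges(jobs):
    """Return (downstream, upstream) adjacency maps between datasets."""
    downstream, upstream = defaultdict(set), defaultdict(set)
    for inputs, outputs in jobs.values():
        for src in inputs:
            for dst in outputs:
                downstream[src].add(dst)
                upstream[dst].add(src)
    return downstream, upstream


def reachable(start, edges):
    """Breadth-first search: every dataset reachable from `start` via `edges`."""
    seen, queue = set(), deque([start])
    while queue:
        for nxt in edges.get(queue.popleft(), ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


downstream, upstream = build_edges(JOBS)

# Impact analysis: what could break if staging.orders changes?
print(sorted(reachable("staging.orders", downstream)))

# Root cause analysis: which datasets feed bi.daily_revenue?
print(sorted(reachable("bi.daily_revenue", upstream)))
```

The same traversal serves both purposes; only the direction of the edges changes.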

Facilitating data provenance and discovery

As data travels through pipelines, it transforms and proliferates. Data pipelines generally comprise many stages of filtering, merging, masking, and aggregation. By showing us where our data is coming from and where it has been—in other words, our data provenance—lineage helps us navigate the abundance of data in our pipelines, enabling us to ensure we are deriving the right data from the right layers for our purposes.

For example, let’s say you’re analyzing customer data for your organization and plan to share your findings with a third party. Perhaps your pipeline touches many different tables labeled “customers,” containing data that is produced and consumed for a wide variety of purposes—processed in varying ways, to varying degrees. You can use lineage to ensure that you’re using the right dataset—one that has been scrubbed of spam, artifacts of internal usage, and PII. This brings us to our next topic.

Ensuring compliance

Lineage is often essential to compliance with data regulations. For example, GDPR, HIPAA, BCBS 239, CCPA, and other regulations call for exhaustive records of when and where private data is collected, processed, and shared. Lineage can also be used to verify the correctness of financial records by documenting the end-to-end flow of transactions—facilitating FINRA or SEC audits, for example.

We have established why lineage is important. Let’s move on to the question of how lineage is collected and used in practice.

Lineage in practice

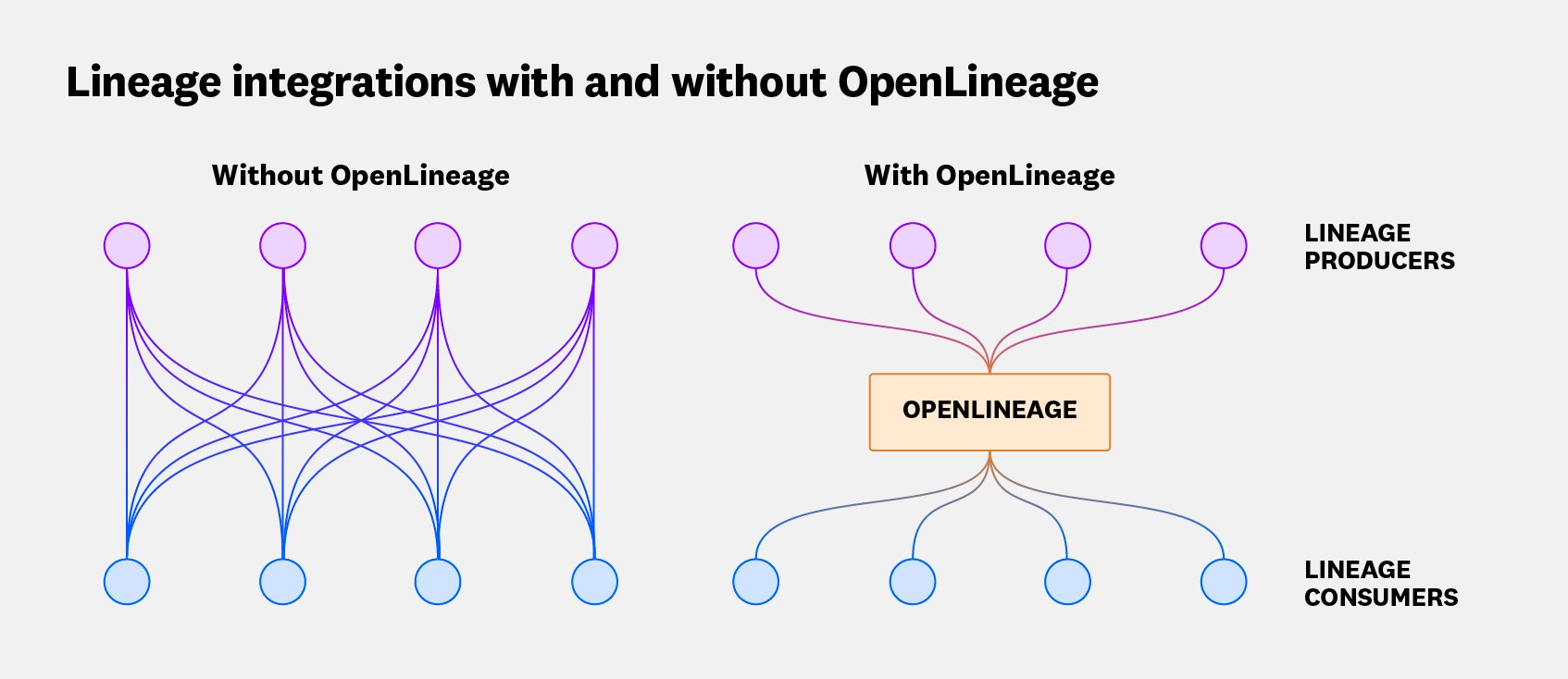

Historically, the process of collecting and correlating lineage metadata has presented major challenges. On top of the complexity of manually instrumenting pipelines and annotating lineage, there is another main problem: among the wide variety of tooling and storage types that comprise the modern data stack, there is no consistent standard for metadata. This has constrained interoperability and necessitated the ad hoc development and maintenance of highly brittle lineage solutions. These siloed projects have entailed the custom instrumentation of every possible type of data processing job, and the development of integrations that break frequently as data sources, schedulers, and processing frameworks change.

OpenLineage is an open source specification for data lineage that was introduced to address these problems. Since its creation in 2020, OpenLineage has become the industry standard for data lineage. Its extensibility enables consistent collection of lineage metadata across a growing number of platforms, with integrations for both producers (e.g., Airflow, Snowflake, BigQuery, Spark, Flink, dbt) and consumers (e.g., Datadog, Marquez) of lineage.

In order to illustrate how lineage works in practice, let’s examine the OpenLineage core model, which distills lineage to its essential components.

Key lineage metadata

The OpenLineage core model is based on a JSON Schema spec that defines three basic types of lineage metadata: datasets, jobs, and runs.

- Datasets are representations of data.

- Jobs are reusable data-processing workloads: repeatable, self-contained processes of reading and writing data, such as SQL queries, Spark or Flink jobs, or Python scripts.

- Runs are specific instances of jobs (at specific times, with specific parameters).

The core model uses consistent naming for jobs (scheduler.job.task, e.g., my_app.execute_insert_into_hive_table.mydb_mytable) and datasets (instance.schema.table, e.g., prod.mydb.mytable) in order to facilitate the stitching-together of lineage metadata from diverse sources.
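
To make the three types concrete, here is a rough sketch of how a run of a job might be described as JSON events, built with plain Python dictionaries rather than a client library. The field names reflect our reading of the OpenLineage run event structure and should be checked against the published spec. The producer URI, the job namespace, the source table, the split of the dataset name into `namespace` and `name`, and the spec version in `SCHEMA_URL` are all assumptions made for illustration.

```python
import json
import uuid
from datetime import datetime, timezone

PRODUCER = "https://example.com/lineage-demo"  # hypothetical producer URI
# Assumed spec version; check the published OpenLineage spec for the current one.
SCHEMA_URL = "https://openlineage.io/spec/1-0-5/OpenLineage.json#/definitions/RunEvent"


def run_event(event_type: str, run_id: str, job: dict, inputs: list, outputs: list) -> dict:
    """Build one event describing a state change of a single run of a job."""
    return {
        "eventType": event_type,  # e.g. START, COMPLETE, FAIL
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "producer": PRODUCER,
        "schemaURL": SCHEMA_URL,
        "run": {"runId": run_id},
        "job": job,
        "inputs": inputs,
        "outputs": outputs,
    }


# Job and dataset names follow the naming examples in the text above.
job = {
    "namespace": "my_namespace",  # hypothetical
    "name": "my_app.execute_insert_into_hive_table.mydb_mytable",
}
source = {"namespace": "prod", "name": "mydb.source_table"}  # hypothetical input
target = {"namespace": "prod", "name": "mydb.mytable"}

# One run of the job produces two events that share a runId.
run_id = str(uuid.uuid4())
start = run_event("START", run_id, job, [source], [target])
complete = run_event("COMPLETE", run_id, job, [source], [target])

print(json.dumps(complete, indent=2))
```

Because both events carry the same run ID, a consumer can pair them to reconstruct when the run started and finished, and which datasets it read and wrote.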

In the most general terms, data pipelines are systems in which jobs, in individual runs, consume datasets and produce new ones. In reality, of course, there’s a lot more to it. There are many other important types of lineage metadata, which is why OpenLineage makes its core specification extensible via facets: customizable pieces of metadata that can be attached to jobs, runs, or datasets. Facets enable users to adapt the core specification to their needs without requiring central approval. Facets may capture dataset schemas, stats such as column distributions or null-value counts, job schedules, query plans, and source code, among countless other types of metadata. The key metadata for streaming datasets such as Kafka topics, for example, will look very different from the key metadata for tables in data warehouses or folders in distributed file systems.
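
As a sketch of what attaching a facet might look like, the snippet below adds a schema facet to a dataset represented as a plain dictionary. The helper `with_facet`, the producer URI, and the column names are hypothetical. The `_producer` and `_schemaURL` keys and the `fields` layout reflect our understanding of the OpenLineage schema facet, and the facet version in the URL is an assumption to verify against the spec.

```python
import json

PRODUCER = "https://example.com/lineage-demo"  # hypothetical producer URI
# Assumed facet version; check the published OpenLineage facet definitions.
SCHEMA_FACET_URL = "https://openlineage.io/spec/facets/1-0-0/SchemaDatasetFacet.json"


def with_facet(entity: dict, facet_name: str, schema_url: str, body: dict) -> dict:
    """Return a copy of a job, run, or dataset dict with one facet attached."""
    facet = {"_producer": PRODUCER, "_schemaURL": schema_url, **body}
    facets = {**entity.get("facets", {}), facet_name: facet}
    return {**entity, "facets": facets}


table = {"namespace": "prod", "name": "mydb.mytable"}

# Attach a schema facet describing the table's columns (hypothetical columns).
table_with_schema = with_facet(
    table,
    "schema",
    SCHEMA_FACET_URL,
    {
        "fields": [
            {"name": "id", "type": "BIGINT"},
            {"name": "email", "type": "VARCHAR"},
        ]
    },
)

print(json.dumps(table_with_schema, indent=2))
```

The core dataset identity (`namespace` and `name`) is unchanged. The facet is additive, which is what lets different producers enrich the same dataset with different metadata.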

Lineage in a typical data platform

To illustrate lineage in action, let’s consider a fairly “typical” data platform. Data platforms generally comprise several layers, often including:

- An ingest layer, where data comes in.

- A storage layer, which might comprise a streaming tier as well as an archive or distributed file system tier.

- A compute layer, which might comprise a streaming compute tier and a batch/ML compute tier.

- A business intelligence layer.

Our (hypothetical) data platform looks like this:

Here, lineage data from every layer of our platform is collected, stored, and visualized with Datadog and with Marquez, the reference implementation of the OpenLineage specification. This example also allows us to see the architectural problem solved by OpenLineage.

OpenLineage turns lineage integrations between data pipeline components into a shared effort. Rather than depending on each other for lineage, all data consumers and producers can depend on OpenLineage.
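
One way to see the benefit is to count integrations. The back-of-the-envelope sketch below uses the producers and consumers named earlier in this post. It assumes, for simplicity, that without a shared standard every producer would need its own integration with every consumer; real deployments will vary.

```python
PRODUCERS = ["Airflow", "Snowflake", "BigQuery", "Spark", "Flink", "dbt"]
CONSUMERS = ["Datadog", "Marquez"]


def point_to_point(producers, consumers):
    """Each producer integrates separately with each consumer."""
    return len(producers) * len(consumers)


def shared_standard(producers, consumers):
    """Each producer and each consumer integrates once with the shared standard."""
    return len(producers) + len(consumers)


print(point_to_point(PRODUCERS, CONSUMERS))   # 12
print(shared_standard(PRODUCERS, CONSUMERS))  # 8
```

The gap widens as the stack grows, since the first count grows multiplicatively and the second additively.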

Towards greater data observability

In this post, we’ve examined the role of lineage in fostering the healthy data ecosystems that form the basis of successful analytics. We’ve also discussed the fundamental importance of lineage to data security and compliance. And we’ve demonstrated the critical visibility lineage provides into data pipelines—visibility that can help us ensure the reliability of our data, protect downstream dependencies, and quickly trace issues to their sources upstream. Finally, we’ve seen how the OpenLineage framework can simplify and improve the interoperability of cross-platform lineage.

Stay tuned for an in-depth exploration of how you can use Datadog to explore data lineage. In the meantime, we encourage you to learn more about OpenLineage, Datadog Data Observability, and using Data Streams Monitoring and Data Jobs Monitoring to observe the data lifecycle. If you’re new to Datadog, you can sign up for a 14-day free trial.