James Eastham

We recently released the 2025 State of Containers and Serverless report, which examines cloud usage data from tens of thousands of Datadog customers. The study shows adoption trends across container orchestration platforms and serverless offerings, and it explores how organizations use those resources to optimize workloads for efficiency, cost, and simplicity. We saw that organizations are beginning to adopt GPUs to enable AI-related workloads, changing the autoscaling tools they use, and evolving their compute architectures.

This post highlights key findings from the report and shows you how Datadog can help you apply those insights to your own environments. You’ll learn how you can:

- Expand your use of GPUs for data-intensive workloads

- Fine-tune autoscaling to improve efficiency

- Find the best compute model for each workload

- Evaluate Arm-based compute for efficiency gains

Use GPUs and other specialized compute for data-intensive workloads

Organizations are beginning to adopt GPUs to support data-intensive workloads like AI training and inference. Compared to general-purpose CPUs, GPUs deliver substantial performance and cost-efficiency gains, especially for AI workloads.

As AI continues to scale, it’s important to plan for greater demand on your GPUs, as well as other specialized compute resources such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs). Teams should maximize utilization of these resources by monitoring workload performance and adjusting allocations to minimize idle capacity. Even if your organization hasn’t yet adopted these technologies, familiarizing yourself with your cloud providers’ GPU, FPGA, and ASIC offerings now will help you move quickly when you need to deploy these resources.
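To make "idle capacity" concrete, here is a minimal sketch that summarizes GPU utilization samples. It assumes you have already exported per-GPU utilization percentages from your monitoring tool. The `GpuSamples` record, the function names, and the 10 percent idle threshold are illustrative assumptions, not part of any Datadog API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class GpuSamples:
    """Utilization samples (0-100) for one GPU; a hypothetical record shape."""
    name: str
    utilization_pct: list[float]


def idle_fraction(samples: list[float], idle_threshold_pct: float = 10.0) -> float:
    """Return the share of samples that fall below the (assumed) idle threshold."""
    if not samples:
        raise ValueError("need at least one utilization sample")
    idle_samples = sum(1 for s in samples if s < idle_threshold_pct)
    return idle_samples / len(samples)


def summarize(gpus: list[GpuSamples]) -> dict[str, dict[str, float]]:
    """Map each GPU name to its mean utilization and idle fraction."""
    return {
        gpu.name: {
            "mean_utilization_pct": mean(gpu.utilization_pct),
            "idle_fraction": idle_fraction(gpu.utilization_pct),
        }
        for gpu in gpus
    }


if __name__ == "__main__":
    # Made-up samples for two GPUs, purely for illustration.
    fleet = [
        GpuSamples("gpu-0", [0.0, 5.0, 50.0, 90.0]),
        GpuSamples("gpu-1", [80.0, 85.0, 90.0, 95.0]),
    ]
    for name, stats in summarize(fleet).items():
        print(name, stats)
```

A GPU with a high idle fraction but a healthy mean is likely bursty, so it may be a candidate for sharing or for scheduling additional jobs during its quiet periods.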

Monitor GPUs with Datadog

Datadog brings deep visibility into the performance and efficiency of your specialized compute environments. Integrations for NVIDIA and other GPU devices enable you to closely track memory usage, temperature, and active process counts to manage performance and utilization. And Datadog GPU Monitoring brings together observability data from your entire GPU fleet, from the health of a cluster all the way down to the utilization of individual GPU cores.

Datadog’s Containers view helps you analyze workloads running on specialized instances across Kubernetes, Amazon ECS, and other container platforms. You can use Application Performance Monitoring (APM) to correlate GPU resource usage with your workloads’ performance, highlighting underutilized workloads or inefficient code paths that inflate costs and degrade performance.

Fine-tune autoscaling to improve efficiency

It’s critical to ensure that your workloads scale effectively as demand fluctuates. Autoscaling is widely used in Kubernetes, but many clusters are still overprovisioned. While maintaining some headroom is essential to safeguard performance, effective autoscaling can improve efficiency by right-sizing resources.

To maximize the benefits of autoscaling, first evaluate available autoscaling tools and adopt the ones that align with your organization’s needs for flexibility, control, and simplicity.

Next, identify workloads that don’t yet use autoscaling and determine whether they could benefit from it. Some workloads may be better suited to serverless platforms, which provide autoscaling without configuration. For workloads running in clusters, configure autoscaling policies that use the most effective signals to trigger scaling—CPU or memory utilization, or custom application metrics like queue depth or request rate.
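To see how a scaling signal turns into a replica count, the sketch below applies the formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric ÷ target metric), with no change when the ratio is within a tolerance of 1.0. The function name, the default bounds, and the sample numbers are assumptions for illustration. The real controller also handles details such as unready pods and stabilization windows, which this sketch leaves out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA replica calculation for a single metric."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # CPU signal: 4 replicas averaging 90% utilization against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # Custom signal: 3 replicas with an average queue depth of 30 per pod
    # against a target of 10 per pod.
    print(desired_replicas(3, current_metric=30, target_metric=10, max_replicas=20))
```

The same calculation works for any signal, which is why choosing a metric that tracks real demand (such as queue depth for a worker pool) matters more than the formula itself.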

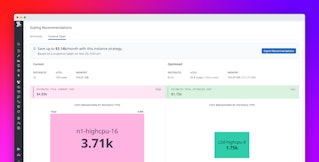

Right-size your cluster resources with Datadog Kubernetes Autoscaling

Datadog Kubernetes Autoscaling turns your real-time and historical telemetry data into intelligent scaling guidance for each workload. It recommends right-sizing for replicas and container resources, quantifies idle cost and potential savings to inform ROI, and lets teams apply changes manually or automate them as part of their workflows. Datadog makes it easy to correlate scaling events with node efficiency and resource trends so you can verify the impact of your autoscaling, maintain application performance, and optimize your cloud spending.
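As a rough illustration of what "idle cost" and "potential savings" mean, the sketch below does the arithmetic for a single container's CPU request. It is not how Datadog computes its recommendations. The function names, the per-core-hour cost, and the headroom factor are all hypothetical inputs you would replace with your own figures.

```python
def idle_cost(
    requested_cores: float,
    used_cores: float,
    cost_per_core_hour: float,
    hours: float,
) -> float:
    """Cost of requested CPU that went unused over the period."""
    idle_cores = max(requested_cores - used_cores, 0.0)
    return idle_cores * cost_per_core_hour * hours


def right_sized_request(peak_used_cores: float, headroom: float = 0.2) -> float:
    """Peak usage plus a safety margin (headroom is an assumed fraction)."""
    return peak_used_cores * (1.0 + headroom)


def potential_savings(
    requested_cores: float,
    peak_used_cores: float,
    cost_per_core_hour: float,
    hours: float,
    headroom: float = 0.2,
) -> float:
    """Cost recovered by lowering the request to the right-sized value."""
    new_request = right_sized_request(peak_used_cores, headroom)
    reclaimable_cores = max(requested_cores - new_request, 0.0)
    return reclaimable_cores * cost_per_core_hour * hours


if __name__ == "__main__":
    # Illustrative numbers only: 4 cores requested, 1 core used on average,
    # 2 cores at peak, at a made-up cost of 0.5 per core-hour over 10 hours.
    print(idle_cost(4.0, 1.0, 0.5, 10.0))
    print(potential_savings(4.0, 2.0, 0.5, 10.0, headroom=0.5))
```

Note that potential savings are smaller than idle cost because the right-sized request keeps headroom above peak usage.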

Find the best compute model for each workload

Cloud providers now offer a broad range of compute services, allowing teams to run each workload on the platform that best matches its needs. Rather than adhering to a single compute model, many organizations combine multiple services to create hybrid environments that balance performance, cost efficiency, and operational simplicity.

Depending on the needs of each workload, you might run some on serverless functions, others on managed container platforms, and still others in self-managed Kubernetes clusters. Serverless platforms such as AWS Lambda, Google Cloud Run, and Azure Functions can be ideal for event-driven or spiky workloads that require rapid scaling and benefit from pay-per-use pricing. Managed container platforms like Amazon ECS on AWS Fargate and Azure Container Apps are better suited for long-running or infrastructure-intensive applications. These managed platforms handle much of the infrastructure automatically and require minimal operational overhead. Managing your own Kubernetes clusters offers greater flexibility and control but demands significantly more effort to operate and maintain.

You should evaluate each workload to determine its optimal placement and select the environment that provides the best combination of efficiency, reliability, cost, and simplicity. If your organization has historically used only one or a few services, identify and reevaluate any policies or ingrained practices that limit workload placement.
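One input to that evaluation is a simple cost comparison between pay-per-use and always-on pricing. The sketch below assumes a serverless bill made up of a per-request fee plus a charge per GB-second of execution, compared against a flat monthly cost for an always-on service. All prices and function names here are placeholders, not any provider's actual rates, and the comparison ignores factors such as cold starts and operational overhead.

```python
def serverless_monthly_cost(
    requests: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate a monthly pay-per-use bill from request volume and duration."""
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    request_cost = requests / 1_000_000 * price_per_million_requests
    return compute_cost + request_cost


def cheaper_model(serverless_cost: float, always_on_cost: float) -> str:
    """Name the cheaper option; ties go to the always-on service."""
    return "serverless" if serverless_cost < always_on_cost else "always-on"


if __name__ == "__main__":
    always_on = 30.0  # placeholder flat monthly cost
    for monthly_requests in (1_000_000, 10_000_000):
        cost = serverless_monthly_cost(
            requests=monthly_requests,
            avg_duration_s=0.5,
            memory_gb=1.0,
            price_per_gb_second=0.00002,      # placeholder rate
            price_per_million_requests=0.2,   # placeholder rate
        )
        print(monthly_requests, round(cost, 2), cheaper_model(cost, always_on))
```

With these placeholder rates, the low-volume workload is cheaper on pay-per-use pricing and the high-volume one is cheaper on the always-on service, which is the kind of crossover worth checking for each workload.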

Evaluate efficiency across environments with Datadog

Datadog gives you the visibility you need to determine whether each workload is running in the most efficient environment. You can easily track CPU and memory utilization for both containers and serverless functions to identify when a workload is overprovisioned or mismatched to its current environment. Serverless Monitoring surfaces each function’s invocation patterns, cold-start durations, and request latency, and Cloud Cost Management (CCM) helps you track each workload’s cost efficiency.

When you identify a workload that might perform better elsewhere—for example, a containerized service that could move to serverless functions to better handle spiky traffic—you can use custom dashboards to compare performance metrics and track migration results. Datadog Notebooks can help your team form a migration plan. With Notebooks, you can collect metrics, traces, and logs in one place, document findings, and align on next steps for workload optimization.

Evaluate Arm-based compute for efficiency gains

While GPUs are powering data-intensive workloads, many teams are also finding efficiency gains by migrating CPU-based workloads to Arm. Workloads running on Arm-based compute architectures can achieve better price-performance across both Lambda functions and cloud instances. This cost efficiency makes Arm an attractive option for teams seeking to optimize costs without sacrificing the performance of their workloads.

Arm’s high core density and energy-efficient design make it well-suited for serving workloads that demand low latency or high throughput. To evaluate Arm’s advantages for your own environment, begin by testing key workloads on Arm to assess performance, compatibility, and potential cost savings. Then track adoption over time to verify that each migration yields meaningful efficiency gains.
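When you run those tests, one way to compare architectures is cost per million requests, which combines instance price and measured throughput into a single price-performance number. The sketch below is a minimal example under that assumption. The function names, hourly prices, and throughput figures are hypothetical and stand in for your own benchmark results.

```python
def cost_per_million_requests(hourly_price: float, requests_per_second: float) -> float:
    """Convert an hourly instance price and sustained throughput to a unit cost."""
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    requests_per_hour = requests_per_second * 3600
    return hourly_price / requests_per_hour * 1_000_000


def relative_savings(baseline_unit_cost: float, candidate_unit_cost: float) -> float:
    """Fraction saved per request by moving from the baseline to the candidate."""
    return 1.0 - candidate_unit_cost / baseline_unit_cost


if __name__ == "__main__":
    # Hypothetical benchmark: same throughput, lower hourly price on Arm.
    x86_cost = cost_per_million_requests(hourly_price=0.36, requests_per_second=100)
    arm_cost = cost_per_million_requests(hourly_price=0.288, requests_per_second=100)
    print(round(x86_cost, 3), round(arm_cost, 3))
    print(f"{relative_savings(x86_cost, arm_cost):.0%} lower cost per request")
```

Because the metric includes throughput, it also captures cases where an architecture is cheaper per hour but slower for a given workload.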

Use Datadog to identify workloads to migrate to Arm

Datadog provides the visibility you need to identify and monitor workloads running on Arm-based compute. You can easily filter Amazon EC2 instances and Lambda functions by architecture, selecting arm64 to surface Arm-based workloads or x86_64 to find migration opportunities.

Use CCM to compare cost and utilization across architectures and validate the price-performance impact of your migrations. Finally, use dashboards to visualize your organization’s Arm adoption over time and Notebooks to document results and collaborate on further optimization strategies.

Turn container and serverless insights into action with Datadog

The 2025 State of Containers and Serverless report shows how organizations are continuing to evolve their infrastructure strategies by adopting the compute models, autoscaling tools, and architectures that balance performance, cost, and simplicity. From aligning workloads with the right compute model to fine-tuning autoscaling, expanding their use of GPUs, and exploring the use of Arm-based instances, teams are optimizing for efficiency across every layer of the stack.

Datadog helps you turn these insights into action. With unified visibility across serverless, containerized, and specialized compute environments, you can spot workloads that would benefit from a new compute model, monitor their performance and cost impact, and continuously refine your compute strategy for efficiency and scale.

See our documentation to learn more about using Datadog to track autoscaling and measure workload efficiency. And if you’re new to Datadog, sign up for a 14-day free trial to start optimizing your compute environment today.