Daniel Shan

Tristan Ratchford

We built Bits AI SRE to help engineers investigate and solve production incidents, one of the most difficult aspects of operating distributed systems today. As environments grow more dynamic and complex, resolving issues becomes more challenging. Failures now span more services, involve noisier signals, and encompass larger volumes of telemetry data, making it hard for on-call engineers to find root causes quickly. Bits AI SRE is already helping teams decrease time to resolution by up to 95%.

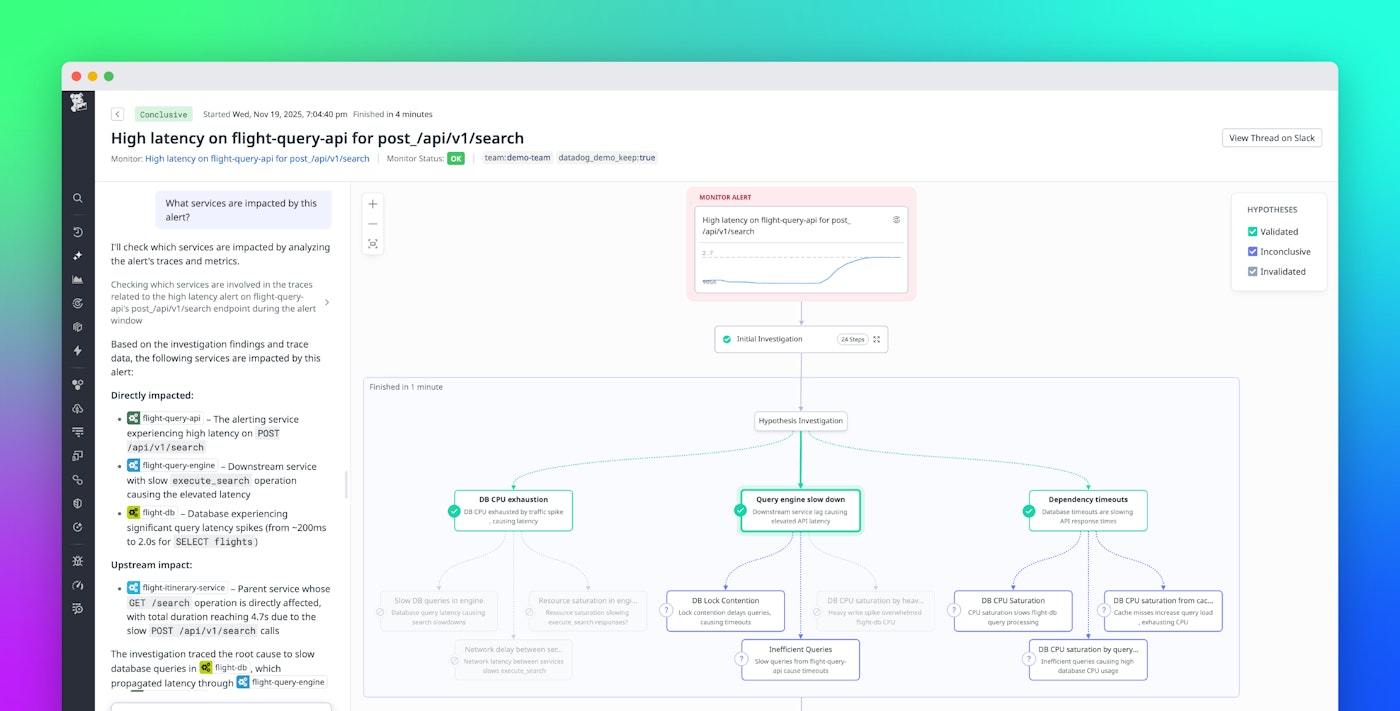

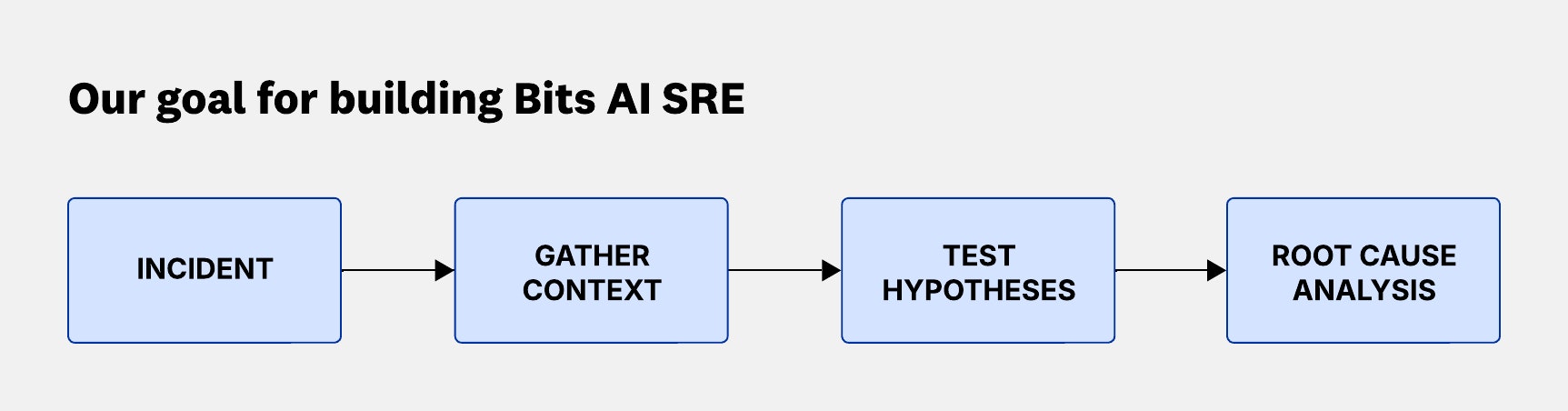

Bits AI SRE is our new agent that automatically investigates incidents and monitor alerts, reasoning over complex telemetry data and producing audit-ready root cause analyses in minutes. Behind the scenes, it mimics the way human SREs think: it forms hypotheses, tests them against live telemetry data, and follows promising evidence to a root cause.

In this post, we’ll show how we evaluate Bits AI SRE against real-world data, share performance results, and highlight aspects of this agent’s design.

Benchmarked on real incidents

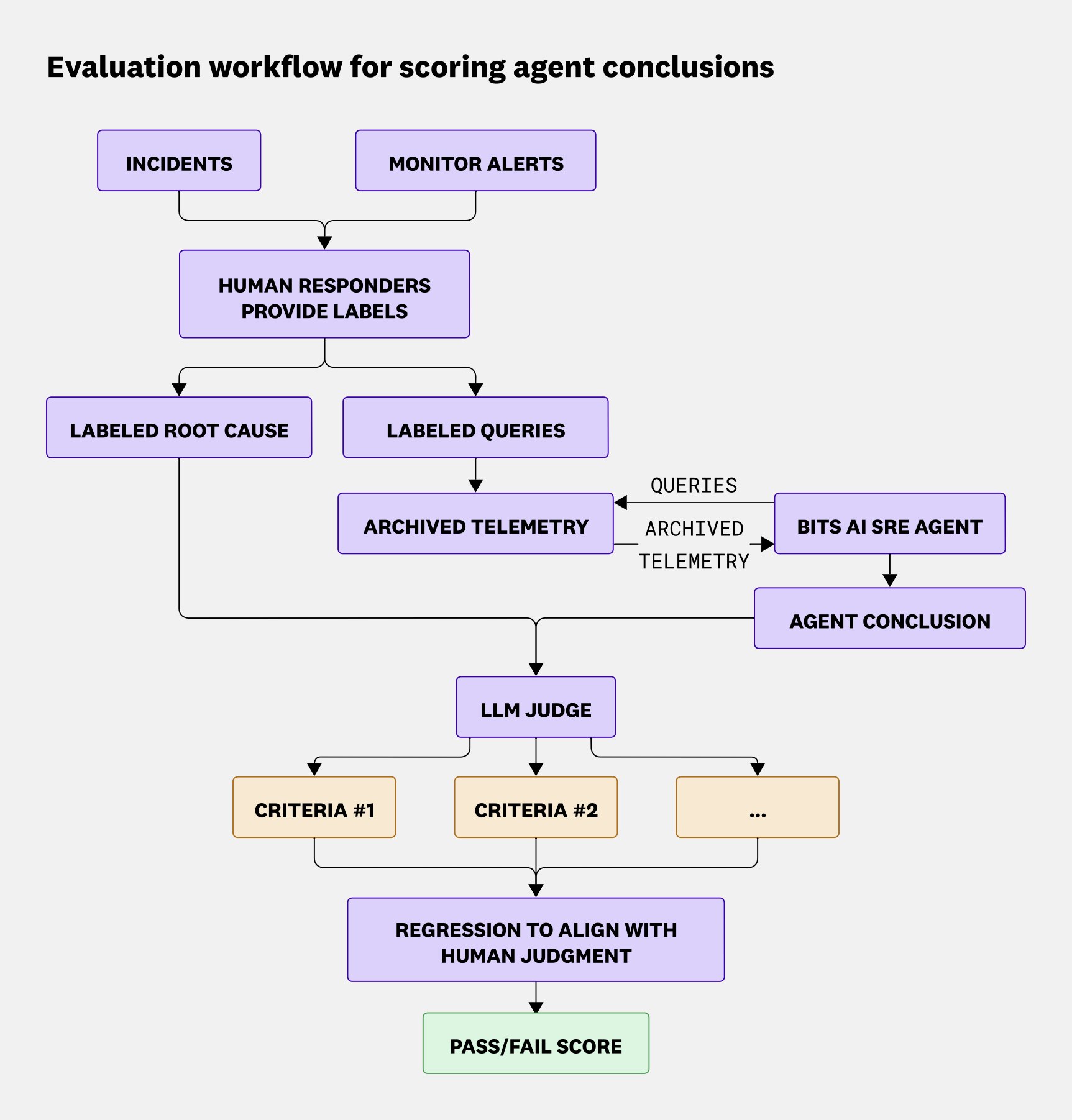

Evaluating against real incidents is fundamental to building an effective AI SRE agent. It is the most reliable way to measure meaningful progress and to ensure that an agent can generalize to the complexity of real-world environments. With the largest dataset of production telemetry data in the industry, Datadog is uniquely positioned to do this well.

We worked across hundreds of teams at Datadog to collect and label real incidents and used them to create a benchmark dataset of test scenarios. We evaluate Bits AI SRE’s performance by scoring its output against each scenario’s ground truth label.

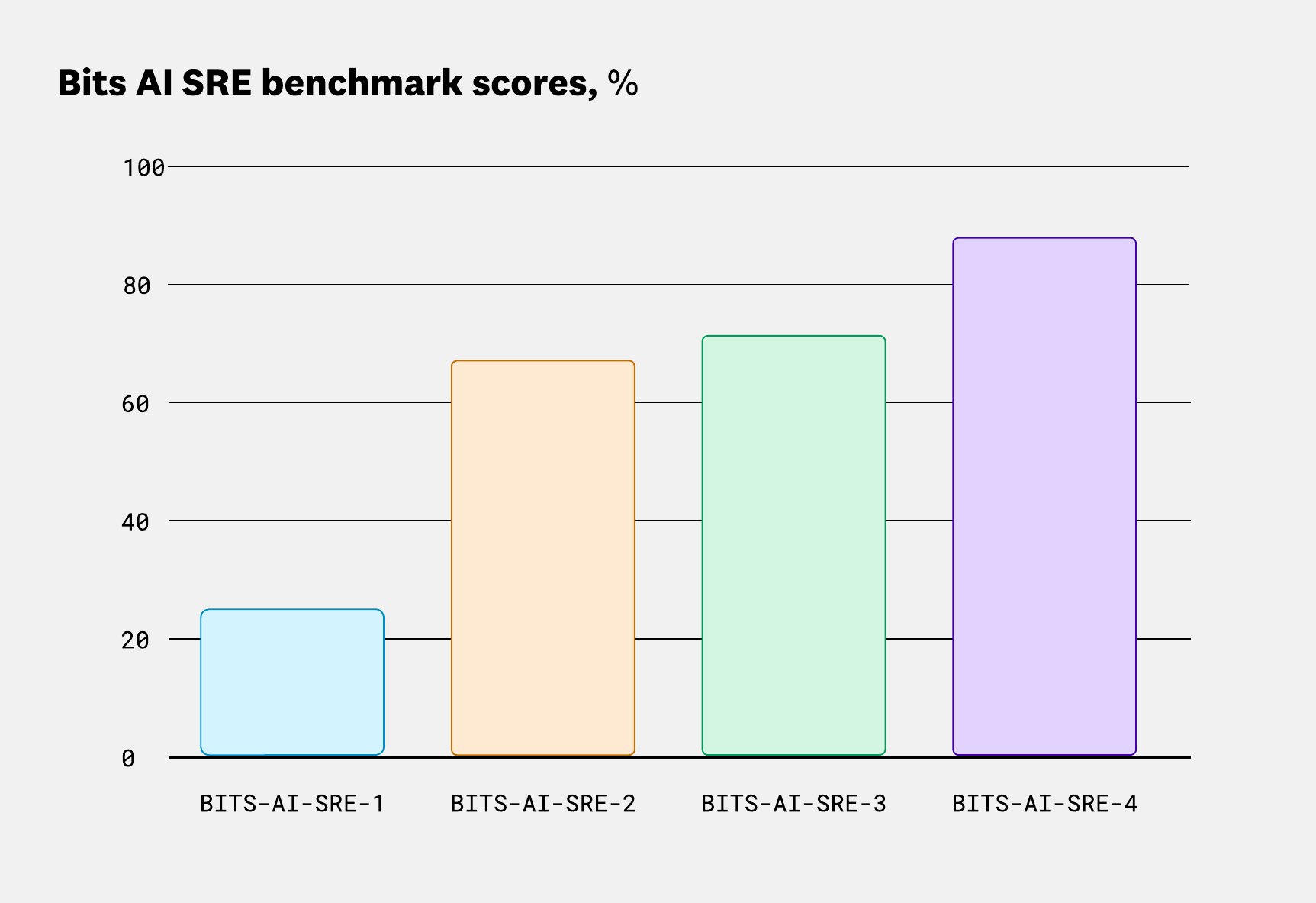

We use this benchmark to regularly measure our agent’s performance and improve the agent over time. Our agent’s capabilities have significantly improved over the past year, and we expect them to get even better as we continue to build.
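To make the scoring step concrete, here is a minimal sketch of a benchmark harness. It is not Datadog's evaluation code: the `Scenario` schema, the `run_benchmark` function, and the `exact_match` scorer are all hypothetical names chosen for illustration, and a real scorer for free-form root cause analyses would need to be far more forgiving than a string comparison.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Scenario:
    """One labeled incident (hypothetical schema, not Datadog's)."""
    scenario_id: str
    alert: str
    ground_truth: str  # the labeled root cause


def exact_match(predicted: str, expected: str) -> float:
    """Placeholder scorer: 1.0 on a case-insensitive match, else 0.0."""
    return 1.0 if predicted.strip().lower() == expected.strip().lower() else 0.0


def run_benchmark(
    agent: Callable[[str], str],
    scenarios: Sequence[Scenario],
    scorer: Callable[[str, str], float] = exact_match,
) -> float:
    """Run the agent on every scenario and return the mean score."""
    if not scenarios:
        raise ValueError("benchmark needs at least one scenario")
    total = sum(scorer(agent(s.alert), s.ground_truth) for s in scenarios)
    return total / len(scenarios)
```

Keeping the scorer pluggable means the same set of scenarios can be re-scored whenever the scoring method changes, which makes results comparable across agent versions.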

Investigates like a human, not like a summary engine

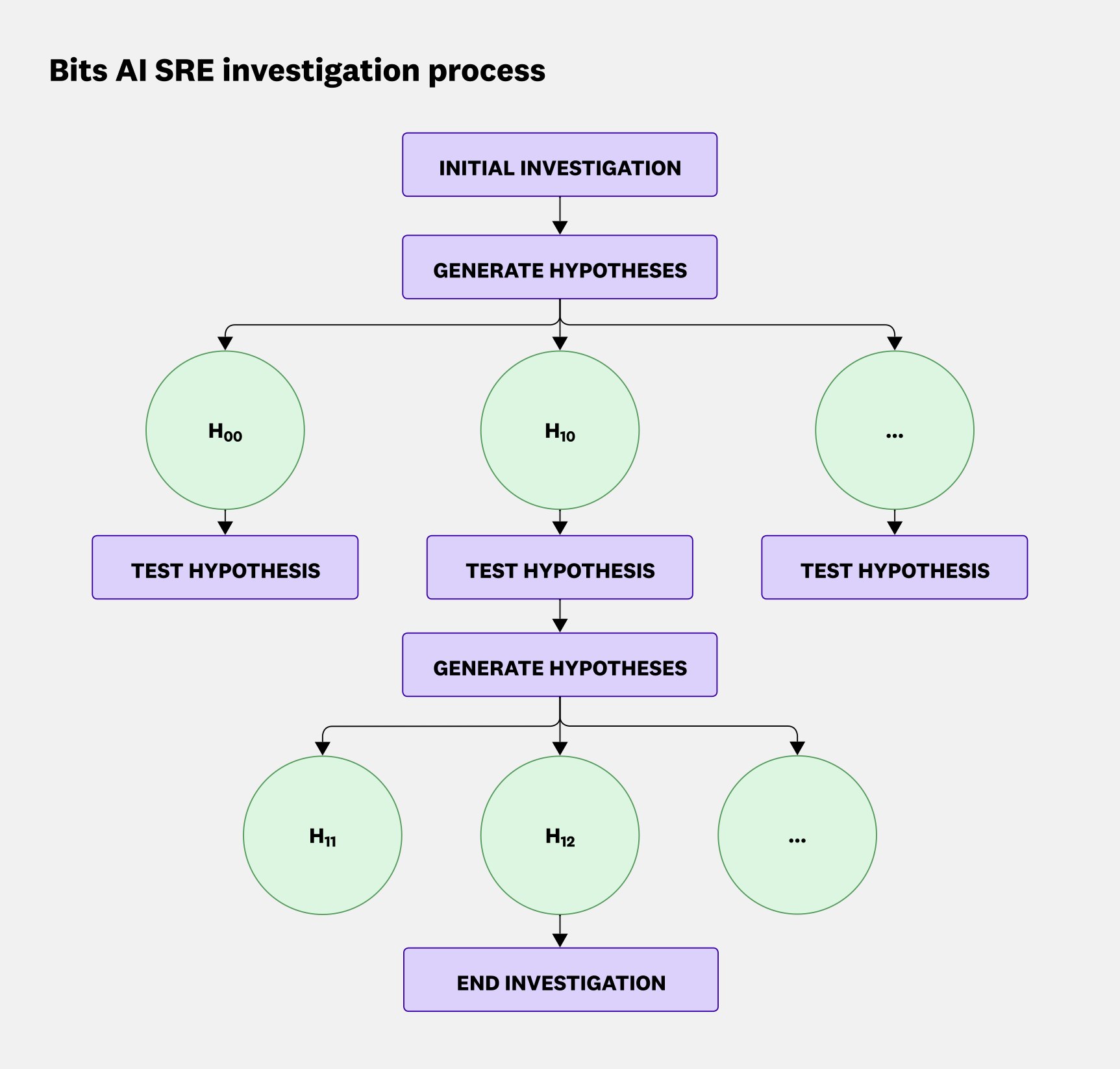

Bits AI SRE works like a team of SREs performing an on-call investigation. Rather than summarizing all of the raw telemetry data at once, it narrows in on a root cause step by step.

Bits AI SRE will:

- Formulate hypotheses about the root cause

- Validate or reject hypotheses using data from targeted queries

- Repeat this process until it reaches a root cause

This significantly reduces noise that can distract or derail the agent from getting to the correct root cause, and allows the agent to perform deep, insightful investigations by following the evidence where it leads.
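The loop described above can be sketched in a few lines. This is an illustrative outline under stated assumptions, not the agent's actual implementation: `Hypothesis`, `investigate`, `run_query`, and `supports` are hypothetical names, and in practice the hypotheses and the judgment about evidence would come from a model rather than from fixed callables.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Hypothesis:
    statement: str  # candidate root cause
    query: str      # targeted telemetry query that could confirm or refute it


def investigate(
    hypotheses: Iterable[Hypothesis],
    run_query: Callable[[str], Any],
    supports: Callable[[Hypothesis, Any], bool],
) -> Tuple[Optional[Hypothesis], List[Hypothesis]]:
    """Test hypotheses one at a time; stop at the first one the evidence supports.

    Returns the accepted hypothesis (or None) plus the rejected ones,
    so the reasoning trail stays auditable.
    """
    rejected: List[Hypothesis] = []
    for hypothesis in hypotheses:
        # Only the evidence for this one hypothesis is in view at this step.
        evidence = run_query(hypothesis.query)
        if supports(hypothesis, evidence):
            return hypothesis, rejected
        rejected.append(hypothesis)
    return None, rejected
```

Because each step looks only at the evidence for a single hypothesis, unrelated signals returned by other queries never enter the decision.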

Focuses on causal relationships instead of noise

Early SRE agents scaled by performing more tool calls across the platform and prompting an LLM to summarize the responses. This approach, however, proved to have a notable shortcoming: Increasing the number of tool calls caused the input token count for the summarization prompt to scale linearly. This meant that incorporating additional telemetry data either gradually degraded model performance or exceeded the context window limit.
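The linear growth is simple arithmetic, sketched below. Every number here is an assumption made up for illustration (500 instruction tokens, 4,000 tokens per tool response, a 128,000-token context window); none of them come from Bits AI SRE or any specific model.

```python
def summarization_prompt_tokens(
    num_tool_calls: int,
    tokens_per_response: int,
    instruction_tokens: int = 500,
) -> int:
    """Input size of a single 'summarize everything' prompt.

    Each tool response is pasted into the prompt, so the total grows
    linearly with the number of tool calls.
    """
    return instruction_tokens + num_tool_calls * tokens_per_response


def fits_context(prompt_tokens: int, context_window: int = 128_000) -> bool:
    """True if the prompt still fits in the (assumed) context window."""
    return prompt_tokens <= context_window
```

Under these assumed numbers, 12 tool calls already produce a 48,500-token prompt (500 + 12 × 4,000), and 32 tool calls produce 128,500 tokens, which no longer fits in the assumed window.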

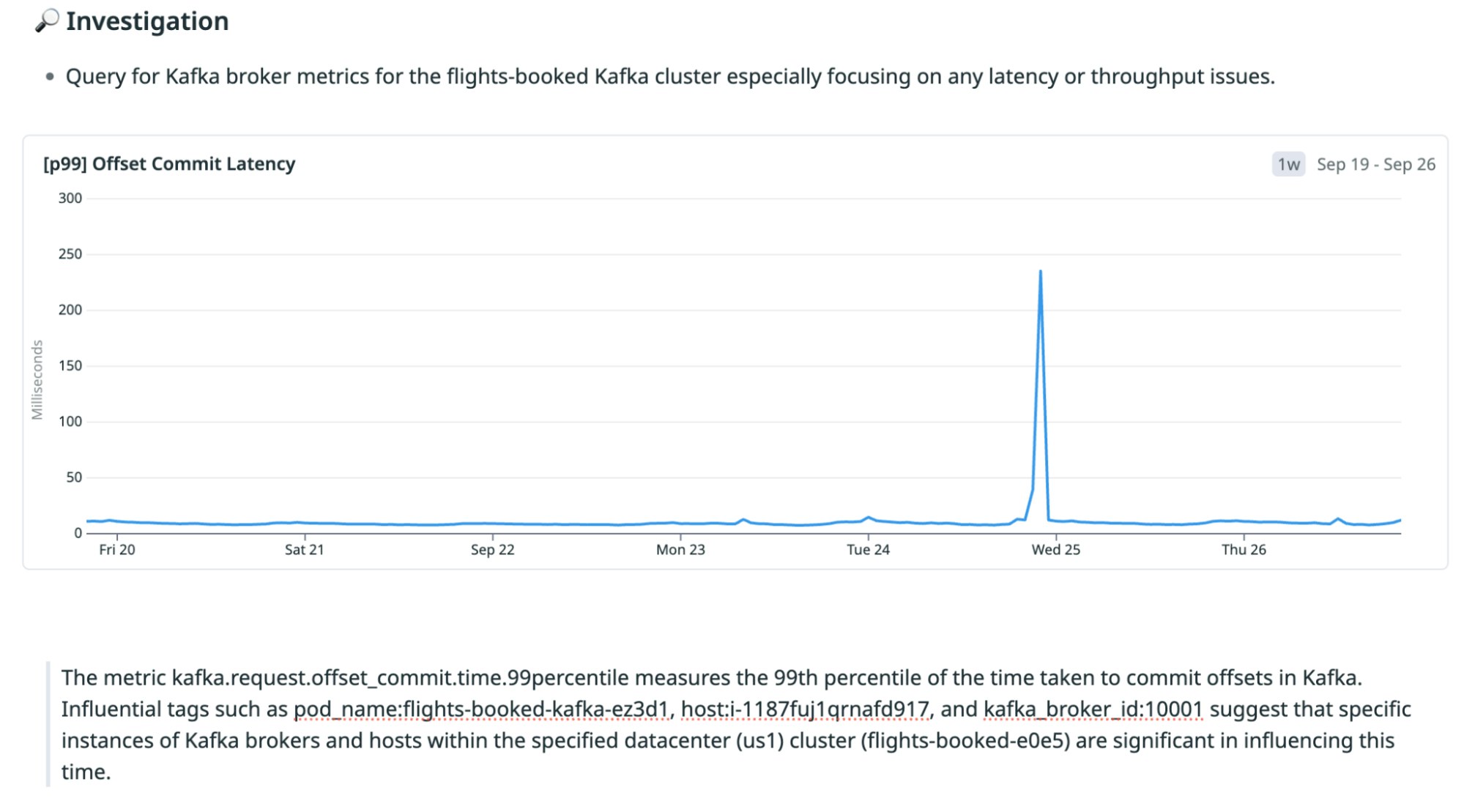

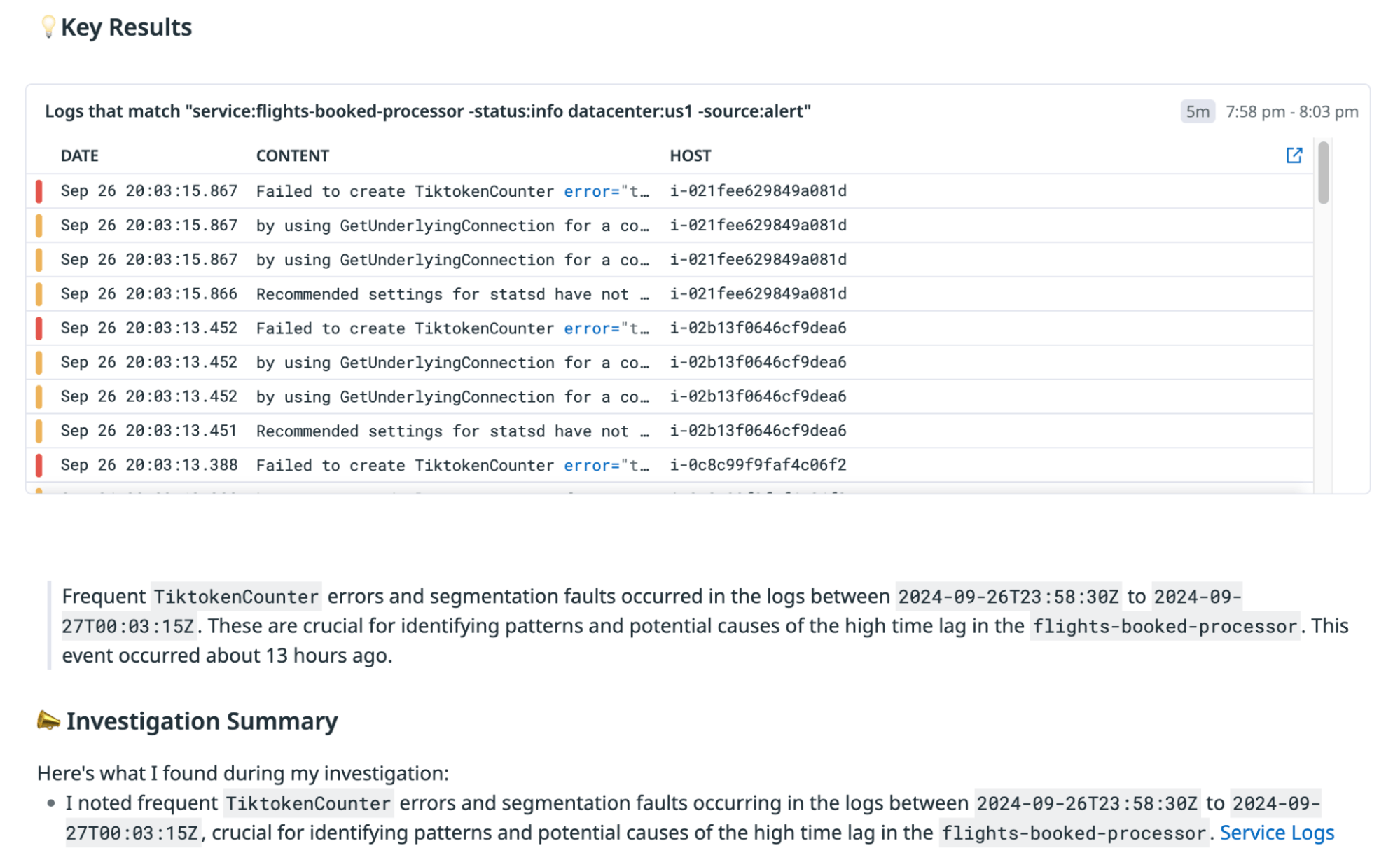

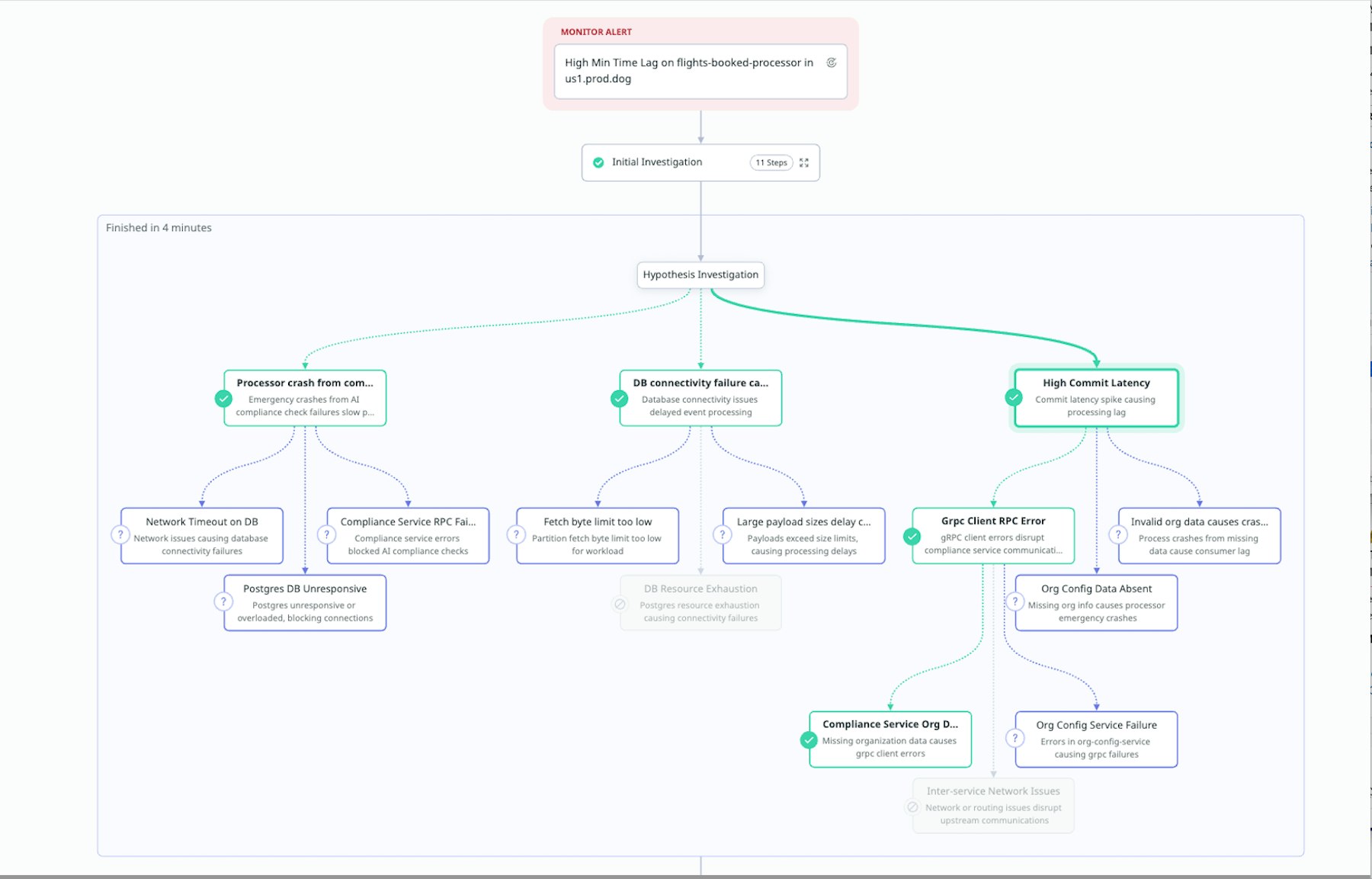

In the following incident, Kafka lag was caused by a spike in commit latency. An early version of Bits AI SRE issued 12 tool calls across logs, traces, and metrics. One of the tool calls correctly pinpointed the root cause. But because other tool responses included suspicious signals like critical application errors in an upstream service, the summarization prompt returned an incorrect root cause.

The newest version of Bits AI SRE correctly surfaces the commit latency as the root cause because the agent focuses on the causal relationship between the monitor alert and specific telemetry data pertaining to a hypothesis, rather than looking at all of the available telemetry data at once.

Performs deep investigations of multi-component issues

In complex incidents, a root cause can span several systems or take several steps to find. To find it, the model has to connect multiple independent signals.

When investigating, Bits AI SRE breaks down complex hypotheses into sub-hypotheses. When a sub-hypothesis is supported by evidence, the agent digs deeper. If not, it looks elsewhere, just like a human SRE following the most promising lead.

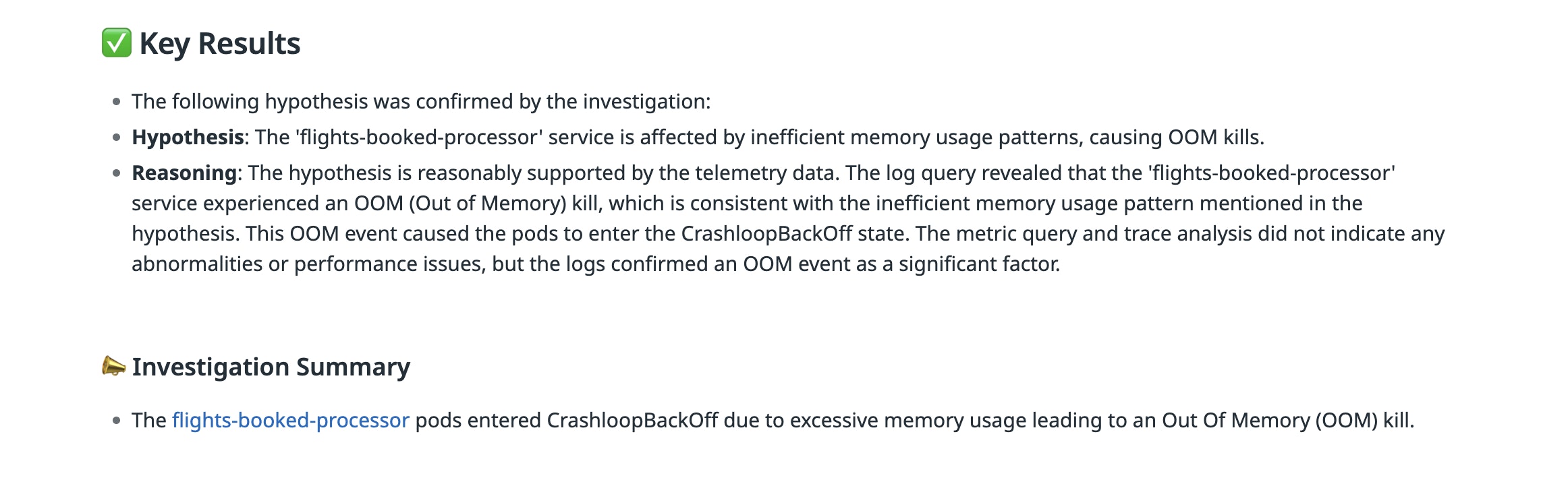

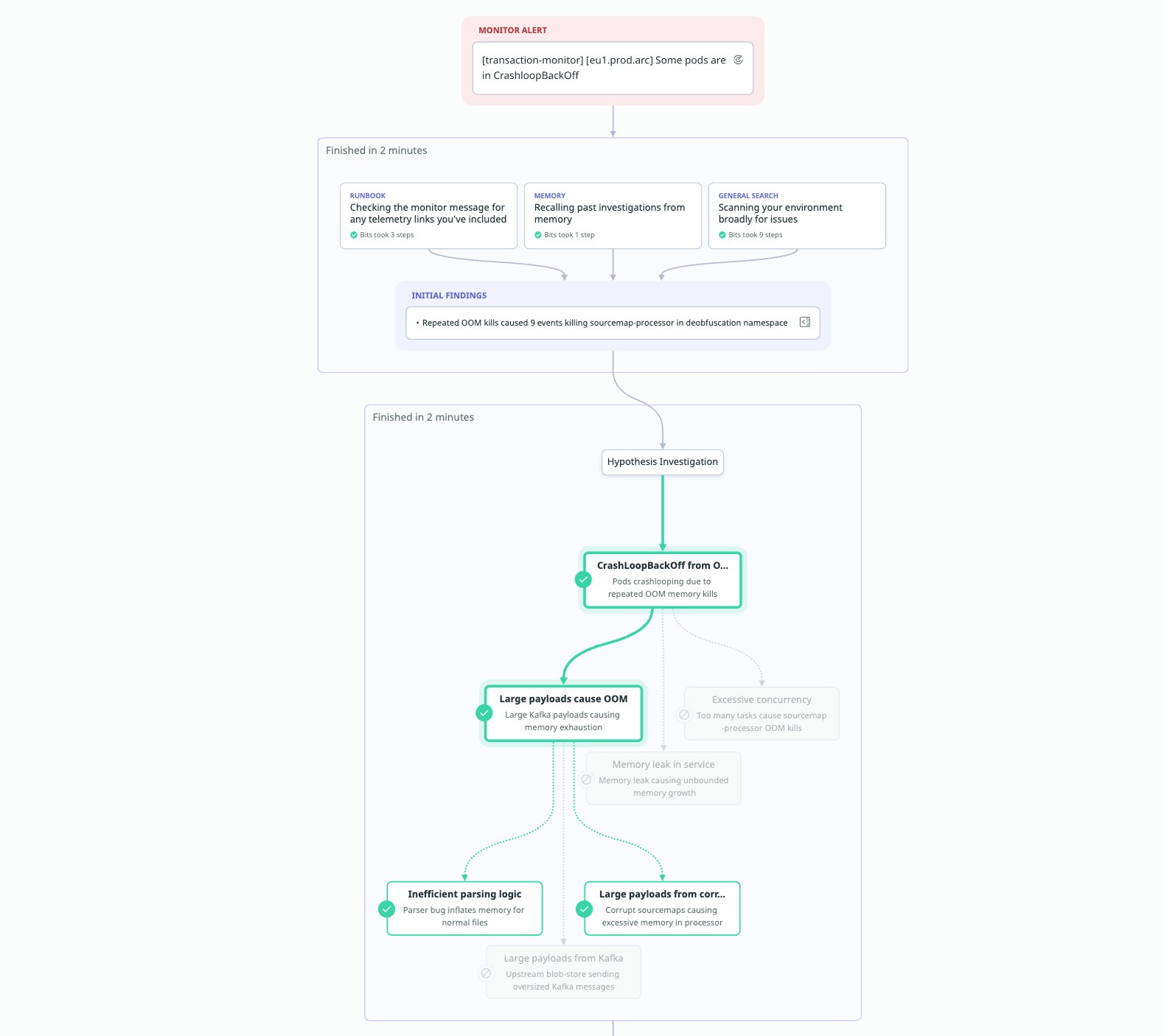

In the following incident, the agent was alerted to pods in CrashLoopBackOff. An early version of Bits AI SRE surfaced that the alert fired because a pod ran out of memory.

While this answer is superficially correct, the newest version of Bits AI SRE digs one level deeper to surface that the OOMs were caused by an influx of abnormally large payloads, which led a single pod to crash, triggering the alert. This version of the agent recursively generates deeper root cause hypotheses until it exhausts the search space, allowing for deeper, more insightful investigations into an alert.
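The recursive deepening described here can be sketched as follows. This is a simplified illustration, not the agent's implementation: `deepest_root_cause`, `propose_deeper`, and `is_supported` are hypothetical names, the depth limit is an added safeguard, and the sketch follows only the first supported candidate at each level.

```python
from typing import Callable, Iterable, List


def deepest_root_cause(
    cause: str,
    propose_deeper: Callable[[str], Iterable[str]],
    is_supported: Callable[[str], bool],
    max_depth: int = 5,
) -> List[str]:
    """Return the causal chain from a surface cause to the deepest supported cause.

    At each level, candidate explanations for the current cause are tested;
    the first one supported by evidence is explored further. The recursion
    stops when no candidate is supported or the depth limit is reached.
    """
    chain = [cause]
    if max_depth == 0:
        return chain
    for candidate in propose_deeper(cause):
        if is_supported(candidate):
            return chain + deepest_root_cause(
                candidate, propose_deeper, is_supported, max_depth - 1
            )
    return chain


# Usage modeled on the CrashLoopBackOff incident; the rejected
# "memory leak" candidate is invented for illustration.
candidates = {
    "pod ran out of memory": [
        "memory leak in the service",
        "influx of abnormally large payloads",
    ],
}
supported = {"influx of abnormally large payloads"}

chain = deepest_root_cause(
    "pod ran out of memory",
    lambda cause: candidates.get(cause, []),
    lambda candidate: candidate in supported,
)
# chain == ["pod ran out of memory", "influx of abnormally large payloads"]
```

Returning the whole chain rather than only the final cause preserves the intermediate steps, so a reader can see how the alert connects to the underlying cause.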

What’s next for Bits AI SRE

Over the past year, we’ve seen that solving real-world SRE problems starts with having a robust evaluation framework grounded in data from real production systems. We believe that this is the best way to ensure agents can reliably solve the problems they will encounter every day, and that effectively using production data will be the defining factor in who can build the most capable SRE agent.

We’re just beginning to see what’s possible with autonomous SRE agents. Bits AI SRE has already received overwhelmingly positive feedback from customers who’ve observed reduced time to root cause detection for complex incidents, and it’s only getting better.

We’re actively expanding Bits AI SRE to cover additional real-world situations and data sources. We’re also deepening its capabilities by integrating it with more expert investigator and optimization agents we’re building across the Datadog platform, enabling Bits AI SRE to drive end-to-end resolution workflows.

Get started with Bits AI SRE today. If you don’t already have a Datadog account, sign up for a 14-day free trial.