Kai Xin Tai

Serverless has become an increasingly popular paradigm among organizations looking to modernize their applications as it allows them to increase agility while reducing their operational overhead and costs. But the highly distributed nature of serverless architectures requires developers to rethink their approach to application design and development. AWS-based serverless applications hinge on AWS Lambda functions, which are stateless and ephemeral by design. These functions run on infrastructure that is managed entirely by AWS, and they integrate with other AWS services to power a range of application workflows. As a result, developers spend time thinking about how to minimize latency, design for failure, and implement security policies, rather than managing hardware.

This two-part series will explore best practices for designing and building serverless applications on AWS. We’ll begin this first post by discussing why microservices have gained prevalence over monoliths, and exploring the qualities of serverless that make it well-suited for microservice-based architectures. Then, we’ll outline several well-established microservice design patterns that allow developers to create highly scalable and reliable serverless applications.

From monoliths to microservices

Organizations have traditionally designed their applications as a single, monolithic unit. This approach is appealing for its simplicity; developers only need to work with one code base, programming language, and application framework, which makes monoliths easy to build, test, and debug. But while this architectural style works well for small, early-stage applications, its drawbacks become more apparent as the application grows and becomes more complex. For instance, the tight coupling of components in a monolith means that a bug in one component has the potential to bring down the entire application. Additionally, monoliths need to be fully redeployed every time a change is made, which prevents teams from deploying continuously and independently from one another.

These issues have led many organizations to shift to a microservice-based approach, in which an application is divided into small, loosely coupled services that each serve a specific business purpose and can be owned by an autonomous team. For instance, an e-commerce application might have separate microservices for its login, product catalog, and checkout functionalities. Microservices can be deployed independently of one another, and they communicate through HTTP-based APIs or asynchronous messaging.

Serverless is a natural choice for microservice-based architectures because Lambda functions are designed to run small chunks of code in response to events emitted by other services. Lambda also integrates with a range of managed services that can be used to implement common patterns in distributed systems, such as message queues (Amazon Simple Queue Service), APIs (Amazon API Gateway), and event streams (Amazon Kinesis). This helps minimize the typical pain points of building microservices, such as repeatedly setting up small services and connecting them to client applications. Once you’ve built a few microservices, you can use existing APIs and Lambda functions as building blocks for new ones. And because AWS takes care of all infrastructure management tasks on your behalf, you can flexibly scale individual microservices without worrying about whether the underlying infrastructure can accommodate the increase in load.

Common design patterns for serverless microservices

Now that we’ve established the benefits of running microservices in a serverless manner, we’ll explore several popular microservice design patterns that can be implemented with AWS serverless technologies. First, we’ll describe a pattern that you can use when you’re decomposing a monolith into microservices. Then, we’ll outline a few patterns for tackling common challenges developers face when working with microservices, such as managing complexity, implementing asynchronous and stream processing, and handling failures.

Migrating from monoliths to microservices

Strangler pattern

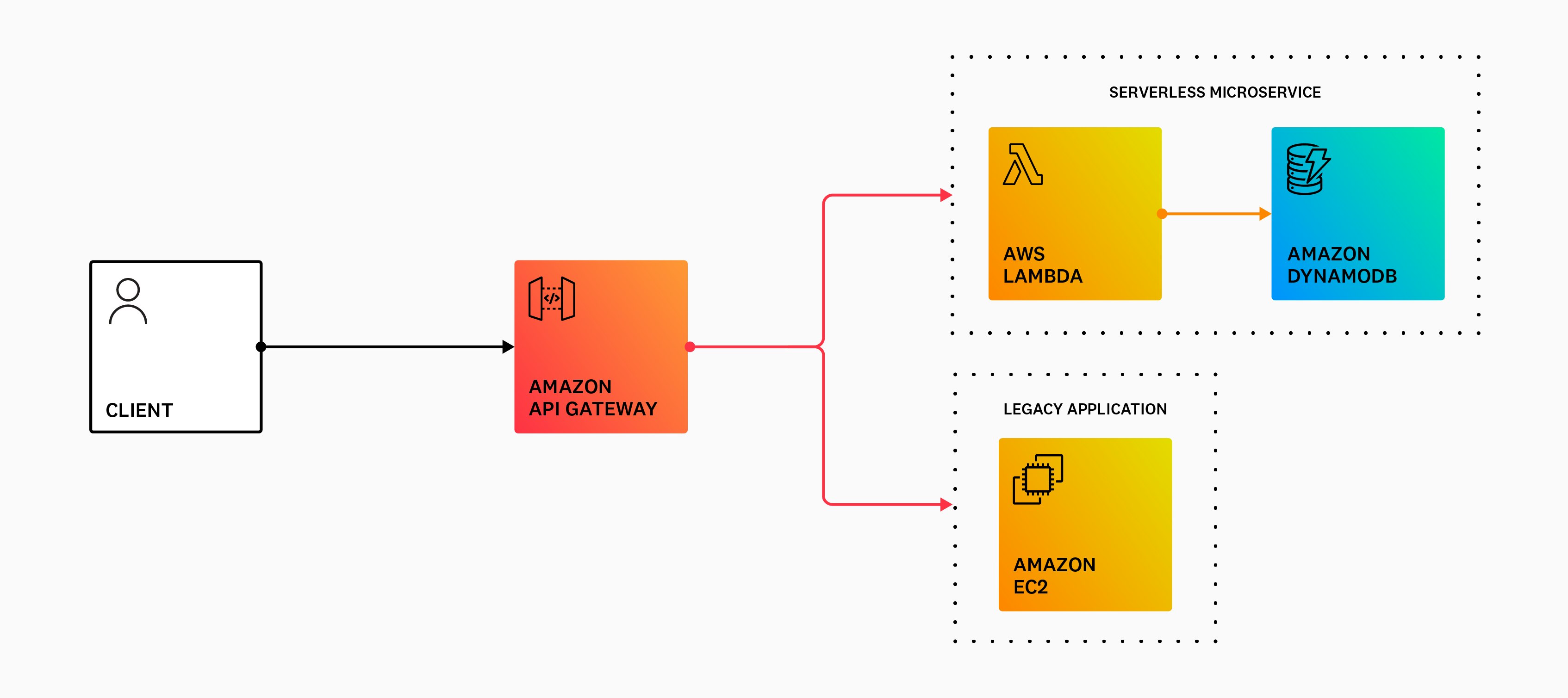

As we discussed earlier, more and more organizations are breaking down their monoliths into microservices to keep up with today’s rapidly changing market. These migrations are non-trivial and require meticulous planning to minimize risk and downtime. The Strangler pattern allows developers to gradually replace components of their monolith with microservices (which can be implemented with one or more Lambda functions), rather than completely shutting down and replacing their monolith in one go. Developers typically migrate the components with the fewest dependencies first, before tackling more complicated ones.

Since the Strangler pattern involves running two applications in parallel, you need to ensure that client requests are routed to the correct location. This pattern uses a strangler facade, such as API Gateway, to accept all incoming requests to the legacy system. The facade then routes them to either the legacy application or the new serverless application. Because clients only interact with the facade, they have no knowledge of—and are unaffected by—any migrations that might have taken place in the backend. Once the entire legacy system has been refactored, and all traffic is routed to the new application, the former can be safely deprecated.
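
The routing decision made by the facade can be sketched in a few lines of Python. This is only an illustration of the idea, not how API Gateway is configured in practice: the `MIGRATED_PREFIXES` table and the two backend stubs are hypothetical names invented for this example.

```python
# Hypothetical list of path prefixes that have already been migrated
# from the monolith to the new serverless application.
MIGRATED_PREFIXES = ("/login", "/catalog")


def legacy_backend(path: str) -> str:
    """Stand-in for forwarding the request to the legacy monolith."""
    return f"legacy:{path}"


def serverless_backend(path: str) -> str:
    """Stand-in for forwarding the request to the new Lambda-backed service."""
    return f"serverless:{path}"


def route(path: str) -> str:
    """Send migrated paths to the new application and everything else to the monolith."""
    for prefix in MIGRATED_PREFIXES:
        # Match the prefix itself or any sub-path, but not look-alikes such as "/loginx".
        if path == prefix or path.startswith(prefix + "/"):
            return serverless_backend(path)
    return legacy_backend(path)
```

As more components are migrated, only the prefix table changes; clients keep calling the same facade throughout.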

Managing complexity

State Machine pattern

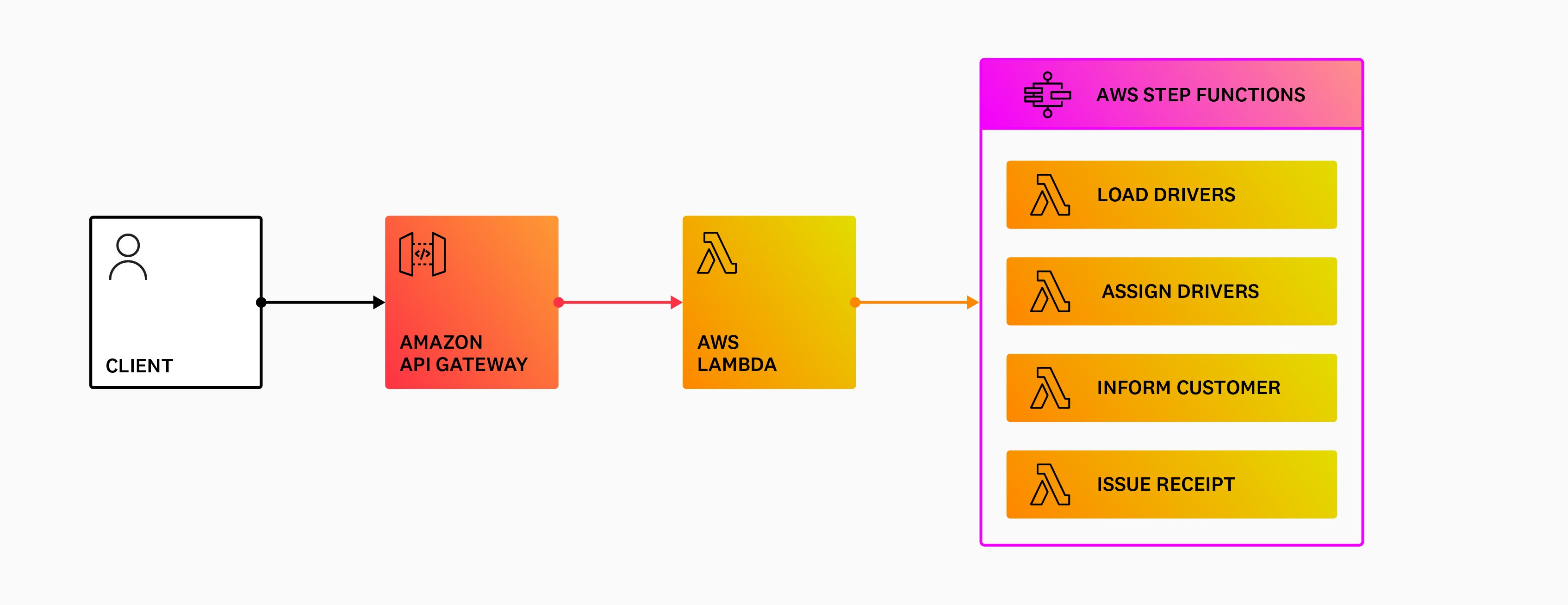

As you build out your application, your business workflows can become highly complex. If you’re running a food delivery application, for instance, your order assignment workflow might involve loading all available drivers, assigning a driver to the order, informing the customer that their food is on the way, and issuing a receipt once the order has been completed. This workflow will also need to account for a variety of scenarios, such as delays in finding a driver and order cancellations. While it is possible to write custom code in Lambda functions to implement this logic, such implementations tend to be brittle, because each function has to carry its own state tracking, branching, and retry logic, and they can consume a substantial number of engineering hours.

A better alternative is to use AWS Step Functions to orchestrate complex workflows that involve multiple microservices. Step Functions includes built-in state management, branching, error handling, and retry capabilities, which eliminates the need to write boilerplate code. Depending on your use case, you can either leverage Standard Workflows, which can run for up to a year, or Express Workflows, which run for up to 5 minutes.

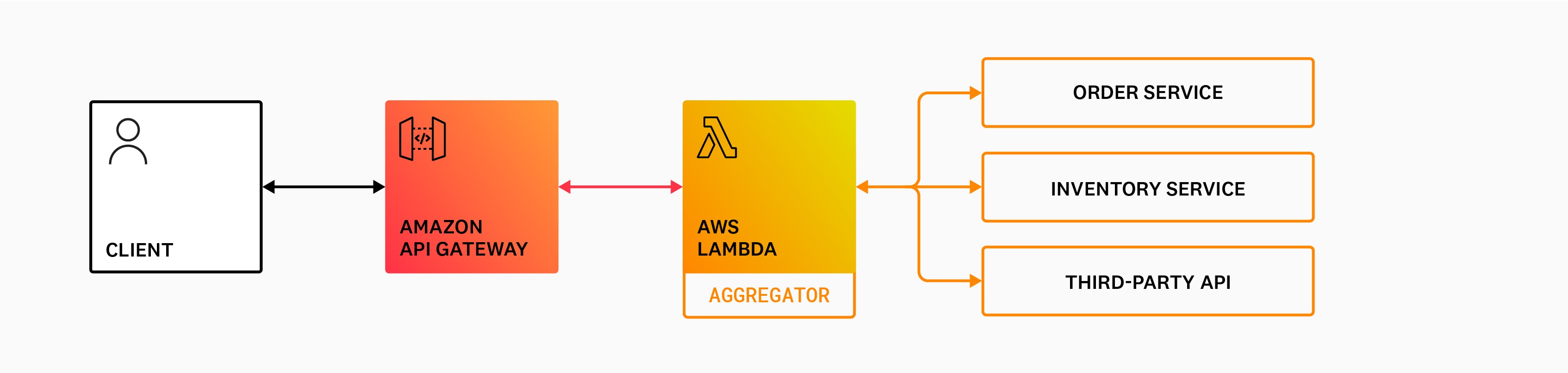
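
To make this more concrete, here is a rough sketch of how the food delivery workflow described earlier might be written as a Step Functions state machine definition in the Amazon States Language, built here as a Python dictionary and serialized to JSON. The state names, function names, account ID, and region in the ARNs are placeholders chosen for illustration, and the retry settings are arbitrary.

```python
import json

# Placeholder ARN prefix: the account ID, region, and function names are assumptions.
LAMBDA_ARN_PREFIX = "arn:aws:lambda:us-east-1:123456789012:function:"


def task(function_name, next_state=None, catch_to=None):
    """Build a Task state that invokes a Lambda function with retries."""
    state = {
        "Type": "Task",
        "Resource": LAMBDA_ARN_PREFIX + function_name,
        # Retry failed tasks with exponential backoff before giving up.
        "Retry": [{
            "ErrorEquals": ["States.TaskFailed"],
            "IntervalSeconds": 2,
            "MaxAttempts": 3,
            "BackoffRate": 2.0,
        }],
    }
    if catch_to:
        # Once retries are exhausted, branch to a fallback state.
        state["Catch"] = [{"ErrorEquals": ["States.ALL"], "Next": catch_to}]
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


definition = {
    "Comment": "Illustrative order assignment workflow",
    "StartAt": "LoadAvailableDrivers",
    "States": {
        "LoadAvailableDrivers": task("load-drivers", "AssignDriver", "CancelOrder"),
        "AssignDriver": task("assign-driver", "NotifyCustomer", "CancelOrder"),
        "NotifyCustomer": task("notify-customer", "IssueReceipt"),
        "IssueReceipt": task("issue-receipt"),
        "CancelOrder": task("cancel-order"),
    },
}

if __name__ == "__main__":
    print(json.dumps(definition, indent=2))
```

The retry and error-branching behavior lives in the definition rather than in each function, which is the boilerplate that Step Functions takes off your hands.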

Aggregator pattern

In microservice-based architectures, clients often need to make calls to multiple backend services to perform an operation. Because these calls occur over the network, chatty communication between clients and microservices can increase application latency, particularly in situations where bandwidth is limited. The Aggregator pattern reduces the number of calls clients need to make by using a single Lambda function to accept all client requests. The Lambda function then forwards the requests to the appropriate microservices and third-party APIs, aggregates their results, and returns a single response to the client.
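
A minimal sketch of an aggregating Lambda handler might look like the following. The three `fetch_*` functions are hypothetical stubs standing in for calls to real microservices or third-party APIs, and the response shape (a `data` and an `errors` map) is just one possible design. The backends are called concurrently so that the total latency is closer to that of the slowest call than to the sum of all calls.

```python
import json
from concurrent.futures import ThreadPoolExecutor


# Hypothetical backend calls; in a real system these would be HTTP or SDK calls.
def fetch_profile(user_id):
    return {"user_id": user_id, "name": "Ada"}


def fetch_orders(user_id):
    return [{"order_id": "o-1", "total": 42.0}]


def fetch_recommendations(user_id):
    raise TimeoutError("recommendations service timed out")


BACKENDS = {
    "profile": fetch_profile,
    "orders": fetch_orders,
    "recommendations": fetch_recommendations,
}


def handler(event, context=None):
    """Call every backend in parallel and return one combined response."""
    user_id = event["user_id"]
    data, errors = {}, {}
    with ThreadPoolExecutor(max_workers=len(BACKENDS)) as pool:
        futures = {name: pool.submit(fetch, user_id) for name, fetch in BACKENDS.items()}
        for name, future in futures.items():
            try:
                data[name] = future.result()
            except Exception as exc:
                # Record the failure but still return whatever succeeded.
                errors[name] = str(exc)
    return {"statusCode": 200, "body": json.dumps({"data": data, "errors": errors})}
```

Returning partial results alongside an error map is a judgment call; depending on the operation, failing the whole request may be more appropriate.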

Implementing asynchronous and stream processing

Publisher-Subscriber pattern

Microservices communicate with each other either synchronously (through REST APIs) or asynchronously (through message and event passing). In the synchronous model, the client sends a request to a service and then waits for a response. This works well when the workflow only consists of a single service, but if the request must traverse multiple services, a delay in one service can significantly increase the overall response time. As such, asynchronous communication—in which events are passed between services and the client doesn’t wait for a response—can optimize performance and costs when an immediate response is not necessary.

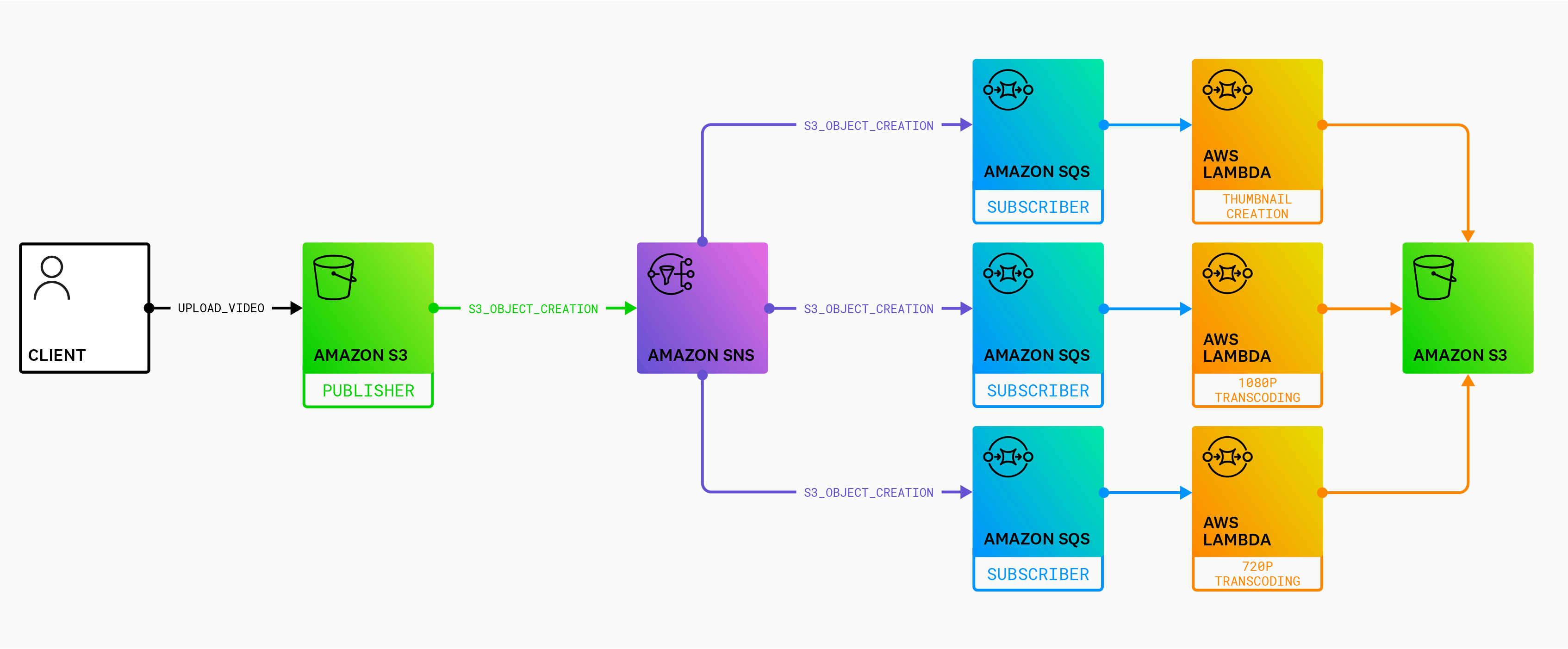

The Publisher-Subscriber pattern is a common way to implement asynchronous communication between microservices. In the example below, Amazon S3 pushes a message to an Amazon Simple Notification Service (SNS) topic whenever a user uploads a video to an S3 bucket. SNS then forwards the message to the topic’s subscribers, which in this case are three SQS queues. Each of the SQS queues then triggers its respective Lambda function, which downloads the video from the original S3 bucket, either resizes it or creates a thumbnail, and uploads the final product to a separate S3 bucket. In this pattern, the publisher has no knowledge of which subscribers are listening and vice versa, which allows them to stay decoupled. This pattern is also commonly used to send mobile push notifications, as well as email and text messages to users at scale.

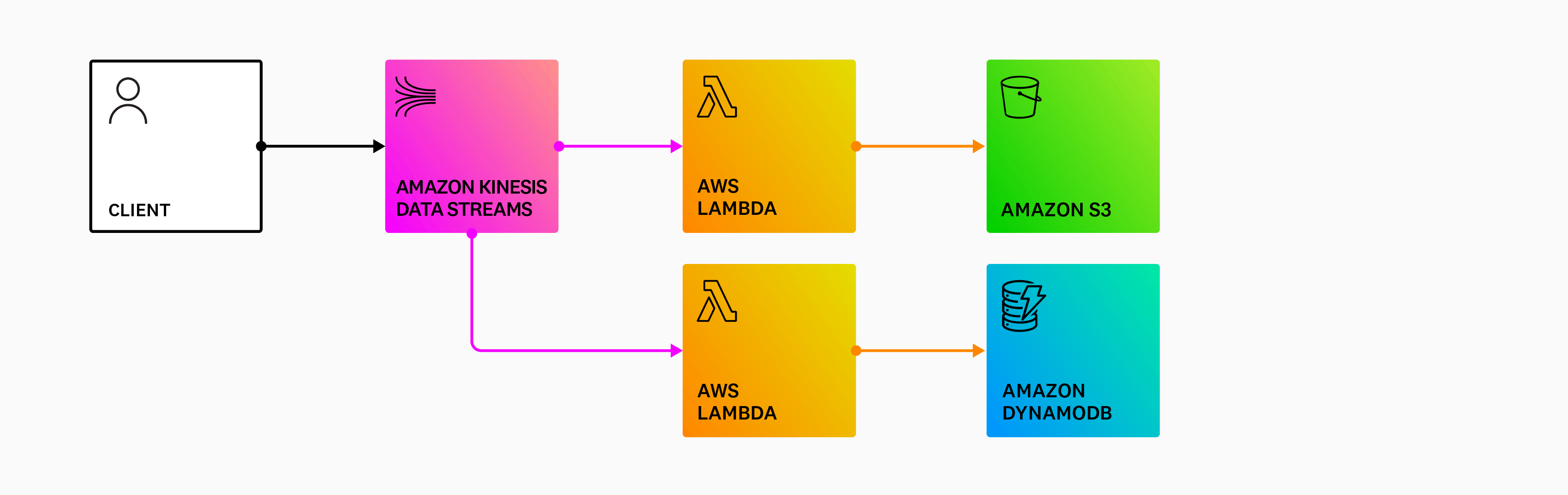
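
On the subscriber side, each Lambda function has to unwrap two envelopes before it reaches the S3 upload details: the SQS record body contains the SNS notification, whose `Message` field in turn contains the S3 event. The sketch below shows that unwrapping, assuming raw message delivery is disabled on the SNS subscription (the default). The actual download, resize, and upload steps are omitted, and the function names are illustrative.

```python
import json
from urllib.parse import unquote_plus


def extract_uploads(sqs_event):
    """Return (bucket, key) pairs for every S3 object referenced in an SQS batch."""
    uploads = []
    for sqs_record in sqs_event["Records"]:
        # The SQS body is the SNS notification, serialized as JSON.
        sns_envelope = json.loads(sqs_record["body"])
        # The SNS "Message" field is the original S3 event, again as a JSON string.
        s3_event = json.loads(sns_envelope["Message"])
        # Test notifications sent by S3 carry no "Records" key, so default to empty.
        for s3_record in s3_event.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded (for example, spaces become "+").
            key = unquote_plus(s3_record["s3"]["object"]["key"])
            uploads.append((bucket, key))
    return uploads


def handler(event, context=None):
    """Lambda entry point: a real function would fetch and transform each video here."""
    uploads = extract_uploads(event)
    for bucket, key in uploads:
        print(f"would process s3://{bucket}/{key}")
    return {"processed": len(uploads)}
```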

For more complex use cases, such as real-time processing of large volumes of data (e.g., clickstreams, IoT sensor data, financial transactions), Lambda integrates out-of-the-box with Amazon Kinesis Data Streams. A Kinesis data stream is made up of a set of shards, each containing a sequence of data records. As a consumer, Lambda automatically polls your stream and invokes your function when a new record is detected. By default, each shard uses a single instance of a function to process records, although you can increase Lambda’s parallelization factor to scale up the number of concurrent executions during peak hours.
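
When Lambda invokes a function with a batch of Kinesis records, each record's payload arrives base64-encoded under `kinesis.data`. The sketch below decodes a batch of clickstream records and counts views per page. The JSON payload shape, with a `page` field, is an assumption made for this example.

```python
import base64
import json


def handler(event, context=None):
    """Count page views in one batch of Kinesis records."""
    views_per_page = {}
    for record in event["Records"]:
        # Kinesis payloads are delivered to Lambda as base64-encoded bytes.
        payload = base64.b64decode(record["kinesis"]["data"])
        click = json.loads(payload)
        page = click["page"]
        views_per_page[page] = views_per_page.get(page, 0) + 1
    return views_per_page
```

Because each invocation only sees one batch from one shard, totals like these would normally be written to a downstream store and combined there rather than treated as final.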

Handling failures

Circuit Breaker pattern

In distributed systems, where multiple services are involved in fulfilling a request, it is crucial to think about how service failures are handled. Some issues, such as network latency, are intermittent and resolve on their own, so a retry call from an upstream service is likely to succeed. More severe issues or outages, however, may require active intervention and can take an indeterminate amount of time to resolve. Continuous retries in these situations can consume critical resources and starve other services that depend on the same resource pool, which may result in a catastrophic cascading failure.

The Circuit Breaker pattern allows you to build fault tolerance into your system by using an Amazon DynamoDB table to keep track of request failures and circuit breaker status, along with a Lambda function to decide whether or not to allow subsequent calls to the impacted service based on the failure count.

The circuit breaker operates in three states: closed, open, and half-open. In the closed state, the circuit breaker allows all traffic through, while keeping track of the number of failed requests to a service.

If this number exceeds a threshold within a certain period of time, it transitions to the open state, where it stops calling the failed service and returns an exception to the client.

After a brief timeout has elapsed, the circuit breaker moves into the half-open state, where it begins to allow a small number of requests to the service. If these calls are successful, it assumes that the fault has been corrected and begins allowing all traffic through. However, if the requests fail, it reverts to the open state and repeats the process.
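
The three states and their transitions can be sketched as a small Python class. This is a simplified illustration under a few assumptions: the `record` dictionary is an in-memory stand-in for the DynamoDB item that would hold the breaker status, the breaker counts consecutive failures rather than failures within a time window, and a single successful call in the half-open state is enough to close the circuit. All class, field, and parameter names are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the service."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        # In-memory stand-in for the DynamoDB item that tracks breaker status.
        self.record = {"state": "closed", "failures": 0, "opened_at": None}

    def call(self, fn, *args):
        rec = self.record
        if rec["state"] == "open":
            if self.clock() - rec["opened_at"] < self.reset_timeout:
                # Still cooling down: fail fast instead of calling the service.
                raise CircuitOpenError("circuit is open")
            # Timeout elapsed: let a trial request through.
            rec["state"] = "half-open"
        try:
            result = fn(*args)
        except Exception:
            rec["failures"] += 1
            # A failed trial request, or too many failures, opens the circuit.
            if rec["state"] == "half-open" or rec["failures"] >= self.failure_threshold:
                rec["state"] = "open"
                rec["opened_at"] = self.clock()
            raise
        # Success: close the circuit and reset the failure count.
        rec.update(state="closed", failures=0, opened_at=None)
        return result
```

Passing the clock in as a parameter is a convenience for testing; it lets the timeout be simulated without sleeping.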

Saga pattern

Monolithic applications are built with a central database, which allows them to make use of ACID (atomic, consistent, isolated, durable) transactions to guarantee data consistency. But in a microservice-based application, each microservice typically has its own database, which contains data that is closely related to the data in other microservices’ databases. The Saga pattern ensures data consistency by coordinating a sequence of local transactions in interconnected microservices. Once a microservice performs its local transaction, it triggers the next service in the chain to perform its transaction. If a transaction fails along the way, a series of compensating transactions is kicked off to roll back the changes made in prior transactions.

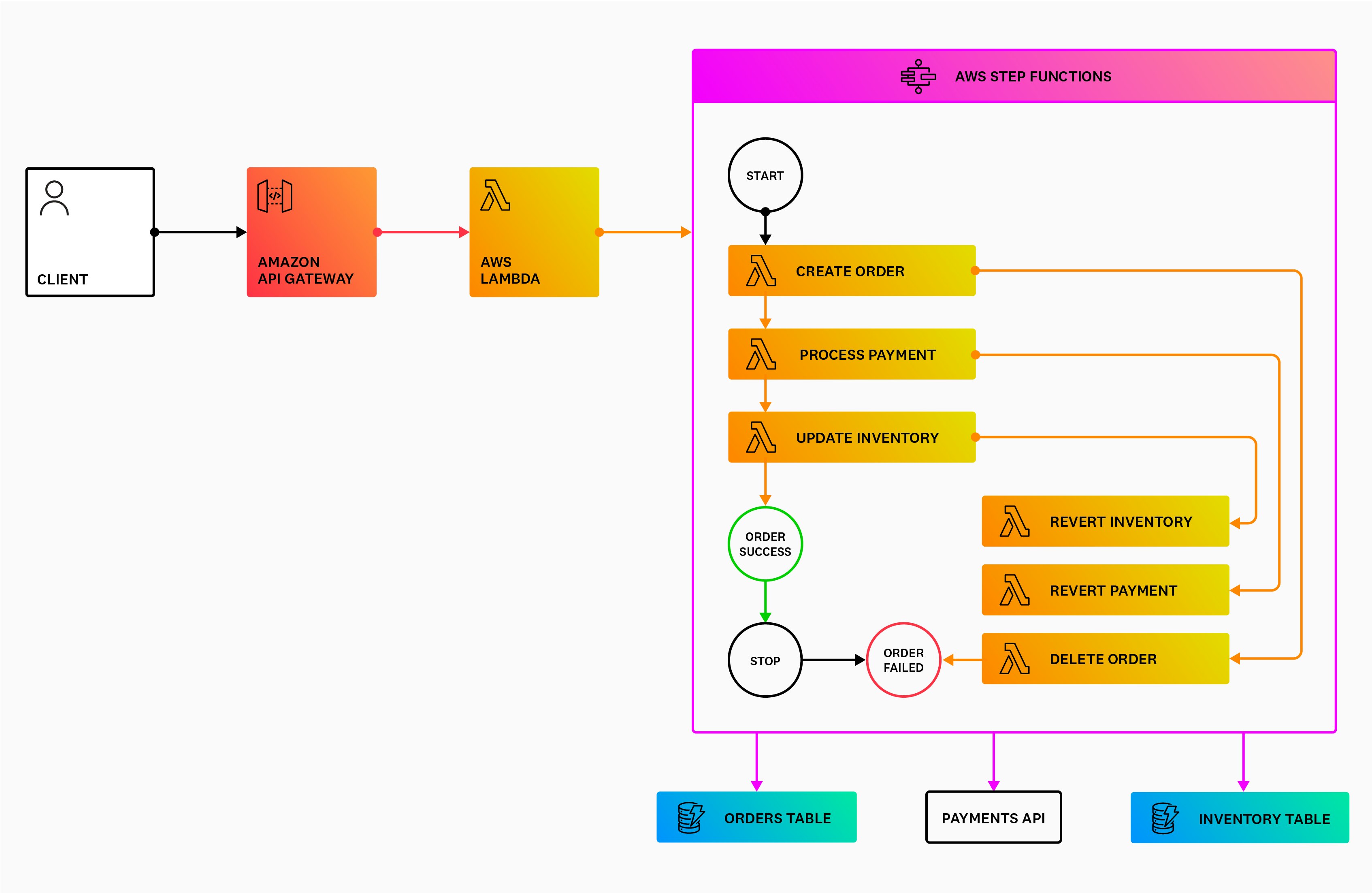

The Saga pattern can be implemented through choreography or orchestration. In the choreography model, each service publishes an event that triggers the next service to run. With orchestration, a central coordinator manages the entire chain of transactions. The example below uses Step Functions to implement the Saga orchestration pattern. This e-commerce workflow consists of an order service, a payment service, and an inventory service. When a customer places an order (i.e., when the Create order Lambda function is triggered), a record is written to the Orders DynamoDB table. The orchestrator then calls the Process payment Lambda function, which is responsible for calling a third-party payments API. If this step fails, the orchestrator invokes the Revert payment and Delete order Lambda functions, and returns an error to the caller.
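
The orchestration logic itself can be sketched in plain Python, independent of Step Functions. In this illustration, each step pairs an action with a compensating action, and the in-memory `orders`, `payments`, and `inventory` dictionaries are hypothetical stand-ins for each service's own database. To mirror the example above, where a failed payment still triggers Revert payment, a step's compensation is registered before its action runs, which means every compensation must be safe to call even if the action never took effect.

```python
# In-memory stand-ins for each service's own data store (assumptions for this sketch).
orders, payments, inventory = {}, {}, {"widget": 5}


def create_order(ctx):
    orders[ctx["order_id"]] = "created"


def delete_order(ctx):
    orders.pop(ctx["order_id"], None)


def process_payment(ctx):
    if ctx.get("card_declined"):
        raise RuntimeError("payment declined")
    payments[ctx["order_id"]] = ctx["amount"]


def revert_payment(ctx):
    payments.pop(ctx["order_id"], None)


def update_inventory(ctx):
    if inventory[ctx["item"]] < 1:
        raise RuntimeError("out of stock")
    inventory[ctx["item"]] -= 1
    ctx["inventory_updated"] = True


def revert_inventory(ctx):
    # Only restore stock if the update actually happened.
    if ctx.pop("inventory_updated", False):
        inventory[ctx["item"]] += 1


ORDER_SAGA = [
    (create_order, delete_order),
    (process_payment, revert_payment),
    (update_inventory, revert_inventory),
]


def run_saga(steps, ctx):
    """Run each step in order; on failure, run compensations in reverse order."""
    compensations = []
    for action, compensate in steps:
        # Register the compensation first so a partially applied step is also undone.
        compensations.append(compensate)
        try:
            action(ctx)
        except Exception as exc:
            for undo in reversed(compensations):
                undo(ctx)
            return {"status": "failed", "error": str(exc)}
    return {"status": "succeeded"}
```

For example, `run_saga(ORDER_SAGA, {"order_id": "o-1", "amount": 20, "item": "widget", "card_declined": True})` fails at the payment step and leaves no order or payment record behind.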

Start designing your serverless applications

So far, we’ve discussed the benefits of microservice-based architectures and how serverless technologies can be used to implement them. We’ve also explored a few microservice design patterns that can serve as blueprints for your serverless applications. To learn about more design patterns, check out Jeremy Daly’s guide. In the next part of this series, we will examine some serverless best practices that adhere to AWS’s Well-Architected Framework, which can guide you as you continue to develop and optimize your applications.

If you’re new to Datadog, sign up for a 14-day free trial to monitor your serverless applications today.