Cole Maring

Kassen Qian

Static application security testing (SAST) is foundational to modern application and code security programs. Yet these tools inevitably produce false positives that require manual review. When scanners flag issues that are not genuine vulnerabilities, the resulting noise erodes trust, slows remediation, and makes it harder for teams to understand which alerts require attention.

Datadog’s new false positive filtering feature for Static Code Analysis (SAST), powered by Bits AI, addresses this challenge by classifying each finding as a likely true positive or a likely false positive. This capability enables faster triage, reduces distractions, and improves the signal-to-noise ratio so teams can focus on fixing the vulnerabilities that matter most.

In this post, we’ll explore how Bits AI classifies and explains vulnerability findings, how false positive filtering works, and how AI is advancing capabilities across the Datadog Code Security platform.

Cutting through the noise

Today, Datadog Static Code Analysis continuously scans source code for security vulnerabilities and quality issues as developers commit changes. The false positive filtering feature builds on this capability by adding AI-powered classification and context to relevant findings.

When SAST identifies a vulnerability that maps to a curated set of Common Weakness Enumerations (CWEs) from the OWASP Benchmark and Top Ten, Bits AI evaluates the surrounding code to determine whether the issue is more likely to be a true or a false positive. It also generates a concise explanation that outlines the reasoning behind its assessment. This information appears directly alongside each finding in the Datadog platform, providing immediate context while leaving room for engineers to validate findings through their own judgment and feedback.

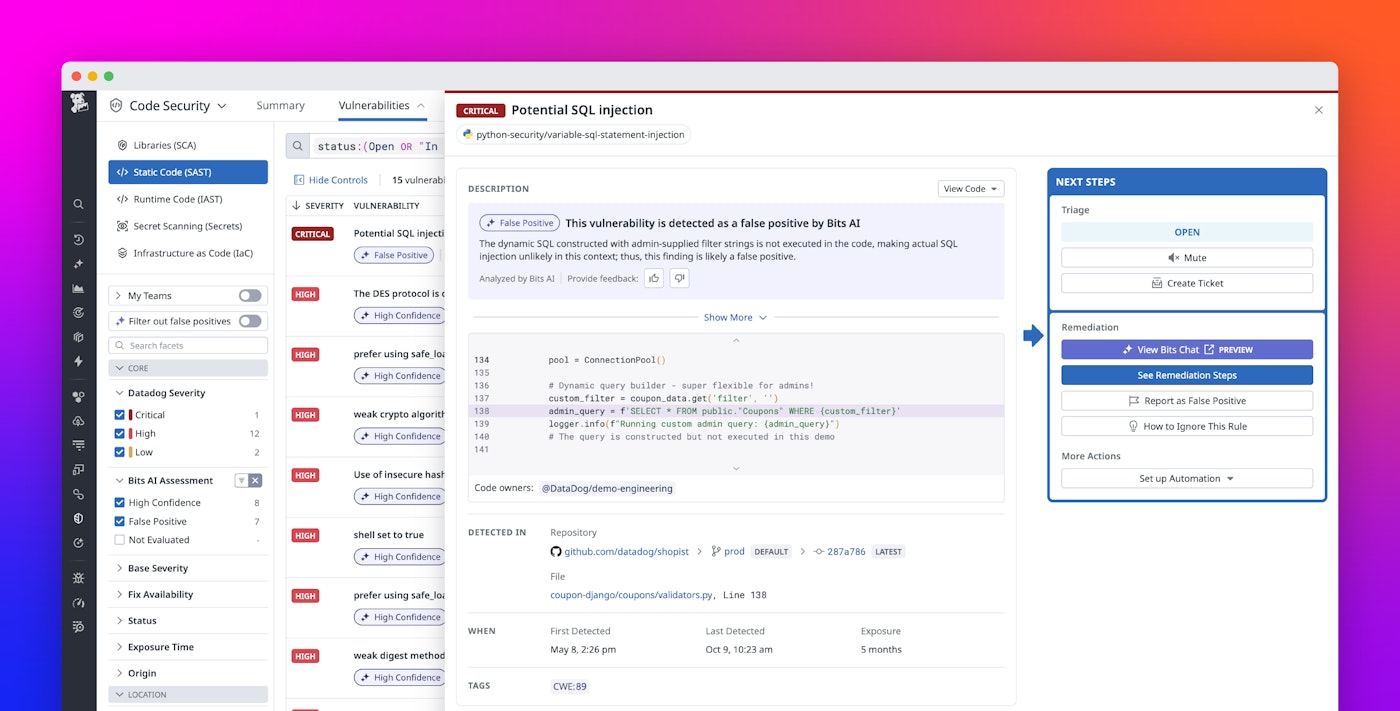

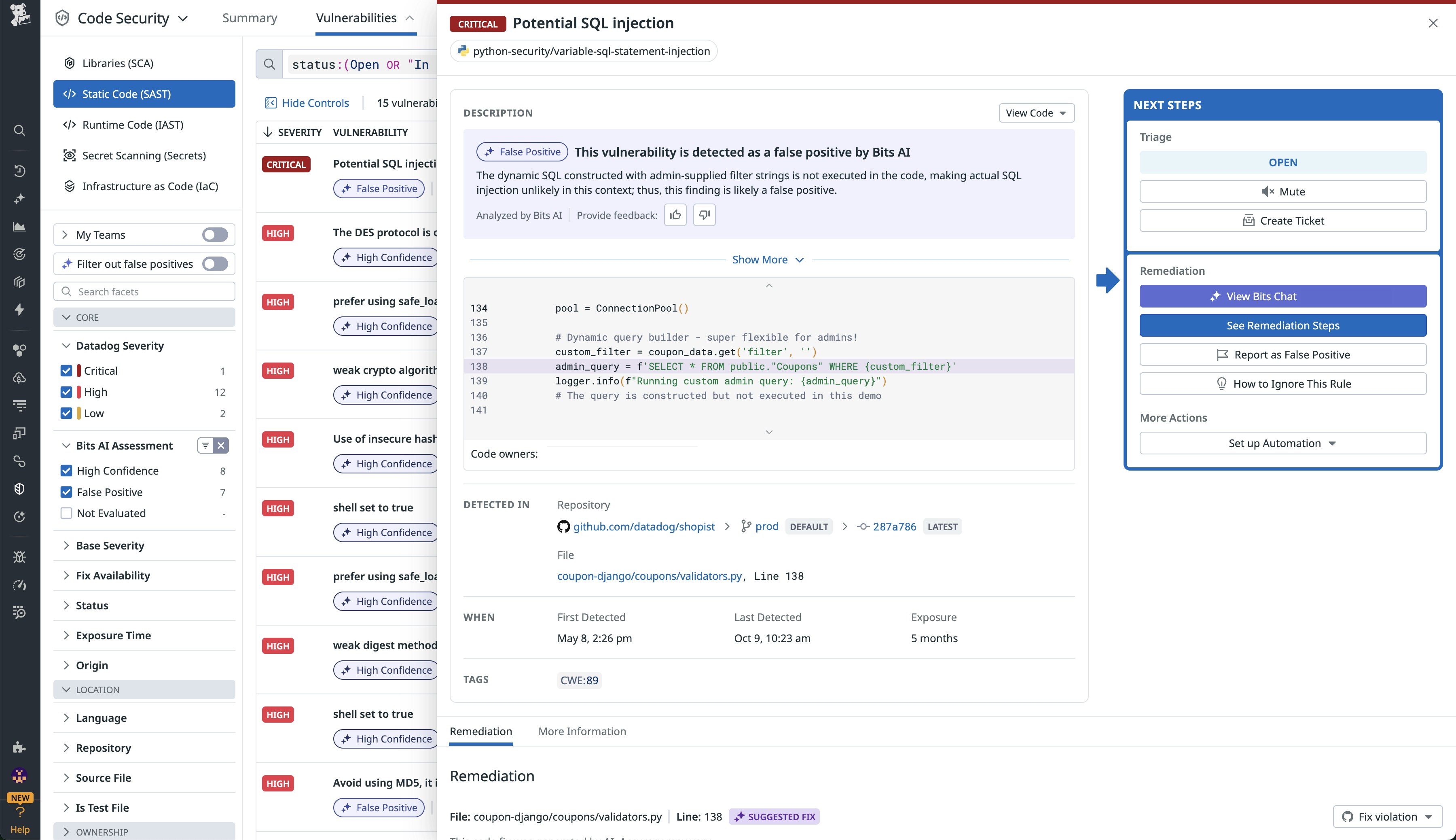

Once enabled, the false positive filtering feature integrates into the SAST Vulnerabilities and Repositories explorers, giving security and development teams an easy way to triage findings. Likely false positives can be filtered out, while higher-confidence results can be reviewed first.

Reviewing and refining results

In the Vulnerabilities explorer, likely false positives can be filtered out with a single click. Findings can also be grouped by confidence level, so teams can start with the high-signal findings most likely to require action while still being able to review issues that Bits AI has assessed as likely false positives. Each finding is automatically labeled with a confidence badge that reflects its Bits AI classification.

Selecting a vulnerability opens a side panel that displays the reasoning Bits AI provides for its classification. This view shows how each decision was made and lets users validate the result. From the same panel, engineers can confirm or correct the classification and, if needed, add further context in a text field. This feedback helps refine Bits AI’s performance over time, so the model continues to adjust to real-world patterns and security priorities.

By filtering out likely false positives and surfacing relevant findings, the feature directs teams toward genuine risks, so that high-impact vulnerabilities are reviewed and resolved first.

Improving accuracy with Bits AI

Static analyzers are intentionally risk-averse. As such, they are designed to flag anything that looks like a potential vulnerability. While this approach ensures broad coverage, it inevitably produces findings that are unlikely to be exploitable. Every SAST tool generates false positives as part of this trade-off.

An LLM can reason about context in ways that rule-based static analysis tools cannot. Rather than relying solely on rule-based detection, an LLM can evaluate how code behaves within its broader structure: how data moves through functions, how input is validated, and whether the conditions for exploitation are actually met.
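To make this concrete, the following Python sketch shows the kind of code that a purely pattern-based rule might flag as SQL injection, even though the caller-supplied value cannot reach the query unvalidated. The function name, table, and allowlist are hypothetical and chosen only for illustration; the example is not taken from Datadog's rule set or from Bits AI's output.

```python
import sqlite3

# Hypothetical allowlist of column names that callers may sort by.
ALLOWED_SORT_COLUMNS = {"name", "created_at"}


def list_user_names(conn: sqlite3.Connection, sort_by: str) -> list[str]:
    """Return user names ordered by a caller-supplied column."""
    # Validation step: anything outside the allowlist is rejected here,
    # so only a known-safe identifier can reach the query below.
    if sort_by not in ALLOWED_SORT_COLUMNS:
        raise ValueError(f"unsupported sort column: {sort_by!r}")

    # A rule that matches "string interpolation into SQL" may flag this line.
    # Column names cannot be bound as query parameters, and the check above
    # means the interpolated value is one of two fixed strings.
    query = f"SELECT name FROM users ORDER BY {sort_by}"
    return [row[0] for row in conn.execute(query)]
```

A reviewer who follows `sort_by` from the parameter to the query can see that an input such as `"name; DROP TABLE users"` raises `ValueError` before any SQL is built. That is the sort of surrounding context a single-line pattern match does not capture.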

This reasoning capability enables LLMs to filter out findings that appear risky but cannot be meaningfully exploited. That said, the model’s effectiveness still depends on the quality of the underlying static analyzer and its rules. Without strong initial detection, the model has nothing reliable to evaluate.

Testing methodology and evaluation

To validate Bits AI’s performance, we framed the problem as a classification task: given a finding, can an LLM determine whether it is more likely to be a true positive or a false positive? To answer this, we designed experiments around the OWASP Benchmark, a publicly available and widely used dataset that provides a framework for assessing the accuracy of static analysis tools. By working with this controlled dataset, we could measure accuracy and iterate quickly without being blocked by the ambiguity that comes with real-world code.
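As a rough illustration of what scoring such a classification task can look like, the sketch below compares predicted labels with labeled benchmark findings. The `EvalResult` type, the `evaluate` function, and the metric names are assumptions made for this example; the post does not describe the actual evaluation harness.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    real_kept_rate: float       # share of real vulnerabilities classified as true positives
    noise_filtered_rate: float  # share of benign findings classified as false positives
    accuracy: float             # share of all findings classified correctly


def evaluate(ground_truth: list[bool], predicted: list[bool]) -> EvalResult:
    """Compare predicted labels with benchmark labels.

    In both lists, True means "real vulnerability" and False means
    "false positive".
    """
    if len(ground_truth) != len(predicted):
        raise ValueError("label lists must have the same length")

    pairs = list(zip(ground_truth, predicted))
    real = [p for g, p in pairs if g]        # predictions for real vulnerabilities
    benign = [p for g, p in pairs if not g]  # predictions for benign findings

    real_kept = sum(1 for p in real if p)
    noise_filtered = sum(1 for p in benign if not p)

    return EvalResult(
        real_kept_rate=real_kept / len(real) if real else 0.0,
        noise_filtered_rate=noise_filtered / len(benign) if benign else 0.0,
        accuracy=(real_kept + noise_filtered) / len(pairs) if pairs else 0.0,
    )
```

Reporting the two rates separately, rather than accuracy alone, keeps visible whether a configuration is missing real issues or simply failing to filter noise.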

To explore the space, our engineers tested a wide range of parameters and prompt designs, including the system prompt, model type, temperature, top-p sampling, and the language of the benchmark examples. We tuned parameters sequentially, starting with those that had the greatest impact and ending with those that had the least.
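Sequential tuning of this kind can be sketched as a one-parameter-at-a-time search: fix everything at a default, sweep the most influential parameter, keep the best value, then move on to the next. The function below is a minimal sketch under that assumption. The parameter names, candidate values, and the `evaluate` callback are placeholders, not the configuration used in the experiments.

```python
from typing import Any, Callable


def tune_sequentially(
    search_space: dict[str, list[Any]],
    defaults: dict[str, Any],
    evaluate: Callable[[dict[str, Any]], float],
) -> tuple[dict[str, Any], float]:
    """Tune one parameter at a time, keeping earlier choices fixed.

    `search_space` is assumed to be ordered from the most to the least
    influential parameter (dicts preserve insertion order).
    `evaluate` returns a score where higher is better.
    """
    best = dict(defaults)
    best_score = evaluate(best)

    for name, candidates in search_space.items():
        for value in candidates:
            # Change only the parameter under study; keep the rest as-is.
            trial = {**best, name: value}
            score = evaluate(trial)
            if score > best_score:
                best, best_score = trial, score

    return best, best_score
```

This approach evaluates far fewer combinations than a full grid search, at the cost of potentially missing interactions between parameters, which is one reason the ordering by impact matters.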

A trade-off between prompts shaped the final design. Prompts optimized for catching true positives tended to let more false positives through, while prompts tuned for filtering false positives risked missing real issues. The configuration we selected balanced these competing pressures, achieving strong false positive and true positive detection rates.
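One simple way to express "balanced" in code is to rank each prompt variant by the weaker of its two rates, so that a variant cannot win by excelling at one goal while neglecting the other. The selection rule below is an assumption made for illustration; the post does not state which criterion was used, and the numbers are invented.

```python
def pick_balanced(results: dict[str, tuple[float, float]]) -> str:
    """Return the prompt variant with the best balance of the two rates.

    Each value is (real_kept_rate, noise_filtered_rate). Variants are
    ranked by their weaker rate, with the sum of both rates as a tie-break.
    """
    if not results:
        raise ValueError("no results to compare")
    return max(results, key=lambda name: (min(results[name]), sum(results[name])))


# Made-up rates for illustration only; these are not measured results.
candidates = {
    "favor_true_positives": (0.98, 0.60),
    "favor_filtering": (0.70, 0.97),
    "balanced": (0.90, 0.88),
}
print(pick_balanced(candidates))
```

With these illustrative inputs, the variant named `balanced` is selected because its weaker rate (0.88) is higher than the weaker rate of either specialized variant.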

Continuing investment in AI for Code Security

At Datadog, we continue to invest in AI-driven innovation within Code Security. We recognize that LLMs are reshaping how teams detect, prioritize, and remediate vulnerabilities. Beyond filtering noise and managing backlogs, these models have the potential to identify new classes of security issues and fix them faster.

False positive filtering is the first step in this direction. By reducing the impact of false alarms and highlighting likely true vulnerabilities, this capability improves signal quality and provides better context for security and development teams. With clearer signals and improved visibility, teams can spend less time on distractions and more time addressing real security issues.

Getting started with false positive filtering

False positive filtering is available today for Datadog Code Security customers. To learn how to enable and use false positive filtering, visit the Datadog Code Security documentation. Or, if you’re new to Datadog, you can sign up for a 14-day free trial.