Jean-Philippe Bempel

Scott Gerring

Nicholas Thomson

Will Roper

Historically, developers have relied on languages like C and C++ for explicit control over memory allocation and deallocation. This approach can yield very low overhead and tight control over performance, but it also increases complexity and risk (e.g., memory leaks, dangling pointers, and double frees). This often results in runtime issues that are difficult to diagnose, which can become a drag on team velocity.

Java introduced a managed runtime with the garbage collector (GC) as a solution to these risks. The GC automatically reclaims memory used by objects that are no longer reachable or needed by the application, freeing the developer of the need to explicitly manage memory. While this increases developer productivity and provides built-in memory safety, it comes with tradeoffs. Choosing a GC runtime means giving up some degree of predictability and control in exchange for ease of use and safety. The GC also introduces runtime overhead and can result in non-deterministic pauses, which may not be acceptable for workloads like high-frequency trading or industrial control system loops.

In this post, we’ll cover:

- A refresher on how Java GC works

- Signs that you may need to tune GC settings

- The major GC algorithms available in OpenJDK

- Guidance on choosing the right GC for your application

A refresher on Java GC

Java introduced GC to eliminate many of the risks of manual memory management, such as dangling pointers or memory leaks. At its core, GC reclaims memory by freeing objects that are no longer referenced by an application. But to understand how GC decisions impact performance, two concepts are key: generations and compaction.

Generational GC

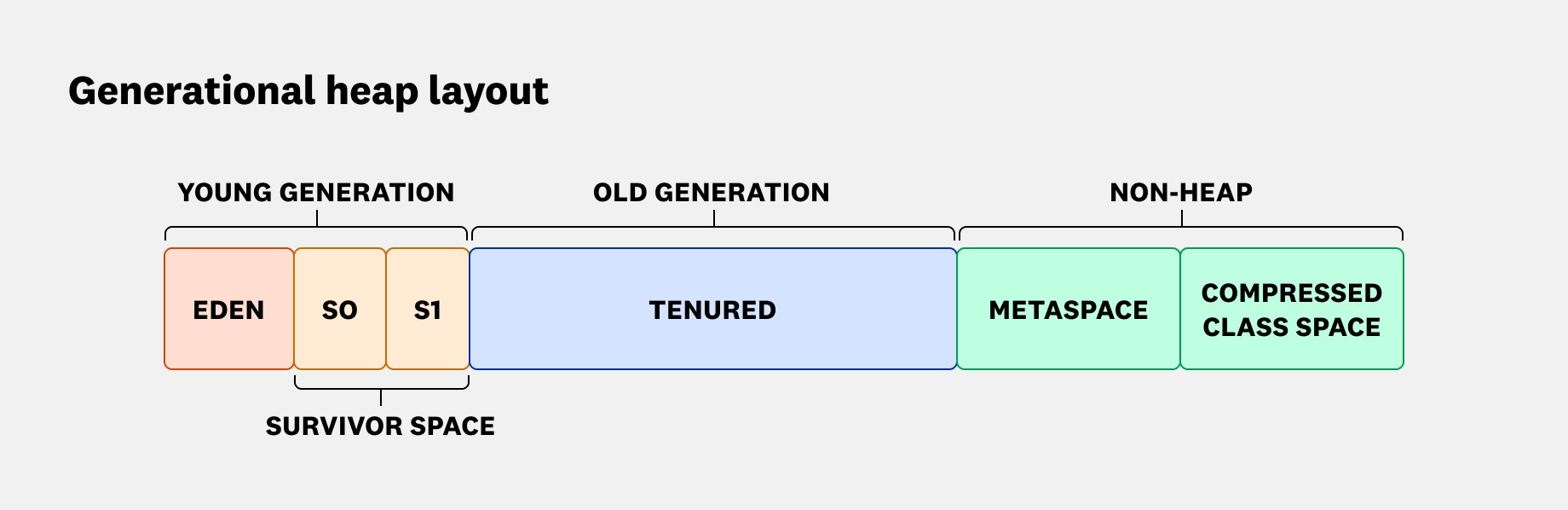

Most modern JVMs use a generational GC strategy. The heap (where all objects in Java are stored) is split into a young generation, where new objects are allocated, and an old generation, where longer-lived objects are promoted. This approach follows the generational hypothesis: most objects die young. By focusing frequent, lightweight collections on the young generation, the JVM reduces pause times and overhead.

In a generational GC, the young generation is typically divided into multiple regions to optimize allocation and collection efficiency. One common layout includes the Eden space—where new objects are initially allocated—and two Survivor spaces, which help manage objects that live long enough to survive initial collections. A related concept is the semi-space model used by some collectors, which alternates between two equally sized memory regions, copying live objects from one to the other and reclaiming the rest in a single sweep. These strategies reduce fragmentation and make allocation fast by enabling simple pointer bumping, especially in the Eden space, which is frequently cleared during minor GCs.
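To make the copying idea concrete, here is a minimal sketch of a semi-space style collection in plain Java. It is a toy model, not how HotSpot implements it: the `Obj` class, the `collect` method, and the use of lists to stand in for memory spaces are all assumptions made for illustration. Live objects reachable from the roots are copied into a fresh to-space, and anything left behind is treated as garbage.

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

// Toy model of a copying collection (hypothetical names throughout).
final class SemiSpaceSketch {

    /** Hypothetical heap object: a name plus outgoing references. */
    static final class Obj {
        final String name;
        final List<Obj> refs = new ArrayList<>();

        Obj(String name) {
            this.name = name;
        }
    }

    /**
     * Copies every object reachable from the (mutable) root list into a new
     * to-space and returns it. Roots and references are rewritten to point
     * at the copies; unreachable objects are simply never copied.
     */
    static List<Obj> collect(List<Obj> roots) {
        List<Obj> toSpace = new ArrayList<>();
        Map<Obj, Obj> forwarding = new IdentityHashMap<>();

        // Copy the roots first.
        for (int i = 0; i < roots.size(); i++) {
            roots.set(i, copy(roots.get(i), toSpace, forwarding));
        }
        // Scan the to-space like a work queue: each copied object still holds
        // references into the from-space until they are fixed up here.
        for (int scan = 0; scan < toSpace.size(); scan++) {
            Obj copied = toSpace.get(scan);
            for (int i = 0; i < copied.refs.size(); i++) {
                copied.refs.set(i, copy(copied.refs.get(i), toSpace, forwarding));
            }
        }
        return toSpace;
    }

    /** Copies an object once; later requests return the same copy. */
    private static Obj copy(Obj original, List<Obj> toSpace, Map<Obj, Obj> forwarding) {
        Obj existing = forwarding.get(original);
        if (existing != null) {
            return existing;
        }
        Obj clone = new Obj(original.name);
        clone.refs.addAll(original.refs); // fixed up during the scan
        forwarding.put(original, clone);
        toSpace.add(clone);
        return clone;
    }
}
```

Because the cost of `collect` depends only on the number of live objects, a space that is mostly garbage (as Eden usually is) can be cleared cheaply.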

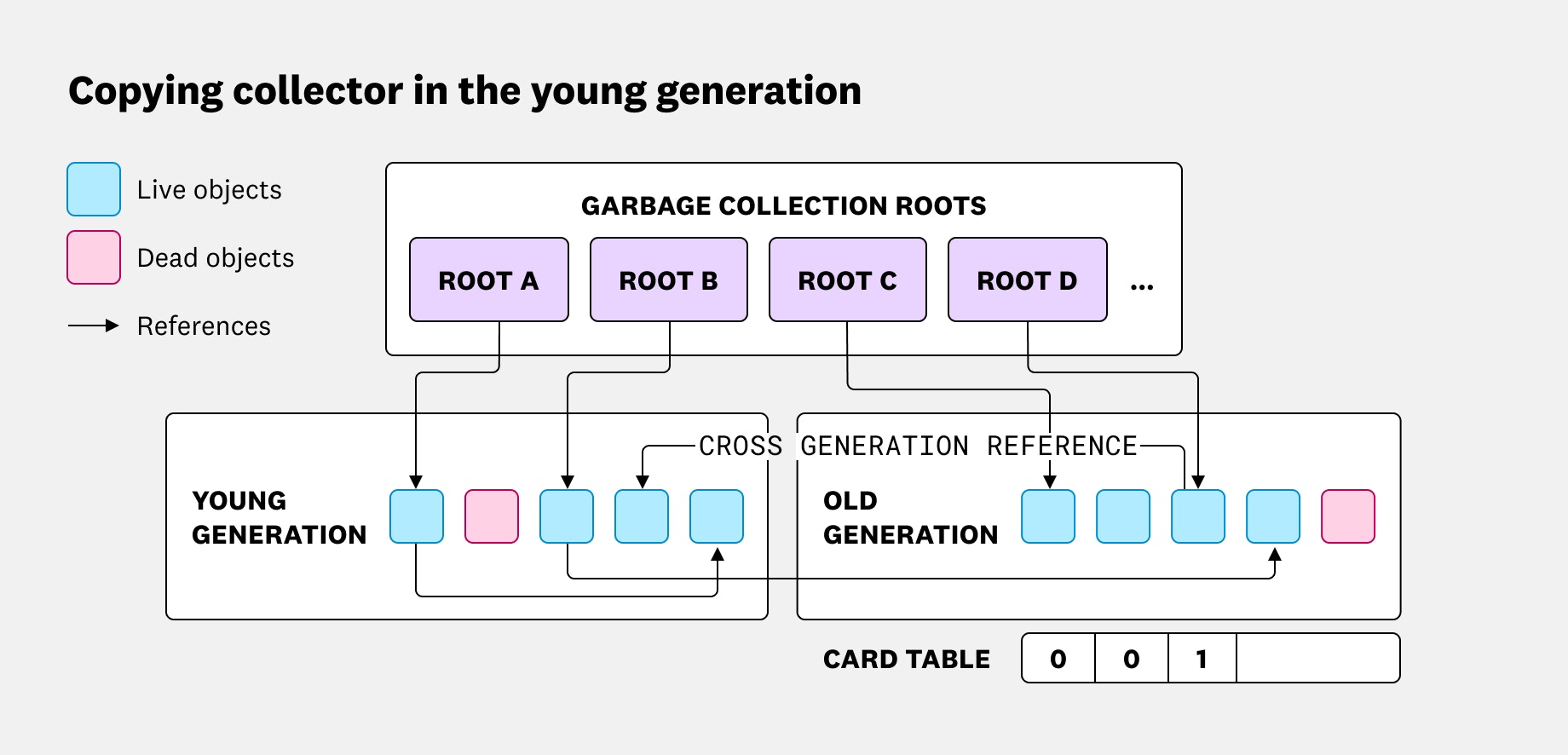

Generational garbage collection becomes more efficient by narrowing the scope of GC work to just the young generation during most cycles. This allows the JVM to run separate GC strategies for young and old generations. Young generation GCs are more frequent and lightweight; since most newly allocated objects die quickly, the marking phase is fast, and survivors can be copied to a new space while treating the rest as free memory. This is typically implemented using a copying collector, which only copies live objects and reclaims dead memory immediately, resulting in compact, defragmented regions.

However, simply scanning the young generation isn’t enough. Some references may point from the old generation into the young generation (called old-to-young references), and these must be tracked to ensure correctness. To handle this, generational garbage collectors use remembered sets: metadata structures that record which parts of the old generation may contain pointers to young objects. A write barrier runs on reference updates to maintain these sets efficiently. Together, these mechanisms ensure that young generation collections remain both accurate and fast, without requiring a full heap scan.
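One common way to build a remembered set is a card table, where the old generation is divided into small cards and a write barrier marks a card dirty when a reference stored in it may point to a young object. The sketch below is a simplified model under stated assumptions: the class and method names are hypothetical, addresses are plain integer offsets, and the barrier filters on whether the target is young (real collectors differ in what and when they mark).

```java
import java.util.ArrayList;
import java.util.List;

// Toy card table acting as a remembered set (hypothetical names).
final class CardTableSketch {
    // Bytes of old generation covered by one card (assumed value for the sketch).
    static final int CARD_SIZE = 512;

    private final boolean[] dirty;

    CardTableSketch(int oldGenBytes) {
        // Round up so the last partial card is covered too.
        dirty = new boolean[(oldGenBytes + CARD_SIZE - 1) / CARD_SIZE];
    }

    /**
     * Write barrier: invoked when a reference field located at
     * {@code fieldAddress} in the old generation is updated.
     */
    void onReferenceStore(int fieldAddress, boolean targetIsYoung) {
        if (targetIsYoung) {
            dirty[fieldAddress / CARD_SIZE] = true;
        }
    }

    /** Cards that a young collection must scan for old-to-young references. */
    List<Integer> dirtyCards() {
        List<Integer> result = new ArrayList<>();
        for (int i = 0; i < dirty.length; i++) {
            if (dirty[i]) {
                result.add(i);
            }
        }
        return result;
    }

    /** Called after a young collection has processed the dirty cards. */
    void clear() {
        java.util.Arrays.fill(dirty, false);
    }
}
```

With this structure, a young collection scans only the dirty cards instead of the whole old generation, which is what keeps minor GCs cheap.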

Memory compaction

All of the OpenJDK collectors covered in this post implement a compacting strategy, which rearranges live objects in memory to reduce fragmentation. During a GC cycle, surviving objects are moved closer together, either within the same region or by copying them to another one (like a Survivor space in generational collectors). At the end of a GC cycle, this compaction frees up a contiguous block of memory, improving heap utilization.

One of the main advantages of this approach is that it enables bump pointer allocation, a fast allocation technique where the JVM simply advances a pointer by the size of the new object to reserve heap memory for it.

In multi-threaded scenarios, this efficiency is preserved by using Thread-Local Allocation Buffers (TLABs): small memory regions assigned per thread to enable lock-free allocation. Another benefit of using a compacting GC is improved memory locality, as tightly packed objects are more likely to reside in the CPU cache, which can boost application performance. While copying objects incurs some cost, this overhead is often offset in generational GCs where short-lived objects are collected more frequently, and long-lived ones are compacted less often.
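As a rough illustration of bump pointer allocation, the sketch below models a single allocation buffer as a range of integer offsets. The class and method names are hypothetical, and the real JVM does this in native code on raw memory. Giving each thread its own instance would loosely mirror how a TLAB avoids locking.

```java
// Toy bump-pointer allocator over a fixed-size buffer (hypothetical names).
final class BumpAllocatorSketch {
    private final int capacity;
    private int top = 0; // offset of the next free byte

    BumpAllocatorSketch(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
    }

    /**
     * Reserves {@code size} bytes and returns the start offset of the new
     * object, or -1 if the buffer cannot fit it (a real JVM would then
     * request a new buffer or trigger a collection).
     */
    int allocate(int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        if (size > capacity - top) {
            return -1;
        }
        int start = top;
        top += size; // the "bump": allocation is a bounds check plus an addition
        return start;
    }

    /** After a copying collection empties the buffer, allocation restarts at zero. */
    void reset() {
        top = 0;
    }

    int used() {
        return top;
    }
}
```

This only works because compaction leaves free memory contiguous; a fragmented heap would need free lists and a search for a large enough hole.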

Signs you may need to tune the GC

For many applications, the JVM’s default GC settings are sufficient, but it’s important to understand when the default GC is causing a performance bottleneck. These bottlenecks typically appear in two forms:

- Latency sensitivity: GC pauses, especially in older collectors, can hurt response times. In latency-sensitive applications, a full GC event that lands on the request path can push tail latency (for example, p99) past its target and cause missed deadlines. To check whether the default settings are responsible, look at GC logs or APM tools for long or frequent pauses, especially from full GC events. In low-latency applications such as trading systems, real-time analytics, or multiplayer gaming, those pauses can be unacceptable.

- Throughput pressure: In high-throughput applications with large heaps and high allocation rates (e.g., data processing pipelines), the GC might spend too much time collecting. Applications with high allocation rates may spend more than 10% of CPU time in GC, which slows down the application’s own work. One indication that GC is limiting throughput is that your application gets slower even though CPU usage stays high, while GC logs show frequent and long collections.
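For a quick first check from inside the application, the standard `java.lang.management` API exposes cumulative collection counts and times. The sketch below (class and method names are hypothetical) compares accumulated collection time against JVM uptime. Treat the result as a rough proxy only: it is wall-clock based rather than a measure of CPU time, and for concurrent collectors the reported time may include work that ran alongside the application rather than pauses.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Rough GC overhead estimate from the platform MXBeans (hypothetical class name).
final class GcOverheadSketch {

    /** Accumulated collection time divided by JVM uptime; 0.0 if unavailable. */
    static double gcTimeFraction() {
        long gcMillis = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long time = gc.getCollectionTime(); // -1 when the collector does not report it
            if (time > 0) {
                gcMillis += time;
            }
        }
        long uptimeMillis = ManagementFactory.getRuntimeMXBean().getUptime();
        return uptimeMillis <= 0 ? 0.0 : (double) gcMillis / uptimeMillis;
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.printf("%s: %d collections, %d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
        System.out.printf("Approximate share of uptime in GC: %.2f%%%n",
                gcTimeFraction() * 100);
    }
}
```

GC logs and APM tooling remain the more reliable source for individual pause durations, since these counters only expose totals.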

Java GCs

OpenJDK offers several collectors, each suited to different workloads:

Serial GC

The Serial GC is the simplest garbage collector available in the JVM (excluding Epsilon) and is designed for environments with limited CPU and memory resources. It is a stop-the-world collector, meaning that all application threads are paused during garbage collection phases. As its name implies, it uses a single thread to perform all GC work, which makes it ideal for small heaps and low-core-count systems.

By default, the JVM selects Serial GC when it detects fewer than two CPUs or less than about 2 GB of memory, conditions where the overhead of coordinating multiple threads would outweigh any performance benefit. In these cases, using a single thread avoids synchronization costs and handles garbage collection efficiently.
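Because this selection depends on what the JVM detects, which can differ from the host in a container with CPU or memory limits, it can help to print what the running JVM actually sees. The following sketch uses only standard APIs; the class and method names are hypothetical, and the collector names it prints are implementation-specific and vary by collector and JDK version.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Reports the resources and collectors visible to the running JVM (hypothetical class name).
final class JvmGcInfoSketch {

    static String describe() {
        Runtime runtime = Runtime.getRuntime();
        List<String> collectors = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            collectors.add(gc.getName());
        }
        // maxMemory() is the maximum heap the JVM will attempt to use.
        long maxHeapMiB = runtime.maxMemory() / (1024 * 1024);
        return "cpus=" + runtime.availableProcessors()
                + ", maxHeapMiB=" + maxHeapMiB
                + ", collectors=" + collectors;
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

If the output shows a single CPU in a deployment you expected to be multi-core, the JVM may have fallen back to Serial GC without any flag being set.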

Despite its simplicity, Serial GC is still a generational collector, dividing the heap into young and old generations. It performs minor GCs for the young generation and full GCs for the entire heap, providing a basic but effective memory management strategy for constrained workloads.

Parallel GC

Also known as the Throughput Collector, the Parallel GC works similarly to Serial GC in terms of its basic algorithm but parallelizes most of its phases. By using multiple threads to perform garbage collection, it makes more efficient use of available CPU cores, significantly improving throughput and reducing pause times compared to single-threaded collectors.

The performance of Parallel GC scales with the number of cores. More CPU cores mean more parallel work during GC cycles, which helps maintain throughput even in larger heaps. It’s well suited for applications where maximizing overall throughput is more important than minimizing individual pause times.

G1 GC

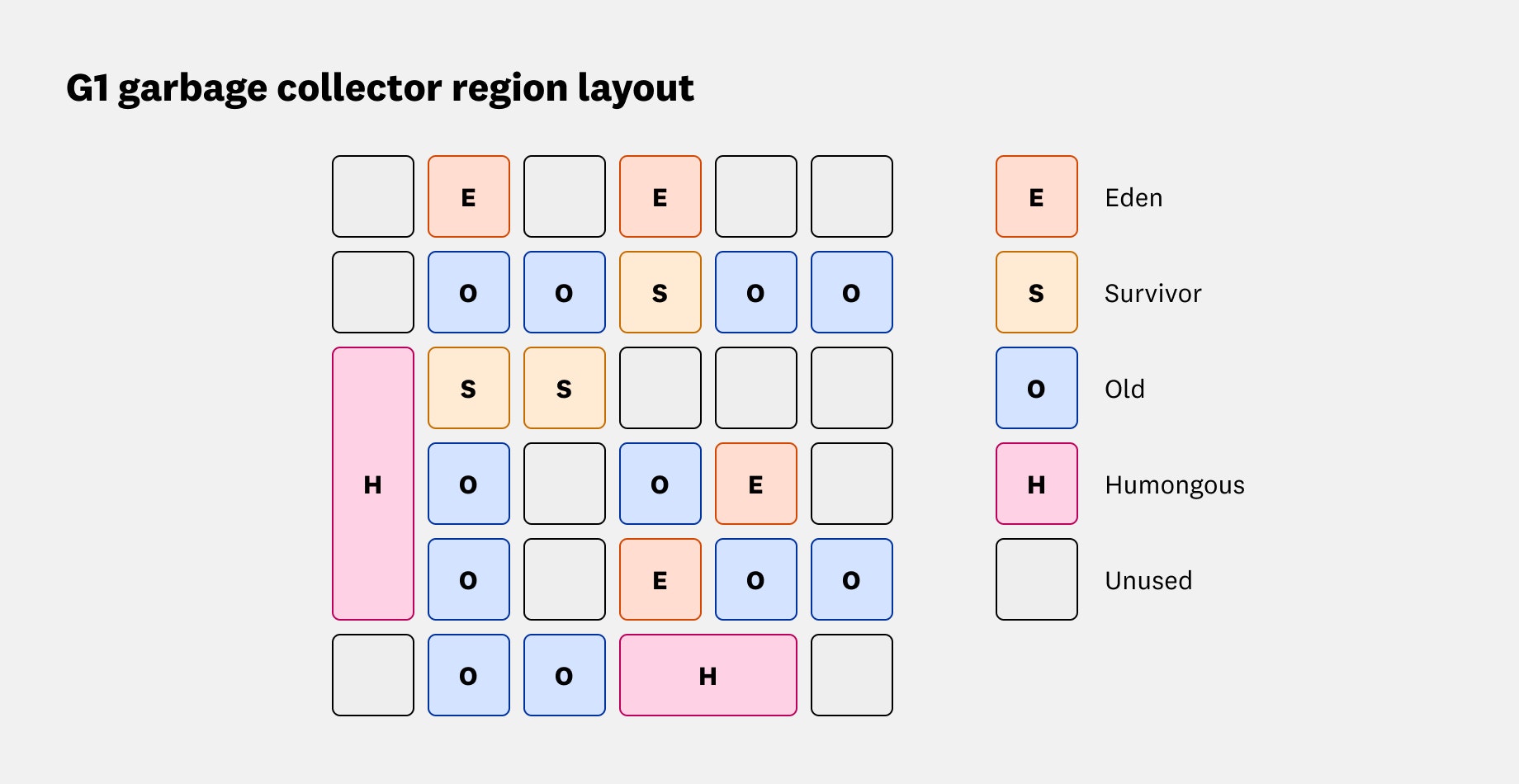

The G1 (Garbage-First) garbage collector is a sophisticated generational GC designed to improve heap management and offer predictable pause times. Unlike traditional collectors that divide the heap into a few large contiguous generations, G1 partitions the Java heap into many equally sized regions, each of which can belong to the Eden, Survivor, Old, or Humongous spaces. This region-based design provides greater flexibility in managing memory and enables incremental collection of parts of the heap. In practice, only the old generation is collected partially, while the young generation is always collected as a whole.

G1’s core strategy is to prioritize regions with the most reclaimable garbage. After a marking phase, G1 estimates how much garbage is in each region and selects those with the highest return on investment to evacuate, minimizing the number of live objects that need to be copied. This approach helps the collector maintain predictable pause times by focusing work where it’s most effective. It also supports a pause time target, though it’s not a strict guarantee; if the collector overshoots, it may dynamically adjust the balance between young and old generation sizes.
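The selection step can be sketched as a greedy choice over per-region estimates. This is a simplified model, not G1's actual heuristics: the `Region` class, the efficiency formula, and the idea of expressing the pause budget as a number of live bytes to copy are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Toy "garbage first" region selection (hypothetical names and cost model).
final class CollectionSetSketch {

    /** Hypothetical per-region estimate produced by a marking phase. */
    static final class Region {
        final int id;
        final long liveBytes;    // must be copied if the region is evacuated
        final long garbageBytes; // reclaimed if the region is evacuated

        Region(int id, long liveBytes, long garbageBytes) {
            this.id = id;
            this.liveBytes = liveBytes;
            this.garbageBytes = garbageBytes;
        }
    }

    /** Garbage reclaimed per byte copied; +1 avoids division by zero. */
    static double efficiency(Region region) {
        return (double) region.garbageBytes / (region.liveBytes + 1);
    }

    /**
     * Picks the most efficient regions first, stopping a region from being
     * added once copying its live data would exceed the remaining budget.
     */
    static List<Region> choose(List<Region> candidates, long liveBytesBudget) {
        List<Region> sorted = new ArrayList<>(candidates);
        Comparator<Region> byEfficiency = Comparator.comparingDouble(CollectionSetSketch::efficiency);
        sorted.sort(byEfficiency.reversed());

        List<Region> chosen = new ArrayList<>();
        long remaining = liveBytesBudget;
        for (Region region : sorted) {
            if (region.garbageBytes == 0) {
                continue; // nothing to gain from evacuating this region
            }
            if (region.liveBytes <= remaining) {
                chosen.add(region);
                remaining -= region.liveBytes;
            }
        }
        return chosen;
    }
}
```

In this model the budget plays the role of the pause time target: a tighter budget means fewer regions are evacuated per pause, so less garbage is reclaimed each time.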

While G1 includes some concurrent phases (such as marking the old generation), the majority of its operations—including object evacuation—remain stop-the-world. As a result, G1 is better classified as a throughput-oriented collector with bounded latency, rather than a true low-latency GC. Configuring G1 with pause time goals under 50 ms is generally unrealistic.

Since JDK 9, G1 has been the default GC on systems with at least two CPUs and roughly 2 GB of memory, offering a balanced option for applications that require reasonable throughput with predictable GC behavior.

Shenandoah GC

Shenandoah is a low-latency garbage collector introduced by Red Hat for OpenJDK and designed to keep pause times consistently under 10 ms. To achieve this, most GC operations—including object evacuation—run concurrently with application threads. Because moving objects during runtime is complex, Shenandoah uses read barriers to ensure references remain valid even if an object has been relocated. These barriers are implemented during reference loads and benefit from JIT compiler optimizations, allowing a single reference check to serve multiple field accesses. If a thread encounters an object that hasn’t yet been moved, it can complete the evacuation itself and effectively redistribute GC work to application threads for reduced coordination overhead and smooth pause behavior. While this approach sacrifices some throughput, it provides strong latency guarantees, making Shenandoah a good fit for web servers and user-facing applications where responsiveness is critical.
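The barrier idea can be illustrated with a small model in which each object carries a forwarding reference. This is a sketch under stated assumptions, not Shenandoah's implementation: the `Obj` class, the `inCollectionSet` flag, and the `load` method are hypothetical names, and a real barrier is emitted by the JIT compiler rather than written as a method call.

```java
import java.util.concurrent.atomic.AtomicReference;

// Toy model of a barrier on reference loads with self-service evacuation (hypothetical names).
final class LoadBarrierSketch {

    static final class Obj {
        final int payload;
        final boolean inCollectionSet; // true if the object's region is being evacuated
        final AtomicReference<Obj> forwardee = new AtomicReference<>();

        Obj(int payload, boolean inCollectionSet) {
            this.payload = payload;
            this.inCollectionSet = inCollectionSet;
        }
    }

    /**
     * Applied to every reference load: returns the current location of the
     * object, evacuating it first if no thread has done so yet.
     */
    static Obj load(Obj ref) {
        if (ref == null || !ref.inCollectionSet) {
            return ref; // fast path: the object is not being moved
        }
        Obj forwarded = ref.forwardee.get();
        if (forwarded != null) {
            return forwarded; // already evacuated by the GC or another thread
        }
        // The loading thread performs the copy itself. If several threads
        // race, the compare-and-set ensures exactly one copy wins.
        Obj copy = new Obj(ref.payload, false);
        return ref.forwardee.compareAndSet(null, copy) ? copy : ref.forwardee.get();
    }
}
```

The extra check on each load is the throughput cost mentioned above; the payoff is that the application never has to stop and wait for evacuation to finish.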

ZGC

ZGC is a low-latency garbage collector developed by Oracle for OpenJDK and designed with goals similar to Shenandoah: minimizing pause times by performing nearly all GC work, including compaction, concurrently with application threads. Like Shenandoah, it uses read barriers to maintain reference consistency while objects are being relocated in the heap. JDK 21 added a generational mode to ZGC, which further improves performance by separating short-lived and long-lived objects. This generational version became the default in JDK 23, offering better throughput while maintaining ZGC’s hallmark low-pause behavior.

Choosing the right GC

With multiple GCs available in the JVM, it’s natural to ask: Which one is right for my workload? While the JVM provides a sensible default with G1 GC in most recent versions, the best choice depends on the nature of your application; specifically, whether you prioritize throughput or low latency.

We can broadly categorize JVM workloads into two types:

- Throughput-oriented: Focused on processing as much data as possible in a given timeframe, where occasional GC pauses are acceptable.

- Latency-sensitive: Focused on delivering consistent, fast response times, where even short GC pauses can impact user experience.

Throughput-oriented workloads

Throughput-oriented workloads include batch jobs, ETL pipelines, data ingestion services, and other backend tasks that are not user-facing. In these scenarios, maximizing throughput is more important than minimizing pauses. It’s acceptable for the application to pause briefly, as long as it finishes the job efficiently.

- Serial GC: A simple, stop-the-world, single-threaded collector ideal for small heaps (less than 2 GB) and low-CPU environments (like containers with fewer than two CPUs). The Serial GC avoids multi-threaded overhead, making it efficient in constrained setups.

- Parallel GC: A stop-the-world, multi-threaded collector that works well for applications with larger heaps and multiple CPU cores, such as Spark or Kafka jobs. The Parallel GC offers higher throughput than Serial GC and is often the best choice when pause times aren’t a concern.

- G1 GC (Garbage-First): Combines parallel and incremental collection, making it a good fit for large heaps (more than 32 GB) or when you want to reduce full GC frequency without much tuning. The G1 GC splits the heap into regions and collects the ones with the most garbage first.

Latency-sensitive workloads

Latency-sensitive applications, such as APIs, microservices, or real-time systems, need consistently fast response times, often within 100 ms end to end. In these systems, even brief pauses from GC can introduce jitter or timeouts.

- Shenandoah GC: Runs most GC phases concurrently with application threads, including object evacuation. Shenandoah works on 32-bit and 64-bit systems, offering flexibility in platform support. Shenandoah is best for workloads that value predictable latency over raw throughput.

- ZGC: Supports heap sizes from a few GB to terabytes, and is ideal for large-scale, latency-sensitive apps. JDK 21 introduced a generational mode for better performance, which became the default in JDK 23. ZGC works only on 64-bit systems and is particularly good at quickly returning memory to the OS, even before a GC cycle completes.

| Type | Best for | Pros | Notes |
|---|---|---|---|
| Serial | Small, single-threaded apps | Simple, low overhead | Best for < 2 GB heap, < 2 CPUs |
| Parallel | Batch/processing pipelines | High throughput | Accepts longer pause times |
| G1 GC | General purpose | Balanced throughput and pause time | Default in most JVMs |
| Shenandoah | Low-latency apps | Very low pause time, 32-bit support | Slightly lower throughput |
| ZGC | High-scale, low-latency apps | Ultra-low pauses, large heaps | 64-bit only, generational mode default in JDK 23 |

Choosing the right GC is about balancing your system’s priorities. If you’re running high-throughput data jobs, Parallel or G1 GC may serve you best. If your goal is ultra-low latency for user-facing workloads, Shenandoah or ZGC will give you tighter control over pause times.
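As a starting point, the guidance above can be encoded as a small decision helper. The sketch below is deliberately simplistic and only restates this post's rules of thumb: the class, enum, and method names are hypothetical, and the thresholds are the ones quoted earlier. The returned strings are the standard HotSpot flags for selecting each collector. Note that Shenandoah (`-XX:+UseShenandoahGC`) is an alternative to ZGC for the low-latency case and is not included in every JDK build.

```java
// Rule-of-thumb collector suggestion (hypothetical helper, not an official API).
final class GcChoiceSketch {

    enum Priority { THROUGHPUT, BALANCED, LOW_LATENCY }

    /**
     * Suggests a collector flag from the CPU count, heap size, and the
     * workload's main priority. Thresholds mirror the guidance in this post.
     */
    static String suggestFlag(int cpus, long heapMiB, Priority priority) {
        // Constrained environments: multi-threaded GC overhead is not worth it.
        if (cpus < 2 || heapMiB < 2048) {
            return "-XX:+UseSerialGC";
        }
        switch (priority) {
            case THROUGHPUT:
                return "-XX:+UseParallelGC";
            case LOW_LATENCY:
                return "-XX:+UseZGC"; // or -XX:+UseShenandoahGC where available
            default:
                return "-XX:+UseG1GC";
        }
    }

    public static void main(String[] args) {
        System.out.println(suggestFlag(8, 16 * 1024, Priority.LOW_LATENCY));
    }
}
```

Any suggestion like this should be validated against GC logs and latency metrics from a representative workload before it is rolled out.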

Get deeper visibility into the performance of your Java GCs with Datadog

In this post, we’ve explored the different types of Java GCs and provided context for which application types would be best suited by each type of GC. For more information, check out our posts on tuning Java applications for containers, Java memory management, and monitoring the Java runtime.

Alternatively, check out our documentation on monitoring the Java runtime. If you’re new to Datadog and would like to monitor the health and performance of your Java GCs, sign up for a free trial to get started.