Mahashree Rajendran

Mohammad Jama

As organizations scale their cloud operations, object storage costs and performance issues often escalate. With petabytes of data, thousands of buckets, and millions of objects—especially from AI and data-intensive workloads—minor inefficiencies can quickly drive up expenses and degrade service quality.

Datadog Storage Management helps you see exactly how your cloud storage is used, reduce unnecessary costs, optimize performance, and improve operational efficiency across Amazon S3, Google Cloud Storage, and Azure Blob Storage. Storage Management now provides prefix-level metrics for Amazon S3 across three key use cases: cost optimization, performance optimization, and operational efficiency.

In this post, we’ll explore how new metrics for Amazon S3 in Storage Management help you:

- Implement and manage Amazon S3 life cycle and retention policies

- Quickly identify opportunities to reduce Amazon S3 storage costs

- Identify high-traffic Amazon S3 buckets and resolve bottlenecks

Implement and manage Amazon S3 life cycle and retention policies

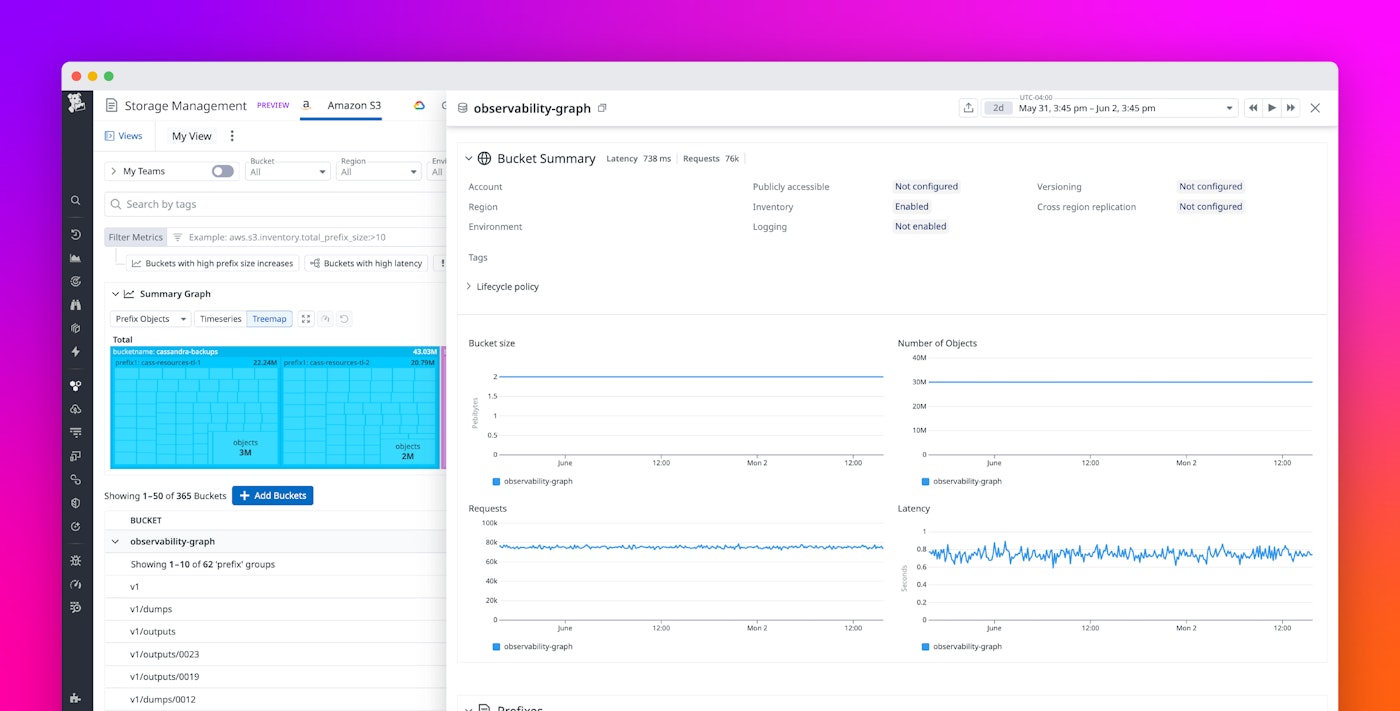

Features like versioning in Amazon S3 buckets provide valuable protection against accidental deletions and overwrites. But without proper life cycle management, version accumulation can drive up storage costs and management complexity. Storage Management surfaces bucket-level life cycle and retention policies directly within the Datadog platform, so you can understand existing configurations and identify opportunities for improvement, all in one place. This centralized view helps you enforce organization-wide storage policies without switching between multiple AWS console pages.

For example, Storage Management might identify a versioned bucket accumulating excessive non-current versions without appropriate life cycle rules. The detailed metrics for this bucket would show the impact on storage consumption, along with current life cycle policy settings.

With this visibility into your Amazon S3 bucket configurations, you can implement appropriate life cycle rules to manage non-current versions, moving them to lower-cost storage tiers or expiring them after appropriate retention periods.
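As a rough illustration, the following Python sketch builds the kind of life cycle rule described above: non-current versions move to S3 Glacier Instant Retrieval (GLACIER_IR) 30 days after they become non-current and are deleted after 365 days. The dictionary follows the shape of the S3 lifecycle configuration API; the function name, rule ID, day counts, and storage class are placeholder assumptions to replace with your own retention requirements.

```python
import json


def noncurrent_version_rule(rule_id, transition_days, expiration_days,
                            storage_class="GLACIER_IR"):
    """Build one S3 lifecycle rule that tiers, then expires, noncurrent versions.

    Days are counted from the moment a version becomes noncurrent.
    """
    if expiration_days <= transition_days:
        raise ValueError("expiration_days must be greater than transition_days")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": ""},  # empty prefix: apply to every object in the bucket
        "Status": "Enabled",
        "NoncurrentVersionTransitions": [
            {"NoncurrentDays": transition_days, "StorageClass": storage_class}
        ],
        "NoncurrentVersionExpiration": {"NoncurrentDays": expiration_days},
    }


# Placeholder retention values; adjust to your own policy.
config = {"Rules": [noncurrent_version_rule("tier-then-expire-old-versions", 30, 365)]}
print(json.dumps(config, indent=2))
```

You could apply this configuration with the boto3 S3 client's put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=config). That call replaces the bucket's entire existing lifecycle configuration, so merge any rules you want to keep into the Rules list first.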

Quickly identify opportunities to reduce Amazon S3 storage costs

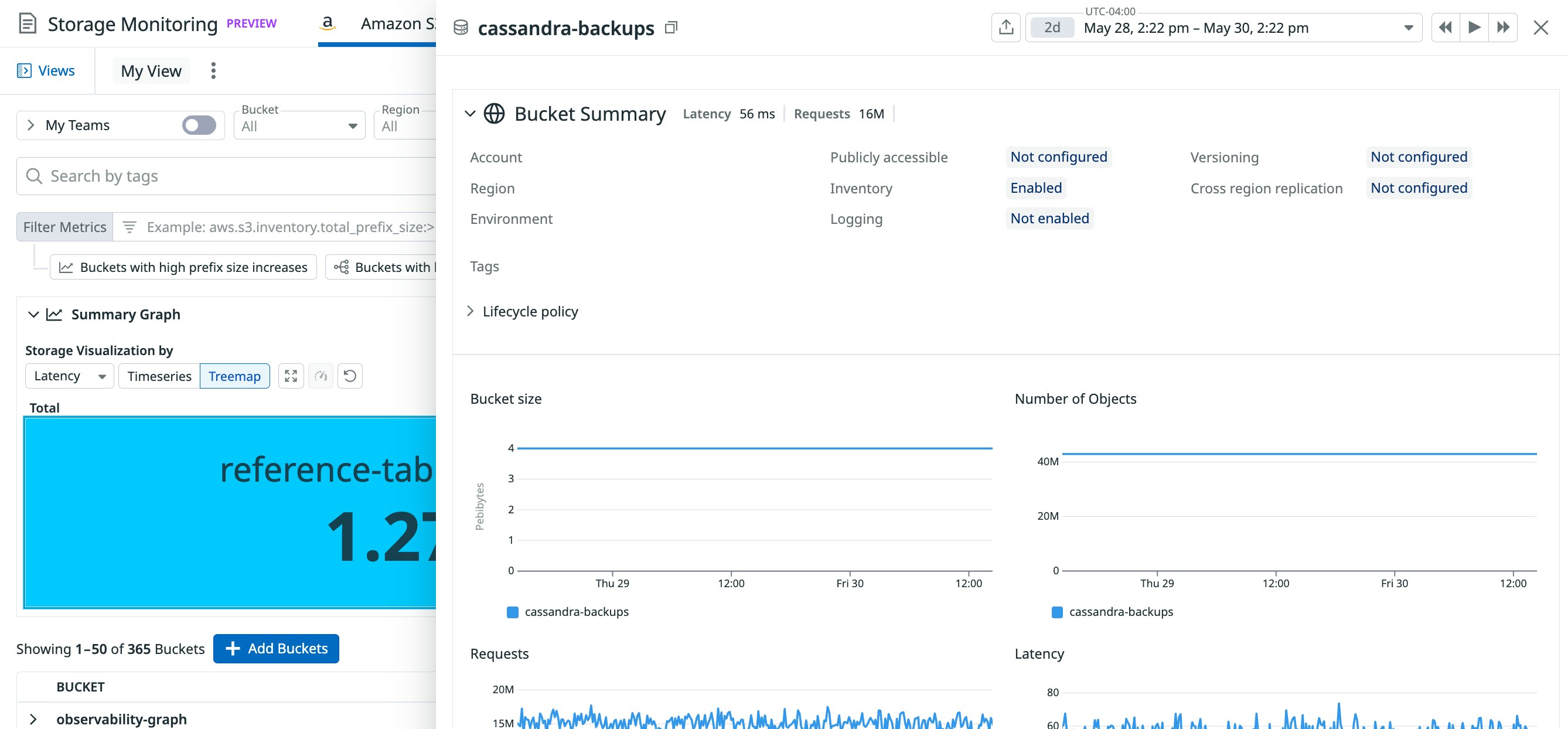

Organizations often store data in the Amazon S3 Standard storage class by default, but this tier isn’t cost-effective for infrequently accessed data. Identifying which data can be moved to lower-cost storage tiers typically requires deep analysis of access patterns and object metadata, especially at the prefix level.

Storage Management’s new request metrics by prefix for Amazon S3 enable you to visualize exactly the prefixes that are actively used and, conversely, those that contain rarely accessed data. Combined with object count and age metrics, you can quickly identify opportunities to transition infrequently accessed data to lower-cost storage classes.
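The filtering step described above can be sketched in a few lines of Python. This is a minimal illustration that assumes you have already gathered, for each prefix, its total size, its request count over the last 30 days, and its median object age. The PrefixStats type, its field names, the thresholds, and the sample prefixes are all hypothetical; they are not Datadog metric names.

```python
from dataclasses import dataclass

GIB = 1 << 30
TIB = 1 << 40


@dataclass
class PrefixStats:
    """Hypothetical per-prefix summary assembled from storage metrics."""
    prefix: str
    size_bytes: int
    requests_last_30d: int
    median_object_age_days: int


def cold_prefixes(stats, min_size_bytes=TIB, min_age_days=30):
    """Return large, old prefixes with no requests in 30 days, largest first."""
    candidates = [
        s for s in stats
        if s.requests_last_30d == 0
        and s.size_bytes >= min_size_bytes
        and s.median_object_age_days >= min_age_days
    ]
    return sorted(candidates, key=lambda s: s.size_bytes, reverse=True)


# Hypothetical sample data.
stats = [
    PrefixStats("datasets/finetune-v1/", 3 * TIB, 0, 150),
    PrefixStats("datasets/eval/", 2 * TIB, 4_200, 150),
    PrefixStats("scratch/", 50 * GIB, 0, 10),
]
for s in cold_prefixes(stats):
    print(s.prefix, s.size_bytes // TIB, "TiB")  # prints "datasets/finetune-v1/ 3 TiB"
```

Sorting by size puts the prefixes with the largest potential savings first. The thresholds are a judgment call: colder storage classes add retrieval charges, so a prefix that is read even occasionally may cost more after a transition.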

Let’s say you have a workload that fine-tunes an LLM. Storage Management can show that your training dataset prefixes contain terabytes of data that were heavily accessed during initial training but haven’t been retrieved since the model was deployed five months ago. In the example screenshot above, a specific AI dataset prefix is selected, and the side panel shows that the objects in that prefix haven’t been accessed in over a month.

These metrics help you identify exactly which data would benefit from transitioning to S3 Glacier Flexible Retrieval or S3 Glacier Instant Retrieval, so you can implement appropriate life cycle policies through the Amazon S3 console or AWS CLI.
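For the CLI route, one approach is to generate the lifecycle configuration as a JSON file. The Python sketch below writes one rule per selected prefix that transitions current objects to S3 Glacier Instant Retrieval 30 days after creation. The prefix, day count, rule IDs, and the lifecycle.json file name are illustrative assumptions.

```python
import json


def prefix_transition_config(prefixes, days, storage_class="GLACIER_IR"):
    """Build an S3 lifecycle configuration with one transition rule per prefix."""
    return {
        "Rules": [
            {
                "ID": f"transition-{i}",
                "Filter": {"Prefix": prefix},  # rule applies only to keys under this prefix
                "Status": "Enabled",
                # Days are counted from object creation, not from last access.
                "Transitions": [{"Days": days, "StorageClass": storage_class}],
            }
            for i, prefix in enumerate(prefixes)
        ]
    }


config = prefix_transition_config(["datasets/finetune-v1/"], days=30)
with open("lifecycle.json", "w") as f:
    json.dump(config, f, indent=2)
```

You could then apply the file with aws s3api put-bucket-lifecycle-configuration --bucket <your-bucket> --lifecycle-configuration file://lifecycle.json, keeping in mind that the command replaces any lifecycle configuration already on the bucket. Because lifecycle transitions are based on object age rather than access history, the access metrics are what tell you a prefix is safe to move; S3 Intelligent-Tiering is the alternative when you want tiering driven by access patterns instead.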

Identify high-traffic Amazon S3 buckets and resolve bottlenecks

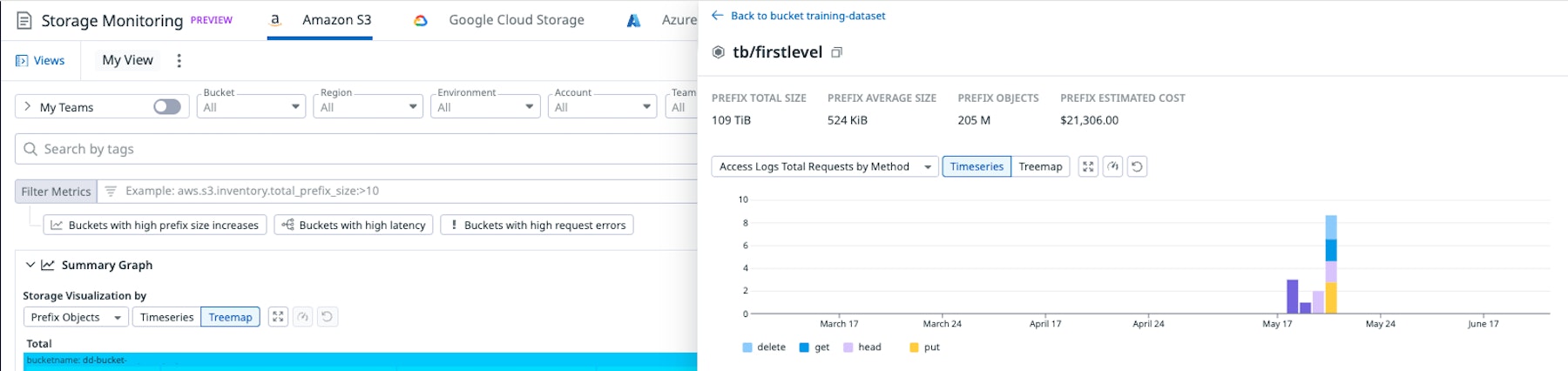

Performance issues such as throttling or high latency are often caused by hot prefixes: paths that receive more requests than Amazon S3 can efficiently handle. AWS documents a baseline of at least 3,500 PUT/COPY/POST/DELETE or 5,500 GET/HEAD requests per second per partitioned prefix. Pinpointing these hotspots requires granular visibility that most teams don’t have, and Storage Management’s new latency by prefix metrics show exactly where performance bottlenecks are occurring.
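To make "more requests than Amazon S3 can efficiently handle" concrete, the following Python sketch compares each prefix's peak request rate with the per-prefix baselines AWS documents (the constants at the top). The peak rates, prefix names, and the 80% headroom threshold are hypothetical inputs. Because S3 partitions scale over time, crossing the threshold signals risk rather than guaranteed throttling.

```python
# Baseline request rates AWS documents per partitioned S3 prefix (requests/second).
READ_BASELINE_RPS = 5_500   # GET/HEAD
WRITE_BASELINE_RPS = 3_500  # PUT/COPY/POST/DELETE


def hot_prefixes(peak_rps, headroom=0.8):
    """Flag prefixes whose peak load uses at least `headroom` of either baseline.

    peak_rps maps prefix -> (peak read requests/s, peak write requests/s).
    Returns prefix -> utilization of the busier request type, rounded to 2 places.
    """
    flagged = {}
    for prefix, (reads, writes) in peak_rps.items():
        utilization = max(reads / READ_BASELINE_RPS, writes / WRITE_BASELINE_RPS)
        if utilization >= headroom:
            flagged[prefix] = round(utilization, 2)
    return flagged


# Hypothetical peak rates observed during a processing window.
peaks = {
    "media/raw/": (5_000, 200),
    "media/renders/": (1_000, 3_200),
    "media/archive/": (50, 10),
}
print(hot_prefixes(peaks))  # {'media/raw/': 0.91, 'media/renders/': 0.91}
```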

For example, Storage Management might show that a specific prefix in your media processing bucket is experiencing high latency during peak processing times. Using the Summary graph, you can identify high-traffic buckets and then zero in on the corresponding prefix. In the screenshot above, the treemap breaks down latency by prefix within the storage-monitoring-test-saleel bucket. The largest blocks represent prefixes with the highest average latency, such as tb and tb/firstlevel.

These detailed metrics can provide clues to help you implement design patterns that will better distribute the workload, such as using randomized prefixes to avoid throttling.
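A common way to apply the randomized-prefix idea is to derive a short, deterministic shard prefix from each key, so that objects spread across several prefixes while any object's full key can still be recomputed from its logical name. The Python sketch below uses a SHA-256 hash and 16 shards; the function name, shard count, and key format are assumptions to adapt to your workload.

```python
import hashlib
from collections import Counter


def sharded_key(key: str, num_shards: int = 16) -> str:
    """Prepend a deterministic hex shard prefix derived from a hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % num_shards
    return f"{shard:02x}/{key}"


# Spread 1,000 hypothetical frame uploads across shard prefixes.
keys = [sharded_key(f"media/frame-{i:05d}.jpg") for i in range(1000)]
print(Counter(k.split("/", 1)[0] for k in keys))
```

The trade-off is that listing every object for one logical path now means listing each shard prefix, so this pattern suits write-heavy or point-lookup workloads better than ones that depend on sequential listing.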

Get granular visibility into storage usage and patterns

Storage Management helps you understand exactly how your Amazon S3 storage is being used, with granular metrics for optimizing costs, performance, and operational efficiency. Each metric provides clear visibility into your storage usage patterns and configurations so you can make informed decisions.

Storage Management for Amazon S3 is available today, along with object size metrics for Google Cloud Storage and Azure Blob Storage. Request-related metrics for Google Cloud Storage and Azure Blob Storage are coming soon. To learn more, see the Storage Management documentation. And if you’re not yet a Datadog user, you can get started with a 14-day free trial.