Micah Kim

Will Roper

Today, CISOs and security teams face a rapidly growing volume of logs from a variety of sources, all arriving in different formats. They write and maintain detection rules, build pipelines, and investigate threats across multiple environments and applications. Efficiently maintaining their security posture across multiple products and data formats has become increasingly challenging. Spending time normalizing data is especially frustrating for these teams, who are often smaller, may lack vendor-specific expertise, and operate on tighter budgets.

To address these security data management challenges, more than 120 leading organizations collaborated to develop the Open Cybersecurity Schema Framework (OCSF). OCSF is an open source, vendor-neutral schema designed to standardize event formats for security data, streamlining migrations between platforms and improving cross-tool interoperability. Launched in 2022, OCSF establishes a common taxonomy that simplifies the correlation of Tactics, Techniques, and Procedures (TTPs) and enables modular schemas. This helps teams stop bad actors, derive actionable threat insights, and identify Indicators of Compromise (IoCs).

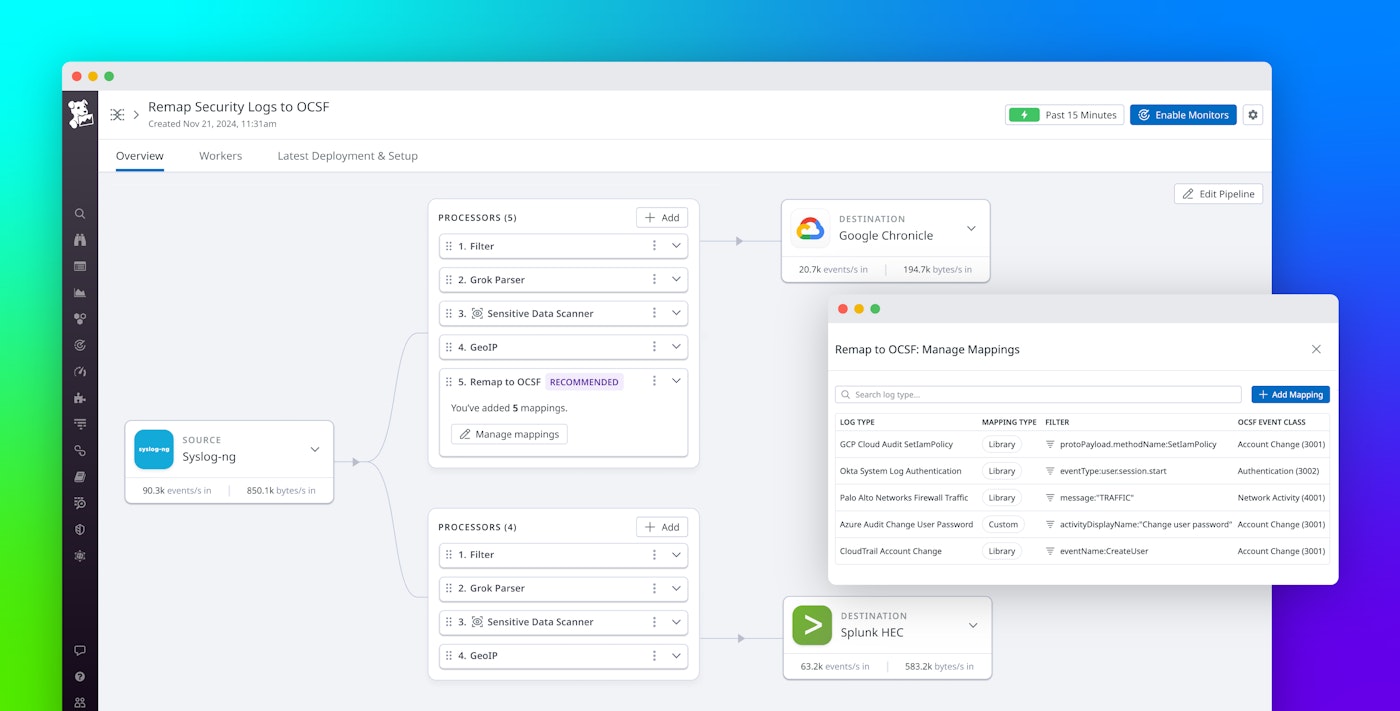

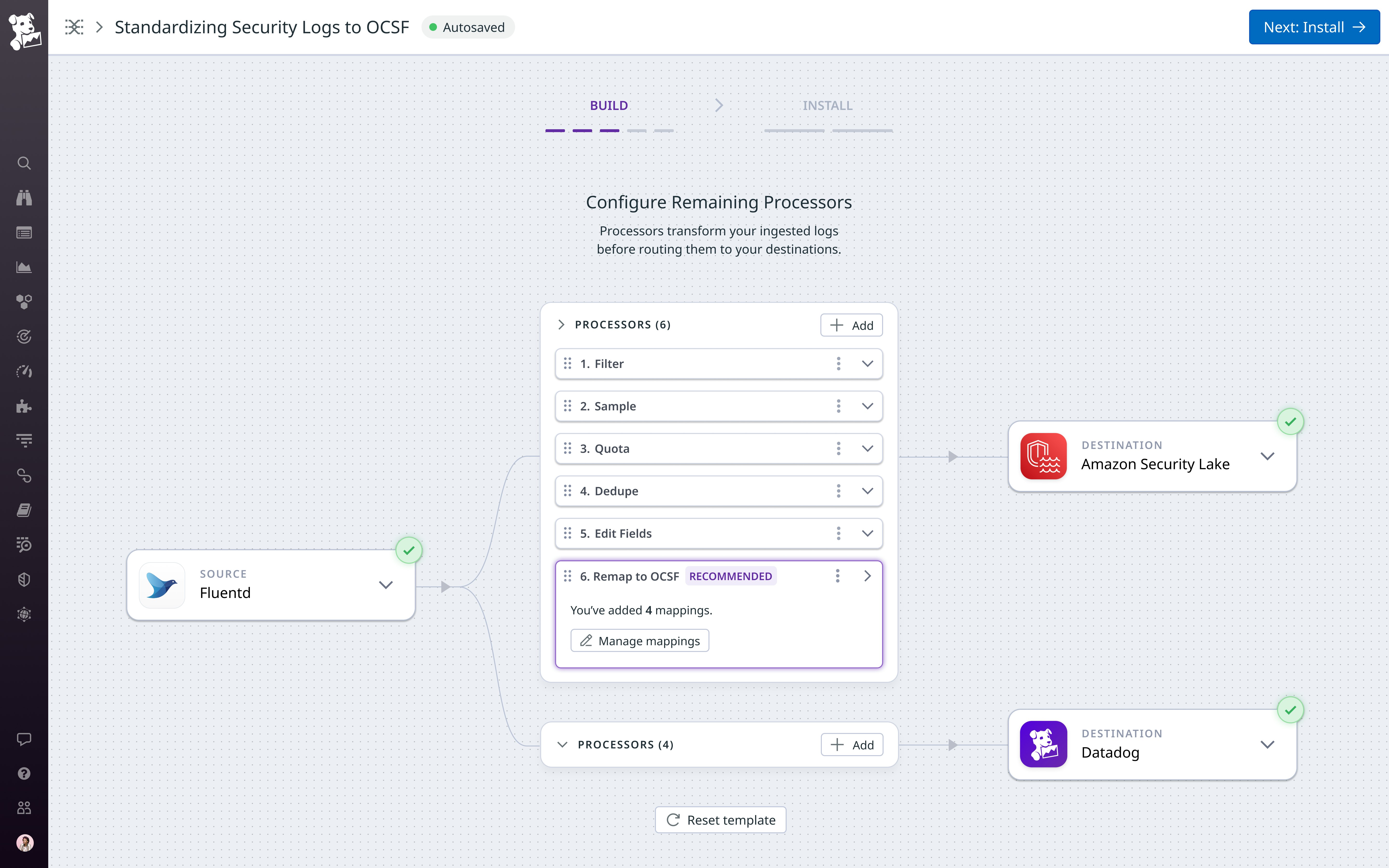

Datadog Observability Pipelines enables you to aggregate, process, and route logs to multiple destinations. This gives organizations control over their log volume and data flow, so they can enrich logs, direct traffic to different destinations, extract metrics, scan for sensitive data, and more. Observability Pipelines now supports on-stream transformation to OCSF, so you can send remapped logs to your preferred security destinations.

In this post, we’ll cover how Observability Pipelines enables you to easily remap any log—from any vendor or source—to OCSF and standardize your security data.

Automatically remap logs from popular vendors to OCSF and send them to your desired security destinations or data lakes

Logs come from various sources and applications, and teams enrich, transform, and route them to different destinations based on their use cases and budgets. For security teams, these logs often arrive in many different formats, and teams need high-quality, standardized logs with complete coverage as quickly as possible to protect against threats. Teams spend critical time and effort normalizing data before they can detect and investigate attacks. In-house data standardization is hard to maintain and requires ongoing attention to keep the data translated between security platforms consistent at scale.

Using Datadog Observability Pipelines to transform logs into OCSF format can help you standardize your security data on-stream to meet your taxonomy requirements and send it to security vendors such as Splunk, Datadog Cloud SIEM, Amazon Security Lake, Google SecOps (Chronicle), Microsoft Sentinel, SentinelOne, and CrowdStrike. Routing logs to Datadog Cloud SIEM provides intuitive, graph-based visualizations to surface actionable security insights across your cloud environments. Cloud SIEM also includes Content Packs with out-of-the-box resources for popular security integrations such as AWS CloudTrail, Okta, and Microsoft 365.

Once you select a supported log type, Observability Pipelines automatically remaps logs from sources including AWS, Google, Microsoft, Palo Alto Networks, Okta, GitHub, and more to OCSF format.

Mapping logs by hand is a complex process that diverts security teams' focus from threat detection and remediation. Observability Pipelines handles OCSF transformation on-stream and enables you to send your logs anywhere. Because Observability Pipelines is a universal log forwarder, you don't have to worry about vendor lock-in or rely on your security tool's ability to manage OCSF transformation.

For example, let's say you're a CISO at a financial services company specializing in insurance or healthcare. Your company uses Datadog for DevOps troubleshooting and a different solution for security, such as Splunk or Amazon Security Lake. Splitting logs across different products and mapping each log source to OCSF is time-consuming and costly, and it can result in taxonomy inconsistencies for your team. With Observability Pipelines, you can transform your data to OCSF before it leaves your environment, route your security logs to your desired destination, and keep your DevOps logs flowing to Datadog. Logs sent to Amazon Security Lake are automatically encoded in Parquet to meet AWS requirements.

Transform any log from any source to OCSF with custom mapping

Not all logs follow a standard format, especially those from internal applications, legacy systems, or niche vendors. Without a solution to normalize those logs, teams investing in OCSF are left with mismatched detection rules and lost coverage over their log data.

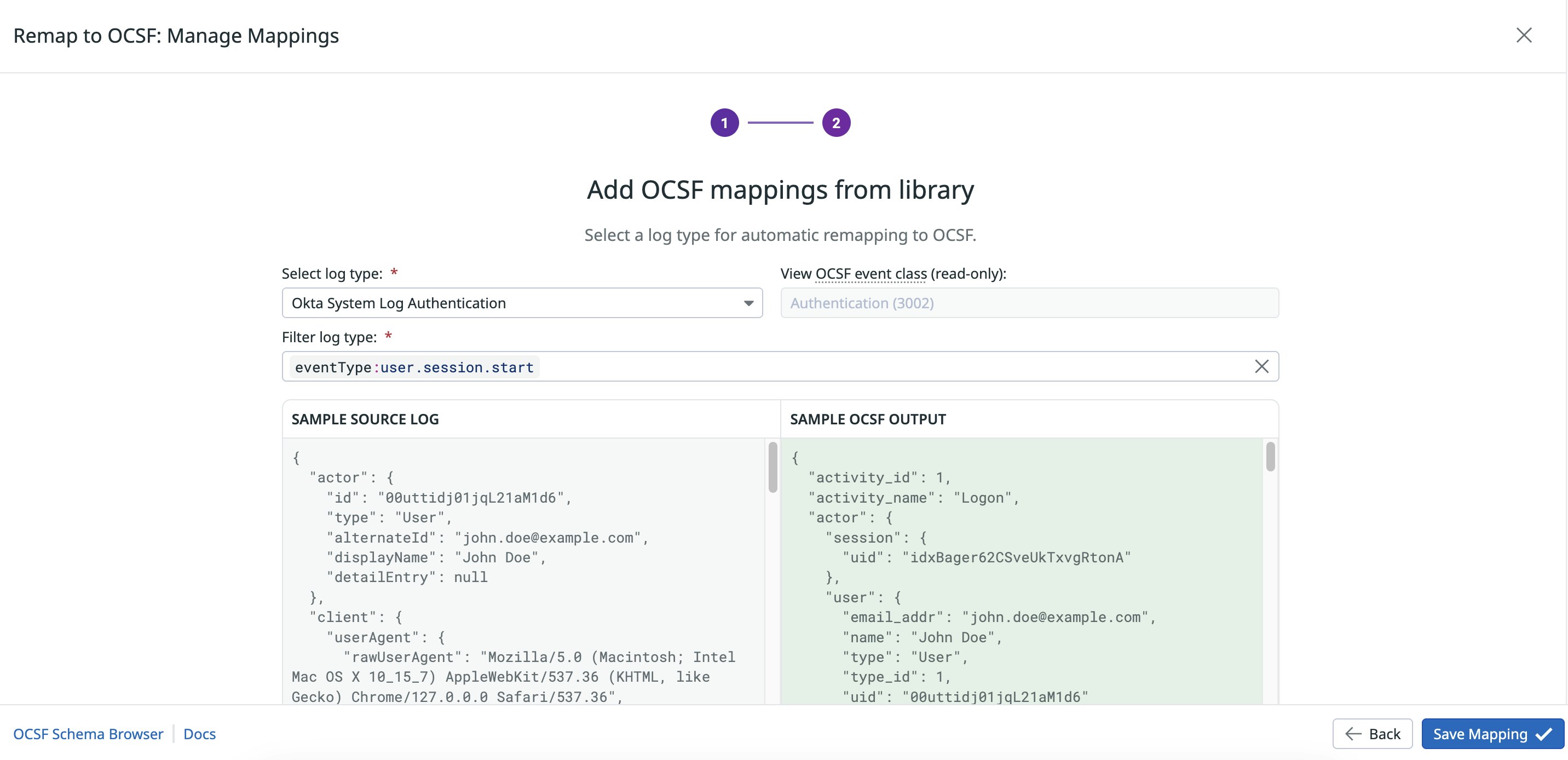

Alongside automatic remapping for popular vendors, Observability Pipelines supports custom mapping to OCSF, ensuring you can transform any log from any source or vendor. Whether you’re mapping internal audit logs, authentication events from smaller vendors, or application-specific security signals, custom mapping gives your security teams full control over schema design.

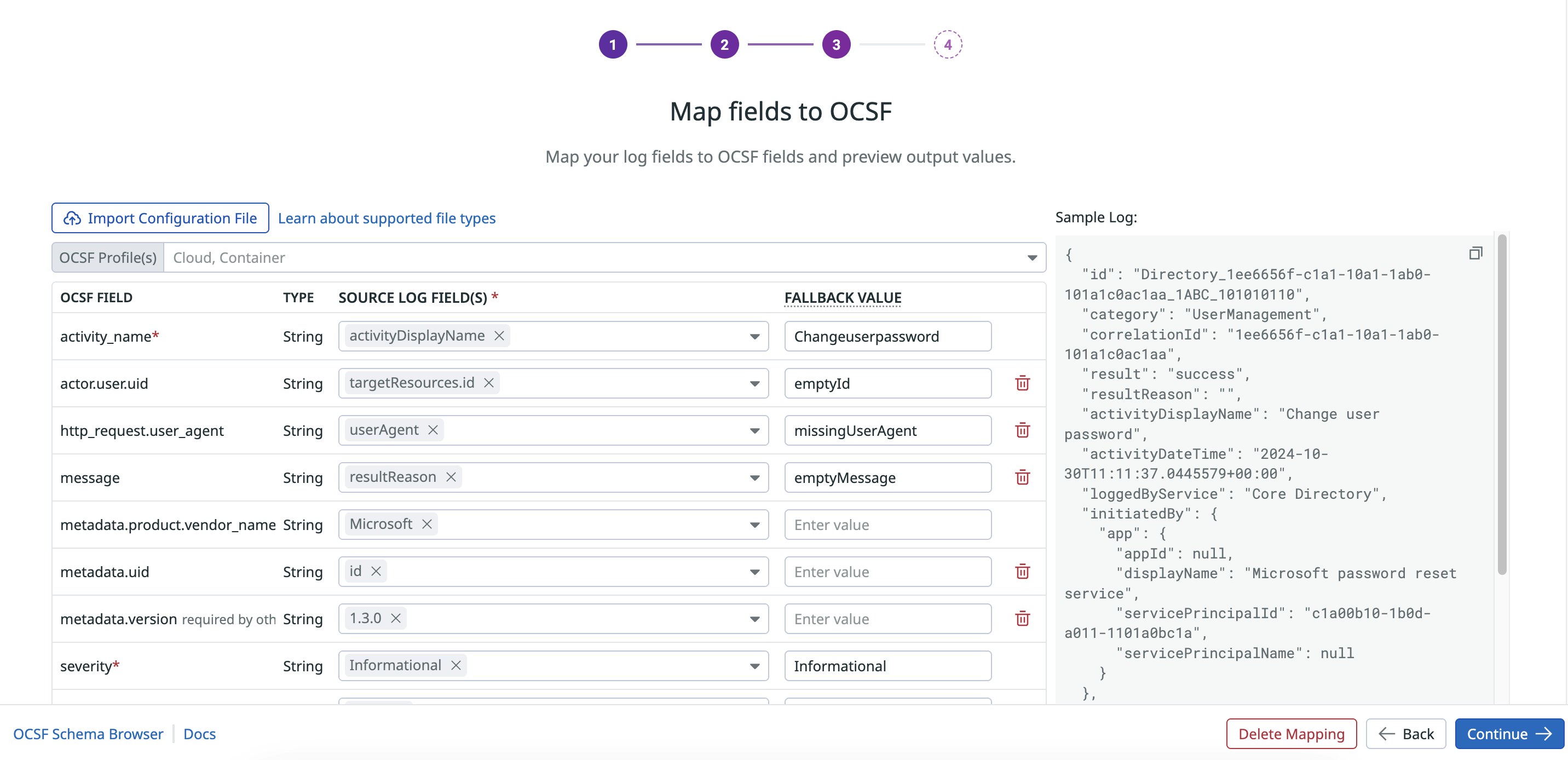

Once you select custom mapping, you can set the OCSF event class and category, input a sample log, and filter for the log to remap. Using the Observability Pipelines custom mapping UI, you can define the source field to OCSF field pairings and set fallback values in case a source field is missing from the log.
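As a rough illustration of what source-to-OCSF field pairings with fallbacks do, the Python sketch below copies values between dotted paths and substitutes a default when a source field is absent. The pairings, the `FALLBACKS` table, and the helper names are hypothetical and chosen for this example; they are not the Observability Pipelines configuration format.

```python
from typing import Any

# Hypothetical source-to-OCSF pairings (dotted paths), modeled on the
# Google Workspace sample log later in this post.
FIELD_PAIRINGS = {
    "protoPayload.authenticationInfo.principalEmail": "actor.user.email_addr",
    "protoPayload.methodName": "api.operation",
    "protoPayload.serviceName": "api.service.name",
    "insertId": "metadata.uid",
}

# Hypothetical fallback values, keyed by OCSF path, used when the source field is missing.
FALLBACKS = {"api.service.name": "unknown"}

_MISSING = object()  # sentinel that distinguishes "absent" from a stored None


def get_path(doc: dict, path: str) -> Any:
    """Follow a dotted path through nested dicts; return _MISSING if any key is absent."""
    current: Any = doc
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return _MISSING
        current = current[key]
    return current


def set_path(doc: dict, path: str, value: Any) -> None:
    """Set a value at a dotted path, creating intermediate dicts as needed."""
    *parents, leaf = path.split(".")
    current = doc
    for key in parents:
        current = current.setdefault(key, {})
    current[leaf] = value


def remap(source: dict) -> dict:
    """Apply FIELD_PAIRINGS to one log, using FALLBACKS for missing source fields."""
    out: dict = {}
    for src_path, ocsf_path in FIELD_PAIRINGS.items():
        value = get_path(source, src_path)
        if value is _MISSING:
            if ocsf_path not in FALLBACKS:
                continue  # no source value and no fallback: leave the OCSF field unset
            value = FALLBACKS[ocsf_path]
        set_path(out, ocsf_path, value)
    return out


# This log lacks protoPayload.serviceName, so the fallback value is used.
log = {
    "protoPayload": {
        "authenticationInfo": {"principalEmail": "darth-vader@example.net"},
        "methodName": "google.admin.AdminService.addPrivilege",
    },
    "insertId": "abc1abc1ab0a",
}
result = remap(log)
print(result["api"])
# {'operation': 'google.admin.AdminService.addPrivilege', 'service': {'name': 'unknown'}}
```

Splitting on "." keeps the sketch short, but it assumes source keys never contain literal dots; a real mapper would need an escaping rule for such keys.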

Finally, you can configure additional processing for fields like severity, whose source levels such as INFO, ERROR, or WARN correspond to OCSF values such as "Informational", "High", or "Medium", respectively. The screenshot below shows an example workflow to custom map an Azure Audit log using the "Remap to OCSF" processor:

Security teams already investing in building OCSF mappings internally can reuse and scale that work with Observability Pipelines’ “Bring Your Own (OCSF) Mapping” feature. To turn your mapping into our templatized JSON format, simply use the “Import Configuration File” button to upload your existing mapping logic, and Observability Pipelines will autofill the custom mapper with your source and OCSF field pairings in real time.
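The severity processing described above comes down to a lookup from source levels to OCSF's `severity_id` enum (0 Unknown, 1 Informational, 2 Low, 3 Medium, 4 High, 5 Critical, 6 Fatal, 99 Other). In the Python sketch below, the level-to-ID table is an assumption you would tune per source; NOTICE maps to 2 (Low) to match the Google Workspace example later in this post. Unrecognized levels fall back to 99 (Other), keeping the source's own label in `severity`.

```python
from typing import Optional

# OCSF severity_id enum values and their captions.
OCSF_SEVERITY = {
    0: "Unknown",
    1: "Informational",
    2: "Low",
    3: "Medium",
    4: "High",
    5: "Critical",
    6: "Fatal",
    99: "Other",
}

# Assumed mapping from source log levels to OCSF severity_id; adjust per source.
LEVEL_TO_SEVERITY_ID = {
    "INFO": 1,
    "NOTICE": 2,
    "WARN": 3,
    "WARNING": 3,
    "ERROR": 4,
    "CRITICAL": 5,
}


def normalize_severity(level: Optional[str]) -> dict:
    """Return OCSF severity_id and severity for a source log level."""
    if level is None:
        # No severity in the source log at all.
        return {"severity_id": 0, "severity": OCSF_SEVERITY[0]}
    severity_id = LEVEL_TO_SEVERITY_ID.get(level.upper())
    if severity_id is None:
        # 99 (Other): the level is not mapped, so keep the source's own label.
        return {"severity_id": 99, "severity": level}
    return {"severity_id": severity_id, "severity": OCSF_SEVERITY[severity_id]}


for level in ["INFO", "warn", "ERROR", "NOTICE", "DEBUG", None]:
    print(level, normalize_severity(level))
```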

How logs are remapped to OCSF

There are three major components to the OCSF model: Data Types, Attributes, and Arrays; Event Categories and Classes; and Profiles and Extensions.

Data Types, Attributes, and Arrays

- Data Types define the kinds of values that attributes in each event class can hold, including strings, numbers, booleans, arrays, and dictionaries.

- Attributes are individual data points, such as unique identifiers, timestamps, and values for standardizing objects. The attribute dictionary defines each attribute's name, type, and description once, so attributes are applied consistently across datasets.

- Objects and arrays encapsulate related attributes, with objects acting as organized sets and arrays representing lists of similar items.
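To make data types concrete, the Python sketch below checks a few attributes from the User Access Management (3005) output later in this post against OCSF type names such as `integer_t`, `timestamp_t`, and `string_t`. The `SCHEMA_SUBSET` table is a hand-written assumption covering only these fields, not the real attribute dictionary, and the function names are hypothetical.

```python
from typing import Any

# Hand-written subset of attribute -> OCSF type; a "[]" suffix marks an array.
SCHEMA_SUBSET = {
    "class_uid": "integer_t",
    "time": "timestamp_t",  # epoch milliseconds
    "class_name": "string_t",
    "actor": "object",
    "privileges": "string_t[]",
}


def matches(value: Any, ocsf_type: str) -> bool:
    """Return True if a Python value fits the given OCSF type name."""
    if ocsf_type.endswith("[]"):
        return isinstance(value, list) and all(matches(v, ocsf_type[:-2]) for v in value)
    if ocsf_type in ("integer_t", "timestamp_t"):
        # bool is a subclass of int in Python, so reject it explicitly.
        return isinstance(value, int) and not isinstance(value, bool)
    if ocsf_type == "string_t":
        return isinstance(value, str)
    if ocsf_type == "object":
        return isinstance(value, dict)
    raise ValueError(f"unsupported type {ocsf_type}")


def type_errors(event: dict) -> list:
    """List attributes present in the event whose values do not match SCHEMA_SUBSET."""
    return [
        f"{name}: expected {ocsf_type}, got {type(event[name]).__name__}"
        for name, ocsf_type in SCHEMA_SUBSET.items()
        if name in event and not matches(event[name], ocsf_type)
    ]


event = {
    "class_uid": 3005,
    "time": 1631438348647,
    "class_name": "User Access Management",
    "actor": {"user": {"name": "Darth Vader"}},
    "privileges": ["Calendar;CALENDAR_SETTINGS_READ"],
}
print(type_errors(event))  # []
print(type_errors({"time": "2021-09-12T09:19:08Z"}))  # ['time: expected timestamp_t, got str']
```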

Event Categories and Classes

- Categories are high-level groupings of classes that segment an event’s domain and make querying and reporting manageable. For example, an Okta User Account Lock log would map to the IAM (3) category.

- Classes are specific sets of attributes that determine an activity type. Each class has unique names and identifiers that correspond to specific log types. For example, a Palo Alto Networks Firewall Traffic log would map to the specific Network Activity (4001) event class.
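The category and class identifiers are related arithmetically in the core OCSF schema: a class UID is its category UID times 1000 plus a class number, and each event's `type_uid` is `class_uid * 100 + activity_id`. The Python sketch below (helper names are hypothetical) reproduces the values from the User Access Management example later in this post; it assumes core-schema classes only, since extensions may number classes differently.

```python
def category_uid(class_uid: int) -> int:
    """Core-schema convention: the thousands digit of class_uid is the category."""
    return class_uid // 1000


def type_uid(class_uid: int, activity_id: int) -> int:
    """OCSF type_uid combines the class and the activity within that class."""
    return class_uid * 100 + activity_id


def type_name(class_name: str, activity_name: str) -> str:
    """Human-readable counterpart of type_uid."""
    return f"{class_name}: {activity_name}"


print(category_uid(3005), category_uid(4001))  # 3 4
print(type_uid(3005, 1))  # 300501
print(type_name("User Access Management", "Assign Privileges"))
# User Access Management: Assign Privileges
```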

Profiles and Extensions

- Profiles are overlays that add specific attributes to event classes and objects. Profiles give granular details, such as malware detection for endpoint detection tools. For example, the Cloud profile adds cloud and API attributes to each applicable schema.

- Extensions enable the creation of new schemas, or modifications to existing schemas, for specific security tool needs.

Using a sample Google Workspace Admin Audit addPrivilege log and the OCSF schema for the User Access Management (3005) class, we map the source log’s attributes into OCSF’s required, recommended, and optional segments. In addition to remapping existing values, OCSF introduces its own enriched attributes into the final log.

```json
{
  "protoPayload": {
    "@type": "type.googleapis.com/google.cloud.audit.AuditLog",
    "authenticationInfo": {
      "principalEmail": "darth-vader@example.net"
    },
    "requestMetadata": {
      "callerIp": "10.10.10.10",
      "requestAttributes": {},
      "destinationAttributes": {}
    },
    "serviceName": "admin.googleapis.com",
    "methodName": "google.admin.AdminService.addPrivilege",
    "resourceName": "organizations/100000000000/delegatedAdminSettings",
    "metadata": {
      "@type": "type.googleapis.com/ccc_hosted_reporting.ActivityProto",
      "event": [
        {
          "parameter": [
            { "type": "TYPE_STRING", "label": "LABEL_OPTIONAL", "value": "tech_support", "name": "Darth Vader" },
            { "value": "10001100000000000", "type": "TYPE_STRING", "label": "LABEL_OPTIONAL", "name": "ROLE_ID" },
            { "type": "TYPE_STRING", "name": "PRIVILEGE_NAME", "label": "LABEL_OPTIONAL", "value": "Calendar;CALENDAR_SETTINGS_READ" }
          ],
          "eventType": "DELEGATED_ADMIN_SETTINGS",
          "eventName": "ADD_PRIVILEGE",
          "eventId": "7000b100"
        }
      ],
      "activityId": {
        "uniqQualifier": "-10000000000000",
        "timeUsec": "1000000000000"
      }
    }
  },
  "insertId": "abc1abc1ab0a",
  "resource": {
    "type": "audited_resource",
    "labels": {
      "service": "admin.googleapis.com",
      "method": "google.admin.AdminService.addPrivilege"
    }
  },
  "timestamp": "2021-09-12T09:19:08.647052Z",
  "severity": "NOTICE",
  "logName": "organizations/100000000000/logs/cloudaudit.googleapis.com%2Factivity",
  "receiveTimestamp": "2021-09-12T09:19:09.281725829Z"
}
```

OCSF-specific fields, such as class name (User Access Management), severity ID (2), and class ID (3005), are added to the transformed log. Raw log fields such as protoPayload.requestMetadata.callerIp and timestamp are mapped using OCSF's attribute dictionary to src_endpoint.ip (recommended) and time (required), respectively. Fields that don't align with the schema, such as protoPayload.@type, are placed into the unmapped section. Additional attributes, such as the actor and device objects, are injected into the log from the host profile during the mapping process.
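To see how these mappings play out on the sample, the Python sketch below maps a trimmed copy of the raw log to a handful of User Access Management (3005) fields. It hard-codes the class, the activity, and a one-entry severity table for this single event type, and the function names are hypothetical; it is a worked illustration of the mapping, not how Observability Pipelines implements it. The computed `time` and `type_uid` agree with the remapped output that follows.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Simplified severity table; only the value needed for this sample is listed.
SEVERITY = {"NOTICE": (2, "Low")}


def to_epoch_ms(rfc3339: str) -> int:
    """Convert a UTC timestamp like the sample's (6 fractional digits) to epoch milliseconds."""
    # fromisoformat() before Python 3.11 rejects a trailing "Z", so use an explicit offset.
    dt = datetime.fromisoformat(rfc3339.replace("Z", "+00:00"))
    # Integer timedelta division avoids float rounding.
    return (dt - EPOCH) // timedelta(milliseconds=1)


def remap_add_privilege(raw: dict) -> dict:
    """Map a Google Workspace addPrivilege audit log to a subset of OCSF class 3005."""
    proto = raw["protoPayload"]
    params = proto["metadata"]["event"][0]["parameter"]
    severity_id, severity = SEVERITY.get(raw["severity"], (0, "Unknown"))
    return {
        "category_uid": 3,
        "class_uid": 3005,
        "class_name": "User Access Management",
        "activity_id": 1,
        "activity_name": "Assign Privileges",
        "type_uid": 3005 * 100 + 1,
        "severity_id": severity_id,
        "severity": severity,
        "time": to_epoch_ms(raw["timestamp"]),
        "actor": {"user": {"email_addr": proto["authenticationInfo"]["principalEmail"]}},
        "api": {"operation": proto["methodName"], "service": {"name": proto["serviceName"]}},
        "privileges": [p["value"] for p in params if p.get("name") == "PRIVILEGE_NAME"],
        "metadata": {"uid": raw["insertId"], "log_name": raw["logName"]},
        # Source fields with no OCSF home are preserved under "unmapped".
        "unmapped": {"protoPayload": {"@type": proto["@type"]}},
    }


# Trimmed copy of the sample log above.
raw = {
    "protoPayload": {
        "@type": "type.googleapis.com/google.cloud.audit.AuditLog",
        "authenticationInfo": {"principalEmail": "darth-vader@example.net"},
        "serviceName": "admin.googleapis.com",
        "methodName": "google.admin.AdminService.addPrivilege",
        "metadata": {"event": [{"parameter": [
            {"name": "ROLE_ID", "value": "10001100000000000"},
            {"name": "PRIVILEGE_NAME", "value": "Calendar;CALENDAR_SETTINGS_READ"},
        ]}]},
    },
    "insertId": "abc1abc1ab0a",
    "timestamp": "2021-09-12T09:19:08.647052Z",
    "severity": "NOTICE",
    "logName": "organizations/100000000000/logs/cloudaudit.googleapis.com%2Factivity",
}
event = remap_add_privilege(raw)
print(event["time"])  # 1631438348647
print(event["type_uid"], event["privileges"])  # 300501 ['Calendar;CALENDAR_SETTINGS_READ']
```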

Observability Pipelines handles automatic remapping and outputs the Google Workspace Admin Audit addPrivilege log into OCSF’s User Access Management (3005) schema shown below:

```json
{
  "activity_id": 1,
  "activity_name": "Assign Privileges",
  "actor": {
    "user": {
      "email_addr": "darth-vader@example.net",
      "name": "Darth Vader"
    }
  },
  "api": {
    "operation": "google.admin.AdminService.addPrivilege",
    "service": { "name": "admin.googleapis.com" }
  },
  "category_name": "Identity & Access Management",
  "category_uid": 3,
  "class_name": "User Access Management",
  "class_uid": 3005,
  "cloud": { "provider": "Google" },
  "metadata": {
    "log_name": "organizations/100000000000/logs/cloudaudit.googleapis.com%2Factivity",
    "product": { "name": "GSuite", "vendor_name": "Google" },
    "profiles": ["cloud", "host"],
    "uid": "abc1abc1ab0a",
    "version": "1.1.0"
  },
  "observables": [
    { "name": "actor.user.email_addr", "type": "Email Address", "type_id": 5, "value": "darth-vader@example.net" },
    { "name": "actor.user.name", "type": "User Name", "type_id": 4, "value": "Darth Vader" }
  ],
  "privileges": ["Calendar;CALENDAR_SETTINGS_READ"],
  "resource": {
    "name": "organizations/100000000000/delegatedAdminSettings",
    "type": "audited_resource",
    "data": "{\"method\":\"google.admin.AdminService.addPrivilege\",\"service\":\"admin.googleapis.com\"}"
  },
  "severity_id": 2,
  "severity": "Low",
  "start_time": 1631438349281,
  "time": 1631438348647,
  "type_name": "User Access Management: Assign Privileges",
  "type_uid": 300501,
  "user": { "name": "Darth Vader" },
  "unmapped": {
    "protoPayload": {
      "@type": "type.googleapis.com/google.cloud.audit.AuditLog",
      "metadata": {
        "@type": "type.googleapis.com/ccc_hosted_reporting.ActivityProto",
        "event": [
          { "eventType": "DELEGATED_ADMIN_SETTINGS", "eventName": "ADD_PRIVILEGE", "eventId": "7000b100" }
        ],
        "activityId": { "uniqQualifier": "-10000000000000", "timeUsec": "1000000000000" }
      },
      "requestMetadata": {
        "callerIp": "10.10.10.10",
        "destinationAttributes": {},
        "requestAttributes": {}
      }
    }
  }
}
```

Route logs to security vendors in OCSF format with Observability Pipelines

Datadog Observability Pipelines can transform your logs into OCSF format to support your taxonomy requirements and security strategies, with no vendor lock-in, enhanced analytics capabilities, and improved threat detection. On-stream processing for supported popular log sources lets you take advantage of OCSF without spending costly time normalizing data. This feature is generally available to Observability Pipelines users.

Observability Pipelines also supports Grok parsing with over 150 preconfigured parsing rules and custom parsing capabilities, GeoIP enrichment, JSON parsing, and more. You can also start with Datadog Cloud SIEM, built on Datadog Log Management, to strengthen your organization's threat detection and investigation in dynamic, cloud-scale environments.

Datadog Observability Pipelines can be used without any subscription to Datadog Log Management or Datadog Cloud SIEM. For more information, visit our documentation. If you’re new to Datadog, you can sign up for a 14-day free trial.