Kaushik Akula

JC Mackin

Modern organizations face a challenge in handling the massive volumes of log data—often scaling to terabytes—that they generate across their environments every day. Teams rely on this data to help them identify, diagnose, and resolve issues more quickly, but how and where should they store logs to best suit this purpose? For many organizations, the immediate answer is to consolidate all logs remotely in higher-cost indexed storage to ready them for searching and analysis.

But not all logs are created equal. Routing every log to an indexed solution can dilute the overall value of the data stored there and cause teams to exceed the budget for that storage. To stay within budget, organizations need a practical way to be more selective, at scale, about the logs they send to premium storage tiers.

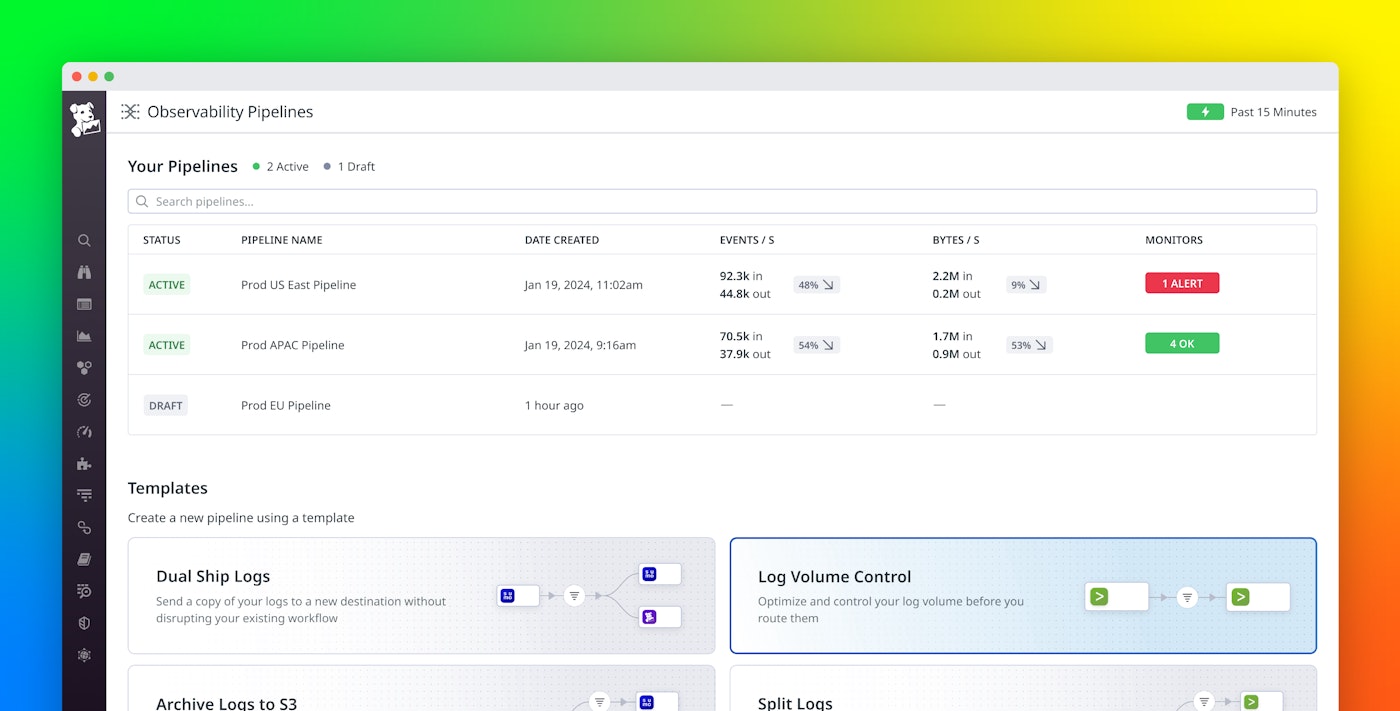

Datadog Observability Pipelines helps you focus your spend on more valuable log data by letting you systematically pre-process your logs before routing them to higher-cost destinations. This allows your teams to control log volumes in a deliberate and budget-compliant way and to optimize the value of the log data you earmark for indexed storage—including archiving, SIEM, or log management solutions.

In this blog post, we'll first look at how pre-processing your log volumes with Observability Pipelines helps you improve the value of your indexed log data and remain within budget. Then, we'll walk through how Datadog Observability Pipelines provides granular control over your logs.

Pre-process log data to optimize its value and stay within budget

Indexed log storage services, including log management and SIEM solutions, help organizations extract insights from log data—typically at a tiered pricing rate proportional to data volumes. By allowing you to pre-process the logs you send to these services, Observability Pipelines helps ensure you can find value in your indexed logs while also remaining within your planned budget.

Focus your spend on higher-value logs

Raw logs are noisy, and only some logs are useful for providing the kinds of signals that help your teams detect and resolve issues quickly. For example, compared to ERROR logs, INFO logs typically contain fewer insights into the root causes of system outages. Meanwhile, log data originating from development and staging environments can also be less useful for engineers who are troubleshooting production incidents. Besides these less-useful log types, specific fields within logs, such as empty attributes, hold little value for troubleshooting and analysis. And on top of all this, redundant copies of individual logs are common.

With Observability Pipelines, you can be selective about which logs to keep and which to drop. By applying pre-processing functions such as filtering, sampling, editing, and deduplication to your source log data, you can amplify the signal within the noise before you ship logs to higher-cost storage, so that the budget you allocate to that storage goes toward the logs that matter most.

Implement budget safeguards through logging quotas

For organizations paying for premium log storage solutions, unexpected events like system crashes that cause logging spikes can compromise cost predictability. It is essential to quickly resolve these incidents, or at least to mute logs as soon as the issue is discovered and diagnosed. Stopping every log surge quickly can be impractical, however, when teams with different requirements and thresholds are responsible for the various applications, services, and systems whose logs are being collected.

With Observability Pipelines, you can define daily quotas for all your logging sources. For example, you can define a daily limit of 50 GB for logs originating from a specific team, environment, or application. By applying this log volume governance to any or all teams, you can intelligently prevent bursts in logging from causing budget overruns.

The following image shows such a pipeline, which imposes a daily volume limit of 50 GB for INFO logs originating from all services owned by the na-web-ads team:

Easily configure pipelines to control log volumes

Observability Pipelines makes it easy to configure rules (called processors) to apply to your source logs in a pipeline. Together, these rules let you define, at scale, what data to drop and what to send along to premium storage. Observability Pipelines offers five processor types, which use filter queries to find matches within your source log data.

Filter processors allow you to select logs for forwarding that match specified criteria. For example, you can use a filter processor to select only security logs to forward to a downstream SIEM.
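To make the idea concrete, here is a minimal Python sketch of what a filter step does conceptually. It is not the Observability Pipelines API or its query syntax: the log shape (a flat dict), the `source` attribute, and the function names are all assumptions made for illustration.

```python
from typing import Callable, Iterable, Iterator

Log = dict  # hypothetical log shape: a flat dict of attributes


def filter_logs(logs: Iterable[Log], matches: Callable[[Log], bool]) -> Iterator[Log]:
    """Yield only the logs that satisfy the predicate; all others are dropped."""
    return (log for log in logs if matches(log))


def is_security_log(log: Log) -> bool:
    # Assumption: security logs carry source == "security".
    return log.get("source") == "security"


logs = [
    {"source": "security", "message": "failed login"},
    {"source": "nginx", "message": "GET /health 200"},
    {"source": "security", "message": "role changed"},
]

# Only the two security logs would be forwarded to the downstream SIEM.
to_siem = list(filter_logs(logs, is_security_log))
```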

Sample processors allow you to capture only a desired percentage of logs that you know to be repetitive, noisy, or less valuable. For example, you can configure a sample processor to select a 20 percent sample of noisy OK-status logs from a VPC service to be sent to a higher-cost archive.
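As a rough illustration of percentage-based sampling, the sketch below keeps each log with a fixed probability. The function name, the log fields, and the use of a seeded random generator are assumptions for this example and do not reflect how Observability Pipelines implements sampling internally.

```python
import random
from typing import Optional


def sample_logs(logs: list, rate: float, rng: Optional[random.Random] = None) -> list:
    """Keep each log independently with probability `rate` (0.0 to 1.0)."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0.0 and 1.0")
    rng = rng or random.Random()
    # random() returns a float in [0.0, 1.0), so rate=1.0 keeps everything
    # and rate=0.0 keeps nothing.
    return [log for log in logs if rng.random() < rate]


# Hypothetical noisy OK-status logs from a VPC service.
ok_logs = [{"service": "vpc", "status": "OK", "id": i} for i in range(1000)]

# Keep roughly 20 percent; a fixed seed makes the run repeatable.
kept = sample_logs(ok_logs, rate=0.2, rng=random.Random(42))
```

Because each log is kept independently, the retained share is only approximately 20 percent for any given batch; it converges on the configured rate as volume grows.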

Quota processors enforce hard daily limits on logs that match a filter query. Quotas can be defined for either a volume of log data or a number of logged events. For example, you can use a quota processor to route to higher-cost storage only the first 100 GB of logged events per day that originate from a service named mongo-logs.
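The following Python sketch shows one way a daily quota could work in principle, supporting either a byte limit or an event limit. The `DailyQuota` class, its fields, and the choice to measure size as the length of the JSON-encoded log are assumptions for illustration, not a description of the product's implementation.

```python
import json
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DailyQuota:
    limit: int                  # maximum bytes or events allowed per day
    unit: str = "bytes"         # "bytes" or "events"
    day: Optional[date] = None  # the day the current counter belongs to
    used: int = 0               # amount consumed so far on that day

    def allow(self, log: dict, today: date) -> bool:
        """Return True if the log fits in today's remaining quota."""
        if today != self.day:
            # A new day has started, so reset the counter.
            self.day, self.used = today, 0
        # Assumption: log size is the length of its JSON encoding.
        cost = 1 if self.unit == "events" else len(json.dumps(log).encode("utf-8"))
        if self.used + cost > self.limit:
            return False  # would exceed the quota: drop the log
        self.used += cost
        return True


quota = DailyQuota(limit=2, unit="events")
day_one = date(2024, 1, 1)

# With a limit of two events, the third log on the same day is rejected.
decisions = [quota.allow({"service": "mongo-logs"}, day_one) for _ in range(3)]
```

In this sketch a log that would push the total past the limit is dropped, but a smaller log arriving later the same day can still fit under a byte-based limit. A stricter variant could reject everything once the limit is first reached.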

Dedupe processors allow you to drop duplicate copies of logs caused by retries from an unreliable network connection or by different non-critical services reporting on the same event. The following example shows a dedupe processor that drops duplicate copies of messages with matching information in the message, metadata.timestamp, and metadata.service fields.
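In code terms, deduplicating on a set of fields amounts to building a key from those fields and dropping any log whose key has already been seen. The Python sketch below illustrates this using the three field paths named above; the helper names, the sample logs, and the unbounded in-memory `seen` set are assumptions, and a production system would likely bound that cache.

```python
from typing import Any, Iterable, Iterator, List


def get_path(log: dict, path: str) -> Any:
    """Resolve a dotted path such as 'metadata.service'; None if it is missing."""
    value: Any = log
    for key in path.split("."):
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def dedupe(logs: Iterable[dict], fields: List[str]) -> Iterator[dict]:
    """Yield the first log for each distinct combination of the given fields."""
    seen = set()
    for log in logs:
        # repr() keeps the key hashable even if a field holds a list or dict.
        key = tuple(repr(get_path(log, field)) for field in fields)
        if key in seen:
            continue  # duplicate: drop it
        seen.add(key)
        yield log


logs = [
    {"message": "timeout", "host": "a",
     "metadata": {"timestamp": "2024-01-01T00:00:00Z", "service": "checkout"}},
    # Same message, timestamp, and service as above, so this is a duplicate.
    {"message": "timeout", "host": "b",
     "metadata": {"timestamp": "2024-01-01T00:00:00Z", "service": "checkout"}},
    {"message": "timeout", "host": "a",
     "metadata": {"timestamp": "2024-01-01T00:00:05Z", "service": "checkout"}},
]

unique = list(dedupe(logs, ["message", "metadata.timestamp", "metadata.service"]))
```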

Edit fields processors allow you to safely reduce the size of your logs by removing empty, verbose, or low-value log attributes and data fields.
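As a small illustration of the kind of trimming described here, the sketch below removes empty attributes and a caller-supplied set of low-value fields from a single log. The function names and the example field names are hypothetical, and the definition of "empty" (None, empty string, empty list, empty dict) is an assumption.

```python
from typing import Any, Collection


def is_empty(value: Any) -> bool:
    """Treat None and empty strings, lists, and dicts as empty; keep 0 and False."""
    return value is None or value == "" or value == [] or value == {}


def remove_fields(log: dict, drop: Collection[str] = ()) -> dict:
    """Return a copy of the log without empty attributes or fields named in `drop`."""
    return {
        key: value
        for key, value in log.items()
        if key not in drop and not is_empty(value)
    }


log = {
    "message": "payment accepted",
    "debug_trace": "frame 1 ... frame 200",  # verbose, low-value field
    "user_agent": "",                        # empty attribute
    "tags": [],                              # empty attribute
    "retries": 0,                            # zero is a real value, so it is kept
}

slim = remove_fields(log, drop={"debug_trace"})
```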

Note that you can combine as many processors as you need within a pipeline. For example, you can combine a filter processor that excludes all INFO logs from ads-service with a quota processor that drops logs from any service after a daily limit of 10 million events is reached. Combining processors in this way makes it easier to remove the different types of noisy and less-valuable data in your logs before you route them to their destination.
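Chaining processors can be pictured as passing each log through a sequence of steps, any of which may drop it. The Python sketch below models the filter-plus-quota combination under simplifying assumptions: every name in it is hypothetical, the quota counts events without a daily reset, and the limit is set to 2 rather than 10 million so the effect is visible.

```python
from typing import Callable, Iterable, Iterator, List, Optional

# A processor returns the (possibly modified) log, or None to drop it.
Processor = Callable[[dict], Optional[dict]]


def drop_info_from_ads(log: dict) -> Optional[dict]:
    """Drop INFO logs from ads-service; pass everything else through."""
    if log.get("service") == "ads-service" and log.get("level") == "INFO":
        return None
    return log


def make_event_quota(limit: int) -> Processor:
    """Build a processor that passes the first `limit` logs and drops the rest."""
    count = 0

    def quota(log: dict) -> Optional[dict]:
        nonlocal count
        if count >= limit:
            return None
        count += 1
        return log

    return quota


def run_pipeline(logs: Iterable[dict], processors: List[Processor]) -> Iterator[dict]:
    """Apply the processors in order; a log is emitted only if none drops it."""
    for log in logs:
        current: Optional[dict] = log
        for processor in processors:
            current = processor(current)
            if current is None:
                break
        if current is not None:
            yield current


logs = [
    {"service": "ads-service", "level": "INFO"},
    {"service": "ads-service", "level": "ERROR"},
    {"service": "web", "level": "INFO"},
    {"service": "web", "level": "INFO"},
]

routed = list(run_pipeline(logs, [drop_info_from_ads, make_event_quota(2)]))
```

Order matters in this sketch: placing the filter before the quota means that logs the filter drops never count against the quota.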

Use Observability Pipelines to control log volumes and ensure budget compliance

Not all logs are equally valuable, and shipping all your logs to indexed storage without any pre-processing can lead teams to unintentionally overspend on these services. Datadog Observability Pipelines helps you control log volumes and costs by letting you set quotas, filter unnecessary data, and deduplicate redundant entries in your log data before you route it to higher-cost storage.

See our related blog posts to learn how Observability Pipelines can also help you ship logs to more than one destination, redact sensitive data from your logs, and cost-effectively prepare historical logs for a migration to Datadog.

If you’re already a customer, you can get started by using our Log Volume Control template for Observability Pipelines. Or, if you’re not yet a Datadog customer, you can sign up for a 14-day free trial.