Kaushik Akula

JC Mackin

If you plan to migrate to a new log management vendor, you need a strategy that lets you maintain visibility into your environment before, during, and after the transition. The key to achieving this kind of seamless changeover is to ensure that your historical logs are available to you in the new log management platform on day one. Organizations, however, face substantial hurdles in preparing historical log data for their next platform because of the perceived cost and complexity of performing this step.

Datadog Observability Pipelines provides a smooth, simple, and affordable migration path to Datadog Log Management by enabling you to archive a copy of your logs in low-cost storage while continuing to send logs to your existing log solution. This allows you to prepare historical logs for later use in the Datadog platform and still enjoy uninterrupted observability of your environment.

Prepare for a smooth migration to Datadog by archiving your logs

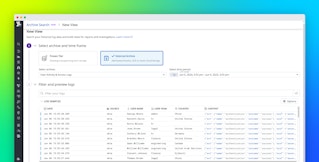

Observability Pipelines helps you cost-effectively and reliably transition to Datadog Log Management through an easy-to-configure Archive Logs to S3 template. You can use this template to pre-process your logs for your current vendor while, in the same pipeline, separately pre-processing logs for your private Amazon S3 bucket. This way, you can easily maintain historical logs without disrupting your existing workflows.

As an illustration, the following pipeline was created from the Archive Logs to S3 template. It sends logs to two destinations: Splunk (representing your current vendor) and a Datadog archive (in your own private storage). Logs sent to Splunk are first pre-processed by filtering the data to drop all info logs, by sampling only 20 percent of messages with an ok status, and by removing duplicate logs. A second, more complete (non-sampled) set of logs is sent to a Datadog archive in your own low-cost storage.
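To make the dual-destination logic above concrete, here is a minimal Python sketch of the routing behavior. It is only an illustration of the concept, not Observability Pipelines configuration or any Datadog API. The `route` and `fingerprint` helpers are hypothetical, and the sketch assumes each log is a dictionary with a `status` field and that the archive branch receives every log unmodified.

```python
import hashlib
import json
import random
from typing import Iterable, List, Optional, Set, Tuple


def fingerprint(log: dict) -> str:
    """Return a stable hash of a log's content, used to detect duplicates."""
    payload = json.dumps(log, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def route(
    logs: Iterable[dict],
    sample_rate: float = 0.2,
    seed: Optional[int] = None,
) -> Tuple[List[dict], List[dict]]:
    """Split one log stream into a reduced vendor stream and a full archive stream.

    Hypothetical helper: the `status` field name is an assumption.
    """
    rng = random.Random(seed)
    seen: Set[str] = set()
    to_vendor: List[dict] = []
    to_archive: List[dict] = []

    for log in logs:
        # Archive branch: keep every log, with no filtering or sampling.
        to_archive.append(log)

        # Vendor branch, step 1: drop all info logs.
        if log.get("status") == "info":
            continue

        # Vendor branch, step 2: keep roughly `sample_rate` of ok logs.
        if log.get("status") == "ok" and rng.random() >= sample_rate:
            continue

        # Vendor branch, step 3: drop logs already forwarded (exact duplicates).
        digest = fingerprint(log)
        if digest in seen:
            continue
        seen.add(digest)
        to_vendor.append(log)

    return to_vendor, to_archive
```

Calling `route(logs)` returns two lists: the reduced set bound for the current vendor and the complete set bound for the archive. Because sampling is random, the exact contents of the vendor list vary between runs unless a `seed` is supplied.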

When you perform your migration, you can rehydrate the archived logs into Datadog for your search, analysis, or security needs.

Make a smooth transition to Datadog Log Management with Observability Pipelines

Before migrating to a new log management solution, you need to prepare historical logs so that they will immediately be available to you after the transition. Observability Pipelines offers a reliable, easy, and cost-efficient way to perform this critical preparatory step for your migration to Datadog Log Management. With Observability Pipelines, it’s easy to send a copy of your logs to your own low-cost data store without disrupting your existing workflow. Later, you can rehydrate these archived logs in Datadog to ensure that visibility into your environment remains uninterrupted throughout the migration process.

Datadog Observability Pipelines is available for anyone considering a migration to Datadog Log Management—or otherwise needing to take control of the volume and flow of their log data. See our related blog posts to learn how Observability Pipelines can also help you manage your log volumes, ship logs to more than one destination, and redact sensitive data from your logs on-prem.

To get started, you can use our Archive Logs to S3 template for Observability Pipelines. And if you’re not yet a Datadog customer, be sure to sign up for a 14-day free trial.