Zara Boddula

Rising log volumes are making it harder than ever for security and SRE teams to balance visibility with cost. Every network, CDN, and security layer generates continuous streams of telemetry, but deciding what to parse, retain, or drop often requires manual configuration, specialized knowledge, and extensive tuning.

For many organizations, this challenge is amplified by ingestion-based pricing models in downstream tools such as security information and event management systems (SIEMs) and data lakes. Logs from high-volume sources such as content delivery networks (CDNs), firewalls, AWS VPC Flow Logs, or Zscaler can quickly become prohibitively expensive to store, even though much of that data may not be needed for investigations or compliance. As a result, teams often face a tradeoff between expanding licenses and dropping log sources entirely, risking either cost overruns or data gaps.

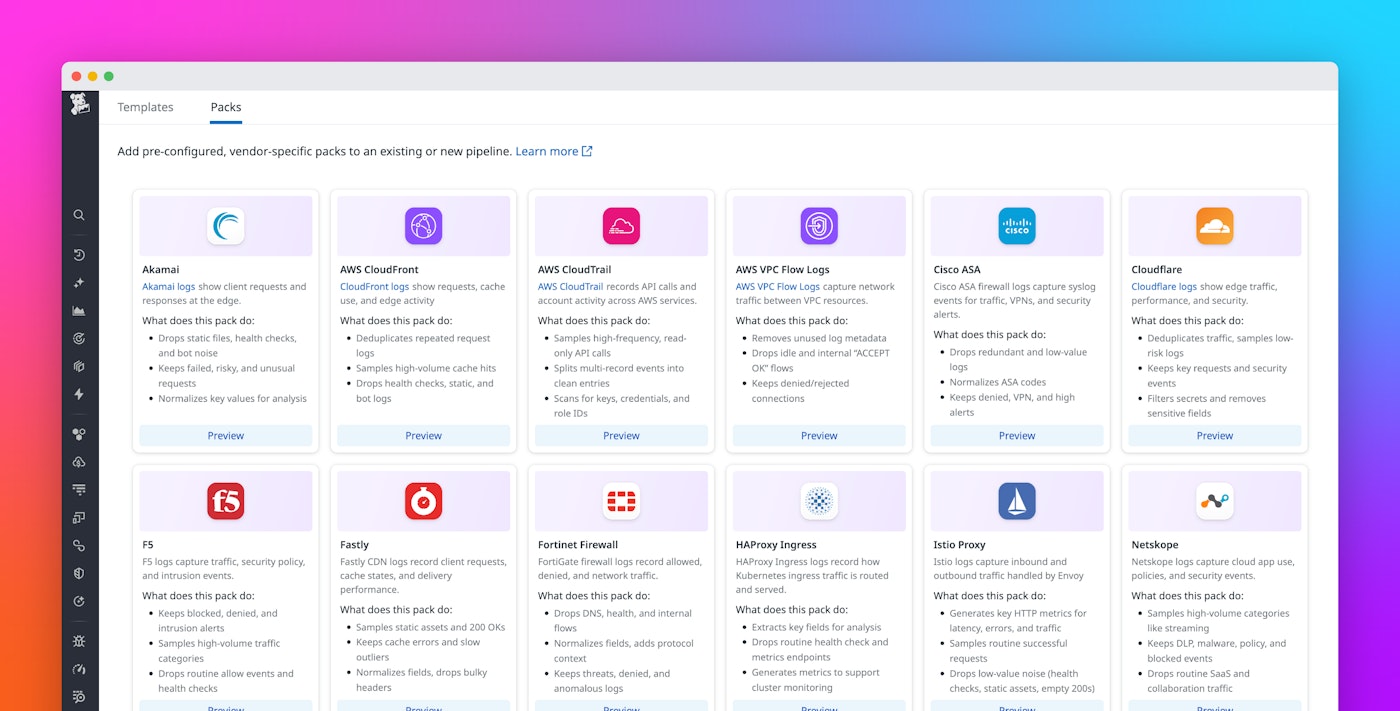

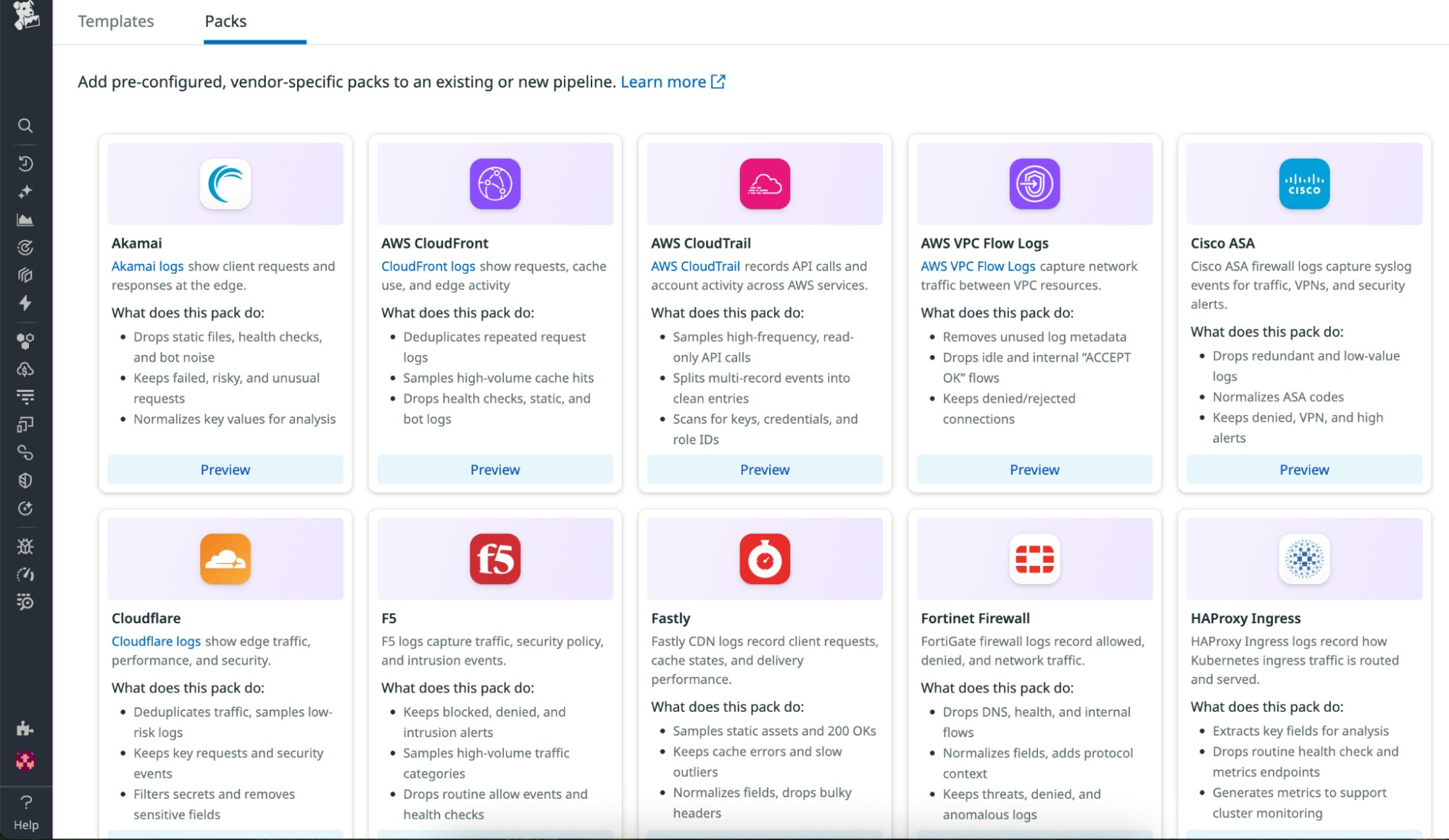

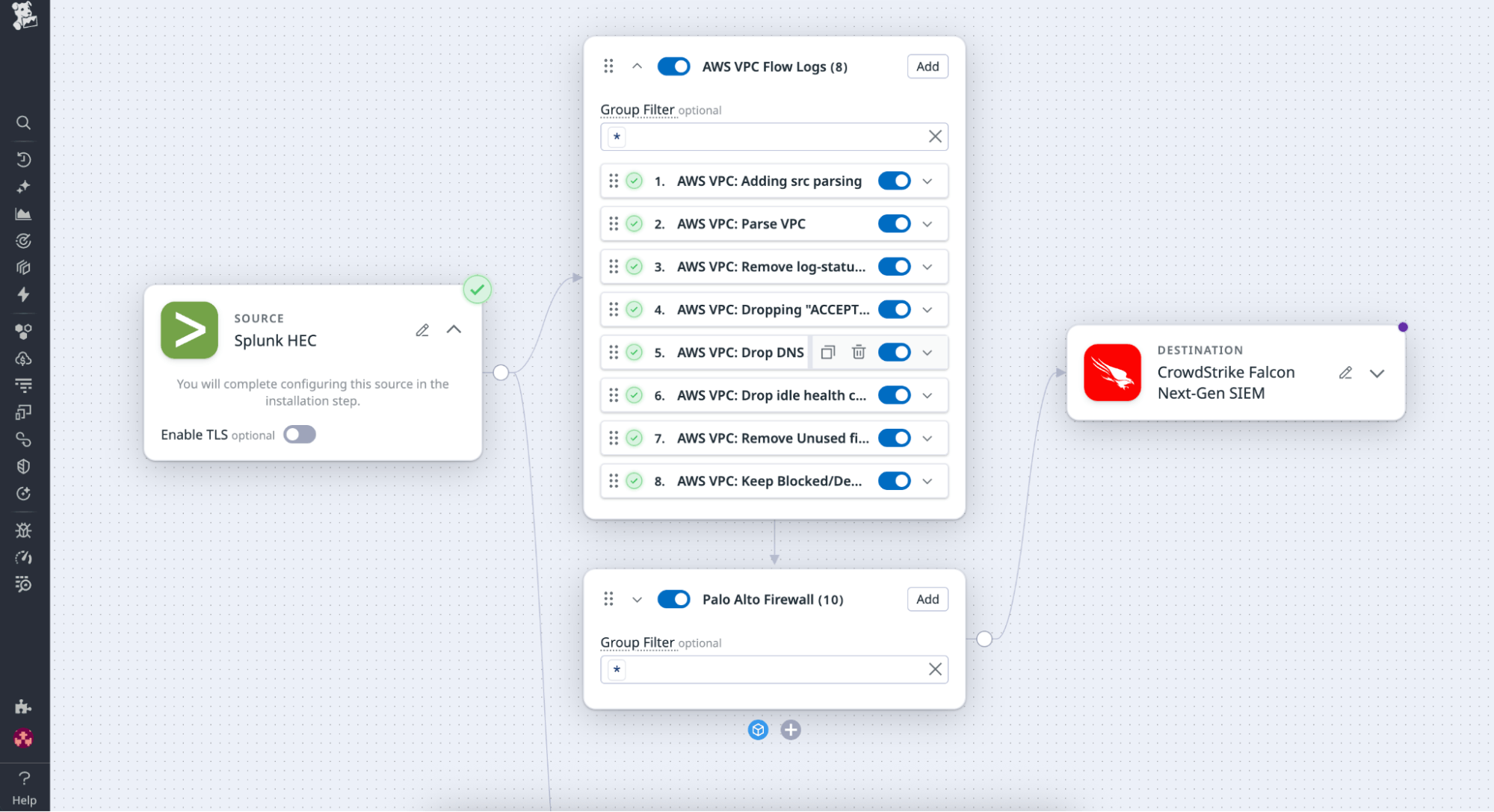

Datadog Packs, now available in Observability Pipelines, address this issue by providing predefined, ready-to-use configurations that bundle processors, filters, and rules for common log sources such as AWS VPC Flow Logs, Cloudflare, Palo Alto Firewall, Windows Event Logs, and Zscaler.

With Packs, teams can apply Datadog-recommended best practices before routing logs to any vendor. This helps organizations reduce noise, control costs, and standardize how data flows across their environments.

In this post, we’ll show how Packs help teams:

- Quickly deploy rules for common log sources

- Manage noisy firewall and Zscaler logs before they reach your SIEM

- Transform logs with industry best practices

- Customize and extend Packs to fit organizational needs

Quickly deploy rules for common log sources

When teams build pipelines from scratch, their first challenge is often to prove value quickly. They need to reduce redundant logs, enforce compliance, and optimize routing to downstream systems. However, defining filters, processors, and transformations for every source can take weeks of manual work.

This time-intensive process requires specialized knowledge. Many teams lack deep expertise in every log format they handle, and each vendor structures logs differently. To make things more complex, log formats often change over time, forcing teams to revisit and adjust pipelines regularly to keep data flowing correctly.

Packs take care of this complexity by bundling Datadog’s recommended parsing, filtering, and enrichment logic for a specific log source, so teams can skip the trial-and-error setup and start optimizing data flow immediately.

For example:

- AWS VPC Flow Logs Pack: Removes unused metadata, drops idle or internal “ACCEPT OK” flows, and keeps denied or rejected connections for network-security visibility.

- Windows Event Logs Pack: Converts verbose XML into JSON, drops empty or redundant fields, and retains only security-relevant authentication and system events.

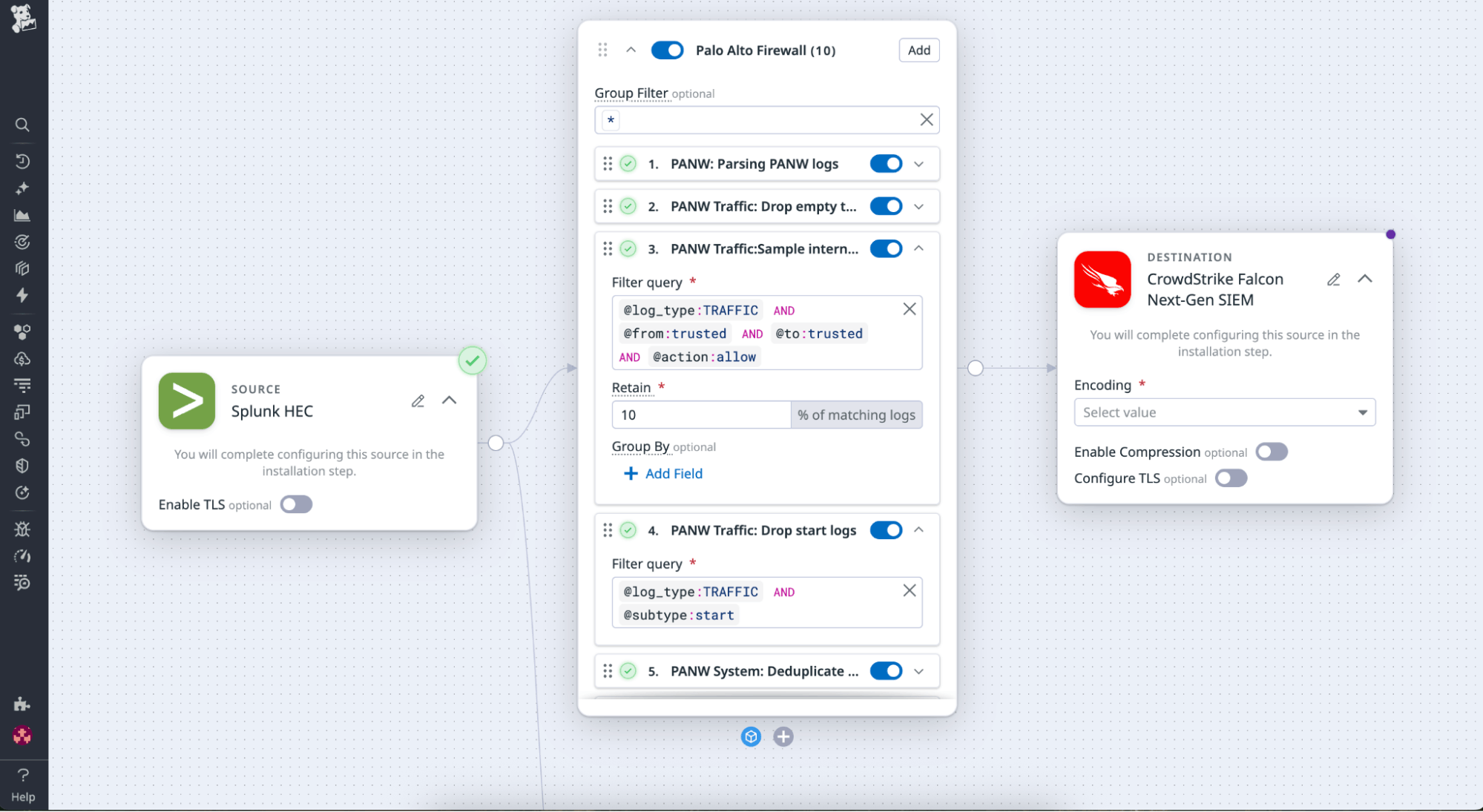

- Palo Alto Firewall Pack: Keeps detection and enforcement logs, drops redundant or benign traffic, and normalizes traffic, threat, and system fields for analysis in downstream SIEMs.

- Cloudflare Pack: Deduplicates traffic, samples low-risk logs, retains key requests and security events, and filters secrets or other sensitive fields.

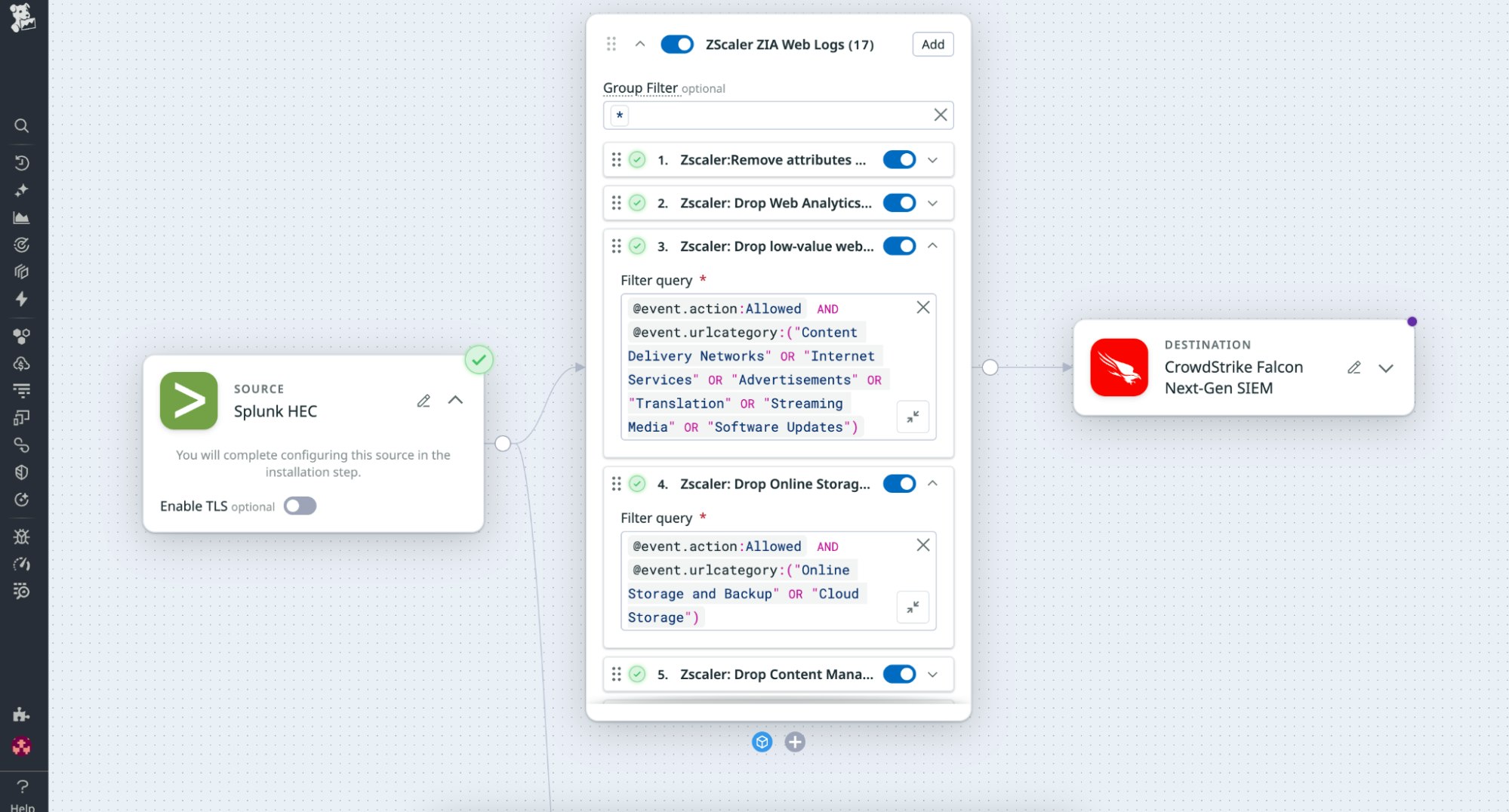

- Zscaler ZIA Web Logs Pack: Normalizes fields, enriches logs with threat context, drops routine and low-signal traffic, and retains risky, blocked, or unknown web activity.

- Netskope Pack: Samples high-volume categories such as streaming; keeps DLP, malware, policy, and blocked events; and drops routine SaaS and collaboration traffic.
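Two of the techniques in this list, deduplication (Cloudflare) and sampling of high-volume categories (Netskope), can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how the Packs are actually implemented: the field names (`request_id`, `client_ip`, `path`, `status`, `category`), the low-risk category set, and the 1-in-10 sampling rate are all hypothetical.

```python
import hashlib
from typing import Iterable, Iterator

# Hypothetical categories treated as low-risk and therefore eligible for sampling.
LOW_RISK_CATEGORIES = {"streaming", "static_asset"}
SAMPLE_ONE_IN = 10  # hypothetical rate: keep roughly 1 of every 10 low-risk events


def dedup_key(event: dict) -> tuple:
    """Fields that identify a repeated event (a hypothetical choice of fields)."""
    return (event.get("client_ip"), event.get("path"), event.get("status"))


def keep_sampled(event: dict) -> bool:
    """Deterministic sampling: hash a stable ID so an event always gets the same decision."""
    digest = hashlib.sha256(str(event["request_id"]).encode()).digest()
    return int.from_bytes(digest[:8], "big") % SAMPLE_ONE_IN == 0


def dedupe_and_sample(events: Iterable[dict]) -> Iterator[dict]:
    seen: set[tuple] = set()
    for event in events:
        key = dedup_key(event)
        if key in seen:
            continue  # repeat of an event already processed: drop it
        seen.add(key)
        if event.get("category") in LOW_RISK_CATEGORIES and not keep_sampled(event):
            continue  # low-risk event not selected by the sampler
        yield event  # security events and sampled low-risk events pass through
```

Hashing a stable ID instead of drawing a random number makes the sampling decision reproducible, so a replayed event is kept or dropped consistently. The `seen` set grows without bound in this sketch; a production deduplicator would typically limit it with a time window or a fixed cache size.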

From Observability Pipelines, teams can browse a catalog of Packs for these and other high-volume log sources. Each Pack is configured with Datadog’s best-practice logic to help organizations get started quickly and achieve consistent results.

Teams can deploy a Pack in just a few clicks to reduce noise and quickly demonstrate measurable cost savings.

Manage noisy firewall and Zscaler logs before they reach your SIEM

Firewall and proxy logs often account for a large share of SIEM or data-lake ingestion. A single appliance might generate millions of events per hour, most of them routine “allow” or “accept” messages that rarely change over time. Yet every event counts toward license volume.

Packs manage that volume before it leaves your infrastructure.
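As a rough illustration of how much volume is at stake, the sketch below converts an event rate into daily ingestion before and after routine events are dropped. The inputs (2 million events per hour, 500 bytes per event, 80% routine allow traffic) are hypothetical round numbers, not measurements from any real appliance.

```python
def daily_ingest_gb(events_per_hour: float, avg_event_bytes: float) -> float:
    """Daily ingestion in gigabytes (10**9 bytes)."""
    return events_per_hour * 24 * avg_event_bytes / 1e9


# Hypothetical inputs for a single busy firewall appliance.
EVENTS_PER_HOUR = 2_000_000
AVG_EVENT_BYTES = 500
ROUTINE_SHARE = 0.80  # assumed fraction of routine "allow"/"accept" events

before = daily_ingest_gb(EVENTS_PER_HOUR, AVG_EVENT_BYTES)
after = daily_ingest_gb(EVENTS_PER_HOUR * (1 - ROUTINE_SHARE), AVG_EVENT_BYTES)
# prints: before: 24.0 GB/day, after dropping routine events: 4.8 GB/day
print(f"before: {before:.1f} GB/day, after dropping routine events: {after:.1f} GB/day")
```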

The Palo Alto Firewall Pack automatically drops repetitive connection events while retaining high-signal logs such as denied sessions, threats, and system anomalies. It also parses and standardizes key fields, such as source_ip, destination_ip, and action, to keep them consistent across downstream tools.
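A minimal sketch of this drop-and-normalize step, assuming firewall events have already been parsed into dictionaries. PAN-OS does use log types such as TRAFFIC, THREAT, and SYSTEM, but the raw field names here (`src`, `dst`, `act`, `type`) and the single rule of dropping allowed TRAFFIC events are hypothetical simplifications, not the Pack's actual logic.

```python
from typing import Optional

# Hypothetical mapping from raw firewall field names to normalized names.
FIELD_MAP = {"src": "source_ip", "dst": "destination_ip", "act": "action", "type": "log_type"}


def normalize(raw: dict) -> dict:
    """Rename known fields and leave any other fields untouched."""
    return {FIELD_MAP.get(key, key): value for key, value in raw.items()}


def process_firewall_event(raw: dict) -> Optional[dict]:
    """Return the normalized event, or None if it should be dropped."""
    event = normalize(raw)
    log_type = str(event.get("log_type", "")).upper()
    action = str(event.get("action", "")).lower()
    if log_type == "TRAFFIC" and action == "allow":
        return None  # routine allowed connection: drop before it reaches the SIEM
    return event  # denied sessions, threats, and system events are retained
```

Note that a THREAT event is kept even when its action is "allow", because the drop rule only matches routine TRAFFIC events.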

For organizations using Zscaler Internet Access (ZIA), the Zscaler Web Logs Pack applies the same logic. It reduces ingestion by filtering out repetitive allowed requests and routine browsing activity while retaining blocked, suspicious, and unknown connections. The Pack also normalizes fields such as user_id, url, and policy_action and enriches retained events with threat context, so investigations remain fast and accurate without adding cost.
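The same filter, normalize, and enrich sequence for web proxy events can be sketched as follows. This is an illustration under assumptions, not the ZIA Pack's real configuration: the raw field names (`login`, `eurl`, `action`, `urlcat`), the routine category set, and the in-memory threat-intel table are all hypothetical stand-ins.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical mapping from raw proxy field names to normalized names.
FIELD_MAP = {"login": "user_id", "eurl": "url", "action": "policy_action", "urlcat": "url_category"}

# Hypothetical low-signal categories whose allowed requests are dropped.
ROUTINE_CATEGORIES = {"business", "news"}

# Hypothetical threat-intel lookup keyed by hostname.
THREAT_INTEL = {"malware.example": {"threat_category": "malware", "risk": "high"}}


def hostname(url: str) -> str:
    # URLs may be logged without a scheme; a leading "//" lets urlsplit find the host.
    if "://" not in url:
        url = "//" + url
    return urlsplit(url).hostname or ""


def process_web_event(raw: dict) -> Optional[dict]:
    event = {FIELD_MAP.get(key, key): value for key, value in raw.items()}
    action = str(event.get("policy_action", "")).lower()
    category = str(event.get("url_category", "unknown")).lower()
    if action == "allowed" and category in ROUTINE_CATEGORIES:
        return None  # routine allowed browsing: drop before the SIEM
    intel = THREAT_INTEL.get(hostname(str(event.get("url", ""))))
    if intel:
        event["threat"] = intel  # enrich retained events with threat context
    return event  # blocked, unknown, or otherwise non-routine activity is kept
```

Events with no category default to "unknown" and are therefore retained, matching the intent of keeping unknown web activity visible.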

By filtering and normalizing firewall and web traffic upstream, teams can significantly reduce SIEM and data-lake ingestion volumes while preserving the visibility required for security investigations and compliance.

Transform logs with industry best practices

Security and engineering teams often need to manage large volumes of logs from different sources. For example, a security team may want to reduce AWS VPC Flow Logs before sending them to a SIEM, while an engineering team may need to ingest HAProxy logs into its logging platform. Now, instead of writing custom filters for each source, teams can add the VPC Flow Logs and HAProxy Packs directly into Observability Pipelines.

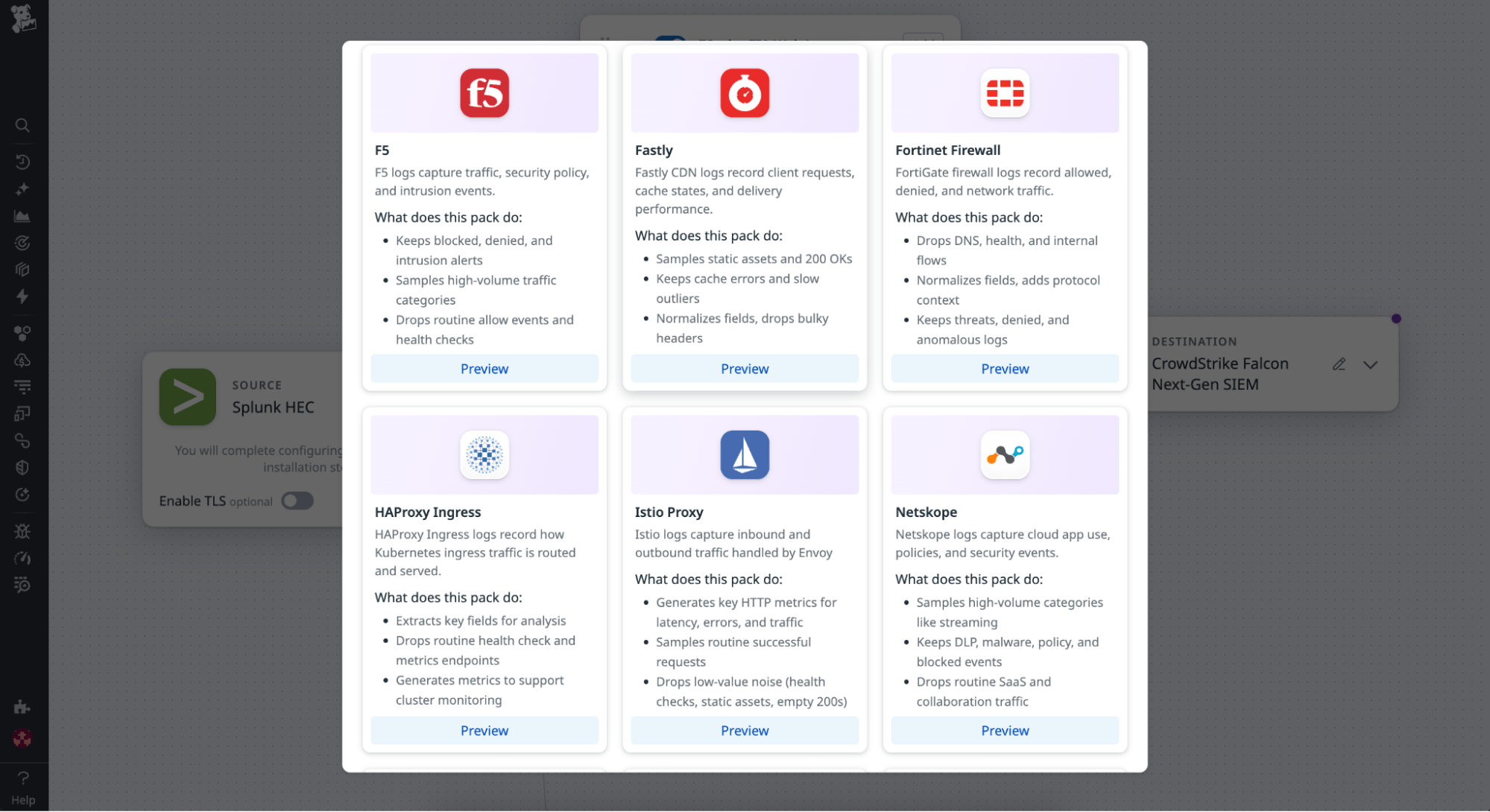

With these Packs, teams can automatically filter out low-value or redundant events while retaining meaningful data for analysis and alerting. When a pipeline is open, the Packs catalog appears under the existing processors. Selecting Add a Pack opens a curated list of prebuilt configurations for sources such as Cisco ASA, Palo Alto Firewall, and Okta.

Selecting the VPC Flow Logs Pack automatically adds processors and filters that drop repetitive “accept” events, normalize key fields, and tag retained logs for routing. From there, teams can preview and edit this logic inline to fit their organization’s priorities.
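The processor chain described here can be sketched as an ordered list of functions, each of which returns a modified record or None to drop it. The parser follows the field order of the AWS VPC Flow Logs default (version 2) format. Everything else is a hypothetical choice for illustration rather than what the Pack ships with: "internal" means both addresses fall in RFC 1918 ranges, `version` stands in for unused metadata, and `siem`/`archive` are made-up routing tags.

```python
from ipaddress import ip_address, ip_network
from typing import Callable, Iterable, Optional

# Field order of the VPC Flow Logs default (version 2) record format.
FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr", "srcport",
          "dstport", "protocol", "packets", "bytes", "start", "end", "action", "log_status"]
RFC1918 = [ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

Processor = Callable[[dict], Optional[dict]]


def parse(line: str) -> dict:
    return dict(zip(FIELDS, line.split()))


def is_internal(addr: str) -> bool:
    ip = ip_address(addr)
    return any(ip in net for net in RFC1918)


def drop_low_value(rec: dict) -> Optional[dict]:
    if rec["log_status"] == "NODATA":
        return None  # idle interface: no traffic during the aggregation interval
    if (rec["action"] == "ACCEPT" and rec["log_status"] == "OK"
            and is_internal(rec["srcaddr"]) and is_internal(rec["dstaddr"])):
        return None  # internal "ACCEPT OK" flow
    return rec


def normalize(rec: dict) -> Optional[dict]:
    renames = {"srcaddr": "source_ip", "dstaddr": "destination_ip"}
    out = {renames.get(k, k): v for k, v in rec.items()}
    out.pop("version", None)  # hypothetical example of unused metadata
    return out


def tag_for_routing(rec: dict) -> Optional[dict]:
    rec["route"] = "siem" if rec["action"] == "REJECT" else "archive"  # hypothetical tags
    return rec


PIPELINE: list[Processor] = [drop_low_value, normalize, tag_for_routing]


def run(lines: Iterable[str], pipeline: list[Processor]) -> list[dict]:
    results = []
    for line in lines:
        rec: Optional[dict] = parse(line)
        for step in pipeline:
            rec = step(rec)
            if rec is None:
                break  # a processor dropped the record
        if rec is not None:
            results.append(rec)
    return results
```

Keeping each step as a separate function mirrors the idea that teams can preview and edit individual processors without touching the rest of the chain.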

After deployment, the Pack begins processing logs immediately. Within minutes, ingestion volume decreases, noise is reduced, and downstream systems remain efficient without losing visibility into critical events.

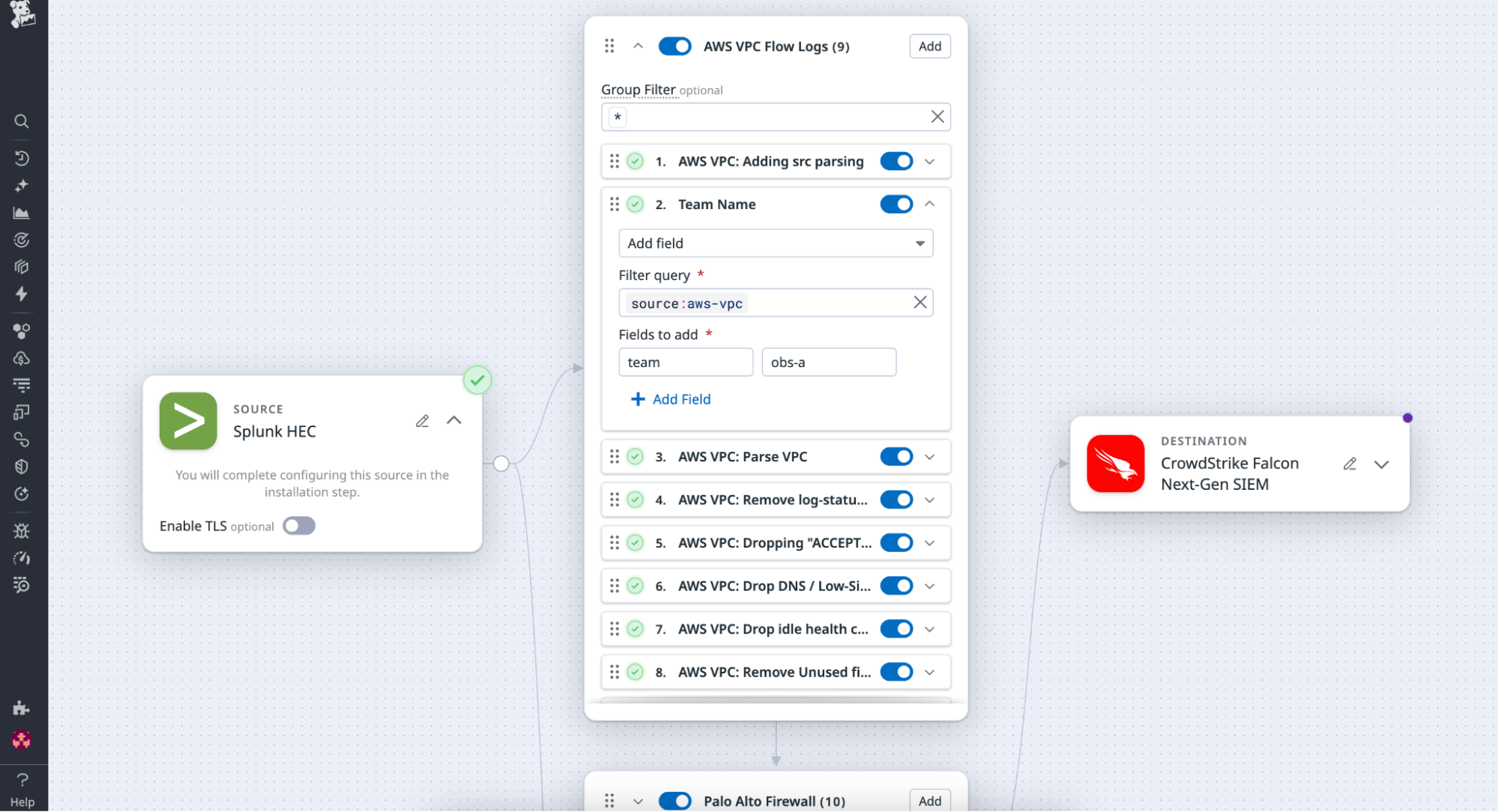

Customize and extend Packs to fit organizational needs

Datadog Packs work out of the box to simplify log management, but they aren’t meant to be one-size-fits-all. Because each team has its own requirements for retention, compliance, and enrichment, Observability Pipelines provides a flexible framework that lets organizations start with Datadog’s recommended configuration and adapt it to their own standards.

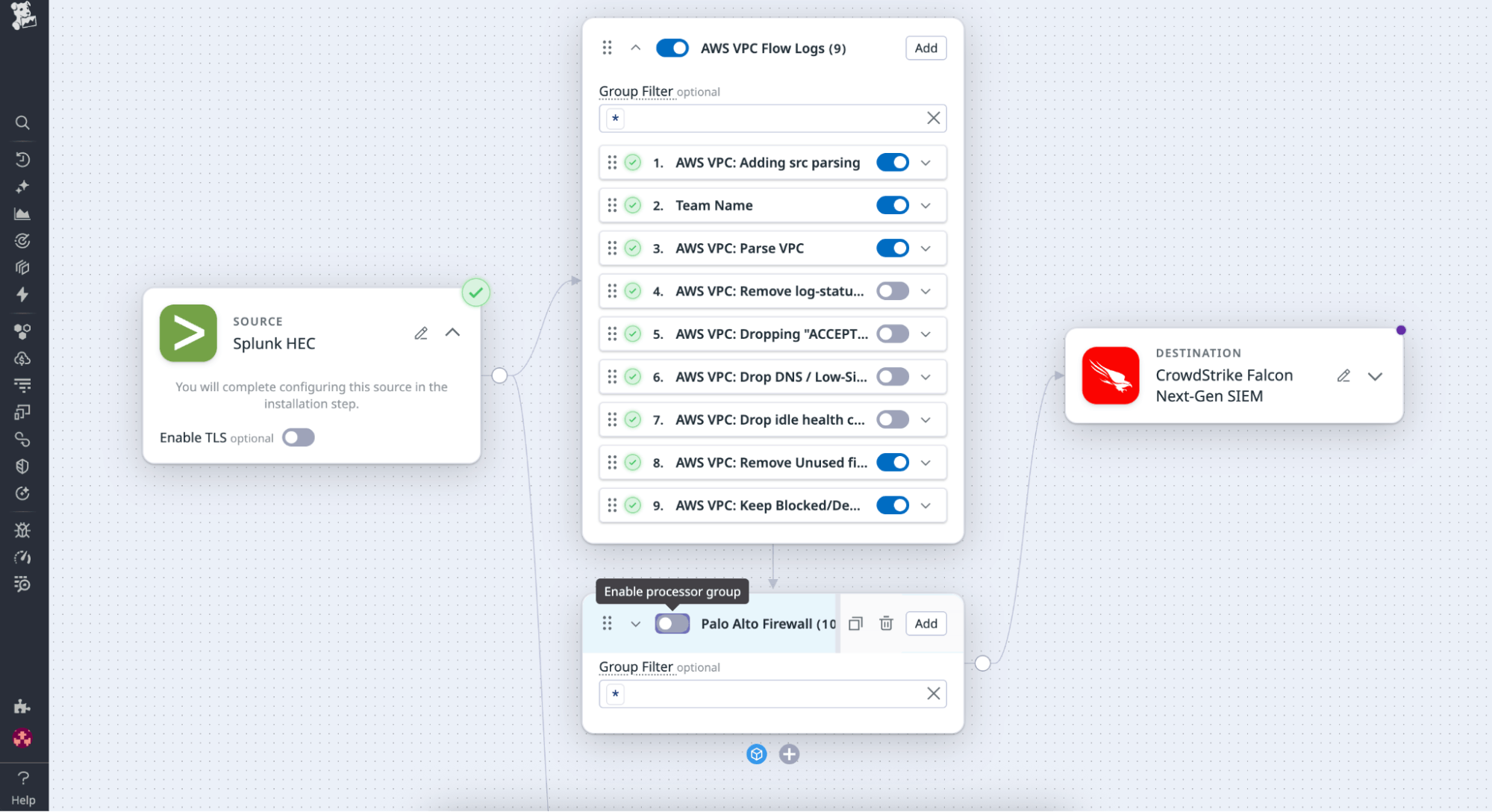

In large enterprises such as banks, insurance providers, and healthcare organizations, the same logs are often consumed by multiple teams for different purposes. Security, SRE, compliance, and data platform groups may all rely on the same network or firewall data, but each team needs to apply its own filters, tags, and routing policies. These organizations also manage complex vendor ecosystems, with data flowing across SIEMs, data lakes, and analytics tools that have their own formats and rules.

With Packs, teams can tailor configurations to meet these diverse needs. For example, organizations can:

- Combine multiple Packs within a single pipeline, such as pairing a VPC Flow Logs Pack with a Palo Alto Firewall Pack to manage network and security data consistently.

- Add enrichment processors to tag logs with metadata such as ownership, environment, or compliance classification.

- Adjust filters or toggle individual rules to capture or drop fields according to internal policies.
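The customizations in this list, rule toggles plus enrichment with ownership, environment, and compliance metadata, can be modeled as data rather than code changes. The sketch below is hypothetical throughout: the `DropRule` type, the rule names, the port-53 rule, and the account-keyed `OWNERSHIP` table are illustrative stand-ins, not Observability Pipelines objects.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class DropRule:
    name: str
    matches: Callable[[dict], bool]
    enabled: bool = True


# Hypothetical rules a Pack might ship with; a team can flip `enabled` per internal policy.
RULES = [
    DropRule("drop_allowed_traffic", lambda e: e.get("action") == "allow"),
    DropRule("drop_dns_noise", lambda e: e.get("dest_port") == 53),
]

# Hypothetical ownership metadata keyed by cloud account ID.
OWNERSHIP = {"111111111111": {"team": "payments", "env": "prod", "compliance": "pci"}}


def set_enabled(rules: list[DropRule], name: str, enabled: bool) -> None:
    for rule in rules:
        if rule.name == name:
            rule.enabled = enabled


def process_event(event: dict, rules: list[DropRule]) -> Optional[dict]:
    for rule in rules:
        if rule.enabled and rule.matches(event):
            return None  # an enabled drop rule matched
    # Enrichment: attach ownership tags (empty if the account is unknown).
    return {**event, "tags": OWNERSHIP.get(event.get("account_id", ""), {})}
```

For instance, a compliance team that must keep allowed traffic for audits could call `set_enabled(RULES, "drop_allowed_traffic", False)` while leaving the DNS rule active.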

These adjustments are made directly in the UI, so teams can experiment and iterate safely without rebuilding pipelines from scratch. Starting with Datadog’s best-practice Packs, teams can quickly build a tailored log-processing framework that fits their organization’s structure and scale.

Get started with Datadog Packs in Observability Pipelines

Packs are available today in Observability Pipelines for popular log sources, including AWS VPC Flow Logs, CloudTrail, Windows Event Logs, Palo Alto Firewall, Cloudflare, Zscaler, and more.

By applying Datadog’s best-practice filtering and enrichment before data reaches your SIEM or data lake, you can reduce costs, eliminate noise, and standardize how logs are processed across your environment.

We’re continuing to expand our library of ready-to-deploy Packs for additional log sources. If there’s a specific Pack you’d like to see next, share your feedback to help us shape what we build.

To start using Packs, visit our documentation for Observability Pipelines for setup guidance. If you’re new to Datadog, start a free 14-day trial.