Eric Metaj

As codebases grow across many different services, it becomes harder to see what test suites actually cover. AI-assisted development and faster release cycles increase the volume of changes landing in repositories, raising the risk that untested code will make it through to production. To maintain high quality standards, teams need clear and scalable visibility across repositories, consistent testing standards, and a way to catch blind spots before they reach users.

Today, we’re introducing Datadog Code Coverage, a new capability that helps platform engineering and development teams uphold testing standards as the speed of development increases. Code Coverage enables teams to identify untested code early, enforce testing standards across repositories, and surface coverage gaps that would otherwise require manual investigation. By integrating coverage data into Datadog’s software delivery workflows, Code Coverage surfaces this information alongside existing metrics, traces, and CI signals.

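In this post, we’ll look at how Code Coverage helps you identify untested code early, enforce consistent testing standards across teams, strengthen test suites with automatically generated tests, and bring these signals together in a single Datadog view of software quality.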

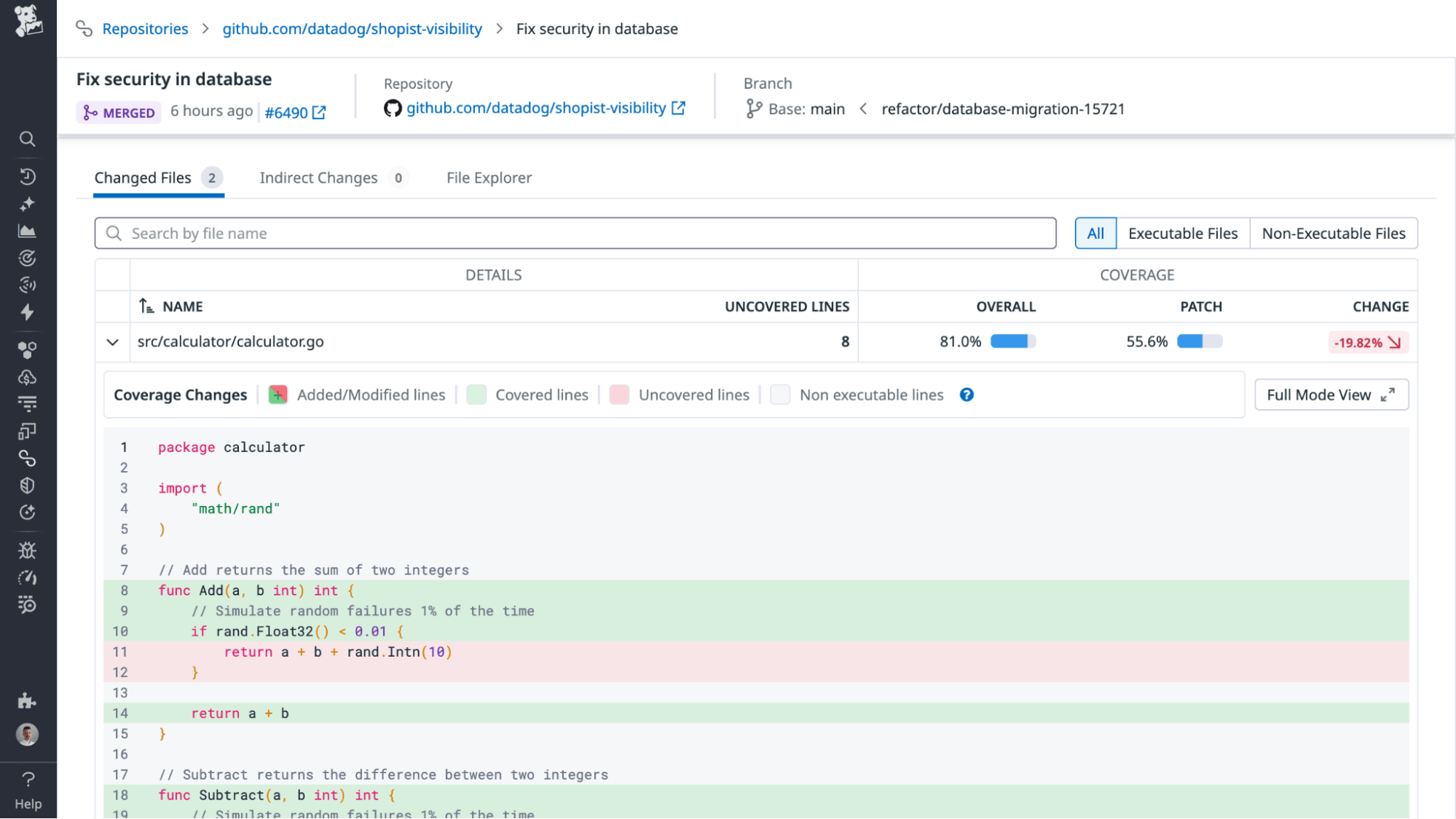

Identify untested code early as codebases evolve

Teams often struggle to understand which parts of a growing codebase are being exercised by tests. As organizations adopt AI-assisted development and ship changes more frequently, coverage reports may be generated per service, repository, or pipeline run, often in different formats. This scatters coverage data across tools and runs, making it harder to spot persistent gaps that affect the reliability of individual services.

Datadog Code Coverage provides a comprehensive view across repositories. It breaks coverage down by line, file, service, and branch, showing developers at a glance which parts of their codebase are tested and which require attention.

Line-level annotations highlight the specific logic that tests do not exercise, helping teams focus their efforts where additional testing is needed. As code changes over time, trends across branches and commits make it easier to track how testing quality evolves and where it improves or declines.

This level of visibility helps engineering teams identify untested code earlier as their codebases evolve.

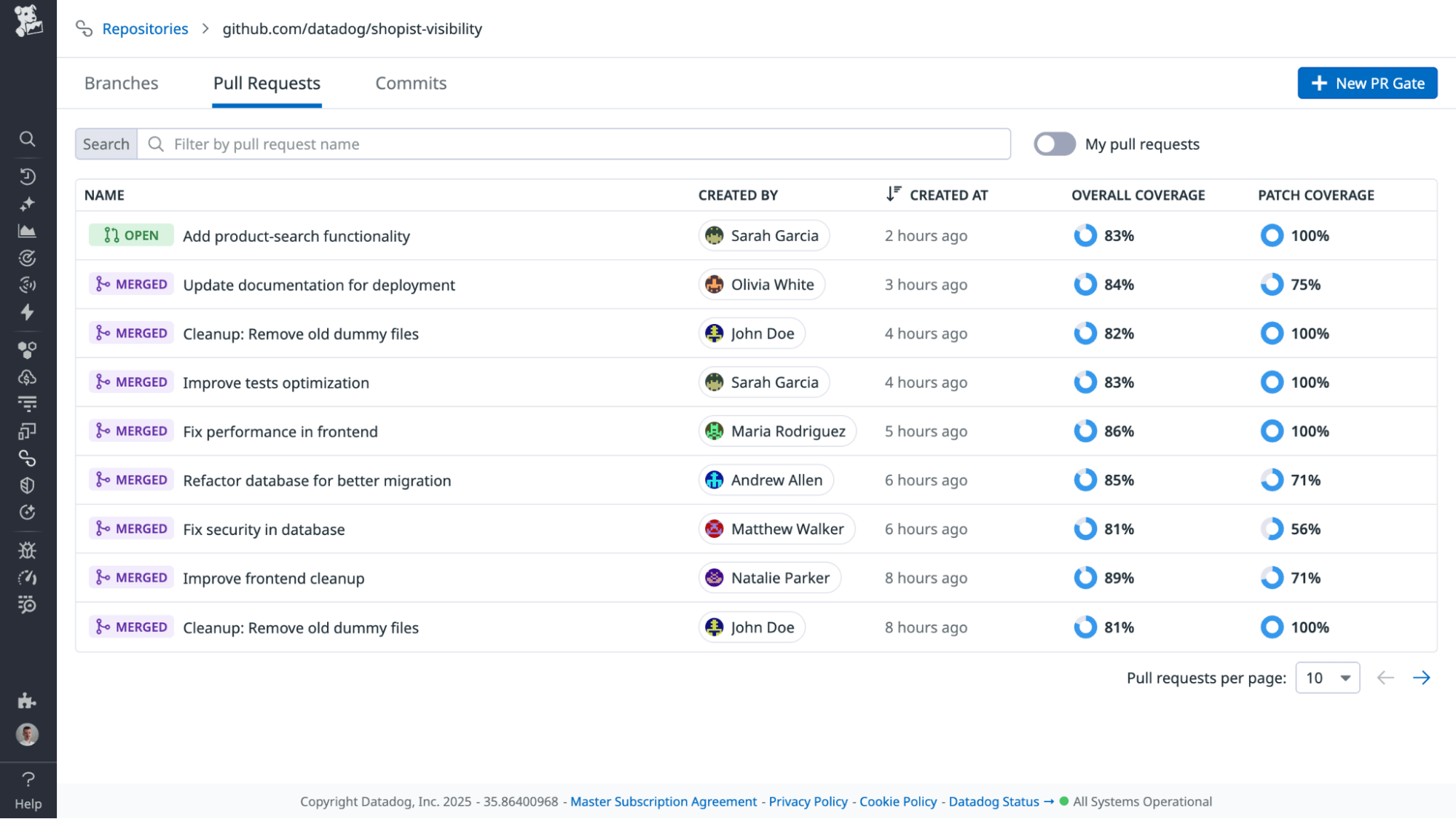

Enforce consistent testing standards across teams and repositories

Testing standards are often defined with good intentions but applied inconsistently across large engineering organizations. Some services adopt strict thresholds, while others rely on ad hoc reviews. As code volume grows, these inconsistencies become harder to manage and create uncertainty about what level of test coverage is required before changes can be merged.

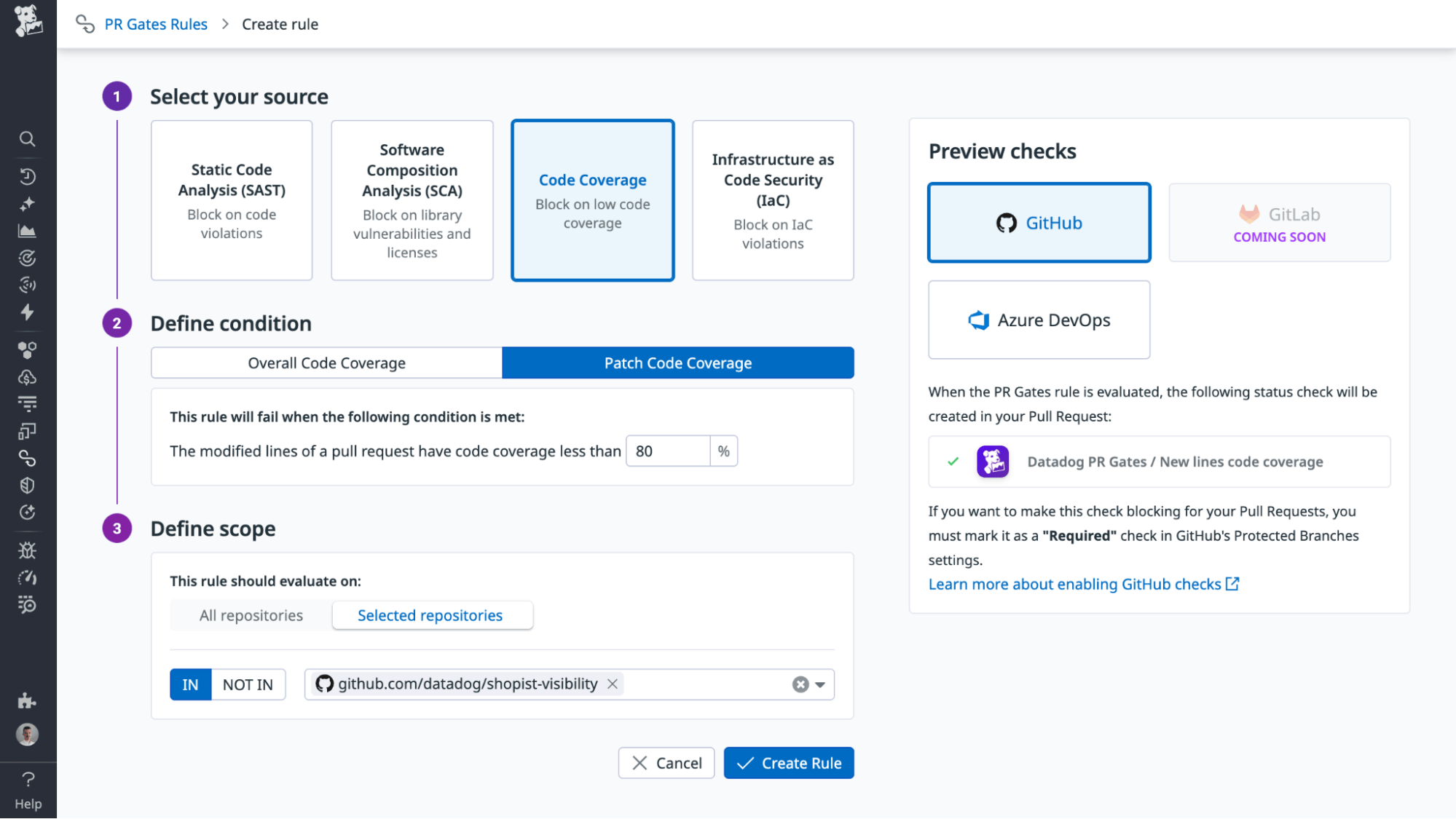

Datadog Code Coverage helps organizations apply consistent testing expectations across their repositories. Platform teams can set shared coverage thresholds that align engineering groups on clear testing goals. As developers open pull requests (PRs), Code Coverage analyzes each change's impact on coverage and surfaces coverage insights directly in the PR, giving contributors a clear view of what needs attention.

Automated quality gates evaluate both overall coverage and the specific coverage impact of new changes, helping prevent untested code from being merged, so reviewers don’t have to enforce testing rules manually.
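The two checks a quality gate performs can be illustrated with a minimal, generic sketch. This is not Datadog's implementation or API: the `FileCoverage` structure, the function names, and the threshold parameters are hypothetical. The sketch assumes line-level coverage has already been parsed from a coverage report and that the set of lines changed in a PR is known. It computes overall coverage across all files and coverage of only the changed lines, and fails the gate if either falls below its threshold.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FileCoverage:
    """Hypothetical per-file line coverage, as parsed from a coverage report."""
    coverable_lines: frozenset[int]  # lines that contain executable code
    covered_lines: frozenset[int]    # lines executed by at least one test


def overall_coverage(files: dict[str, FileCoverage]) -> float:
    """Percentage of coverable lines, across all files, that tests execute."""
    total = sum(len(f.coverable_lines) for f in files.values())
    covered = sum(len(f.covered_lines & f.coverable_lines) for f in files.values())
    return 100.0 if total == 0 else 100.0 * covered / total


def change_coverage(files: dict[str, FileCoverage],
                    changed_lines: dict[str, set[int]]) -> float:
    """Percentage of coverable lines touched by the change that tests execute."""
    coverable = covered = 0
    for path, lines in changed_lines.items():
        fc = files.get(path)
        if fc is None:  # e.g. non-code files with no coverage data
            continue
        relevant = lines & fc.coverable_lines
        coverable += len(relevant)
        covered += len(relevant & fc.covered_lines)
    # Assumption: a change with no coverable lines (docs, config) passes.
    return 100.0 if coverable == 0 else 100.0 * covered / coverable


def evaluate_gate(files: dict[str, FileCoverage],
                  changed_lines: dict[str, set[int]],
                  min_overall: float,
                  min_change: float) -> tuple[bool, list[str]]:
    """Return (passed, failure messages) for both coverage thresholds."""
    failures: list[str] = []
    overall = overall_coverage(files)
    change = change_coverage(files, changed_lines)
    if overall < min_overall:
        failures.append(f"overall coverage {overall:.1f}% is below {min_overall:.1f}%")
    if change < min_change:
        failures.append(f"coverage of changed lines {change:.1f}% is below {min_change:.1f}%")
    return (not failures, failures)


if __name__ == "__main__":
    files = {"app.py": FileCoverage(frozenset(range(1, 11)), frozenset(range(1, 9)))}
    changed = {"app.py": {8, 9, 10}}  # only line 8 of the change is tested
    print(evaluate_gate(files, changed, min_overall=75.0, min_change=80.0))
```

In this example the repository as a whole clears its 75% threshold (8 of 10 lines covered), but the change itself only covers 1 of its 3 lines, so the gate fails. Checking the change separately is what catches a PR that adds untested code to an otherwise well-tested repository.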

Together, these capabilities create a predictable and scalable testing workflow that supports rapid development while allowing teams to uphold organizational quality standards.

Strengthen test suites proactively with automated test generation

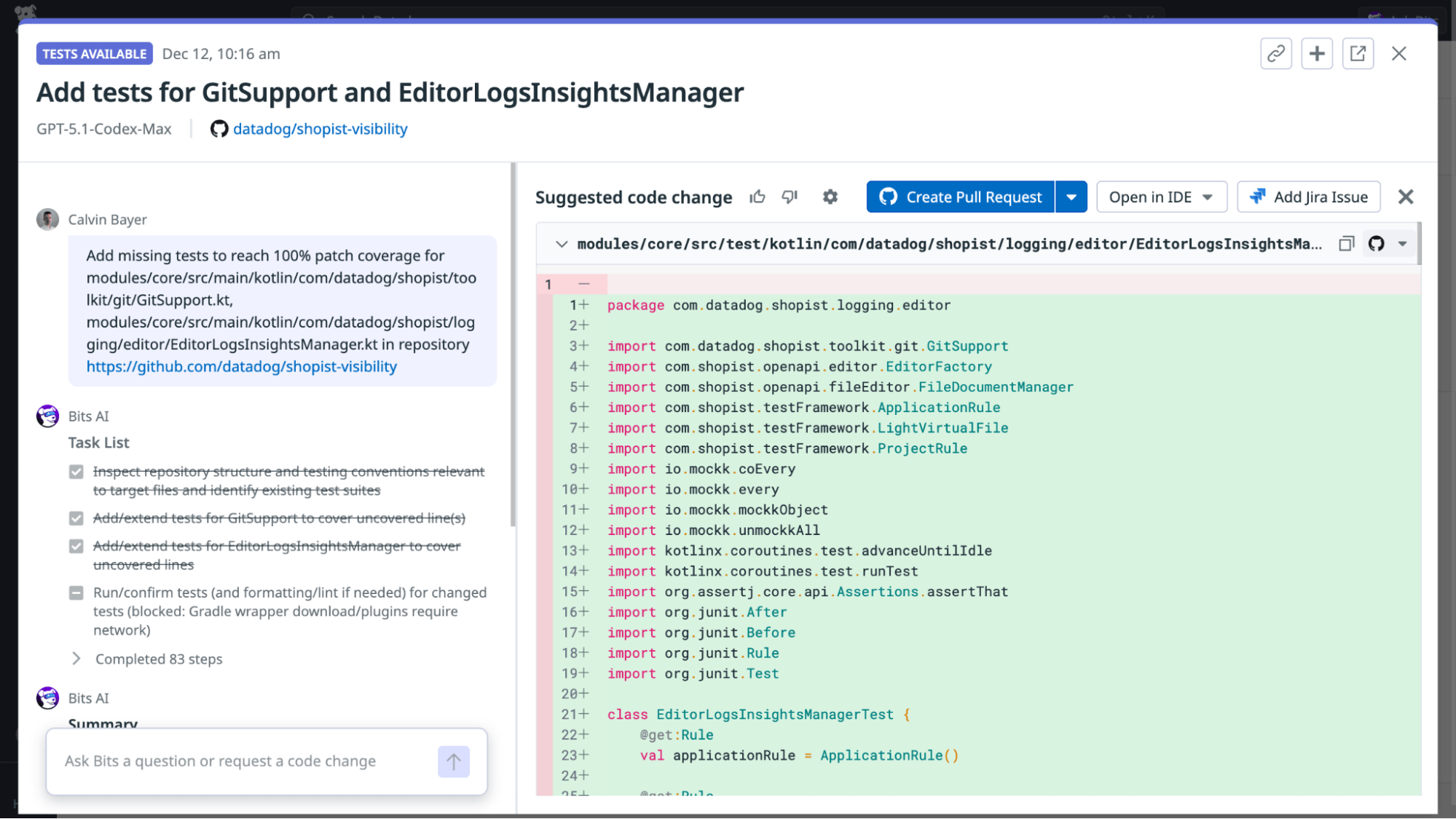

Improving test coverage often requires developers to spend time reconstructing scenarios, tracing untested logic, or writing boilerplate tests. This work slows them down and pulls attention away from building new features. As codebases grow, this manual effort becomes harder to scale efficiently.

Bits AI Dev Agent automates this work by generating tests for untested code paths using repository context. Instead of writing tests by hand, developers can use automated suggestions to fill coverage gaps more quickly and consistently. As a result, teams can move toward more complete test suites without disrupting development workflows.

By reducing the amount of time engineers spend creating foundational tests, teams can focus their efforts on higher-impact work such as designing features, refining application logic, and improving reliability.

Unify software quality signals on a single platform

Code Coverage extends the Datadog Software Delivery Suite by bringing test coverage data into the same workflows engineering teams use every day. Developers can move directly from viewing coverage results to examining test failures, deployment logs, or runtime performance issues, all within a single, consistent interface. That unified perspective helps teams understand not only whether their code is tested but also how that testing influences the overall stability and performance of their systems.

Whether organizations are scaling microservices, adopting AI-driven development tools, or standardizing testing expectations across dozens of teams, Datadog Code Coverage provides the visibility and consistency they need to manage code quality as development velocity increases.

Get started with Datadog Code Coverage

Datadog Code Coverage is now generally available. Read our documentation to see how you can bring actionable coverage insights into your software delivery workflows. If you’re not already a Datadog customer, sign up for a free 14-day trial today.