Addie Beach

Testing ecosystems contain massive amounts of data, including outlined test scenarios, prerequisite configurations, and the tests themselves. As a result, these ecosystems are prone to data sprawl. This makes it difficult to prevent configuration drift and quickly spin up new tests, especially at the frequency needed to support a fast-growing application.

Teams can handle these challenges by treating their tests as part of their application infrastructure. This strategy involves managing your test data in the same platforms you use for the rest of your infrastructure, such as Terraform, one of the most popular infrastructure-as-code tools. Doing so enables you to keep your testing environments in sync, quickly provision new ones, and maintain robust backups.

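In this post, we’ll explore how you can configure synthetic tests in Terraform with Datadog and simplify test creation with Datadog subtests.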

Configure synthetic tests in Terraform with Datadog

Datadog provides robust synthetic testing with built-in support for cross-region and cross-environment testing, as well as version control. In some cases, you may want to use Terraform to customize your setup further. For example, Terraform makes it easy to deploy tests within your own data centers. Some organizations may also require teams to keep backups of their test configurations outside Datadog, for example as Terraform code.

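Depending on how your workflows are structured, you can work with Datadog synthetic tests in Terraform in two main ways: by creating tests directly in Terraform, or by building them in the Datadog UI and importing them into Terraform.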

Creating tests in Terraform

In many organizations, developers are responsible for creating tests for their own projects. Because many developers already use Terraform for other tasks, they can extend it to test creation and simplify their workflows even further. With the Datadog Terraform provider, developers can define Datadog synthetic tests as Terraform resources, including single-step and multistep API tests, browser tests, and mobile tests.
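As an illustration, a minimal browser test defined as a datadog_synthetics_test resource might look like the following sketch. The test name, URL, location, device, and check frequency are hypothetical placeholders, and the set of required arguments can vary between provider versions, so treat this as a starting point rather than a complete definition.

```hcl
# Hypothetical browser test; all names, URLs, and locations are placeholders.
resource "datadog_synthetics_test" "checkout_browser" {
  name       = "Checkout page (browser)"
  type       = "browser"
  status     = "paused" # switch to "live" once the test is ready
  locations  = ["aws:eu-central-1"]
  device_ids = ["laptop_large"]

  # Starting URL for the browser test.
  request_definition {
    method = "GET"
    url    = "https://shop.example.com"
  }

  # Run the test once per hour.
  options_list {
    tick_every = 3600
  }

  # A single step that checks the page URL after loading.
  browser_step {
    name = "Check that the shop page loads"
    type = "assertCurrentUrl"
    params {
      check = "contains"
      value = "shop.example.com"
    }
  }
}
```

Running terraform plan and terraform apply against a configuration like this creates the test in Datadog, and later edits to the file update it in place.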

With this approach, multiple development teams across your organization may contribute to the same test configuration. To keep your test ecosystem consistent, it helps to agree on a few shared best practices, such as those described below.

Using global variables

Global variables in Datadog Synthetic Monitoring enable you to share data across synthetic tests. This data can include usernames, passwords, API keys, and mock payment information. Using global variables ensures that all teams have access to the same sample data, making it easy to create consistent tests quickly. Within a Terraform test definition, you can reference a global variable by adding a config_variable block of type global that points to the variable’s ID. For example:

```hcl
config_variable {
  name = "AUTHENTICATOR-API"
  id   = "f4a2d0c8-3b7b-42fc-9d12-8c6bcb40a91e"
  type = "global"
}
```

Additionally, global variables enable you to control who can access this information, with the option to obfuscate or password-protect sensitive data as needed. You can manage these controls in Terraform with the secure argument of the datadog_synthetics_global_variable resource and with the datadog_restriction_policy resource.
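The global variable itself can also live in Terraform. The sketch below assumes a hypothetical AUTHENTICATOR_API_KEY variable whose value is passed in through a sensitive Terraform input variable, marks it as secure, and uses a restriction policy to limit editing to one role. The role ID is a placeholder, and the resource type prefix and supported relations for restriction policies are worth confirming against the provider documentation for your version.

```hcl
# Hypothetical input variable; supply the real value via a tfvars file or TF_VAR_ env var.
variable "authenticator_api_key" {
  type      = string
  sensitive = true
}

resource "datadog_synthetics_global_variable" "authenticator_api" {
  name        = "AUTHENTICATOR_API_KEY"
  description = "API key shared by authentication tests"
  tags        = ["team:security"]
  value       = var.authenticator_api_key
  secure      = true # obfuscate the value in the UI and in test results
}

# Restrict who can edit the global variable. The role ID is a placeholder.
resource "datadog_restriction_policy" "authenticator_api" {
  resource_id = "synthetics-global-variable:${datadog_synthetics_global_variable.authenticator_api.id}"

  bindings {
    principals = ["role:00000000-0000-0000-0000-000000000000"]
    relation   = "editor"
  }
}
```

A test can then reference this variable with a config_variable block of type global whose id is datadog_synthetics_global_variable.authenticator_api.id, which avoids hard-coding the variable’s UUID.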

Establishing ownership via tagging

Determining ownership can be difficult within Terraform, since the only way to see which users worked on specific resources is through Terraform audit trails. To help teams clearly establish responsibility, you can add team information to Terraform tests with the tags argument, for example tags = ["team:security"]. These tags make it easy to find which team created a test if it needs to be updated. Additionally, if a test fails, tags help you quickly figure out which team is responsible for troubleshooting.
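One way to apply team tags consistently is to define them once in a locals block and reuse them across a team’s tests. The sketch below shows this for a simple HTTP API test; the tag values, URL, location, and frequency are hypothetical.

```hcl
# Hypothetical shared tags for every test owned by the security team.
locals {
  security_team_tags = ["team:security", "env:staging"]
}

resource "datadog_synthetics_test" "login_api" {
  name      = "Login API health check"
  type      = "api"
  subtype   = "http"
  status    = "live"
  locations = ["aws:eu-central-1"]

  # Shared ownership tags plus one test-specific tag.
  tags = concat(local.security_team_tags, ["service:login"])

  request_definition {
    method = "GET"
    url    = "https://api.example.com/login/health"
  }

  # Fail the test unless the endpoint returns HTTP 200.
  assertion {
    type     = "statusCode"
    operator = "is"
    target   = "200"
  }

  options_list {
    tick_every = 900
  }
}
```

Because every test that uses local.security_team_tags carries the same team tag, changing ownership becomes a one-line edit.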

You can enforce ownership attribution best practices by creating a tag policy for the team tag. Tag policies prevent users from saving Datadog synthetic tests—including ones created in Terraform—if they don’t include the required tags.

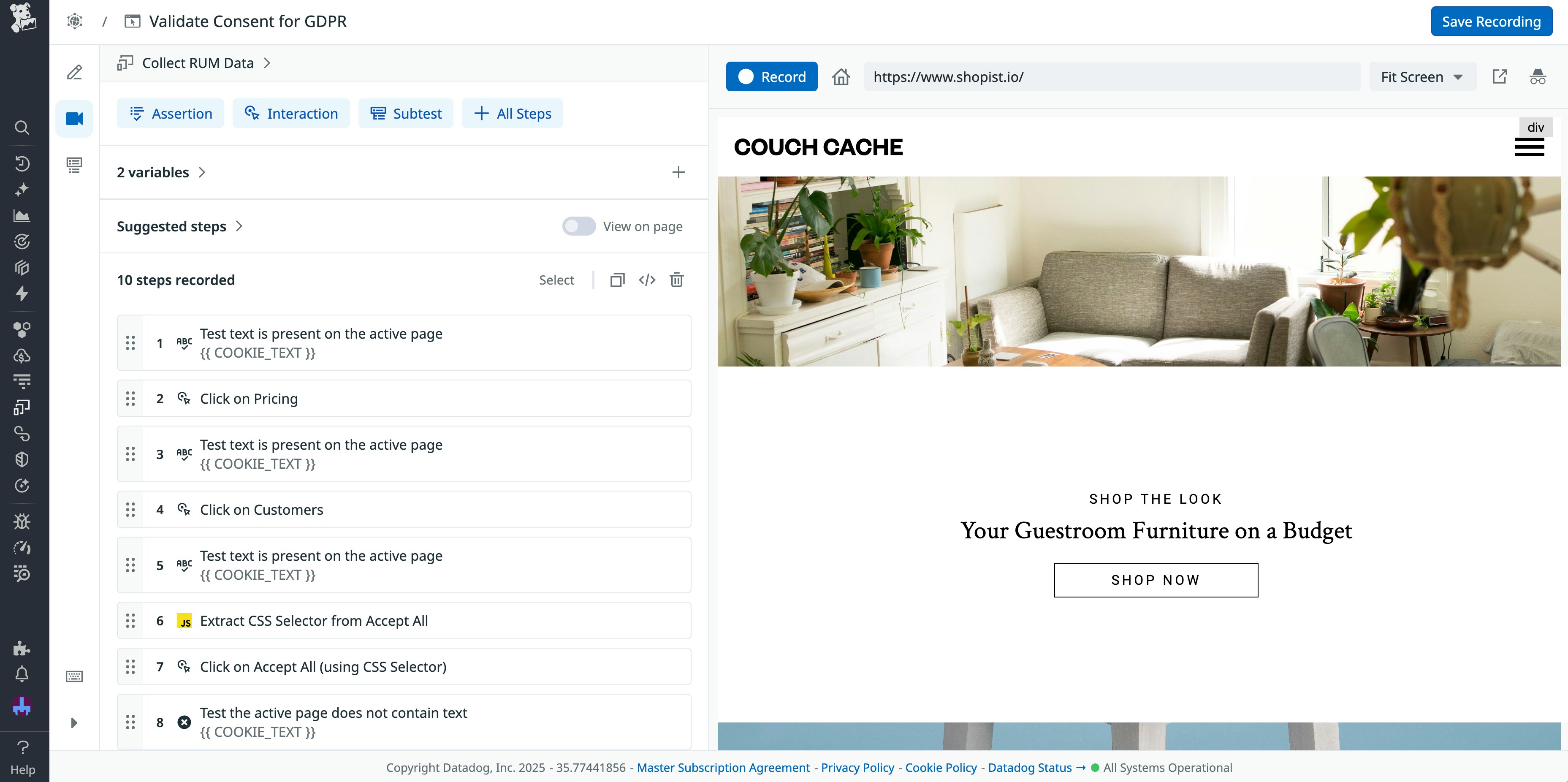

Creating resilient locators

Datadog provides two options for working with locators: built-in self-healing locators, which are generated for each test, and user-defined CSS or XPath locators. In the Datadog UI, the former are used by default, but you can create the latter as needed.

Because elements within Terraform must be defined manually, you’ll need to configure a locator for each element yourself. You can view our guide for more details on when CSS or XPath makes more sense. In Terraform, you define these locators with an element_user_locator block inside a browser step’s params block, as shown below:

```hcl
element_user_locator {
  value {
    type  = "css"
    value = "#checkout-button"
  }
}
```

For each user-defined locator in Terraform, Datadog automatically generates a self-healing locator. These locators will update as your UI changes, ensuring that your tests remain reliable across redesigns.
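In context, a user-defined locator sits inside the params block of a browser step. The following sketch shows a hypothetical click step that uses an XPath locator instead of CSS and fails the test if the element cannot be found. The step name and XPath expression are placeholders, and some step types may need additional params.

```hcl
# Fragment for the body of a hypothetical datadog_synthetics_test of type "browser".
browser_step {
  name = "Click the checkout button"
  type = "click"

  params {
    element_user_locator {
      # Fail instead of silently continuing if the element is missing.
      fail_test_on_cannot_locate = true

      value {
        type  = "xpath"
        value = "//button[@id='checkout-button']"
      }
    }
  }
}
```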

Import tests created within the Datadog UI

While Terraform-based test creation can be useful for developers who already know Terraform syntax, some organizations involve non-technical teams in their test design processes. In these situations, users can build initial tests directly within the Datadog UI. This enables teams to take advantage of Datadog’s code-free test creation features, such as test templates for common use cases and interaction-based test recording. The UI also clearly displays the actions, global variables, and assertions available for use. Developers can then import the finished tests into Terraform for storage or ongoing maintenance.

To do this, developers can start by retrieving the test identifiers and configuration files within Datadog. Then, they can import these resources into Terraform by configuring the Datadog provider and creating an import block, as shown below:

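Datadog provider configuration

```hcl
terraform {
  required_providers {
    datadog = {
      source = "DataDog/datadog"
    }
  }
}

# Pass credentials in as sensitive variables to keep them out of source control.
variable "datadog_api_key" {
  type      = string
  sensitive = true
}

variable "datadog_app_key" {
  type      = string
  sensitive = true
}

provider "datadog" {
  api_key = var.datadog_api_key
  app_key = var.datadog_app_key
}
```

Import block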

```hcl
import {
  to = datadog_synthetics_test.payment_test
  id = "ad1-b2c-ds3"
}
```

With Terraform 1.5 or later, running terraform plan -generate-config-out=generated.tf with this block automatically generates the test configuration in Terraform. This differs from the terraform import command, which only updates the Terraform state file and requires you to write the test configuration manually. Alternatively, you can skip importing your tests entirely and query them as data sources instead. This approach is useful if you don’t want to store your test configuration in Terraform and simply want to reference certain test resources, such as subtests or global variables.
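Referencing existing tests and global variables through data sources might look like the sketch below. The test ID and variable name are hypothetical, and the lookups assume the test_id and name arguments that recent provider versions document for these data sources, as well as an id attribute that resolves to the test’s public ID.

```hcl
# Look up existing Datadog resources without managing them in Terraform.
data "datadog_synthetics_test" "rewards_flow" {
  test_id = "ad1-b2c-ds3" # placeholder public ID
}

data "datadog_synthetics_global_variable" "authenticator_api" {
  name = "AUTHENTICATOR_API_KEY" # placeholder variable name
}

# Inside a test, these lookups can replace hard-coded IDs, for example:
#   subtest_public_id = data.datadog_synthetics_test.rewards_flow.id
#   id                = data.datadog_synthetics_global_variable.authenticator_api.id
```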

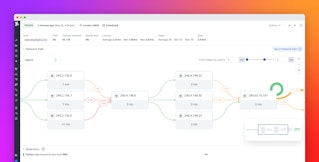

Simplify test creation with Datadog subtests

To better align your synthetic monitoring strategy with your teams’ workflows, you may choose to combine these methods. While Terraform-based test creation integrates conveniently with development workflows, manually coding tests step by step in Terraform can quickly become tedious. As such, teams may find it more efficient to create the foundation of their tests directly in Datadog Synthetic Monitoring.

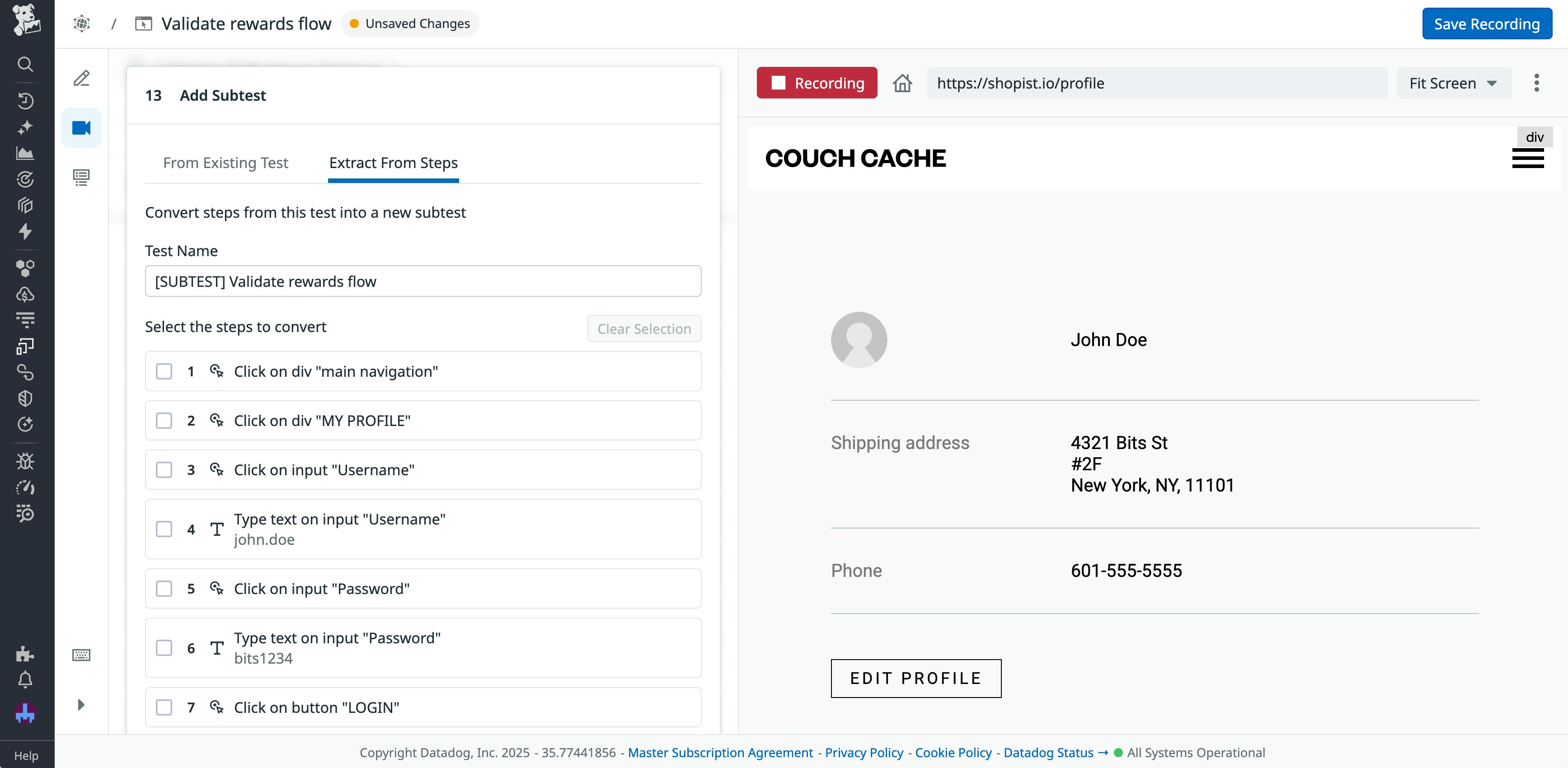

One of the most effective ways of doing so is by using subtests. Subtests enable you to modularize your tests by creating reusable groups of test steps. You can use subtests to develop the basic building blocks of your tests using the Datadog UI, then combine and recombine them within Terraform.

After recording synthetic tests within Datadog and importing them into Terraform, you can reuse them in new tests as subtests by adding a browser step of type playSubTest. In the step’s params block, specify the test to use as a subtest with the subtest_public_id argument. If you want the subtest to run in a new window or tab, for example to display a newsletter signup screen in a popup window, you can configure this with the playing_tab_id argument. The code for a finished subtest browser step could look like this:

```hcl
browser_step {
  name = "Test user authentication"
  type = "playSubTest"

  params {
    subtest_public_id = "ad1-b2c-ds3"
  }
}
```

Let’s say that you’re creating several lengthy browser tests that contain the same flow, which involves logging into a rewards account, redeeming rewards points, and viewing redemption offers. Rewriting these steps each time you create a new test would be inefficient. Instead, you can record these steps as a single rewards subtest in Datadog.

You can then import this subtest into Terraform and use it as the base of your tests. Because subtests are shared across your organization, this simplifies test creation for other teams as well.
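Putting this together, a new browser test could replay the shared rewards subtest and then add its own checks. The sketch below is hypothetical: the subtest ID reuses the placeholder from the earlier subtest step, and the name, URL, device, location, and tag values are illustrative.

```hcl
# Hypothetical test built on top of a shared rewards subtest.
resource "datadog_synthetics_test" "rewards_checkout" {
  name       = "Redeem rewards at checkout"
  type       = "browser"
  status     = "paused"
  locations  = ["aws:eu-central-1"]
  device_ids = ["laptop_large"]
  tags       = ["team:rewards"]

  request_definition {
    method = "GET"
    url    = "https://shop.example.com"
  }

  options_list {
    tick_every = 3600
  }

  # Replays the recorded flow: log in, redeem points, view offers.
  browser_step {
    name = "Run rewards subtest"
    type = "playSubTest"
    params {
      subtest_public_id = "ad1-b2c-ds3"
    }
  }

  # Test-specific step that runs after the shared flow.
  browser_step {
    name = "Confirm the checkout page loads"
    type = "assertCurrentUrl"
    params {
      check = "contains"
      value = "/checkout"
    }
  }
}
```

If the rewards flow changes, updating the subtest in Datadog updates every test that replays it, so only the test-specific steps live in each resource.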

Develop and manage your test ecosystem with Terraform and Datadog

As test collections grow, configuration data can spread across multiple sources. This can make maintenance difficult, leading to stale data and tedious test creation. By treating tests as infrastructure, you can manage them more efficiently with platforms like Terraform.

To learn more, read our documentation on getting started with Datadog Synthetic Monitoring and using it with Terraform. Or, if you’re new to Datadog, you can sign up for a 14-day free trial.