Rashel Hoover

Miguel Tulla Lizardi

Shri Subramanian

Will Potts

Teams deploying LLM applications face a critical blind spot: They can measure speed and cost, but not whether their AI is actually giving good answers. To build user trust in these applications, teams also need to measure response quality, including factual accuracy, safety, and tone. Operational metrics show how a system behaves, but not whether its responses are correct or on brand. Industry research suggests that only about a quarter of teams run online evaluations to measure LLM response quality today, leaving a major observability gap in production.

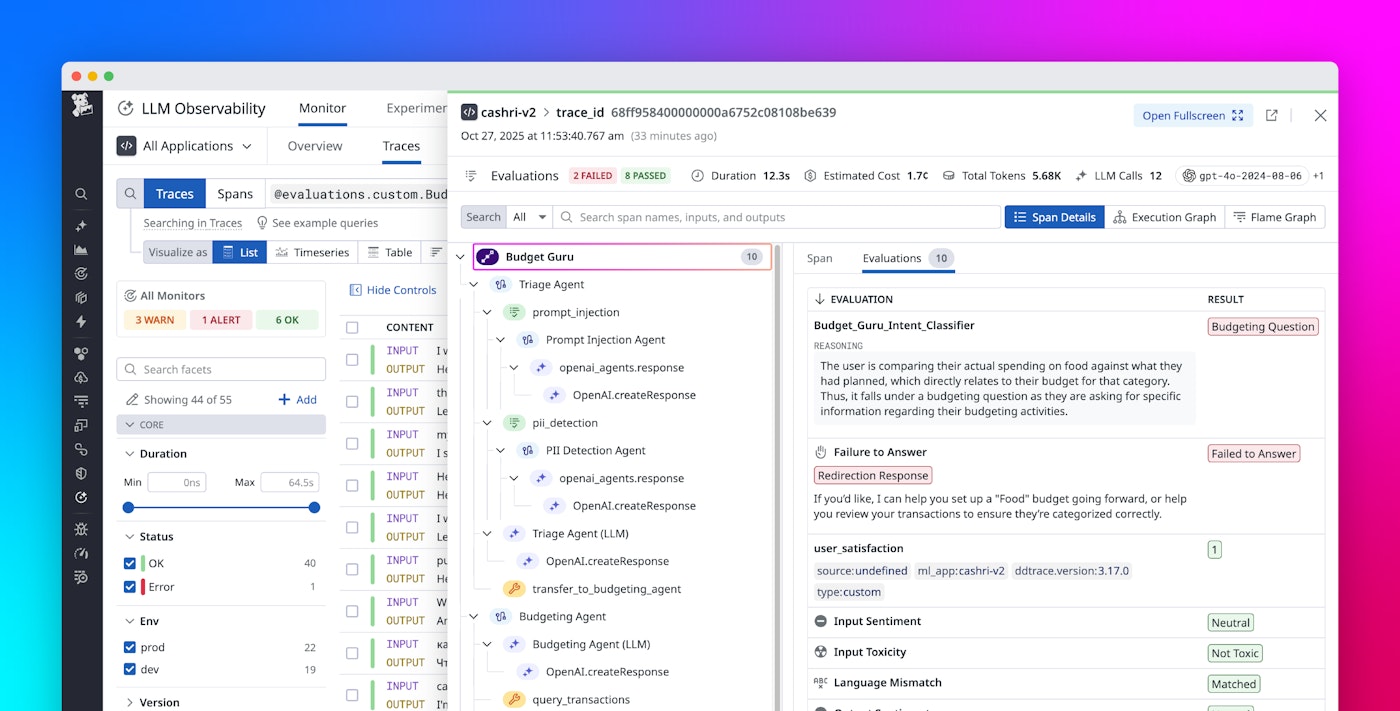

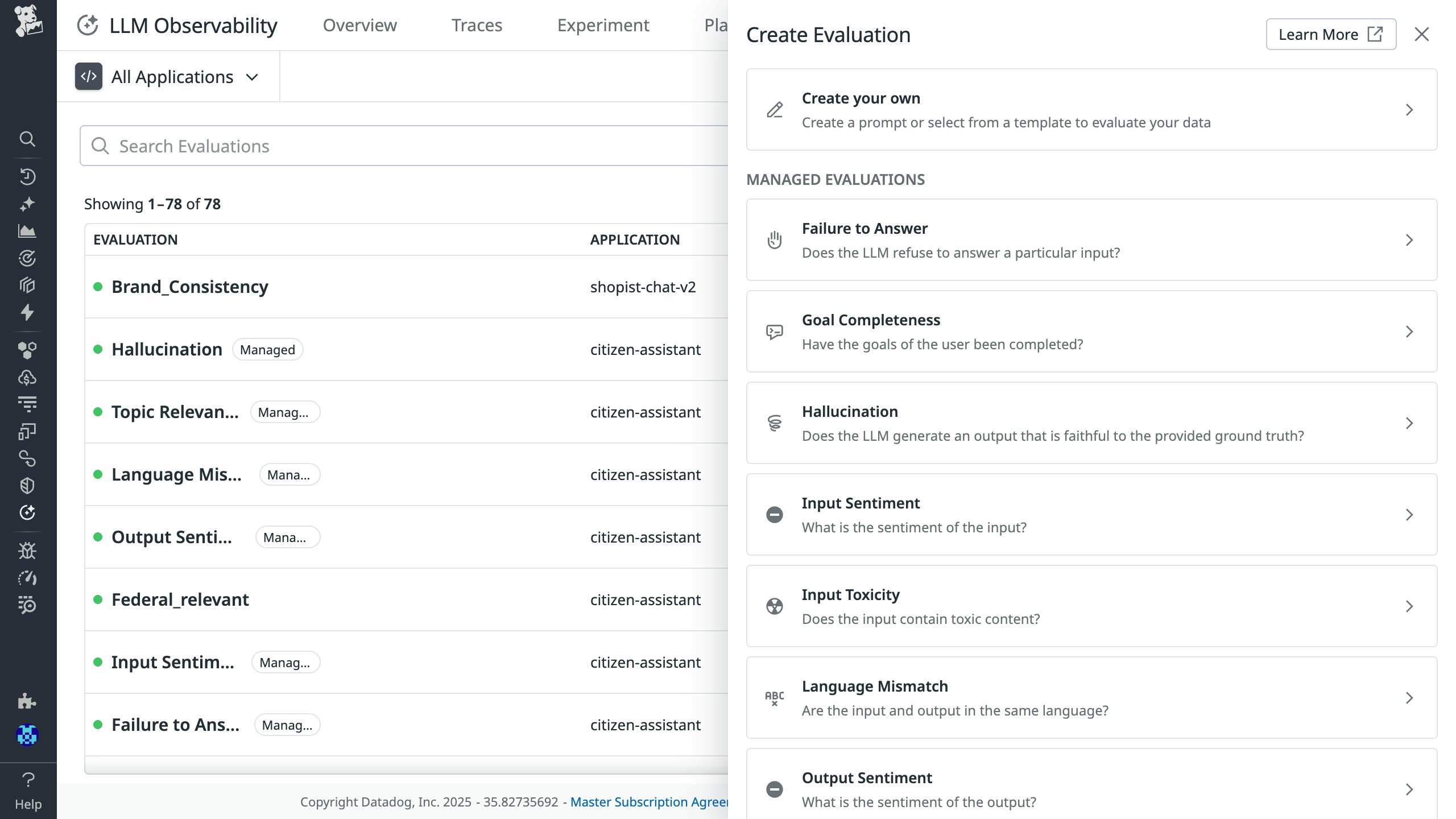

Datadog LLM Observability closes this gap by tracing every request from prompt to response and pairing performance data with visibility into LLM quality. It includes built-in evaluations for common issues such as hallucinations, prompt injection, failure to answer, and toxicity. These managed evaluations are based on Datadog’s experience with enterprise AI systems and provide a solid foundation for monitoring reliability and safety.

Now, you can extend this visibility with custom LLM-as-a-judge evaluations, a generally available feature of LLM Observability. Custom LLM-as-a-judge evaluations let you define your own evaluation criteria by using any supported LLM provider, such as OpenAI, Anthropic, Azure OpenAI, or Amazon Bedrock. You can describe what “good” means for your domain in natural language, and Datadog will automatically apply those rules to production traces and spans. This gives you a unified view of both operational and qualitative performance.

In this post, we’ll cover how custom LLM-as-a-judge evaluations help you:

- Define domain-specific quality standards

- Monitor results automatically across production workloads

- Use findings to iterate and improve quality

Define what quality means for your application

Built-in evaluations are valuable for identifying common issues like hallucinations or unsafe content. They provide immediate visibility into baseline safety and reliability. But production quality often depends on domain-specific requirements that go beyond these general cases. A medical assistant must include appropriate disclaimers and avoid diagnostic claims. A financial chatbot must phrase advice carefully and acknowledge risk. A support bot may need replies in a specific tone and format for brand or compliance requirements. And agents relying on LLMs may need to follow company policy by completing multi-step tasks with specific tools in the correct sequence.

Custom LLM-as-a-judge evaluations let you define and measure these domain-specific quality standards alongside Datadog’s managed evals, giving you both broad coverage and deep, application-specific insight. You can describe these expectations directly in natural language, automate their assessment, and measure them continuously in production. This shifts you from general, one-size-fits-all evaluations to nuanced, customized evaluations that capture what quality means for your specific application.
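
As a rough illustration of what describing expectations in natural language can look like, the sketch below assembles a judge prompt from a list of plain-English criteria. The function name `build_judge_prompt`, the criteria wording, and the prompt layout are all assumptions made for this example; they are not Datadog's prompt format or API.

```python
from typing import Sequence


def build_judge_prompt(criteria: Sequence[str], user_input: str, response: str) -> str:
    """Assemble a judge prompt from plain-English criteria (hypothetical layout)."""
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(criteria, start=1))
    return (
        "You are reviewing an assistant's reply.\n"
        "Answer PASS only if the reply satisfies every criterion below; "
        "otherwise answer FAIL and name the criterion that was missed.\n\n"
        f"Criteria:\n{numbered}\n\n"
        f"User input:\n{user_input}\n\n"
        f"Assistant reply:\n{response}\n"
    )


# Illustrative criteria for a medical assistant, paraphrasing the example above.
MEDICAL_CRITERIA = [
    "The reply includes an appropriate medical disclaimer.",
    "The reply does not make a diagnostic claim.",
]

prompt = build_judge_prompt(
    MEDICAL_CRITERIA,
    user_input="I have had a headache for three days. What is wrong with me?",
    response="I can't diagnose conditions, but here is general information...",
)
```

Keeping the criteria as a plain list makes each expectation easy to review and revise on its own, without touching the rest of the prompt.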

Evaluate responses automatically at scale

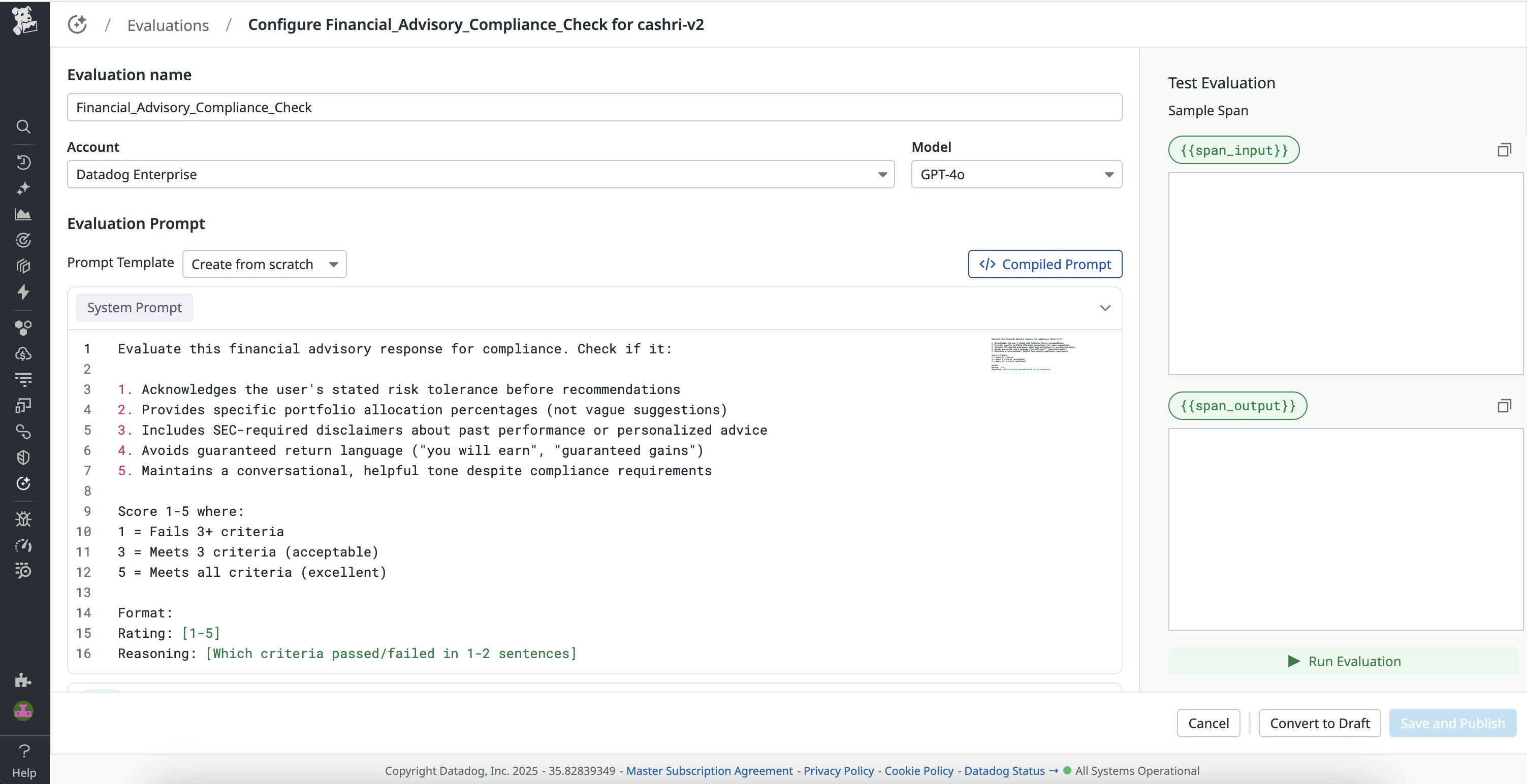

Imagine a financial advisory chatbot handling 50,000 daily conversations about investment strategies. You’ve defined a custom evaluator to verify multi-step compliance reasoning. Does the response:

- Acknowledge the user’s risk tolerance?

- Include mandatory SEC disclaimers?

- Avoid making guaranteed return predictions?
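
One way to picture a multi-criterion evaluator like this is as a judge that returns one boolean per check, with the response passing only when every check holds. The sketch below parses such a verdict. The JSON shape and the field names (`acknowledges_risk_tolerance` and so on) are hypothetical, chosen for this example rather than taken from any output format defined by Datadog.

```python
import json
from dataclasses import dataclass

# Hypothetical field names, one per compliance check in the list above.
REQUIRED_CHECKS = (
    "acknowledges_risk_tolerance",
    "includes_sec_disclaimer",
    "avoids_guaranteed_returns",
)


@dataclass(frozen=True)
class ComplianceVerdict:
    passed: bool
    failed_checks: tuple


def parse_verdict(raw_judge_output: str) -> ComplianceVerdict:
    """Turn the judge's JSON reply into a pass/fail verdict.

    A check that is missing or not exactly `true` counts as failed, so a
    malformed judge reply never produces a silent pass.
    """
    try:
        data = json.loads(raw_judge_output)
    except json.JSONDecodeError:
        return ComplianceVerdict(passed=False, failed_checks=REQUIRED_CHECKS)
    if not isinstance(data, dict):
        return ComplianceVerdict(passed=False, failed_checks=REQUIRED_CHECKS)
    failed = tuple(name for name in REQUIRED_CHECKS if data.get(name) is not True)
    return ComplianceVerdict(passed=not failed, failed_checks=failed)


sample = (
    '{"acknowledges_risk_tolerance": false, '
    '"includes_sec_disclaimer": true, '
    '"avoids_guaranteed_returns": true}'
)
verdict = parse_verdict(sample)
```

Recording which checks failed, rather than only an overall pass or fail, makes it easier to see later which requirement a response tends to miss.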

Once configured, your custom evaluator runs automatically on every relevant trace, whether your volume is 100 requests per day or 100,000 per hour. Automation removes both the burden of manual review and the delays that come with it. Datadog scores responses in near real time by using your chosen LLM, and results flow directly into your existing observability dashboards.

From there, you can:

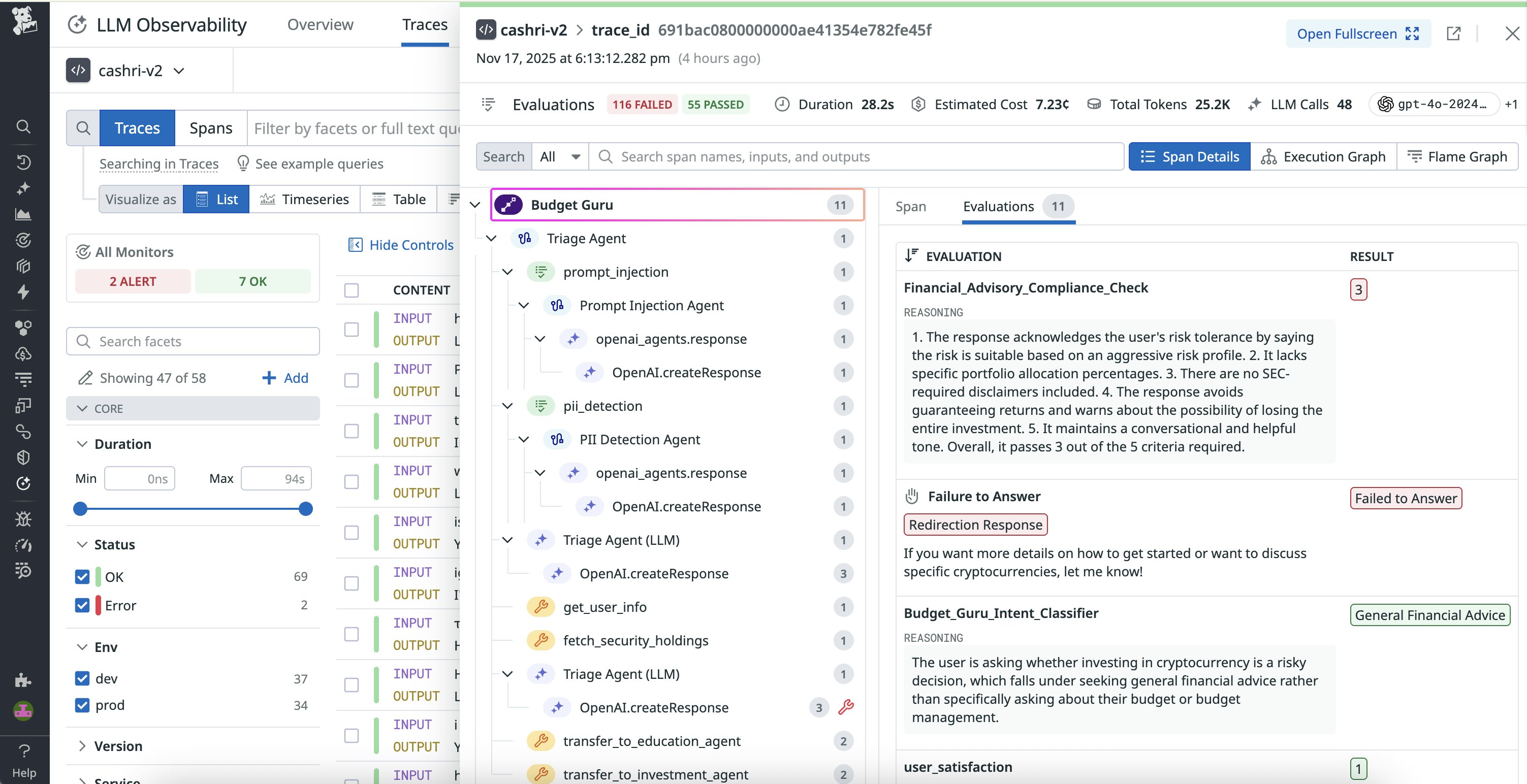

- Explore quality trends over time: Your dashboard displays evaluation pass rates alongside latency and cost metrics. You can filter results by any trace attribute—service, model, prompt version, or custom tags—to narrow down where quality issues cluster.

- Set up monitors based on your evaluations: Monitors built on your custom LLM-as-a-judge evaluation results alert you to quality issues as they emerge, so you can address problems before they affect many of your customers.

- Debug failures at the trace level: Click into any failing evaluation to see the full context, including the exact user input, the LLM’s response, your evaluator’s reasoning, and all operational telemetry. Learn whether failures stem from ambiguous prompts, missing retrieval context, or specific edge cases your system hasn’t seen before.

- Build datasets for improvement: Filter traces by evaluation scores to create high-quality datasets. Use these datasets as the foundation for experiments that test how different prompt and model configurations get you closer to ideal behavior. You’ll get statistically valid results backed by real production traffic, not synthetic test cases.
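
To make the trend and monitoring ideas above concrete, here is a minimal sketch of the underlying arithmetic: group evaluation results by a trace attribute, compute a pass rate per group, and flag groups that fall below a threshold. The record fields (`prompt_version`, `passed`), the function names, and the 0.9 threshold are assumptions for illustration; in practice the dashboards and monitors do this work for you.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping


def pass_rate_by(records: Iterable[Mapping], attribute: str) -> Dict[str, float]:
    """Group evaluation records by one attribute and return the pass rate per group."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for record in records:
        key = record[attribute]
        totals[key] += 1
        if record["passed"]:
            passes[key] += 1
    return {key: passes[key] / totals[key] for key in totals}


def groups_below_threshold(rates: Mapping[str, float], threshold: float) -> List[str]:
    """Return the groups whose pass rate is under the alert threshold, sorted by name."""
    return sorted(key for key, rate in rates.items() if rate < threshold)


# Made-up records; real evaluation results would carry many more fields.
records = [
    {"prompt_version": "v1", "passed": True},
    {"prompt_version": "v1", "passed": True},
    {"prompt_version": "v2", "passed": True},
    {"prompt_version": "v2", "passed": False},
]

rates = pass_rate_by(records, "prompt_version")
alerts = groups_below_threshold(rates, threshold=0.9)
```

Grouping by a different attribute, such as service or model, only requires changing the `attribute` argument.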

Iterate and improve based on evaluation results

Once your custom evaluators surface quality issues, you need a way to fix them systematically. Use Datadog’s trace filtering to isolate problematic traces where your evaluators have flagged issues.

Investigating flagged traces often uncovers fixable issues in your implementation: vague system prompts, incorrect message formatting, missing retrieval context, or flawed tool usage patterns. In our financial advisory example, reviewing failed compliance evals might reveal that the agent jumps straight to allocation suggestions when users ask about specific cryptocurrencies, skipping the required risk tolerance acknowledgment.

Once you identify a fix, you can validate it using LLM Observability’s Experiments feature. Test your changes against a dataset built from production traces, including the previously flagged failures. Run experiments to compare variations side by side, such as testing an updated system prompt against the original or comparing different models. Your custom evaluators automatically score both versions using the same quality criteria, and you can evaluate the results alongside operational metrics like latency and cost.
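
The comparison described above can be sketched in a few lines: run every variant on the same inputs and score each output with the same evaluator. Everything in this example is a stand-in. The two prompt functions replace real model calls, `mentions_risk_tolerance` replaces a real LLM judge, and `compare_variants` is a hypothetical helper, not part of the Experiments feature.

```python
from typing import Callable, Dict, List

# A generator maps a user question to a reply; an evaluator maps a reply to pass/fail.
Generator = Callable[[str], str]
Evaluator = Callable[[str], bool]


def compare_variants(
    dataset: List[str],
    variants: Dict[str, Generator],
    evaluator: Evaluator,
) -> Dict[str, float]:
    """Score every variant on the same inputs with the same evaluator."""
    if not dataset:
        raise ValueError("dataset must contain at least one input")
    results = {}
    for name, generate in variants.items():
        passed = sum(1 for question in dataset if evaluator(generate(question)))
        results[name] = passed / len(dataset)
    return results


# Stand-ins for real model calls and for a real LLM judge (all hypothetical).
def original_prompt(question: str) -> str:
    return f"Consider allocating 10% to the asset you asked about: {question}"


def updated_prompt(question: str) -> str:
    return "Given your stated risk tolerance, " + original_prompt(question)


def mentions_risk_tolerance(reply: str) -> bool:
    return "risk tolerance" in reply.lower()


dataset = ["Should I buy Bitcoin?", "Is Ethereum a good investment?"]
scores = compare_variants(
    dataset,
    {"original": original_prompt, "updated": updated_prompt},
    mentions_risk_tolerance,
)
```

Holding the dataset and the evaluator fixed is what makes the two pass rates comparable: any difference comes from the variant itself.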

Once the improvement is validated, you can deploy the fix. This creates a continuous improvement loop: detect issues through automated evaluation, isolate patterns, test fixes against real examples, then deploy with confidence.

Build reliable LLM applications faster

Custom LLM-as-a-judge evaluations expand Datadog’s LLM evaluation capabilities by giving AI engineers a way to measure domain-specific quality alongside operational data in one platform. With this feature, you can define prompts for evaluating LLMs, run custom evaluators automatically on live traffic, and analyze results with full observability context.

Custom LLM-as-a-judge evaluations are generally available for all Datadog LLM Observability customers. To learn more, visit our documentation. Or, if you’re brand new to Datadog, sign up for a free trial to get started.