Patrick Krieger

Ellie Cohen

Natasha Goel

Engineering teams need to deliver reliable, secure, and high-performing applications, all while keeping costs under control. But engineers often lack visibility into cloud cost data, relying on finance-driven reports that they receive only after the billing cycle closes. Without daily cost insights alongside observability data, they may not learn that an infrastructure change caused a significant cost increase until it’s too late.

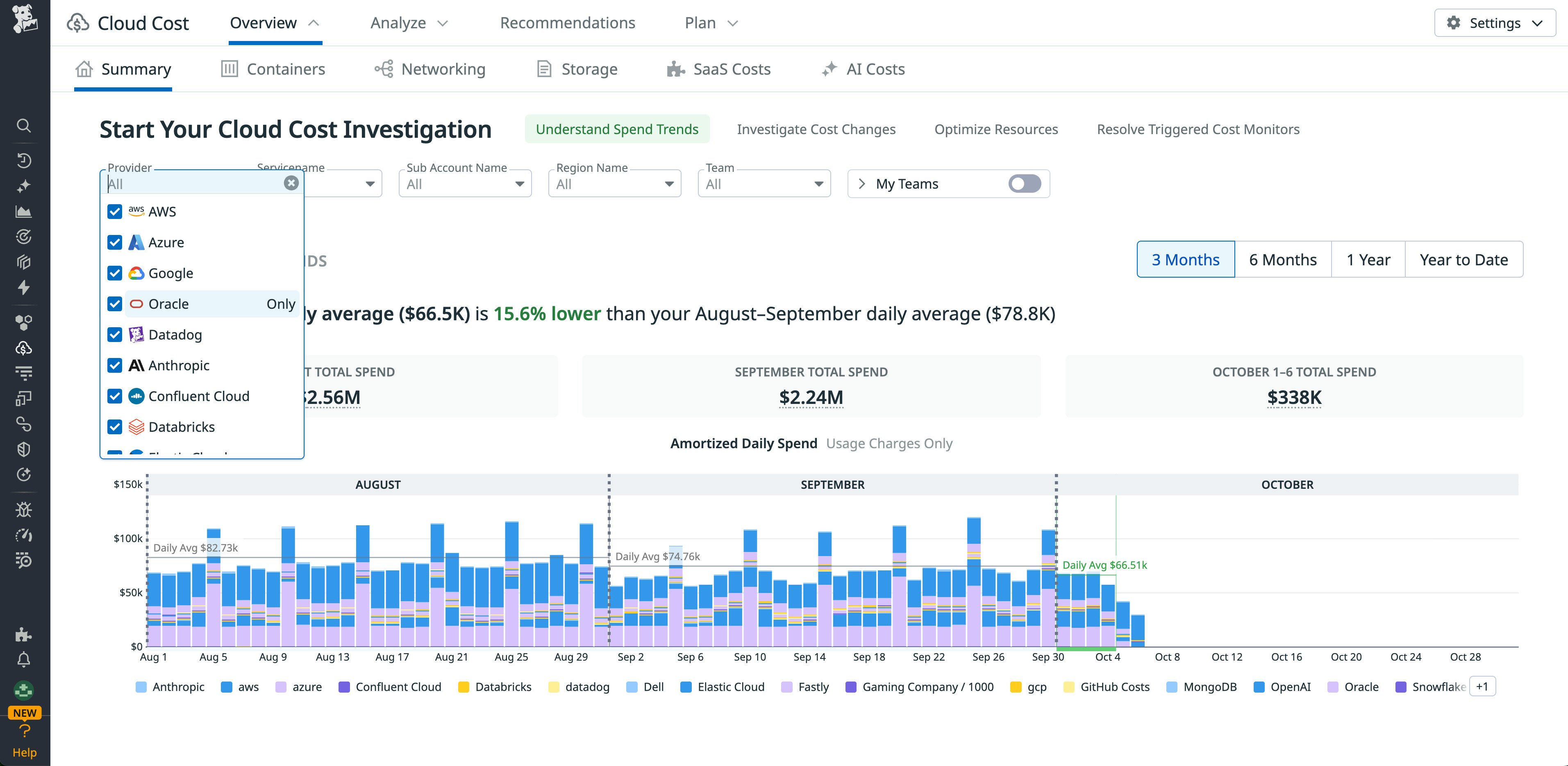

To help close this gap, Datadog Cloud Cost Management (CCM) now supports Oracle Cloud Infrastructure (OCI). Organizations that use OCI often operate in hybrid and multi-cloud environments, making unified cost visibility difficult. Now, these organizations and their engineers can access the same capabilities for daily cost visibility, allocation, and optimization that they already rely on for AWS, Microsoft Azure, and Google Cloud.

In this post, we’ll cover how OCI support in CCM helps you:

- Get granular visibility into OCI costs

- Uncover opportunities for cost savings

- Avoid cost overruns

- Control unpredictable AI costs

Get granular visibility into OCI costs

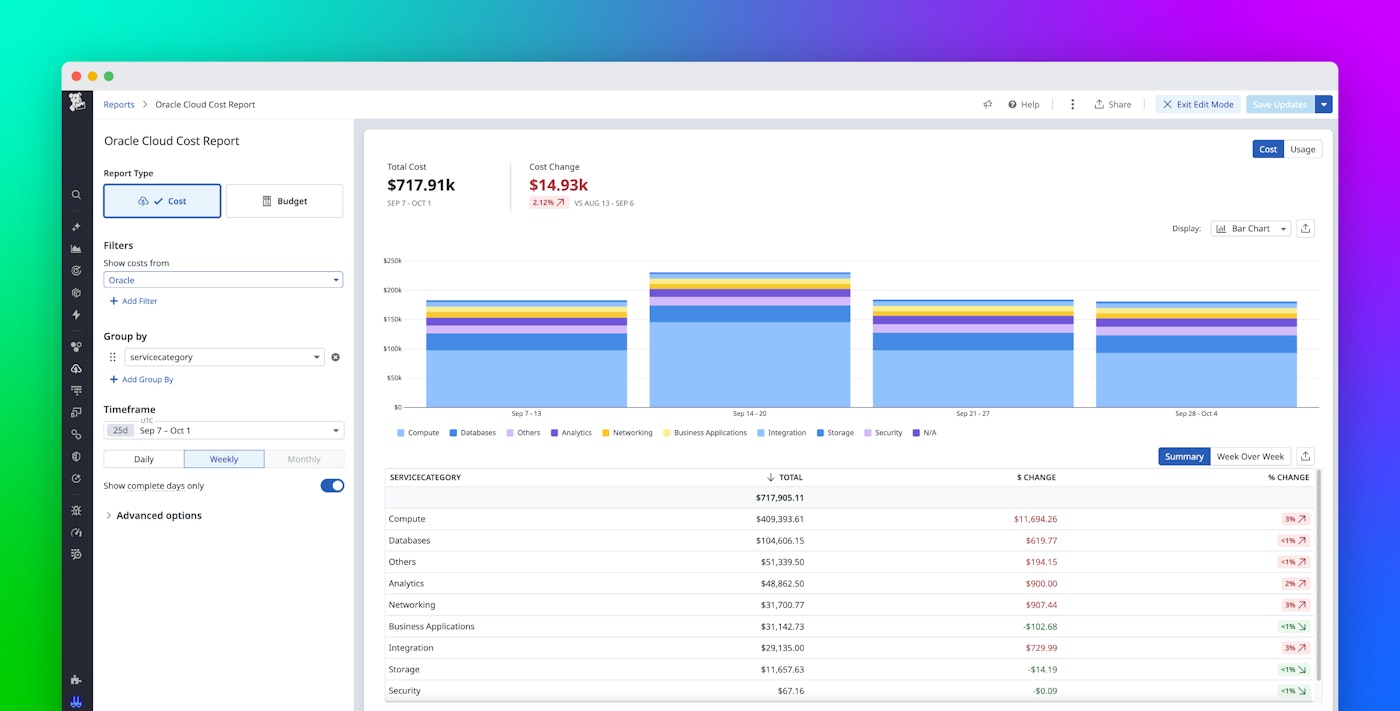

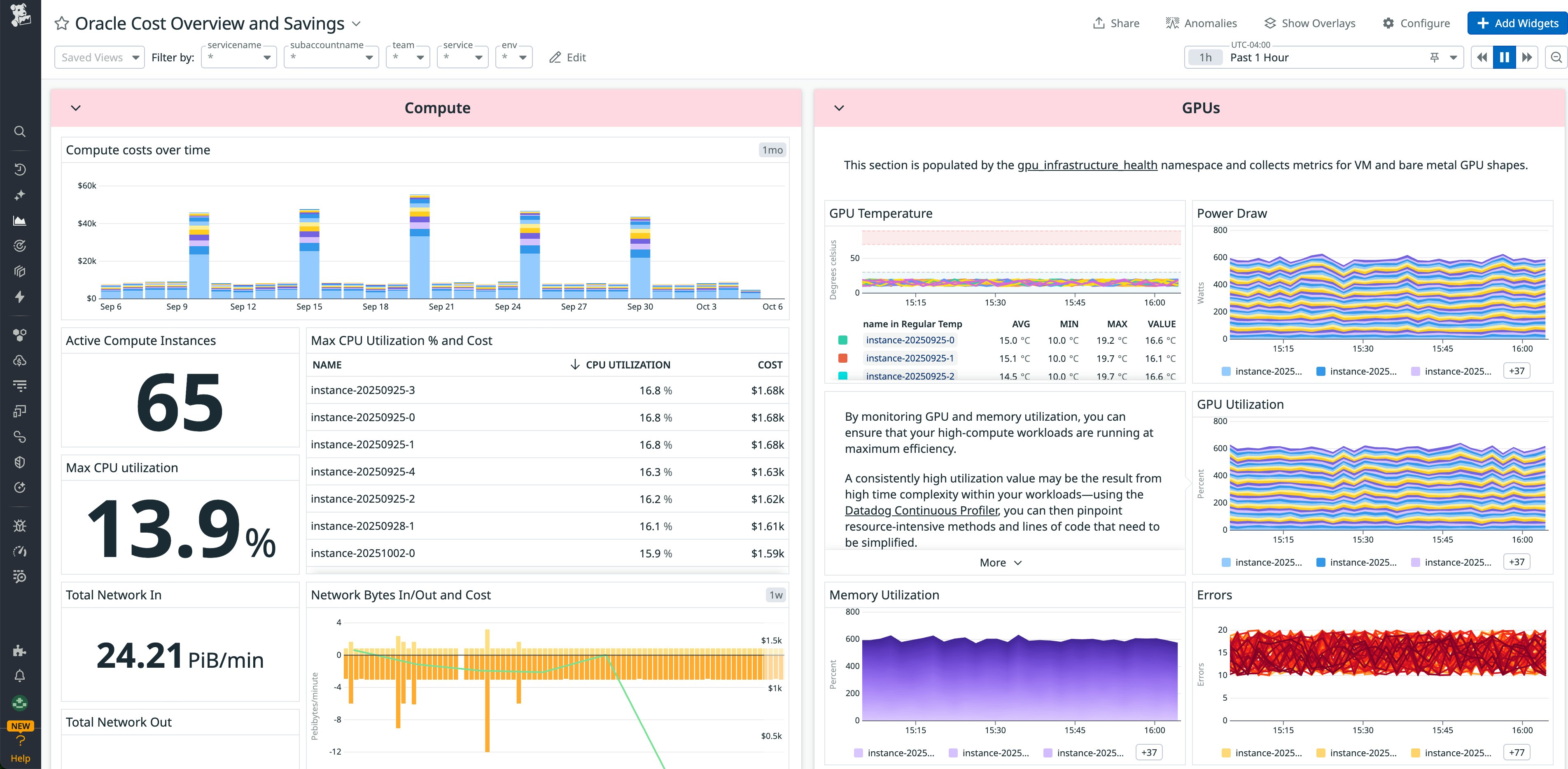

Instead of toggling between OCI’s billing console and other sources such as exported CSV files, you can now view all of your OCI costs directly in Datadog. CCM ingests OCI cost and usage data daily, letting you break down that data by service, compartment, and tag. You can update and replace tags by using Tag Pipelines, or you can create new tags to align with your business logic. With OCI costs displayed in CCM alongside expenses from AWS, Azure, Google Cloud, and software-as-a-service (SaaS) providers such as OpenAI and Anthropic, your teams can eliminate context switching and focus on understanding total cost of ownership across environments.

In the following screenshot, you can see day-over-day cost trends and quickly spot changes across cloud and SaaS environments. You can also use FinOps Open Cost and Usage Specification (FOCUS) tags, which are automatically ingested and normalized across all providers, to drill into and compare cost data across your providers. For example, the screenshot shows the FOCUS tags `servicename` and `subaccountname`.

Uncover opportunities for cost savings

Cloud bills often mask inefficiencies like underutilized compute instances and oversized storage. CCM highlights these opportunities by combining OCI cost data with Datadog observability insights.

For example, in the following screenshot, the compute instances that have high costs but low CPU utilization might be good candidates for downsizing or deleting. With Datadog Notebooks, you can collaborate directly with engineering teams on these insights by reviewing instance performance alongside cost data to make rightsizing recommendations in one shared workspace. This visibility across teams helps you optimize costs without sacrificing the performance that your applications need.

Avoid cost overruns

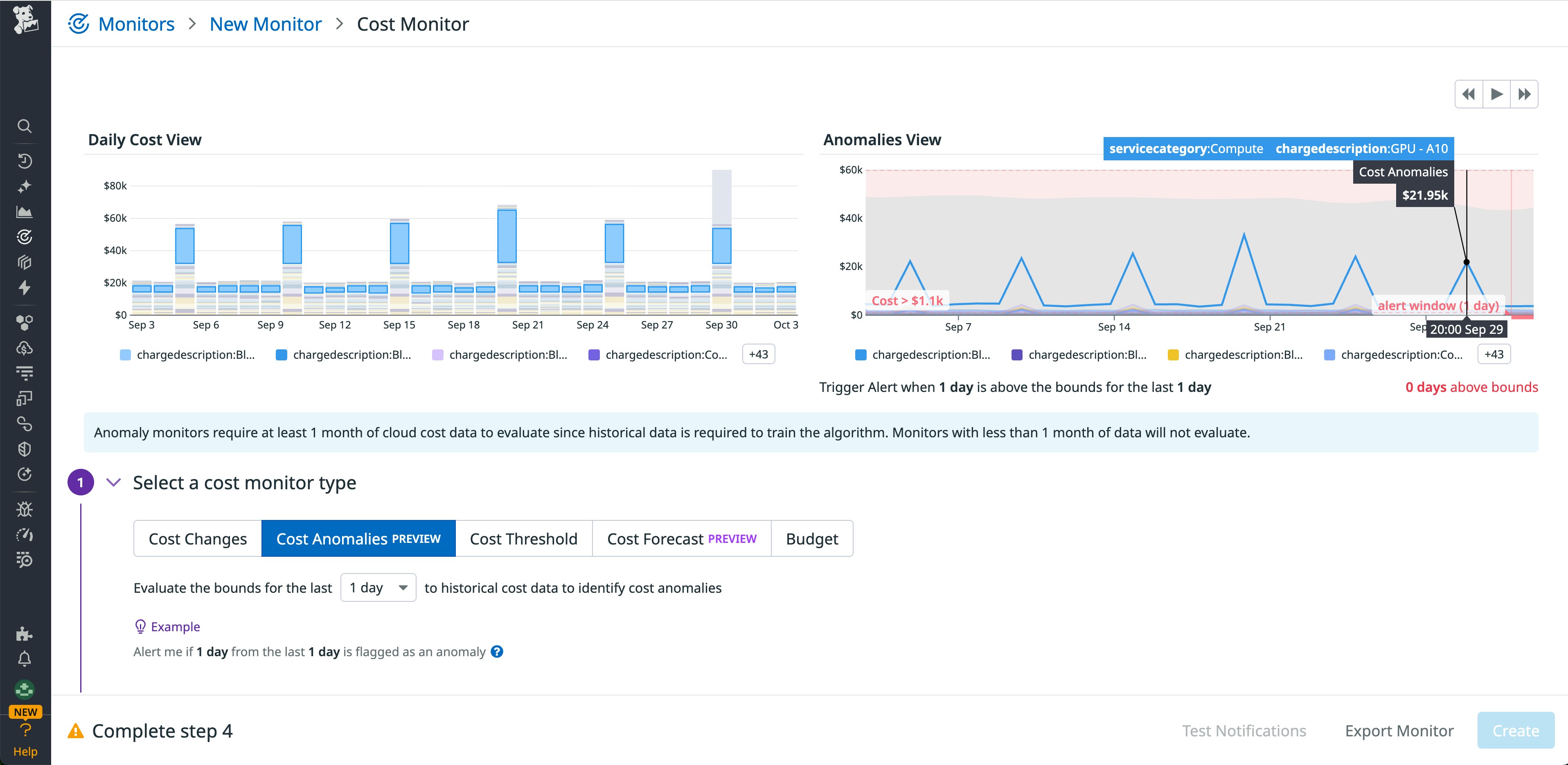

Waiting for end-of-month invoices makes it difficult to catch runaway spending before it becomes a problem. CCM brings anomaly monitors to OCI, alerting you to unusual cost increases in areas such as block storage and cross-region data transfer.

You can configure Datadog cost monitors to notify your team as soon as an anomaly is detected, via tools such as Slack and PagerDuty, giving you the chance to investigate issues before they escalate. Multi-provider budgets and forecasts help your teams track spending against targets and give you a clear picture of current and projected spending.

Control unpredictable AI costs

As organizations invest in AI, cost visibility has become just as important as performance monitoring. OCI provides a platform for large-scale AI training and inference, and these workloads can generate unpredictable costs. Causes of this unpredictability include GPUs that operate at high utilization, training runs that vary widely in duration, and inference jobs that scale rapidly to meet user demand.

CCM works together with Datadog LLM Observability and Datadog GPU Monitoring to give you a unified platform for AI cost and usage management. LLM Observability traces token usage and costs for models from providers such as OpenAI and Anthropic, and GPU Monitoring reveals utilization data, idle resources, and anomalies in your AI infrastructure. Together, these solutions help you allocate costs, empower your engineering teams with real-time insights, and optimize your AI investments.

Start managing your OCI costs today

With the addition of support for OCI, CCM expands your unified view of costs across clouds and services. Your engineers can use the data to understand the cost of provisioning their infrastructure and running their services, and they can make adjustments quickly to avoid unnecessary spending. To learn more, check out our CCM documentation.

If you’re new to Datadog, you can sign up for a 14-day free trial to get started.