Jordan Obey

Serverless platforms like Azure Functions and Azure Container Apps make it easier to scale your applications without managing infrastructure. But successful serverless apps require thoughtful planning. They must be designed to account for cold starts, unpredictable scaling behavior, and ephemeral compute lifecycles, all while ensuring secure data handling and end-to-end observability across highly distributed components.

The Azure Well-Architected Framework provides five pillars to guide this design process. In this post, we’ll explore how to build more robust serverless solutions by applying its principles to common architectural challenges.

We’ll also show you how Datadog supports each of these pillars by providing the insights needed to ensure that your serverless applications are secure, cost-efficient, and behaving as expected.

Reliability

In a serverless context, reliability is particularly important because you’re relying on several managed services that scale independently and operate on demand. If not properly isolated, outages or performance issues in any one component—whether it’s your API layer, messaging queue, or background function—can ripple through your application.

This section covers how to achieve reliability within your serverless workloads by ensuring high availability and managing function failures gracefully.

Ensure high availability

High availability refers to an application’s ability to remain operational even when part of the system fails. Azure Functions can be deployed across multiple regions, and several hosting plans support zone redundancy, which gives you a foundation for high availability. However, that foundation can be weakened when you integrate other components (such as databases, queues, or virtual networks), which may require manual configuration.

For instance, if you deploy Azure Functions inside a virtual network (VNet), your app is placed in a single availability zone within that VNet’s region by default. This means that if that zone experiences an outage, your Functions app may become unavailable, even if the rest of the region remains healthy. To mitigate this risk, you should provision subnets across multiple availability zones and configure your infrastructure accordingly to preserve high availability and prevent a single point of failure from impacting your entire application.

As another example, Azure also recommends enabling multi-region writes in Azure Cosmos DB to keep your database accessible if a region becomes unavailable.

Manage function failures gracefully

Even with high availability, some functions may still fail due to unexpected errors, unhandled exceptions, or service timeouts. You’ll need to account for these failures and ensure that services retry safely and consistently.

For instance, Azure Functions triggered by queues or event streams automatically retry when a failure occurs. This means they may be invoked multiple times for the same event, unintentionally creating duplicate emails or repeated charges. To avoid this issue, ensure that functions are idempotent, meaning they can run multiple times with the same input and still produce the same result. For example, a checkout function should verify whether a payment has already been processed before submitting it again.
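
The idempotency check described above can be sketched in a few lines of Python. The `process_checkout` handler, the `charge` callback, and the in-memory `processed` set are hypothetical stand-ins for illustration; a real function would record processed payment IDs in a durable store so that the check survives restarts and works across instances.

```python
from typing import Callable, Set


def process_checkout(
    payment_id: str,
    amount_cents: int,
    processed: Set[str],
    charge: Callable[[str, int], None],
) -> bool:
    """Charge a payment at most once per payment_id.

    Returns True if the charge was submitted, False if it was skipped
    because this payment_id had already been processed.
    """
    if payment_id in processed:
        # Duplicate delivery (for example, a queue retry): do nothing.
        return False
    charge(payment_id, amount_cents)
    # Record the ID only after the charge succeeds, so a failed attempt
    # can still be retried.
    processed.add(payment_id)
    return True
```

Note that the check and the write are not atomic in this sketch. With concurrent invocations, you would likely want the store itself to enforce uniqueness, for example through a conditional write.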

To handle persistent failures, you can configure a dead-letter queue (DLQ) to capture messages that have failed after a set number of retries. This prevents them from being retried indefinitely and allows for later analysis, remediation, or reprocessing. DLQs are especially critical when dealing with external systems or non-idempotent operations.
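
Managed brokers usually provide dead-lettering as a configuration option, so you rarely need to write it yourself. Still, the underlying behavior is easy to illustrate. The following Python sketch uses hypothetical names (`deliver_with_dead_letter`, `dead_letters`) and a plain list in place of a real queue:

```python
from typing import Callable, List, Tuple


def deliver_with_dead_letter(
    message: str,
    handler: Callable[[str], None],
    dead_letters: List[Tuple[str, str]],
    max_attempts: int = 3,
) -> bool:
    """Try the handler up to max_attempts times, then dead-letter the message.

    Returns True if the handler succeeded, False if the message was moved
    to dead_letters along with the text of the last error.
    """
    last_error = ""
    for _ in range(max_attempts):
        try:
            handler(message)
            return True
        except Exception as exc:  # sketch: treat every error as retryable
            last_error = str(exc)
    # Retries are exhausted: park the message for later inspection
    # instead of retrying it forever.
    dead_letters.append((message, last_error))
    return False
```

Storing the last error next to the message is a design choice that makes later analysis easier, since whoever inspects the DLQ can see why each message failed.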

For functions that trigger synchronously (such as HTTP APIs), you’ll need to build retry logic into the calling service. Be sure that your function returns appropriate status codes so consumers can distinguish between client-side and server-side errors. Azure API Management or Application Gateway can help route and shape this traffic, but the function’s runtime code should still handle errors explicitly.
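
As a rough illustration of caller-side retry logic, the Python sketch below retries only when the function returns a server-side (5xx) status code and backs off exponentially between attempts. The `call_with_retries` helper and its `send` callback are hypothetical; `send` stands in for whatever HTTP client call your service makes and is assumed to return the response status code.

```python
import time
from typing import Callable


def call_with_retries(
    send: Callable[[], int],
    max_attempts: int = 4,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call send() and retry only on server-side (5xx) status codes.

    Client-side errors (4xx) are returned immediately because repeating
    the same request would not fix them. Returns the last status code seen.
    """
    status = 0
    for attempt in range(max_attempts):
        status = send()
        if status < 500:
            return status
        if attempt < max_attempts - 1:
            # Exponential backoff: 0.5s, 1s, 2s, ...
            sleep(base_delay_s * (2 ** attempt))
    return status
```

This is why accurate status codes matter: if the function reported a validation failure as a 500, a caller like this one would retry a request that can never succeed. A fuller implementation might also add jitter and treat throttling responses such as 429 as retryable.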

Performance

Azure’s serverless platforms are designed to scale quickly and handle bursts of traffic, but that doesn’t automatically guarantee fast response times. Cold starts, inefficient logic, and resource misconfiguration can still slow down your workloads, especially when usage is unpredictable and prone to spikes. The performance pillar focuses on using computing resources efficiently to deliver responsive user experiences at any scale.

Azure provides several tools to help serverless workloads perform well under pressure, including autoscaling, caching, and message queues. In the sections below, we’ll cover how to get the most out of these features and ensure that your Azure-hosted serverless workloads can scale and respond quickly to requests.

Minimize cold starts

Cold starts occur when a function or container has to initialize from scratch before responding to a request. This added startup time can significantly increase response latency, which is particularly detrimental for latency-sensitive services like login, search, or checkout APIs.

If you are using Azure Container Apps, you can reduce cold start latency by configuring a minimum replica count when you set scaling rules. This ensures that some containers remain warm and ready to respond immediately, even during low-traffic periods. For example, if you run an ecommerce API that sees unpredictable spikes during a sale or marketing campaign, configuring a minimum of one or two pre-warmed replicas helps absorb sudden traffic without forcing the platform to start from zero. A YAML-based configuration might include a scale block that looks like the following:

```yaml
scale:
  minReplicas: 2
  maxReplicas: 10
  rules:
    - name: http-rule
      http:
        metadata:
          concurrentRequests: "50"
```

The scale block defines how your container app adjusts its number of replicas based on incoming traffic and other conditions. In the scale block above, minReplicas: 2 keeps two containers running at all times to reduce cold start latency, while maxReplicas: 10 allows the app to scale up when needed. The http-rule tells Azure to add more replicas when any instance handles more than 50 concurrent HTTP requests.
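
To make the interaction between the minReplicas, maxReplicas, and concurrentRequests settings concrete, the following Python sketch estimates a replica count from the total number of concurrent requests. It is a simplified model for building intuition, not the platform’s actual scaling algorithm, and the `desired_replicas` function is hypothetical.

```python
import math


def desired_replicas(
    concurrent_requests: int,
    per_replica_target: int = 50,
    min_replicas: int = 2,
    max_replicas: int = 10,
) -> int:
    """Estimate the replicas needed to keep each one at or below the target."""
    needed = math.ceil(concurrent_requests / per_replica_target)
    # Clamp to the configured floor and ceiling.
    return max(min_replicas, min(needed, max_replicas))
```

Under this model, an idle app stays at two replicas, 120 concurrent requests call for three, and anything above 500 is capped at ten.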

If you’re using Azure Functions instead of Azure Container Apps, you can mitigate cold starts by running your functions on the Premium plan, which offers always ready and prewarmed instances, or on the Flex Consumption plan, which also supports always ready instances. The Premium plan is well suited for APIs and interactive endpoints that require consistent performance. For example, a customer authentication service that runs on the Premium plan can avoid the latency spikes that might otherwise frustrate users logging in during peak hours. You may also consider running workloads with strict latency requirements in Azure Container Apps or long-running containers, where a nonzero minimum replica count lets you avoid cold starts and fine-tune performance at the runtime level.

Process functions efficiently

In serverless environments, where you’re billed based on execution time and resource consumption, streamlining your functions has both performance and cost benefits. Keep serverless functions efficient by scoping each one to a specific task. Scoping your functions to a single task simplifies debugging, improves cold start times, and makes scaling more predictable. You can also use monitoring tools like Datadog to get insight into metrics like memory usage, execution duration, concurrent invocations, and error rates, to help you ensure that each function is right-sized and tuned for its workload.

For example, it often makes sense to group related tasks—like validating input and saving data to a database—into a single function because they belong to the same logical unit of work. An action like sending a confirmation email, however, can be separated into its own function and triggered asynchronously. This keeps the critical path simple and reliable while allowing side effects to scale and fail independently.

Use batching and caching when possible

Caching and batching are effective ways to reduce latency and improve the performance of serverless applications. Caching allows your functions to quickly retrieve frequently used data (such as product details, user profiles, or configuration settings) without needing to repeatedly query a backend service. You can use Azure Cache for Redis for durable, distributed caching, or in-memory caching for functions running on the Premium plan with always ready instances. This helps reduce the impact of cold starts and offloads pressure from databases and APIs.
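
For the in-memory option, a small time-to-live (TTL) cache is often enough. The Python sketch below is one possible shape for it; the `TTLCache` class and its `get_or_load` method are hypothetical names, and the injectable `clock` exists only to make the expiry behavior easy to test.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_s seconds."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self._ttl_s = ttl_s
        self._clock = clock
        # Maps key -> (time the value was stored, value).
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, load: Callable[[str], Any]) -> Any:
        """Return the cached value, calling load(key) on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl_s:
            return entry[1]
        value = load(key)
        self._entries[key] = (now, value)
        return value
```

Keep in mind that a cache like this lives only as long as the instance that holds it, and each instance keeps its own copy. Data that must stay consistent across instances is a better fit for a shared cache.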

Batching improves efficiency by grouping multiple data points or messages into a single function execution. For example, instead of writing each event or log entry individually, you can collect them into batches and send them to Cosmos DB or an external service in one operation. This reduces invocation overhead, lowers compute time, and helps you stay within throughput limits for downstream systems. Together, caching and batching help your application scale more smoothly and cost-effectively under load.
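
A minimal batching helper might look like the following Python sketch. The `batches` function is a hypothetical name, and the right batch size depends on the limits of whatever downstream system receives the data.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of items with at most batch_size elements."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])
```

A function could then loop over `batches(events, 100)` and issue one write per batch instead of one write per event.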

Security

Serverless architectures rely on a distributed system of managed services, each introducing its own configuration and potential exposure. Because serverless components often communicate over event-driven or asynchronous channels, the attack surface of serverless workloads can be difficult to define and even more challenging to monitor. For more information about security best practices for serverless technologies, see the Open Worldwide Application Security Project’s (OWASP) Top 10 recommendations.

Apply least privilege access with RBAC

Azure Role-Based Access Control (RBAC) enables you to define exactly which services and users can perform specific actions. Avoid granting broad default permissions to your functions or containerized workloads. Instead, assign roles that limit access to only the resources and operations that are required.

For example, a function that writes to a single Cosmos DB container should not be granted full database permissions. Instead, scope its identity to only that specific container and operation by using a managed identity and a custom role definition. This limits the blast radius of a compromised function and helps enforce clear boundaries between components.

Authenticate and authorize every interaction

Each service-to-service call within your serverless application should be authenticated and authorized. Use Azure Managed Identities to enable secure authentication between functions, queues, and databases without managing credentials directly. When combined with RBAC, this allows for seamless yet secure internal communication.

For instance, an Azure Function that processes orders and writes to Cosmos DB can authenticate using its system-assigned managed identity. Cosmos DB can then validate the request using Microsoft Entra ID (formerly known as Azure Active Directory), eliminating the need for hardcoded credentials.

Encrypt data and secure network access

Protect sensitive data both at rest and in transit. Azure services like Blob Storage, Cosmos DB, and Service Bus support built-in encryption using Microsoft-managed or customer-managed keys. Enable these features consistently across your application’s data layer. In addition, restrict public exposure wherever possible. Use private endpoints to isolate traffic between Azure services within a virtual network. For containerized workloads in Azure Container Apps, you can configure environment-level network restrictions to ensure that only internal services can trigger certain APIs or send messages to a queue.

Cost optimization

Serverless environments offer a pay-as-you-go model, which is great for scaling workloads efficiently, but only if your usage is intentional and monitored. In Azure, misconfigured services, overprovisioned resources, or overlooked usage patterns can lead to unexpected costs.

Optimizing cost means selecting the right plans, scaling with precision, minimizing idle time, and using automation to right-size workloads. It also means aligning spending with business outcomes: not all savings are worth the trade-off in performance or reliability.

Azure’s cost analysis tools, autoscaling features, and workload-specific recommendations can help keep your app high-performing and affordable. By continuously measuring and adjusting usage patterns, you can ensure you’re spending wisely while delivering a smooth experience.

Keep functions lean

Function execution time directly impacts cost in a pay-as-you-go model. Slow functions not only delay user responses but also cost more to run. We discussed optimizing function performance earlier in this post, but a good rule of thumb to keep in mind is that reducing latency reduces cost.

To spot optimization opportunities, take advantage of profiling and tracing tools. For example, Azure Application Insights and distributed tracing with OpenTelemetry can help identify where functions are spending the most time—whether it’s on cold starts, external dependencies, or inefficient code paths.

You might notice, for instance, that a function’s execution time drops significantly after switching from a database lookup to a cache query. That performance gain directly lowers compute charges, especially at scale.
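
The relationship between duration and cost can be worked through with simple arithmetic. The Python sketch below assumes an execution-based billing model with a compute charge per GB-second and a charge per million executions. The `estimate_monthly_cost` function is hypothetical, the rates are parameters you supply, and the model ignores free grants, minimum billing increments, and other plan-specific details.

```python
def estimate_monthly_cost(
    executions: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_executions: float,
) -> float:
    """Estimate execution-based cost: compute (GB-seconds) plus request charges."""
    gb_seconds = executions * avg_duration_s * memory_gb
    compute = gb_seconds * price_per_gb_second
    requests = (executions / 1_000_000) * price_per_million_executions
    return compute + requests
```

Because the compute term scales linearly with `avg_duration_s`, halving a function’s average duration halves that part of the bill, while the per-execution charge stays the same.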

Avoid idle execution

Idle time can be a hidden cost in serverless apps, particularly in long-running workflows. If a function is waiting on I/O, holding a connection, or paused during a workflow, you’re still billed for the time it’s running.

Refactor long-running operations into shorter, event-driven steps, or use Durable Functions to offload state management. Durable Functions pause without consuming compute, making them a cost-effective choice for background tasks and multi-step workflows.

Choose the right pricing plan

Azure offers several hosting plans for functions. The Consumption plan is ideal for spiky or low-volume workloads since it charges only for the executions you run and the resources they consume. The Premium plan is better for high-throughput or latency-sensitive APIs, as it supports features like VNet integration, always ready instances, and prewarmed instances. However, the Premium plan comes at a higher baseline cost since you’re billed for provisioned instances even when idle.

Finally, the App Service plan runs Azure Functions on dedicated infrastructure shared with App Services. It offers predictable pricing with no per-execution charges but lacks the event-driven scaling of the other plans and can lead to underutilization if traffic is inconsistent. Choosing the right plan depends on your workload’s traffic patterns, latency requirements, and cost sensitivity.

Autoscale with intent

Autoscaling is one of the main advantages of serverless, but it needs to be configured thoughtfully. Set sensible minimum and maximum instance counts for Azure Container Apps to avoid unnecessary idle replicas.

For Cosmos DB, monitor request unit (RU/s) usage and enable autoscale throughput so that you’re not paying for capacity you don’t use. For example, if your Cosmos DB container serves traffic that spikes during lunch hours and drops in the evening, autoscale will automatically increase and decrease throughput limits, keeping performance stable while avoiding waste.

Operational excellence

Operational excellence means having the visibility you need in order to detect problems quickly, resolve them efficiently, and continuously improve how your system runs. Because serverless environments are dynamic and event-driven, traditional uptime monitoring isn’t enough. You need telemetry across metrics, traces, logs, and deployments to gain insight into application health and performance.

The Azure Well-Architected Framework encourages teams to build observability into every layer of their applications. This includes tracking error rates, cold starts, queue delays, and external dependencies. Datadog supports this approach by providing deep visibility into Azure serverless environments through integrated monitoring, tracing, and security tools.

Monitor workloads with Datadog’s Azure integration

Datadog’s Azure integration collects and visualizes metrics, traces, and logs from Azure Functions, Azure Container Apps, and other supporting services, providing a complete overview of your entire Azure infrastructure.

With this integration, you can use out-of-the-box dashboards to monitor key metrics like cold start durations, function error rates, queue backlogs, and API latency. You can also set alerts that notify teams when an anomaly is detected or a specified threshold is crossed. For instance, if your checkout API’s latency starts to climb under increased load, you can be notified before it affects the user experience.

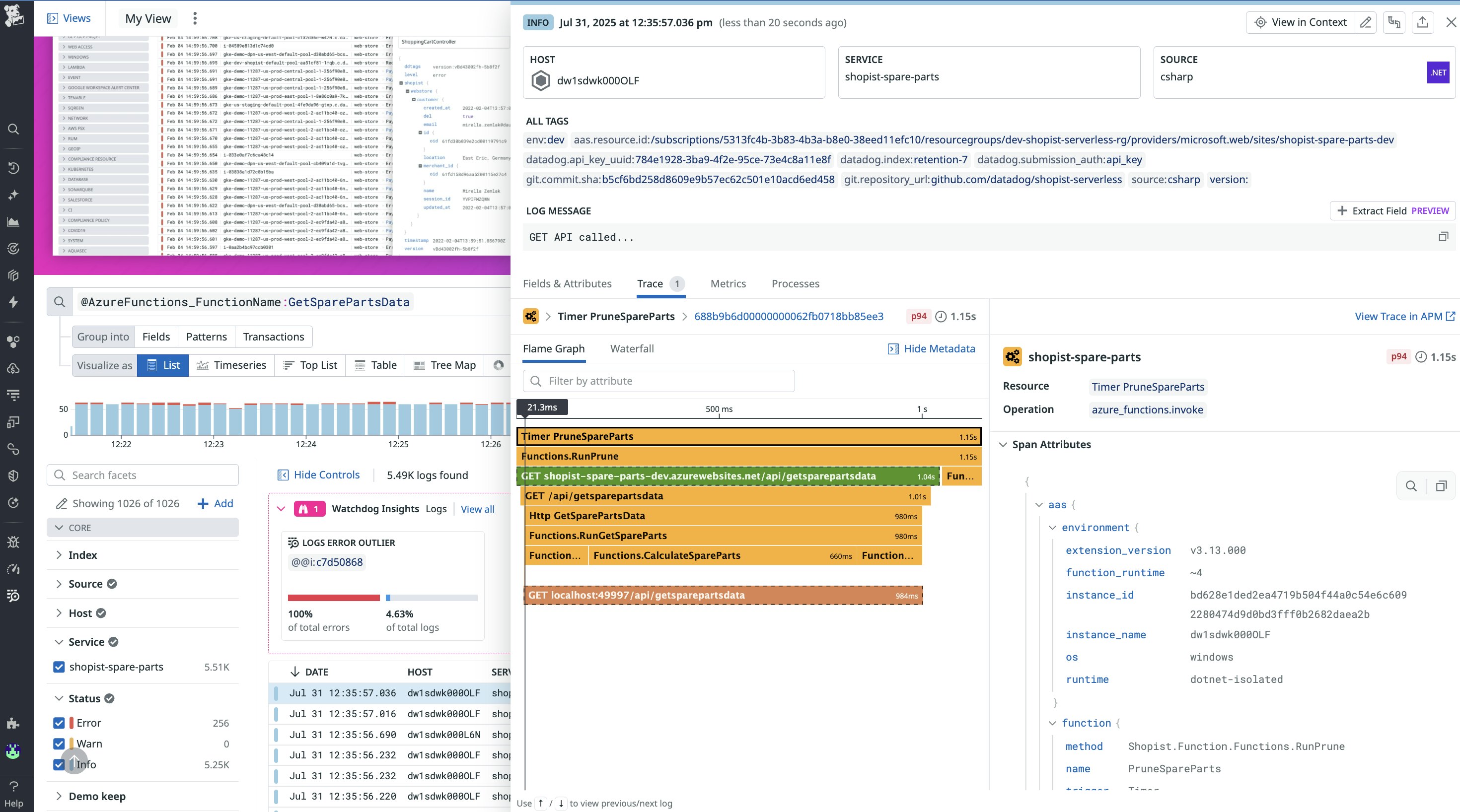

End-to-end tracing with Datadog APM

Datadog APM supports native distributed tracing for Azure Functions and Azure Container Apps, and automatically connects those traces to other Azure-managed services in your environment—such as Cosmos DB, Service Bus, and API Management. This gives you a complete picture of each request as it propagates across your serverless application, so you can identify slow components, errors, or retries at a glance.

Datadog also enriches trace spans with metadata from Azure Function invocations, including cold start indicators, memory usage, and trigger context. You can search, filter, and group spans by function name, request ID, or custom tags, making it easier to understand how individual services contribute to performance or failure. Whether you’re debugging a failed checkout flow or tracing queue latency during a traffic spike, Datadog helps you pinpoint the issue and take action quickly.

Tie together logs, metrics, and traces for faster troubleshooting

Datadog automatically links metrics, logs, and distributed traces from across your system. When a function fails, you can trace the request that triggered it, see where delays occurred, and view logs for that specific invocation—all within Datadog.

For example, if a checkout failure occurs in an Azure Function, Datadog can show you the full trace, including the upstream queue delays, memory usage, or Cosmos DB throttling that led to the failure. This correlation accelerates root cause analysis and reduces mean time to resolution (MTTR).

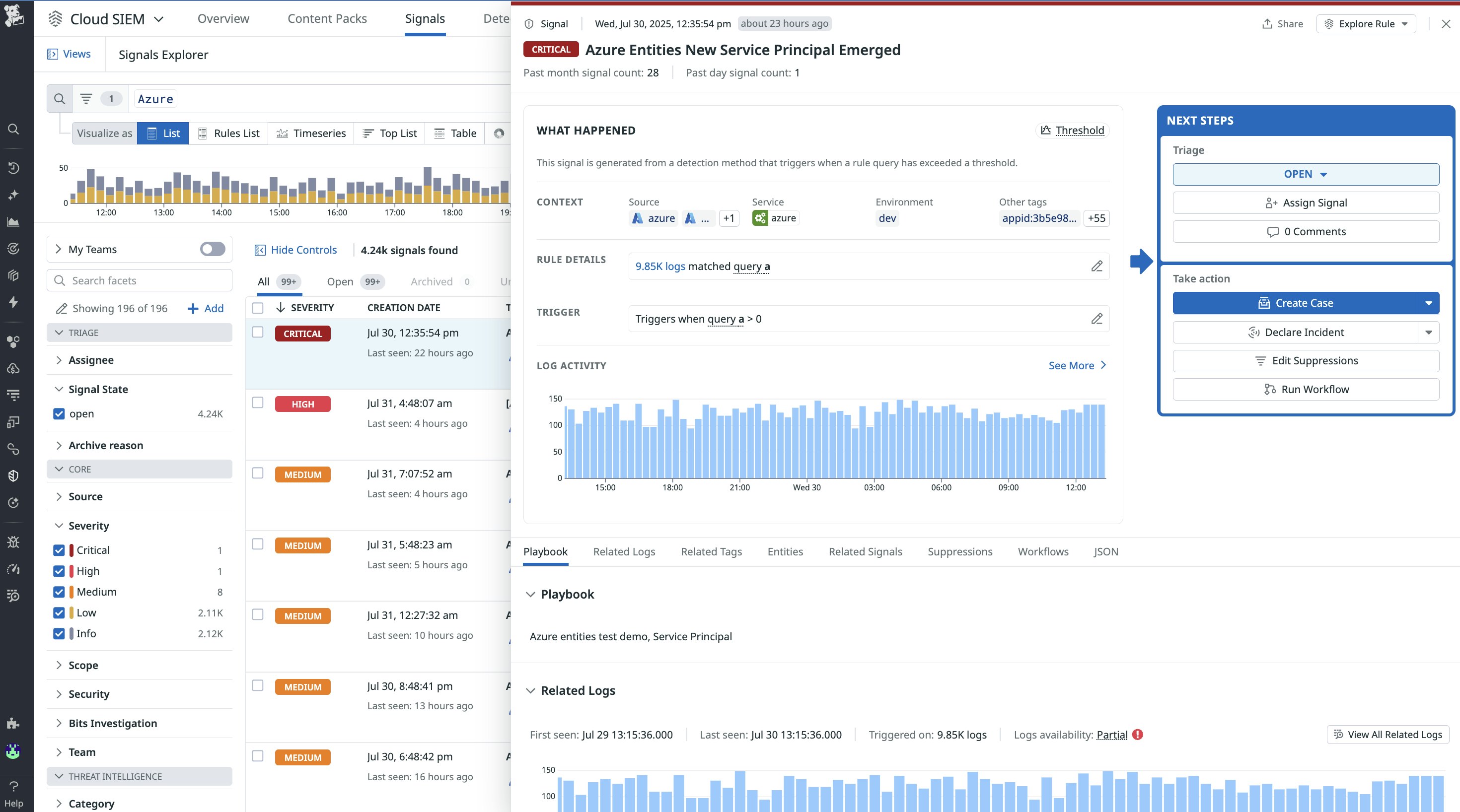

Securing your Azure environment with Datadog Cloud SIEM

Datadog Cloud SIEM (Security Information and Event Management) enhances your Azure environment’s security posture by applying out-of-the-box detection rules to all ingested logs and events. These rules flag potential threats such as unauthorized access or communication with malicious IP addresses.

Datadog automatically surfaces suspicious activity as Security Signals, which are visible in the Security Signals Explorer. From there, teams can quickly triage and investigate threats using correlated log data and built-in context.

By combining telemetry and security in a single platform, Datadog gives you a unified, contextualized view of your Azure ecosystem, helping you achieve operational excellence with confidence and agility.

Supporting all five pillars with Datadog

Building serverless applications on Azure requires careful design decisions across reliability, performance, security, cost, and operations. The Azure Well-Architected Framework provides a strong foundation for making those decisions, and with the right tools, you can put those principles into practice. By decoupling services, managing cold starts, enforcing least-privilege access, and continuously monitoring your stack, you can deliver serverless systems that are both scalable and resilient.

Datadog gives you the insight you need to implement these best practices confidently. From real-time performance metrics and traces to CI/CD insights and cost-aware dashboards, Datadog brings full visibility to every part of your Azure serverless architecture. To see how Datadog can help you build and operate well-architected serverless applications, start a 14-day free trial.