Exploitable vulnerabilities are prevalent in web applications, particularly those that use Java

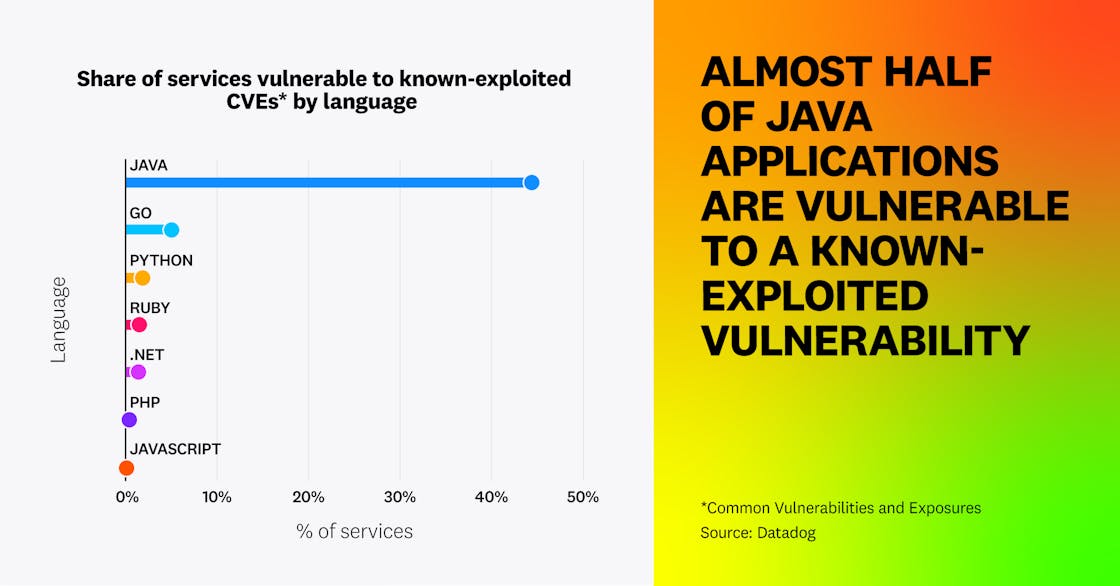

By analyzing our dataset of applications, we found that 15 percent of services are vulnerable to known-exploited vulnerabilities, affecting 30 percent of organizations. Vulnerabilities are particularly prevalent among Java services, 44 percent of which contain a known-exploited vulnerability. The next highest is Go at 5 percent, with the average for all non-Java services at 2 percent.

In fact, even when we look only at vulnerabilities known to be highly impactful, such as remote code execution (RCE) vulnerabilities like Log4Shell and Spring4Shell and other routinely exploited attack paths, 14 percent of Java services still contain at least one.

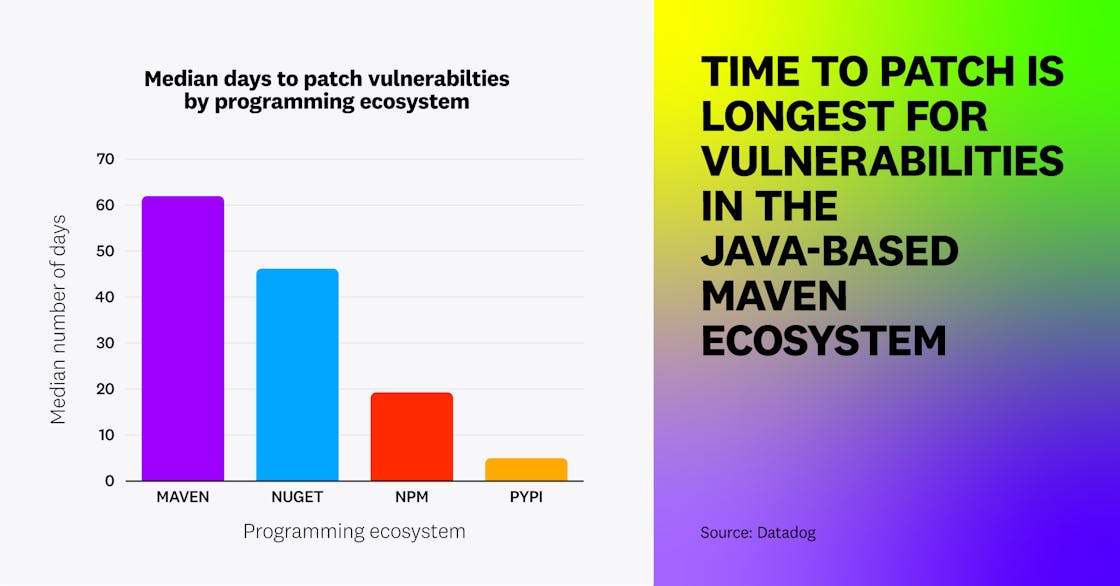

In addition to being more likely to contain high-impact vulnerabilities, Java applications are also patched more slowly than those from other programming ecosystems. We found that applications from the Java-based Apache Maven ecosystem had a median time to fix library vulnerabilities of 62 days, compared to 46 days for those in the .NET-based NuGet ecosystem and 19 days for applications built using npm packages, which are JavaScript-based.

Leaving known vulnerabilities unpatched for prolonged periods is particularly risky because web applications are often exposed to the internet. Attackers use automated scanners to continuously scan the internet for high-impact, easy-to-exploit vulnerabilities, and recent research shows that vulnerabilities are often exploited just hours after they are initially disclosed.

Our research confirms this pattern of attacker behavior. We found that 88 percent of organizations received untargeted malicious HTTP requests, such as to /backup.sql, scanning for potentially exposed sensitive files or API routes. In addition, 65 percent of cases where a specific attacker attempted to exploit a specific URL were untargeted—i.e., the same attacker had tried to exploit the same URL in at least one other organization that Datadog monitors.
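The "untargeted" definition above (the same attacker trying the same URL in more than one organization) can be sketched in a few lines. This is a minimal illustration, not Datadog's detection logic: the `(attacker, url, org)` tuple layout, the function name `untargeted_share`, and the sample data are assumptions made for the example.

```python
from collections import defaultdict

def untargeted_share(attempts):
    """Return the fraction of (attacker, url, org) cases that are untargeted.

    A case counts as untargeted when the same attacker tried the same URL
    in at least one other organization. `attempts` is an iterable of
    (attacker, url, org) tuples; this layout is hypothetical.
    """
    attempts = list(attempts)
    orgs_by_pair = defaultdict(set)
    for attacker, url, org in attempts:
        orgs_by_pair[(attacker, url)].add(org)

    # Deduplicate repeated requests so each case is counted once.
    cases = set(attempts)
    if not cases:
        return 0.0
    untargeted = sum(
        1 for attacker, url, _ in cases if len(orgs_by_pair[(attacker, url)]) >= 2
    )
    return untargeted / len(cases)

# Invented sample data using documentation-reserved IP ranges.
sample = [
    ("203.0.113.7", "/backup.sql", "org-a"),
    ("203.0.113.7", "/backup.sql", "org-b"),
    ("198.51.100.4", "/api/v1/export", "org-a"),
    ("203.0.113.7", "/backup.sql", "org-a"),  # repeat of the first case
]
print(untargeted_share(sample))
```

In this sample, two of the three distinct cases involve an attacker who probed `/backup.sql` in both organizations, so they would be classed as untargeted.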

Given that attackers frequently operate not by targeting specific applications but by broadly scanning the internet for easily exploitable vulnerabilities, keeping library dependencies updated with the latest patches is critical, as any known vulnerability represents a serious risk. In addition, due to the high prevalence of known-exploitable RCE and other vulnerabilities in Java libraries—and the higher likelihood that these vulnerabilities remain unpatched—teams should pay particular attention to remediating vulnerabilities in Java applications.

Attackers continue to target the software supply chain

In addition to searching web applications for known vulnerabilities, attackers also commonly attempt to trick developers into downloading and deploying malicious packages from open source package registries. Throughout 2024, Datadog identified thousands of malicious PyPI and npm packages in the wild. Some were malicious from the outset and attempted to mimic a legitimate package (for instance, passports-js mimicking the legitimate passport library), a technique known as typosquatting. Others were active takeovers of popular, legitimate dependencies (such as Ultralytics, Solana web3.js, and lottie-player). These techniques are used by both state-sponsored actors and ordinary cybercriminals.

Attackers exploit the fact that vetting third-party dependencies is challenging for developers, especially if the package’s metadata and functionality appear to be legitimate.

To mitigate the growing prevalence of malicious packages in open source ecosystems, we hope package manager attestations—such as those now available for npm and PyPI—and increased maintainer account security will become more widespread and decrease the risk that legitimate packages will be taken over by bad actors. In addition, open source tools such as GuardDog and Supply-Chain Firewall can help users identify malicious code in software packages before they get installed.
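As a rough illustration of why typosquatting is detectable in principle, the sketch below flags package names that are suspiciously similar to, but not identical to, well-known ones. It is not how GuardDog or Supply-Chain Firewall work. The allowlist, the 0.8 threshold, and the function names are assumptions chosen for the example, and it uses only Python's standard `difflib` module.

```python
from difflib import SequenceMatcher

def normalize(name):
    """Lowercase and drop separators so 'Passports-JS' and 'passportsjs' compare equal."""
    return name.lower().replace("-", "").replace("_", "").replace(".", "")

def lookalikes(candidate, known_packages, threshold=0.8):
    """Return known packages that `candidate` closely resembles without matching exactly.

    `threshold` is an arbitrary illustrative cutoff, not a recommended value.
    """
    cand = normalize(candidate)
    hits = []
    for known in sorted(known_packages):
        ref = normalize(known)
        if cand == ref:
            return []  # exact match: this is the legitimate package itself
        if SequenceMatcher(None, cand, ref).ratio() >= threshold:
            hits.append(known)
    return hits

# Hypothetical allowlist of popular package names.
popular = {"passport", "requests"}
print(lookalikes("passports-js", popular))
print(lookalikes("passport", popular))
```

Name similarity alone produces false positives and misses account takeovers entirely, so a check like this can only complement code-level analysis and registry attestations.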

Usage of long-lived credentials in CI/CD pipelines is still too high, but slowly decreasing

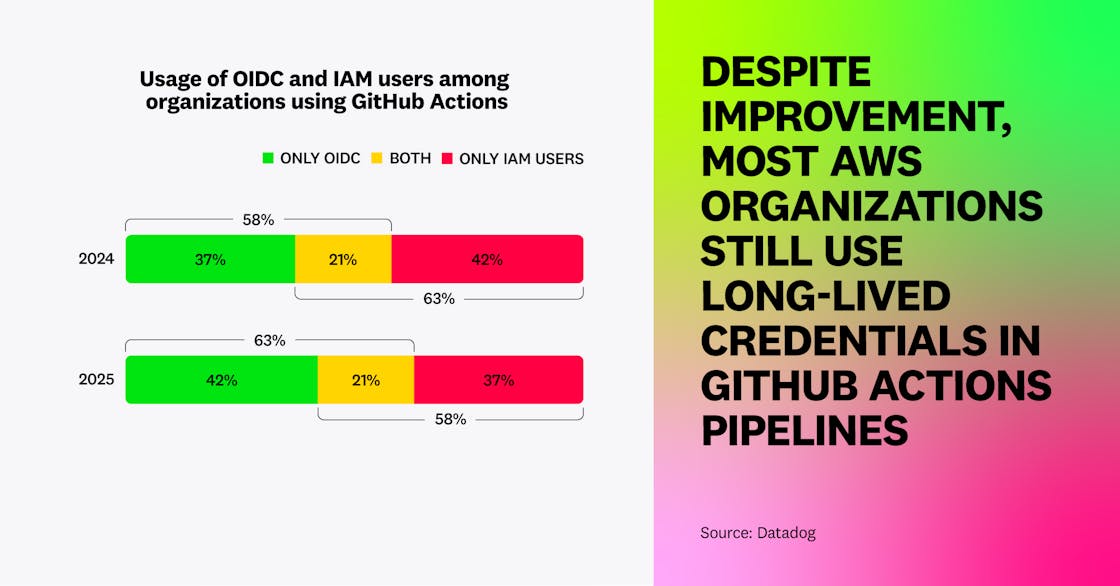

In addition to vulnerable code and malicious open source packages, the use of long-lived credentials that don’t expire is a common cause of cloud data breaches, and the practice is now widely discouraged. Using long-lived credentials in CI/CD pipelines can be particularly risky, because their permissions are often highly privileged.

One scenario where long-lived credentials often make their way into CI/CD is when organizations use GitHub Actions to deploy applications to AWS, as these Actions can be configured with either short-lived credentials through OpenID Connect (OIDC) or AWS IAM user credentials, a type of long-term access key. We found that 37 percent of customers who send CloudTrail logs to Datadog are using IAM users in their GitHub Actions. We strongly recommend not relying on IAM users in this scenario but instead using OIDC.
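One way to audit which of the two patterns a workflow uses is to look at the inputs passed to the `aws-actions/configure-aws-credentials` action: `aws-access-key-id` indicates static IAM user keys, while `role-to-assume` combined with the `id-token: write` permission indicates OIDC. The sketch below is a simple text-based heuristic under those assumptions. The function name, the labels it returns, the role ARN, and the sample workflows are illustrative, and a real audit would parse the YAML properly.

```python
import re

# Inputs of aws-actions/configure-aws-credentials and the OIDC token permission.
STATIC_KEY = re.compile(r"^\s*aws-access-key-id\s*:", re.MULTILINE)
ROLE = re.compile(r"^\s*role-to-assume\s*:", re.MULTILINE)
ID_TOKEN = re.compile(r"^\s*id-token\s*:\s*write\b", re.MULTILINE)

def classify_workflow(text):
    """Label a workflow file as 'long-lived', 'oidc', or 'unknown' (hypothetical labels)."""
    # Static keys are checked first: a role assumed with IAM user keys
    # still depends on a long-lived credential.
    if STATIC_KEY.search(text):
        return "long-lived"
    if ROLE.search(text) and ID_TOKEN.search(text):
        return "oidc"
    return "unknown"

OIDC_WORKFLOW = """
permissions:
  id-token: write
  contents: read
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::123456789012:role/example-deploy
          aws-region: us-east-1
"""

STATIC_WORKFLOW = """
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1
"""

print(classify_workflow(OIDC_WORKFLOW))
print(classify_workflow(STATIC_WORKFLOW))
```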

While 63 percent of organizations using AWS and GitHub Actions leverage OIDC (up from 58 percent in 2024), 58 percent of organizations also leverage long-lived credentials from IAM users (down from 63 percent in 2024).

This shows that long-lived credentials, although a well-understood source of risk, remain a major source of technical debt, as they are typically challenging to migrate to more modern identity solutions.

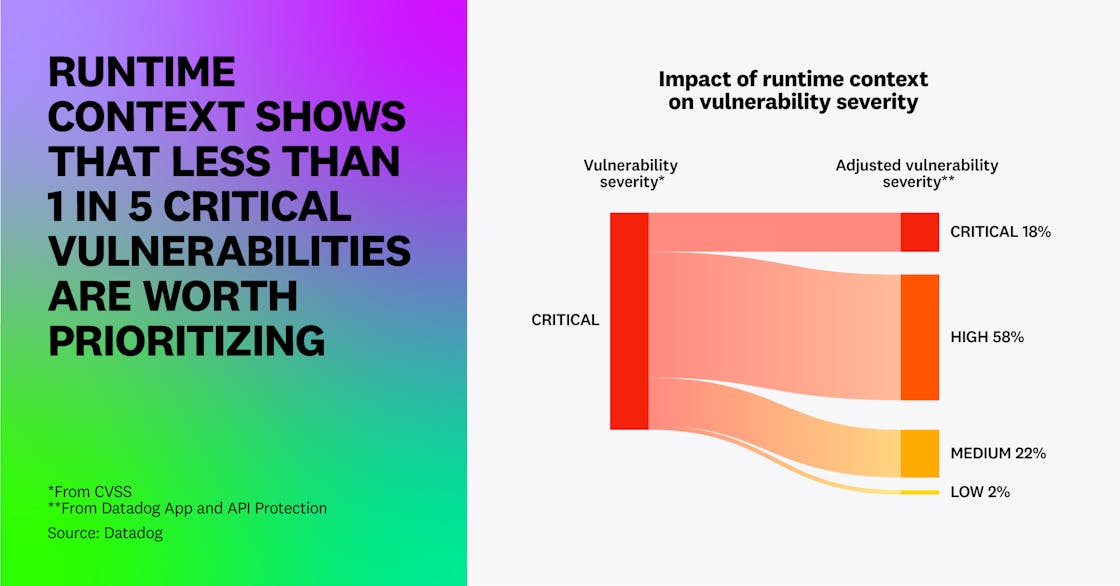

Only a fraction of critical vulnerabilities are truly worth prioritizing

The high prevalence of library vulnerabilities, malicious open source packages, identity misconfigurations, and other issues creates a noisy environment for defenders. In this context, security teams need to understand how to prioritize issues and determine which to remediate first. These teams often make decisions based on the severity of a vulnerability—typically measured by its CVSS base score, which is published along with the metadata for a known vulnerability. Vulnerabilities considered high-severity by CVSS score are only increasing: We found that the average service has 13.5 vulnerabilities with a high or critical CVSS severity score, up from 11.9 in 2024.

However, in order to truly gauge a vulnerability’s severity, it’s essential to have the appropriate runtime context—for example, whether the vulnerability is running in a production environment, or if the application in which the vulnerability is found is exposed to the internet. CVSS does not take these factors into account, which leads to excessive noise for defenders.

To reduce this noise, we developed a prioritization algorithm that factors in runtime context. After applying this algorithm, we found the average number of high or critical vulnerabilities per application drops from 12.2 to 7.5.
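The report does not publish the algorithm itself, so the following is only a hypothetical sketch of what context-aware prioritization can look like: start from the CVSS severity and lower it one level for each mitigating runtime factor. The two factors come from the examples above (production environment, internet exposure), while the one-level-per-factor rule, the class, and the field names are assumptions.

```python
from dataclasses import dataclass

LEVELS = ["low", "medium", "high", "critical"]

@dataclass
class Finding:
    identifier: str         # placeholder ID, not a real CVE
    cvss_severity: str      # one of LEVELS
    in_production: bool     # is the affected service running in production?
    internet_exposed: bool  # is the affected service reachable from the internet?

def adjusted_severity(finding):
    """Lower the CVSS severity one level per mitigating factor (hypothetical rule)."""
    index = LEVELS.index(finding.cvss_severity)
    if not finding.in_production:
        index -= 1
    if not finding.internet_exposed:
        index -= 1
    return LEVELS[max(index, 0)]

findings = [
    Finding("EXAMPLE-1", "critical", in_production=True, internet_exposed=True),
    Finding("EXAMPLE-2", "critical", in_production=True, internet_exposed=False),
    Finding("EXAMPLE-3", "critical", in_production=False, internet_exposed=False),
]
for f in findings:
    print(f.identifier, f.cvss_severity, "->", adjusted_severity(f))
```

Only the first finding stays critical under these rules, which mirrors the general effect described here: many issues that score as critical on CVSS alone rank lower once the runtime context is considered.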

The reduction in noise is even more significant when looking only at critical vulnerabilities—i.e., those that require the most urgent prioritization. Just 18 percent of vulnerabilities with a critical CVSS score—less than one in five—are still considered critical after applying our algorithm.

This dramatic reduction in severe vulnerabilities represents hours saved each week, month, and quarter—and if defenders spend less time triaging issues, they can reduce their organizations’ attack surface all the faster. Focusing on easily exploitable vulnerabilities that are running in production environments for publicly exposed applications will yield the greatest real-world improvements in security posture.

“Without context, severity is just noise. True security comes not from patching everything, but from knowing what actually matters.”

Principal Software Engineer at Sanofi

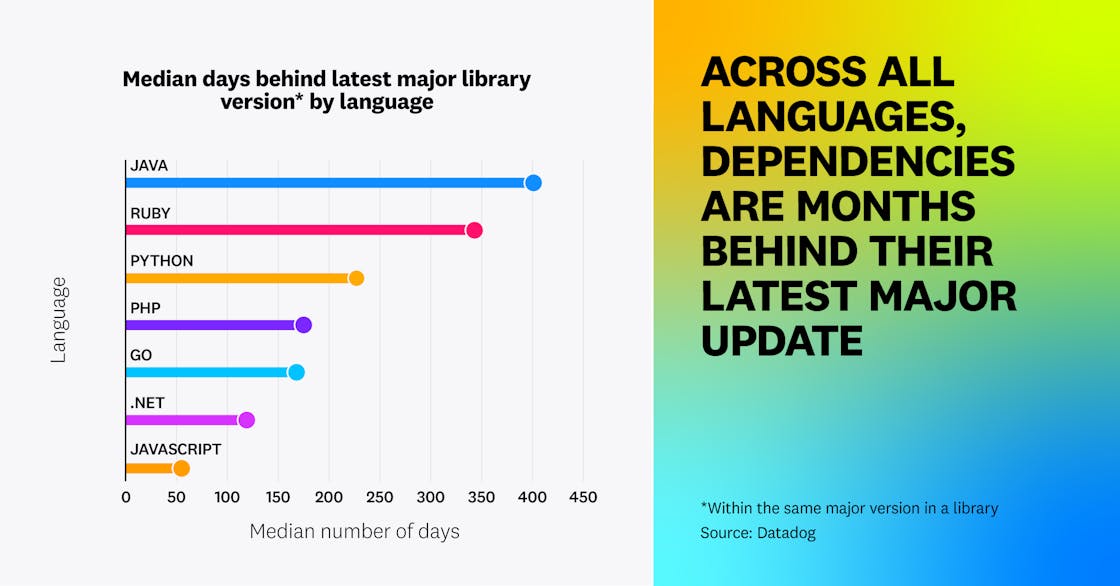

Keeping libraries up to date is a major challenge for developers

In our research, we found that the median dependency is 215 days behind its latest major version. This reinforces the idea that engineers across DevSecOps functions are stretched and need to reduce noise, as teams struggle to keep library dependencies in their code up to date. Out-of-date libraries can increase the likelihood that a dependency contains unpatched, exploitable vulnerabilities.

Dependencies tend to be out of date in every ecosystem, though the lag is larger in some than in others. For example, while the median JVM dependency is behind by 401 days, the median for Go is 168 days.

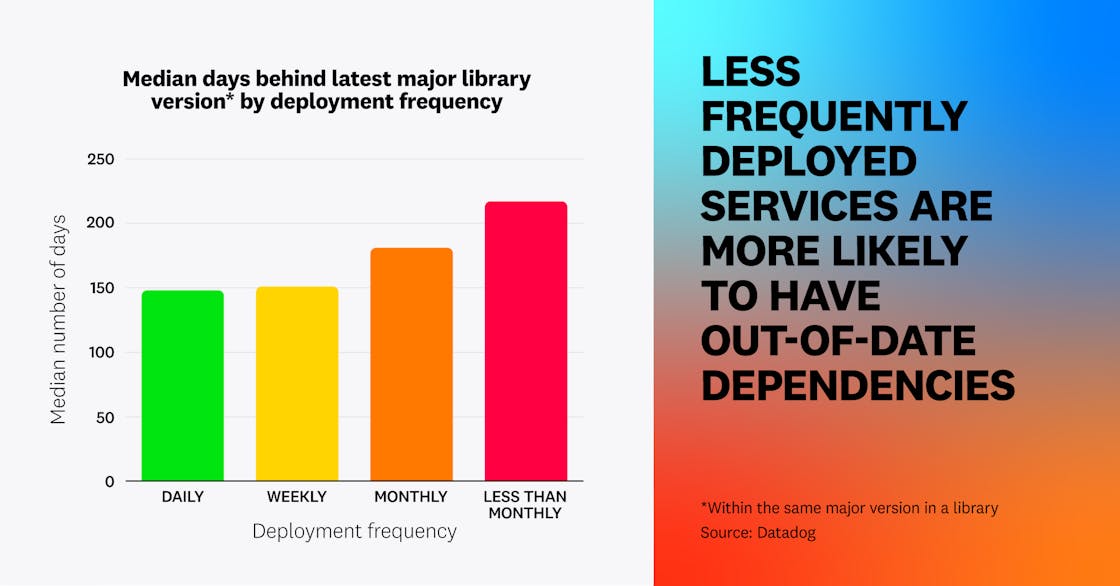

Among all services, those that are less frequently deployed are more likely to be using out-of-date libraries. We found that dependencies in services that are deployed less than once per month are 47 percent more outdated than those deployed daily, with a median of 217 days versus 148 days behind their latest version update.
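The 47 percent figure follows directly from the two medians quoted above. A quick check of the arithmetic (the function name is just for illustration):

```python
def percent_more_outdated(slow_median_days, fast_median_days):
    """Relative increase in median lag, expressed as a percentage."""
    return (slow_median_days - fast_median_days) / fast_median_days * 100

# Medians quoted in the text: 217 days (deployed less than monthly) vs. 148 days (daily).
print(round(percent_more_outdated(217, 148)))  # 47
```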

To complicate matters further, one in two services use libraries that are not actively maintained. Because updates are no longer being released at all for these libraries, any newly discovered vulnerabilities will remain unpatched.

To lower exposure to vulnerabilities, teams should identify and update outdated libraries on a consistent basis. This holds especially true for unmaintained libraries, which should be treated like an end-of-life operating system and immediately updated to an actively maintained version. In addition, developers should deploy their services frequently. Not only is doing so an operational best practice, but because each fresh deployment gives teams a chance to upgrade their dependencies, it also simplifies the task of maintaining a strong security posture.

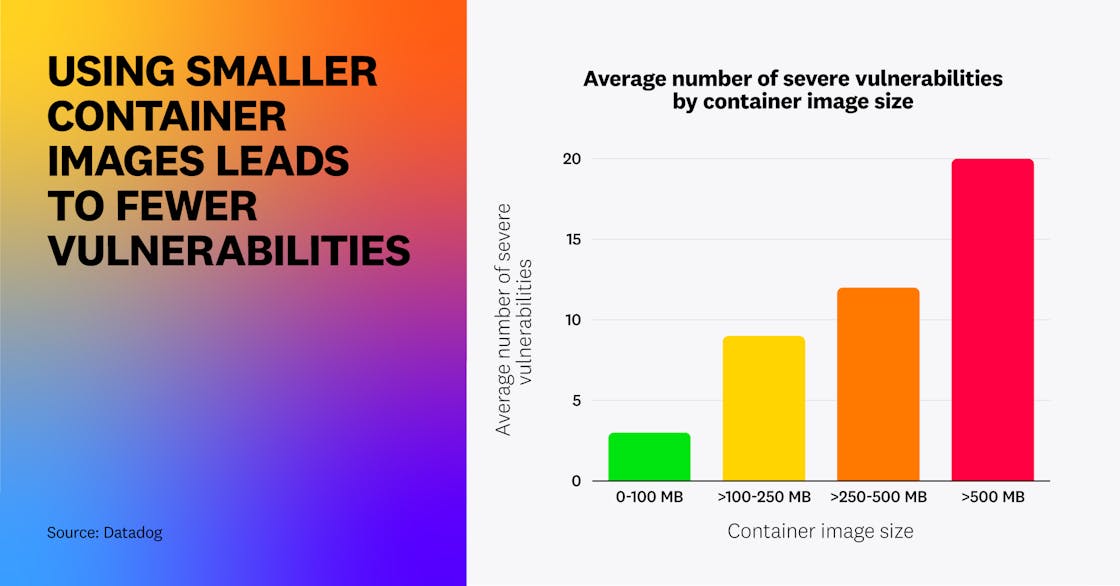

Minimal container images improve security posture

In recent years, engineering teams have moved toward adopting minimal container images in order to improve the efficiency and cost-effectiveness of their systems. This trend began with minimal distributions such as Alpine Linux and has evolved into even smaller distroless and from-scratch images, all of which drastically reduce the size of a container image. For instance, the default Docker image for python:3.11 weighs over 1 GB, while the minimal version python:3.11-alpine weighs 58 MB, reducing its size by 95 percent.

This “less is more” approach has benefits for security as well. By analyzing thousands of container images, we found that an image smaller than 100 MB has on average three severe vulnerabilities (those with a high or critical CVSS score), with a median of 0, while an image larger than 500 MB has on average 20 such vulnerabilities, with a median of 7.
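An average well above the median, as in both size groups here, usually means a few heavily affected images pull the mean upward while most images have few or no findings. The sketch below shows how such a summary could be computed. The `(size_mb, severe_count)` layout and every number in `images` are invented for illustration and are not taken from the dataset.

```python
from statistics import mean, median

def size_bucket(size_mb):
    """Assign an image to one of the size groups discussed in the text."""
    if size_mb < 100:
        return "<100 MB"
    if size_mb > 500:
        return ">500 MB"
    return "100-500 MB"

def summarize(images):
    """Map each size bucket to (mean, median) severe-vulnerability counts."""
    groups = {}
    for size_mb, severe_count in images:
        groups.setdefault(size_bucket(size_mb), []).append(severe_count)
    return {name: (mean(counts), median(counts)) for name, counts in groups.items()}

# Invented (size in MB, severe vulnerability count) pairs.
images = [
    (58, 0), (12, 0), (80, 0), (45, 0), (95, 15),  # small images, one outlier
    (1020, 7), (900, 2), (750, 51),                # large images
]
for name, (avg, med) in summarize(images).items():
    print(f"{name}: mean={avg}, median={med}")
```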

In addition to containing fewer vulnerabilities in the first place, minimal container images also make it harder for attackers to “live off the land” by using system utilities like curl or wget to perform exploits (which is a very common behavior), since lightweight images typically don’t include this type of tooling. This reinforces the idea that teams should adopt lightweight container images wherever possible, both as an operational best practice and as a security measure.

“A smaller image means less to patch—and less to exploit. The more components you include, the larger the attack surface becomes. A distroless approach strips away all unnecessary packages and utilities, leaving only exactly what is needed for intended use; this minimizes vulnerabilities and ensures that attackers have far fewer footholds to work with. In our experience, minimal, hardened images not only cut down on CVEs but also help fast track compliance.”

Principal Product Manager, Product Security, Chainguard

In AWS, infrastructure-as-code usage is high, but many teams still use ClickOps as well

Infrastructure as code (IaC) has become the dominant practice in cloud environments because it tends to lead to better operational outcomes, such as improved version control and traceability. In addition, IaC is a key component of security best practices, as it helps enforce peer review and makes infrastructure easier to scan for misconfigurations.

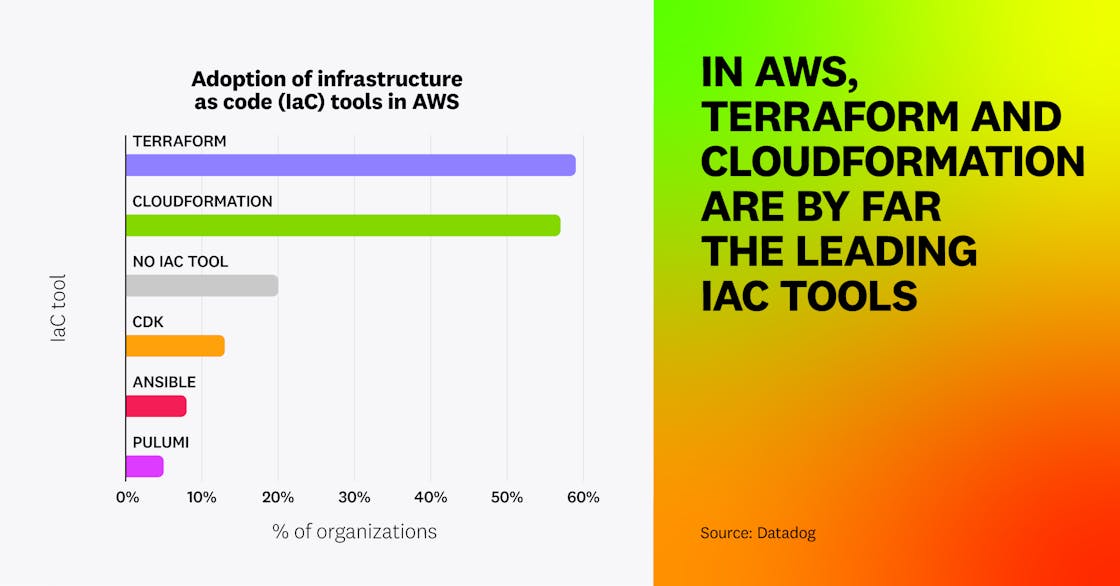

IaC adoption continues to grow more widespread. In AWS, 59 percent of organizations are using Terraform, and 57 percent use CloudFormation (slightly up from 2024). In all, 80 percent of organizations use at least one IaC tool.

At the same time, we also identified that at least 38 percent of organizations perform ClickOps deployments in production—i.e., having engineers manually log in to their AWS accounts to provision infrastructure through the AWS Console. ClickOps is generally less secure than IaC, as it introduces more opportunities for human error and results in a wider group of engineers having privileged access to the cloud environment.
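One possible way to spot ClickOps in your own account is to look for CloudTrail records that both originate from a console session and change state. The sketch below relies on two CloudTrail record fields, `sessionCredentialFromConsole` and `readOnly`. The heuristic itself, the function names, and the sample events are assumptions, and it is not necessarily how the figure above was measured.

```python
def is_console_write(event):
    """True if a CloudTrail record looks like a manual, state-changing console action."""
    # CloudTrail includes sessionCredentialFromConsole only for console sessions.
    from_console = str(event.get("sessionCredentialFromConsole", "")).lower() == "true"
    # readOnly may arrive as a boolean or a string depending on how logs were exported.
    is_write = str(event.get("readOnly", "")).lower() == "false"
    return from_console and is_write

def clickops_events(events):
    """Return (eventName, caller ARN) pairs for suspected ClickOps actions."""
    return [
        (e.get("eventName"), e.get("userIdentity", {}).get("arn"))
        for e in events
        if is_console_write(e)
    ]

# Invented sample records containing only the fields used above.
events = [
    {"eventName": "RunInstances", "readOnly": False,
     "sessionCredentialFromConsole": "true",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/example-engineer"}},
    {"eventName": "DescribeInstances", "readOnly": True,
     "sessionCredentialFromConsole": "true",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/example-engineer"}},
    {"eventName": "CreateBucket", "readOnly": False,
     "userIdentity": {"arn": "arn:aws:sts::123456789012:assumed-role/example-ci/run"}},
]
print(clickops_events(events))
```

Restricting the input to production accounts and excluding routine console actions (for example, sign-in events) would be needed before treating the output as a meaningful measure.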

Our research also shows that organizations using IaC are not exempt from ClickOps, as some are using both methods. This demonstrates that IaC, while an essential operational and security practice, is not enough—people are still accessing production environments manually. It remains critical to enforce IaC, limit privileged access, and continually scan cloud resources for vulnerabilities.