Multi-account environments allow organizational guardrails in AWS

Enforcing minimal privileges in a single AWS account is challenging. Similarly, running workloads from different stages (such as dev and prod) in the same AWS account can be difficult due to quota, IAM, network, and performance issues. This is why a common best practice, from both a security and an operational perspective, is to have multiple AWS accounts centrally managed through AWS Organizations, a governance service offered by AWS. Note that an “AWS organization” refers to one grouping of AWS accounts within this service and is not the same as an “organization.”

We found that at least 84% of organizations use more than one AWS account, while 86% of organizations have AWS accounts using AWS Organizations. AWS Organizations has become a popular way to centrally manage multi-account environments, with over two in three organizations (70%) having all their AWS accounts part of an AWS organization and three in four AWS accounts (74%) part of an AWS organization. A meaningful minority of organizations (14%) do not use AWS Organizations at all for any of their AWS accounts.

When using multi-account environments managed through AWS Organizations, it’s possible to enforce security invariants across all AWS accounts with organization-level guardrails. We found that among organizations using AWS Organizations, two in five (40%) take advantage of service control policies (SCPs), and 6% use resource control policies (RCPs)—a recently introduced type of organizational policy that applies at the resource level.

By analyzing the IAM condition keys in use in the explicit deny statements of these policies, we saw that SCPs are most commonly used to protect shared infrastructure and “landing zones” deployed in member accounts, as well as security mechanisms like CloudTrail and GuardDuty. When RCPs are defined, they are most commonly used to enforce encryption across all S3 buckets in an organization and ensure that all buckets in an AWS organization can only be accessed by principals from the same AWS organization.

However, using AWS Organizations comes with a few things to watch out for. By design, authorized principals in the organization management account can access all child accounts, creating an extremely powerful lateral movement vector. To protect against this, AWS recommends that the management account should not run any workloads, as doing so reduces the risk of it getting compromised. Still, we identified that 9% of organizations run EC2 instances in their AWS organization management account, up from 6% in 2024. In these configurations, an attacker who’s able to compromise a single EC2 instance may be able to escalate their privileges and access all child AWS accounts.

As organizations adopt data perimeters, most lack centralized control

In the cloud, identity is the new perimeter. There is little concept of an internal network, since cloud APIs are exposed to the internet by design. This means that an attacker stealing cloud credentials can call cloud APIs from anywhere in the world without the need to be in an internal network. With credential theft being a major attack vector, the concept of data perimeters has emerged in recent years. Data perimeters enable teams to restrict certain cloud API calls so they only succeed if coming from approved networks or trusted cloud accounts.

However, data perimeters are not implemented by default. They require enacting a set of various policies such as SCPs, RCPs, or resource-based policies—for example, at the S3 bucket or VPC endpoint level. Using a data perimeter requires using advanced IAM policy condition keys such as aws:SourceAccount or aws:PrincipalOrgID.

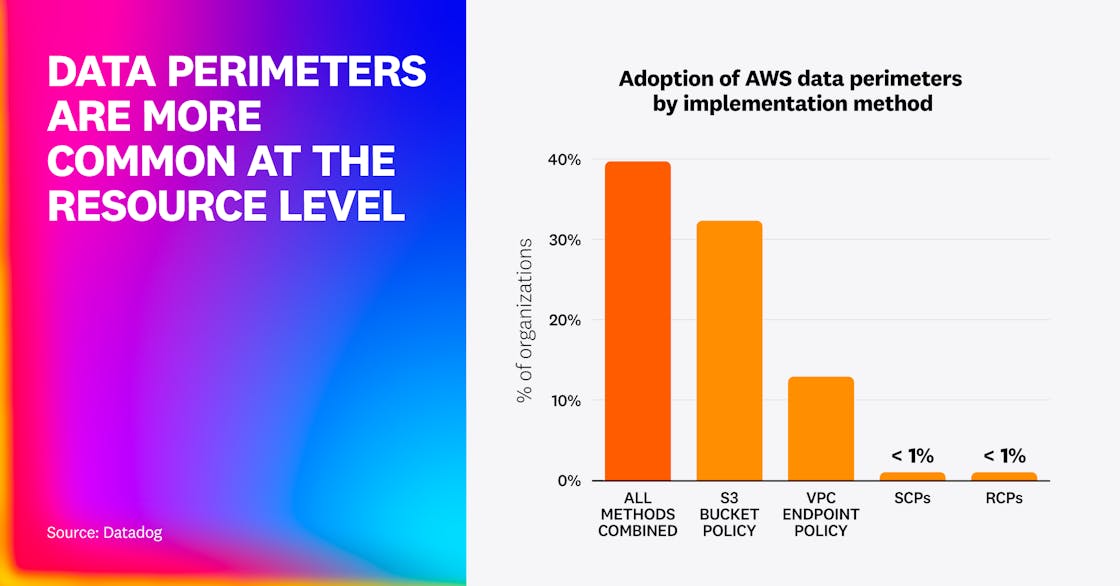

We determined that almost two in five (40%) organizations use data perimeters through either SCPs, RCPs, VPC endpoints, or S3 bucket policies.

Overall, the most popular ways to implement data perimeters are through:

- S3 bucket policies (32% of organizations), mostly through the aws:SourceAccount condition key. This ensures that nobody outside of a specific AWS account can access the bucket, even if its ACLs or individual files are mistakenly made public.

- VPC endpoint policies (13% of organizations), mostly through the aws:PrincipalAccount condition key. This ensures that an attacker cannot exfiltrate data to an attacker-owned AWS account.

- SCPs (0.6% of organizations), mostly through the aws:PrincipalAccount condition key.

- RCPs (0.1% of organizations), mostly through the aws:PrincipalOrgID, aws:SourceOrgID, and aws:SourceAccount condition keys. These allow defenders to make sure that a specific type of resource can’t be accessed by anyone outside the organization, even if it’s misconfigured.

Although data perimeters are considered an advanced practice, over a third of organizations already use them. This reflects growing concern over credential theft and a willingness to adopt provider-supported safeguards for cloud data. But our analysis also reveals that most data perimeters are still applied per resource. Many organizations beginning to use data perimeters should shift toward modern controls like RCPs, which enforce guardrails at the organizational level, eliminating gaps in coverage.
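As an illustration of an organization-level data perimeter, the sketch below builds an RCP document that denies S3 access to principals outside a given AWS organization by using the aws:PrincipalOrgID condition key. The function name and the organization ID are hypothetical, and the statement follows the commonly published shape of such policies. A production policy would usually need further exceptions (for example, for trusted partner accounts), so treat this as a starting point rather than a complete perimeter.

```python
import json


def build_org_perimeter_rcp(org_id: str) -> dict:
    """Build an RCP that denies S3 access to principals outside `org_id`.

    `org_id` is the ID of your AWS organization (hypothetical value below).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "EnforceOrgIdentities",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": "*",
                "Condition": {
                    # Deny when the caller belongs to a different organization...
                    "StringNotEqualsIfExists": {"aws:PrincipalOrgID": org_id},
                    # ...unless the call is made by an AWS service principal.
                    "BoolIfExists": {"aws:PrincipalIsAWSService": "false"},
                },
            }
        ],
    }


if __name__ == "__main__":
    # "o-exampleorgid" is a placeholder, not a real organization ID.
    print(json.dumps(build_org_perimeter_rcp("o-exampleorgid"), indent=2))
```

Because the policy is attached at the organization level, it applies to every bucket in the member accounts it targets, which is what closes the coverage gaps left by per-bucket policies.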

Long-lived cloud credentials remain pervasive and risky

Long-lived cloud credentials continue to pose a major security risk, with previous installments of this report having shown that they are the most common cause of publicly documented cloud security breaches. For this year’s findings, we followed up on how organizations are using legacy versus modern authentication methods to authenticate humans and applications across AWS, Azure, and Google Cloud.

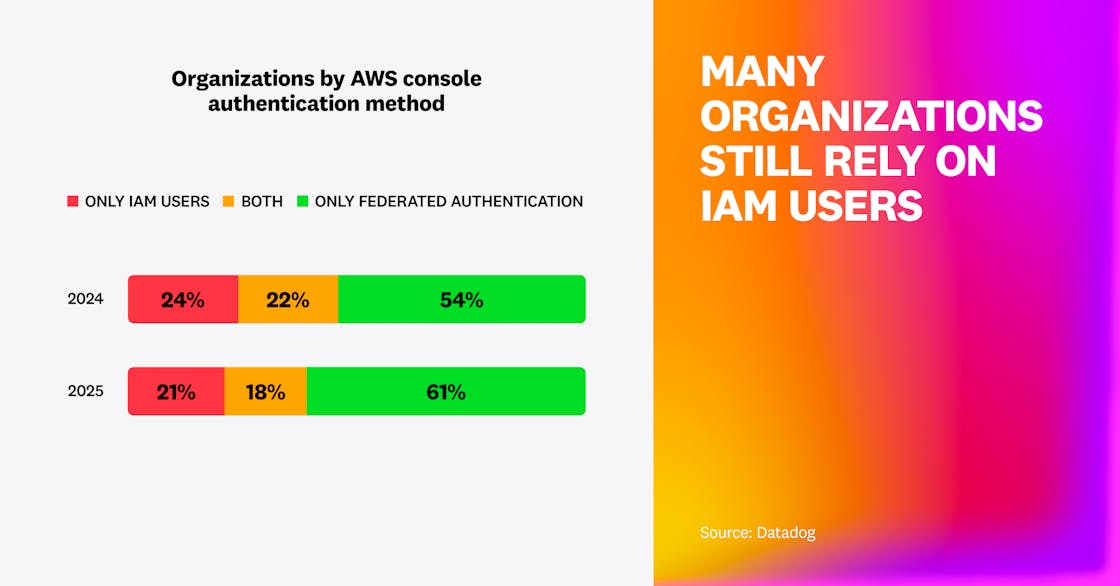

We found that most organizations (79%) continue to use federated authentication for human users to access the AWS console, such as through AWS IAM Identity Center or Okta. This represents a small increase in comparison to 2024, up from 76%, indicating that some organizations have transitioned fully to only federated authentication. However, almost two in five (39%) organizations still use IAM users in some capacity, and one in five organizations relies exclusively on IAM users. This shows that although organizations are increasingly adopting central human identities to access their cloud environments, they are struggling to get rid of their legacy IAM practices, such as humans using IAM users. It’s easier to add a new secure authentication practice than to get rid of a risky legacy one, but to ensure proactive security and mitigate risks, it’s critical to not only implement new solutions but also manage tech debt.

Similar to last year, we found that long-lived cloud credentials are often old and even unused, which increases the risks associated with these types of credentials.

- In AWS, 59% of IAM users have an active access key older than one year. Over half of these users have credentials that have been unused for over 90 days, hinting at risky credentials that could easily be removed. Crucially, this number hasn’t decreased in comparison to 2024, suggesting that organizations have plateaued in improving their security posture in this area.

- Google Cloud service accounts follow a similar pattern but have shown improvement year over year: 55% of Google Cloud service accounts have active service account keys older than a year, down from 62% a year ago. We attribute this not to a decrease in the number of service accounts (which can be used to generate short-lived credentials, such as with service account impersonation or workload identity federation), but to a decrease in the number of service account keys for these service accounts.

- Comparably, 40% of Microsoft Entra ID applications have credentials older than one year, down from 46% a year ago.
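The age and inactivity thresholds used above (older than one year, unused for over 90 days) can be expressed as a small check. This is a minimal sketch: the function name and the input shape are assumptions, and in practice the timestamps would come from your cloud provider's credential inventory.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_KEY_AGE = timedelta(days=365)  # "older than one year"
MAX_IDLE = timedelta(days=90)      # "unused for over 90 days"


def classify_access_key(
    created: datetime, last_used: Optional[datetime], now: datetime
) -> dict:
    """Flag a long-lived credential as old, unused, or both."""
    is_old = now - created > MAX_KEY_AGE
    # A key that has never been used is treated as unused.
    is_unused = last_used is None or now - last_used > MAX_IDLE
    return {
        "old": is_old,
        "unused": is_unused,
        "removal_candidate": is_old and is_unused,
    }


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    created = datetime(2023, 1, 15, tzinfo=timezone.utc)
    last_used = datetime(2024, 11, 1, tzinfo=timezone.utc)
    print(classify_access_key(created, last_used, now))
```

Keys flagged as removal candidates are both old and idle, which makes them the lowest-risk ones to delete first.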

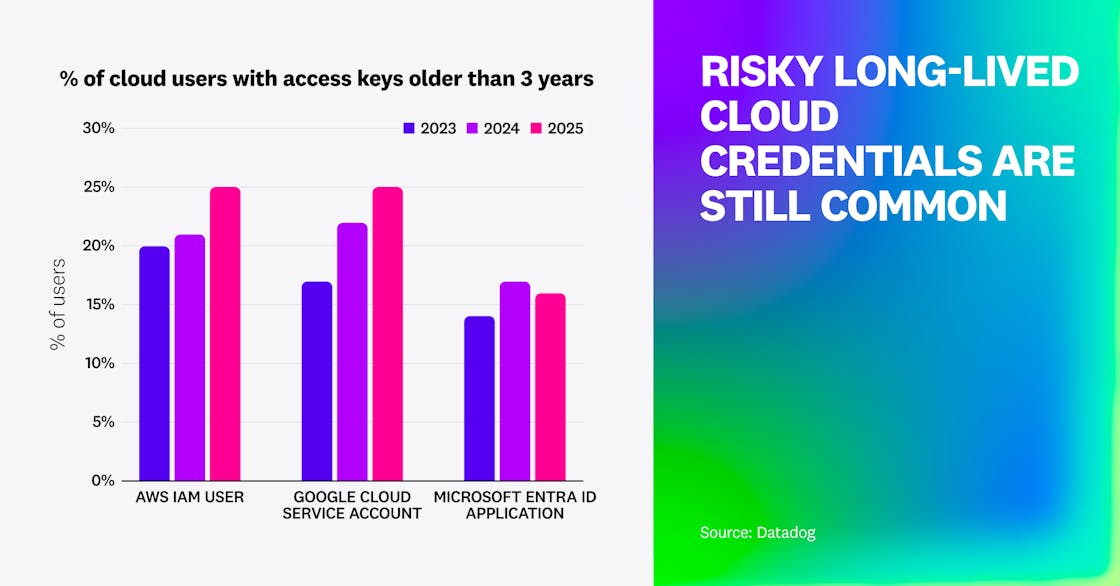

Analyzing access key age over time also shows that organizations using long-lived credentials are struggling to rotate them. The percentage of access keys older than three years has mostly increased across all clouds (AWS 21% → 25%, Google Cloud 22% → 25%, Azure stable)—same for five years (AWS 8% → 10%, Google Cloud 6% → 8%, Azure 6% → 10%). This increase is concerning, because as long as they exist and continue to grow stale, these access keys become increasingly risky. The only scalable response is to stop using them and switch to secure alternatives.

Instead of long-lived credentials, organizations should use mechanisms that provide time-bound, temporary credentials. For workloads, this can be achieved with IAM roles for EC2 instances or EKS Pod Identity in AWS, managed identities in Azure, and service accounts attached to workloads for Google Cloud. For humans, the most effective solution is to centralize identity management by using a solution like AWS IAM Identity Center, Okta, or Microsoft Entra ID and to avoid the use of an individual cloud user for each employee, which is highly inefficient and risky.

“From an incident response perspective, exposed access keys belonging to IAM users remain the primary initial access vector in AWS. Previously, we observed access key exposure more in scripts that were accidentally exposed through various code sharing platforms. In more recent incidents, the keys were exposed through improperly protected CI/CD pipelines and shared developer workstations.”

Founder and CEO, Invictus Incident Response

One in two EC2 instances enforces IMDSv2, but older instances lag behind

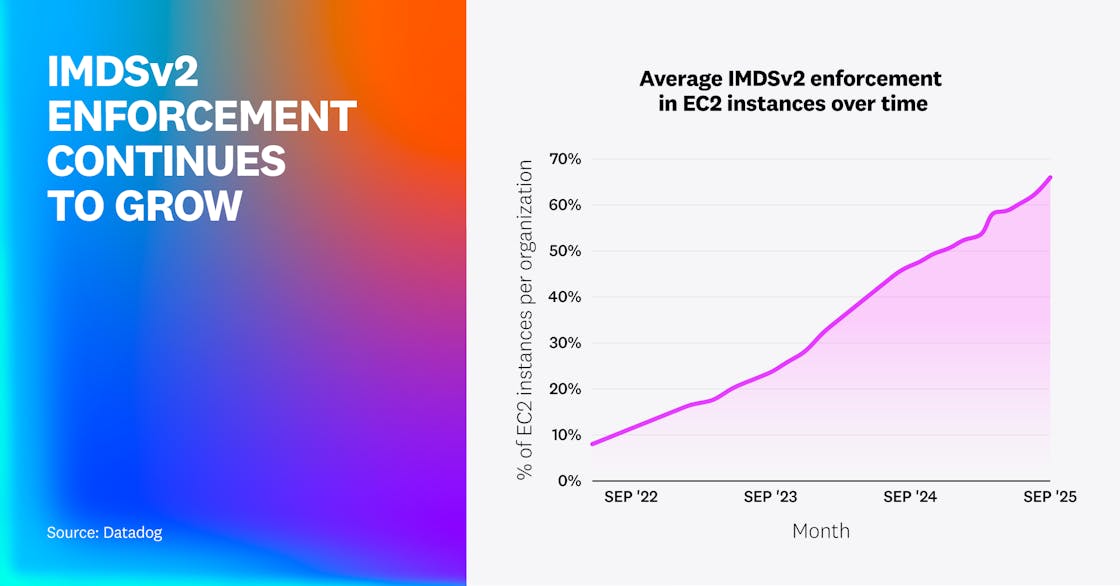

Adoption of IMDSv2—an AWS security mechanism that blocks credential theft in EC2 instances—continues to grow, largely driven by AWS’s move toward secure-by-default mechanisms that enable IMDSv2 automatically. Historically, IMDSv2 had to be manually enforced on individual EC2 instances or autoscaling groups.

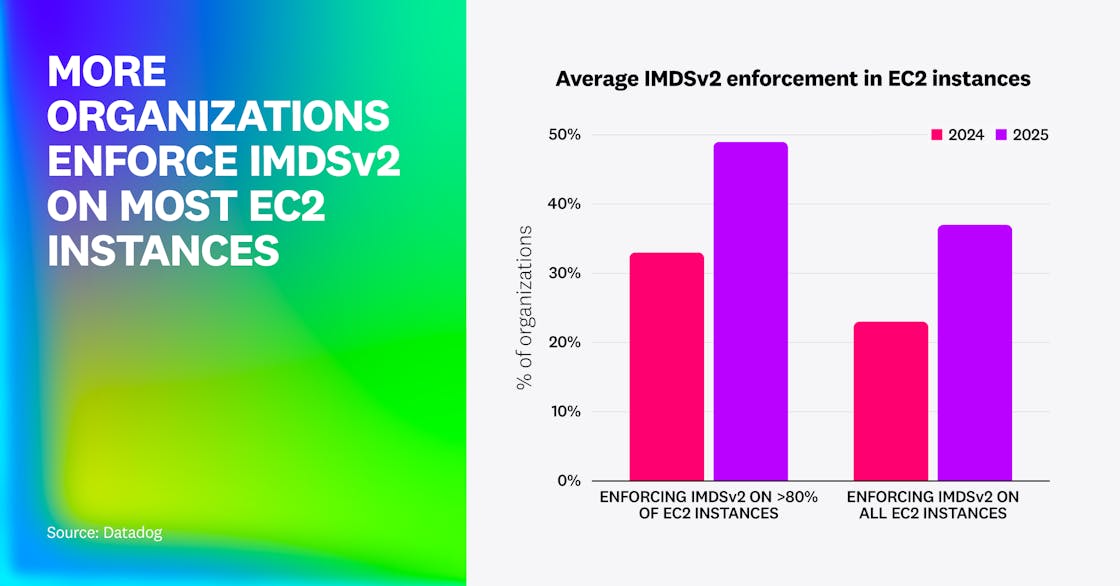

On average, organizations now enforce IMDSv2 on 66% of their instances, up from 47% a year ago and 25% two years ago. Overall, 49% of EC2 instances have IMDSv2 enforced, up from 32% a year ago, 21% two years ago, and 7% in 2022.
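The two figures above measure different things: one averages each organization's own enforcement rate, while the other pools all instances together. The sketch below uses made-up numbers (not data from this report) to show how a few large organizations with low enforcement can pull the pooled rate well below the per-organization average.

```python
def mean_org_rate(orgs):
    """Average of each organization's enforcement rate (each org counts once)."""
    return sum(enforced / total for enforced, total in orgs) / len(orgs)


def pooled_rate(orgs):
    """Share of all instances, across every organization, that enforce IMDSv2."""
    return sum(enforced for enforced, _ in orgs) / sum(total for _, total in orgs)


if __name__ == "__main__":
    # Hypothetical (enforced_instances, total_instances) pairs per organization.
    orgs = [(10, 10), (18, 20), (100, 400)]
    print(f"per-organization average: {mean_org_rate(orgs):.1%}")
    print(f"pooled across instances:  {pooled_rate(orgs):.1%}")
```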

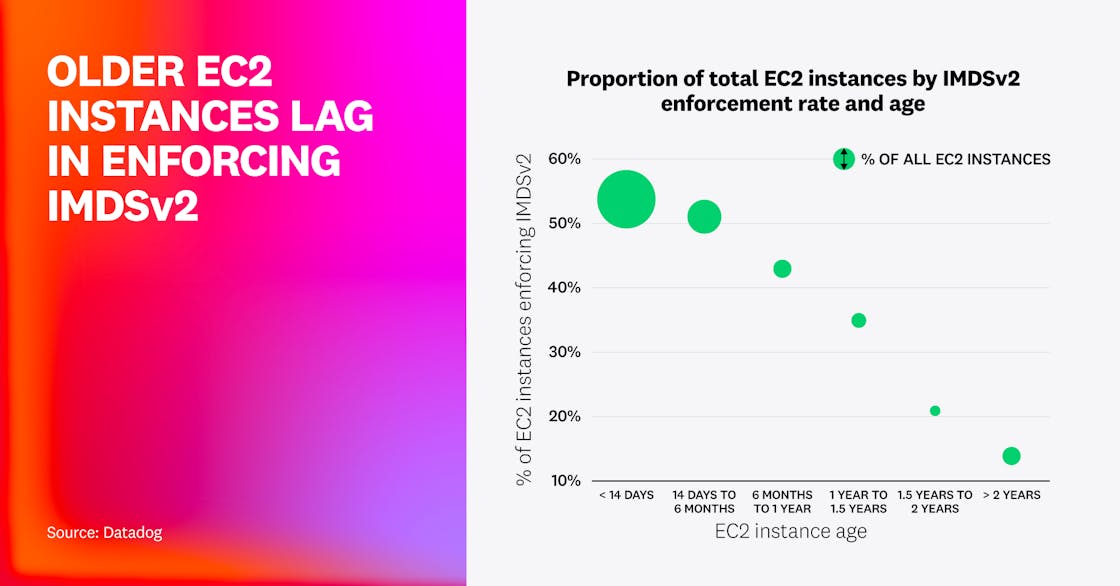

While this reinforces an ongoing trend, we also found that not all EC2 instances are created equal. Although over half of instances created in the two weeks preceding the collection of the data for this report enforce IMDSv2 (representing two-thirds of overall EC2 instances), only 14% of instances created over two years ago enforce it.

We also identified that more organizations are taking IMDSv2 seriously. Over one in three organizations (37%) enforces IMDSv2 on 100% of their EC2 instances, up from less than one in four in 2024. One in two enforces it on at least 80% of their instances (up from one in three), and only 24% enforce it on fewer than 20% of their EC2 instances—down from 39%.

Similar to last year’s data, while IMDSv2 enforcement is increasing, most instances still remain vulnerable. However, even when IMDSv2 is not enforced on an EC2 instance, individual applications and processes on that instance can use it. By looking at CloudTrail logs, we identified that although only 49% of EC2 instances have IMDSv2 enforced, 82% had exclusively used IMDSv2 in the past two weeks, meaning they could have enforced it with no functional impact. This data shows that there continues to be a disconnect between enforcement of IMDSv2 and actual usage, particularly for older EC2 instances that didn’t enable IMDSv2 by default. When mechanisms like IMDSv2 aren’t enabled by default, security settings fall behind even if they have no functional impact or don’t block developers.

Organizations looking to enforce IMDSv2 should implement secure-by-default mechanisms such as using the IMDSv2-by-default on AMIs and enforcing IMDSv2 by default on new instances on a per-region basis, as introduced in March 2024. We found that while fewer than 3% of organizations use this setting, when it is enabled, IMDSv2 enforcement reaches levels of 95%+. In most cases, security by default is a simple configuration change that can have an enormous impact on closing vulnerability gaps.
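To measure where an environment stands, enforcement can be read from each instance's metadata options. The sketch below assumes instance records shaped like the EC2 DescribeInstances output, where MetadataOptions.HttpTokens is "required" when IMDSv2 is enforced and "optional" otherwise. The function names and sample records are illustrative.

```python
def imdsv2_enforced(instance: dict) -> bool:
    """True when the instance only accepts token-based (IMDSv2) requests."""
    return instance.get("MetadataOptions", {}).get("HttpTokens") == "required"


def enforcement_rate(instances: list) -> float:
    """Fraction of instances enforcing IMDSv2 (0.0 for an empty inventory)."""
    if not instances:
        return 0.0
    return sum(imdsv2_enforced(i) for i in instances) / len(instances)


if __name__ == "__main__":
    # Hypothetical inventory records.
    inventory = [
        {"InstanceId": "i-aaa", "MetadataOptions": {"HttpTokens": "required"}},
        {"InstanceId": "i-bbb", "MetadataOptions": {"HttpTokens": "optional"}},
        {"InstanceId": "i-ccc", "MetadataOptions": {"HttpTokens": "required"}},
        {"InstanceId": "i-ddd"},  # missing options are counted as not enforced
    ]
    print(f"IMDSv2 enforced on {enforcement_rate(inventory):.0%} of instances")
```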

“While IMDSv2 adoption has been increasing year over year, enforcement still lags despite AWS introducing configurable defaults. Organizations should enable these security defaults and enforce IMDSv2 through SCPs across their environments. IMDSv2 is a simple, effective control that significantly reduces the impact of SSRF vulnerabilities. As long as IMDSv1 remains available, attackers will continue to exploit it, and the only way to close this gap is for AWS to deprecate IMDSv1.”

Senior Infrastructure Security Engineer, Zapier

Adoption of public access blocks in cloud storage services is plateauing

Paved roads and guardrails are two security practices that make it easy to standardize processes and prevent human mistakes from turning into data breaches, respectively. Because public storage buckets continue to be the source of a large number of high-profile data breaches, many modern controls—which fall into the category of guardrails—exist to ensure that a bucket cannot be made public by mistake.

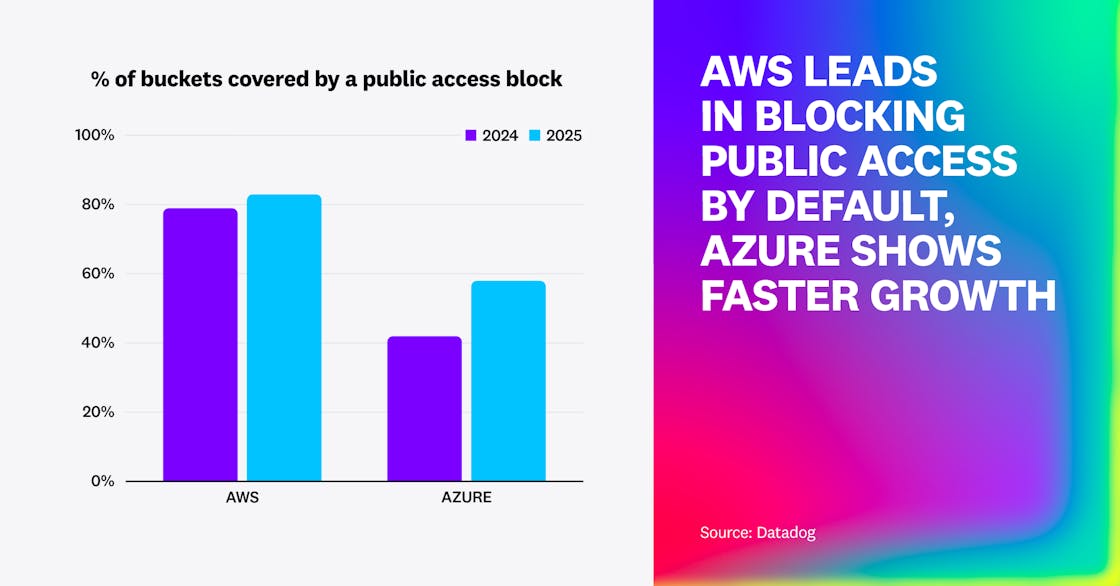

In this year’s report, we found that adoption of public access blocks continues to increase, but at a slower pace than in previous years. One percent of S3 buckets are effectively public, down from 1.5% in 2024 and 2023. Over four in five (83%) S3 buckets are covered by an account-wide or bucket-specific S3 Block Public Access configuration, a small increase from 79% a year ago and 73% in 2023. This increase is likely driven by growing awareness of the issue and by the fact that AWS proactively blocks public access for newly created S3 buckets as of April 2023.

On the Azure side, 1.3% of Azure Blob Storage containers are effectively public, down from 2.6% a year ago and 5% in 2023. Relatedly, almost three in five (58%) Azure Blob Storage containers are in a storage account that proactively blocks public access, up from 42% a year ago. This is likely due to Microsoft proactively blocking public access on storage accounts that were created after November 2023, making them secure by default.

To avoid mistakenly exposing S3 buckets, organizations should turn on S3 Block Public Access at the account level and protect the configuration with an SCP. At the organization level, it’s possible to deploy an RCP to apply a data perimeter to all S3 buckets in an AWS organization and ensure that they cannot be accessed externally from unauthenticated actors, even if the bucket policy or ACL of that bucket is misconfigured. In Azure, you can block public access in a storage account configuration, which prevents Blob Storage containers in that storage account from inadvertently becoming public.
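S3 Block Public Access consists of four independent settings, and a bucket or account is only fully covered when all four are enabled. A minimal check, assuming a configuration dictionary shaped like the PublicAccessBlockConfiguration returned by the S3 API (the function name is illustrative):

```python
BPA_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def fully_blocks_public_access(config: dict) -> bool:
    """True only if every Block Public Access setting is explicitly enabled."""
    return all(config.get(flag) is True for flag in BPA_FLAGS)


if __name__ == "__main__":
    partial = {
        "BlockPublicAcls": True,
        "IgnorePublicAcls": True,
        "BlockPublicPolicy": False,  # a public bucket policy could still be set
        "RestrictPublicBuckets": True,
    }
    print(fully_blocks_public_access(partial))
```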

For most common, legitimate use cases for public storage buckets, such as static web assets, it’s typically more secure, efficient, and cost-effective to use a content delivery network (CDN) such as Amazon CloudFront.

Overprivileged and misconfigured IAM roles for third-party integrations remain risks for AWS accounts

With more vendors integrating with their customers’ AWS accounts through IAM roles, a compromised provider AWS account increases cloud supply chain risks, as it’s likely that an attacker can access the same data as the provider can.

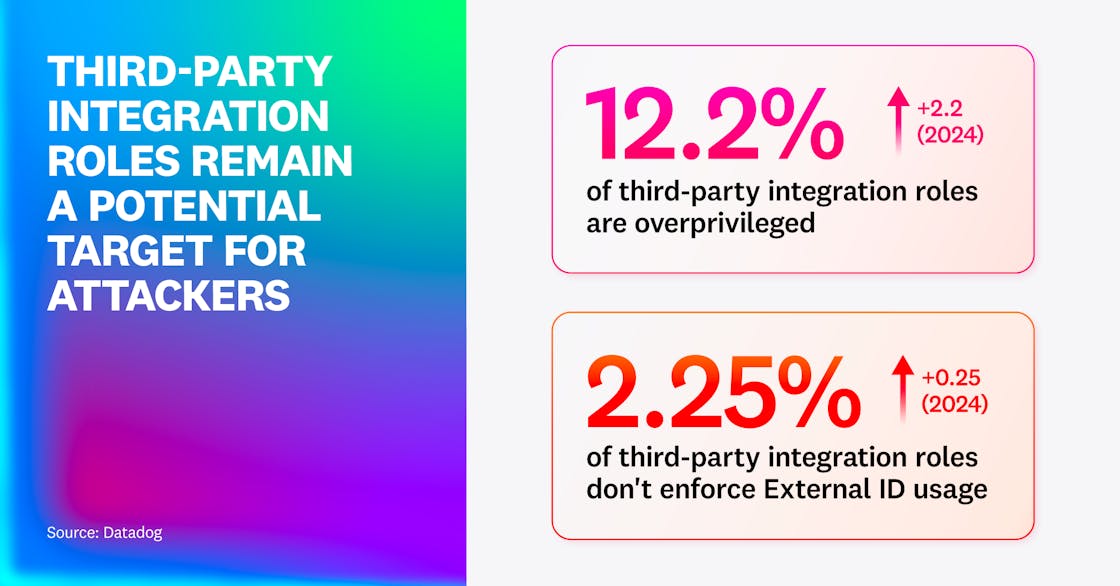

For this fact, we reviewed IAM roles trusting known AWS accounts that correspond to SaaS providers. We found that on average, an organization deploys 13 third-party integration roles (up from 10.2 in 2024), linked to an average of 2.5 distinct vendors (same as 2024).

We then looked at two types of common security risks in these integration roles. We found that 12.2% of third-party integrations are dangerously overprivileged, allowing the vendor to access all data in the account or to take over the whole AWS account. This is slightly up from 10% in 2024. We also identified that 2.25% of third-party integration roles don’t enforce the use of an external ID. This indicates that third-party risks are as prevalent as ever in cloud environments, and organizations should continue to remove unused roles and grant minimum permissions.
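An external ID is enforced through a condition on the sts:ExternalId key in the role's trust policy, which protects against a vendor being tricked into assuming a role on behalf of the wrong customer. The sketch below checks a trust policy document for that condition. It is a simplified heuristic with an illustrative function name, and the account ID and external ID in the example are placeholders.

```python
def _as_list(value):
    """IAM policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def requires_external_id(trust_policy: dict) -> bool:
    """True if every Allow statement granting sts:AssumeRole requires an external ID."""
    allow_statements = [
        s
        for s in _as_list(trust_policy.get("Statement"))
        if s.get("Effect") == "Allow"
        and any(
            a.lower() in ("sts:assumerole", "sts:*", "*")
            for a in _as_list(s.get("Action"))
        )
    ]
    if not allow_statements:
        return False
    for stmt in allow_statements:
        keys = set()
        for operator, pairs in stmt.get("Condition", {}).items():
            # Only an exact-match condition is treated as enforcement here.
            if operator.lower() == "stringequals":
                keys.update(k.lower() for k in pairs)
        if "sts:externalid" not in keys:
            return False
    return True


if __name__ == "__main__":
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": {
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::111122223333:root"},  # placeholder
            "Action": "sts:AssumeRole",
            "Condition": {"StringEquals": {"sts:ExternalId": "example-id"}},
        },
    }
    print(requires_external_id(trust_policy))
```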

Insecure default configurations leave managed Kubernetes clusters at risk

Popular managed Kubernetes services, such as Amazon EKS, Azure AKS, and Google Cloud GKE, allow teams to focus on application workloads instead of complex Kubernetes control plane components such as etcd. But their default configurations still often lack adequate security. This can be problematic, as these clusters are intrinsically running in cloud environments; compromising a managed cluster opens up a number of possibilities for an attacker to pivot to the underlying cloud infrastructure. Anything exposed to the internet is scanned and creates noise, which can divert defenders from genuine threats. These internet-facing clusters also provide additional entry points for attackers to further abuse identities and escalate control.

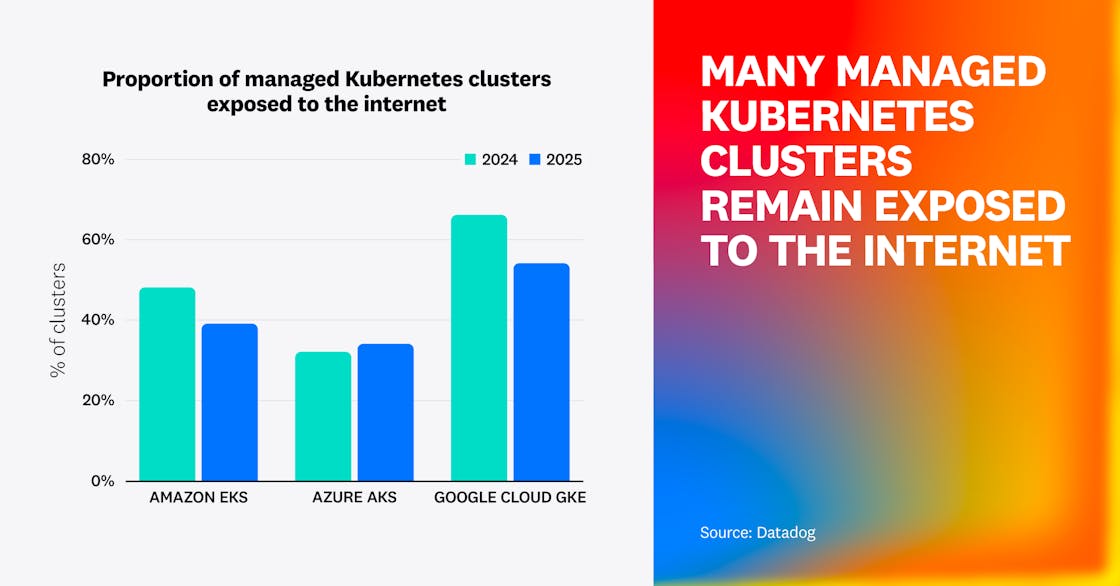

First, we found that the proportion of managed Kubernetes clusters exposing their managed API server to the internet has decreased in comparison to last year, but remains relatively high. Two in five (39%) EKS clusters and one in three (34%) AKS clusters are exposed to the internet. This number is much higher for GKE clusters, reaching over one in two (54%). Interestingly, we confirmed for EKS and GKE that the chance of internet exposure was unrelated to the age of the cluster, meaning that newer clusters are no less exposed than older ones.
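For EKS, one way to approximate this kind of exposure check is to look at the cluster's endpoint settings. The sketch below assumes a cluster record shaped like the EKS DescribeCluster output (resourcesVpcConfig.endpointPublicAccess and publicAccessCidrs) and treats a public endpoint open to 0.0.0.0/0 as internet-exposed. That definition is an assumption made for illustration and may differ from the methodology behind the figures above.

```python
def api_server_internet_exposed(cluster: dict) -> bool:
    """True if the public endpoint is enabled and reachable from any IPv4 address."""
    vpc_config = cluster.get("resourcesVpcConfig", {})
    if not vpc_config.get("endpointPublicAccess", False):
        return False
    return "0.0.0.0/0" in vpc_config.get("publicAccessCidrs", [])


if __name__ == "__main__":
    # Hypothetical cluster records.
    open_cluster = {
        "name": "demo-open",
        "resourcesVpcConfig": {
            "endpointPublicAccess": True,
            "publicAccessCidrs": ["0.0.0.0/0"],
        },
    }
    restricted_cluster = {
        "name": "demo-restricted",
        "resourcesVpcConfig": {
            "endpointPublicAccess": True,
            "publicAccessCidrs": ["203.0.113.0/24"],
        },
    }
    print(api_server_internet_exposed(open_cluster))
    print(api_server_internet_exposed(restricted_cluster))
```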

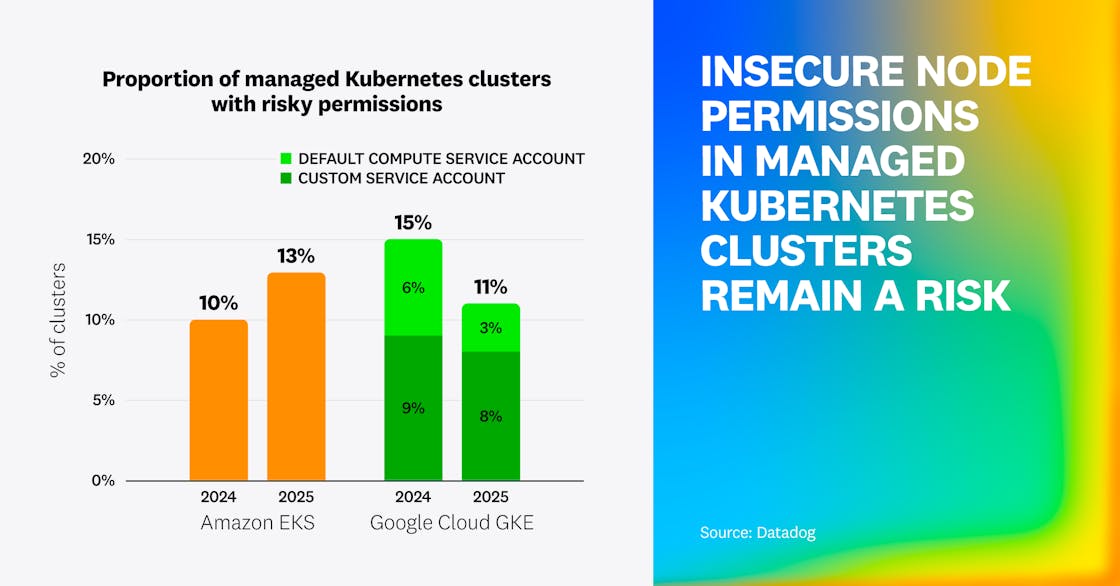

We also analyzed the IAM roles attached to these managed Kubernetes clusters to understand the likelihood that an attacker compromising them would be able to pivot to the cloud environment. For EKS, we determined that 13% of clusters have a dangerous node role that has full administrator access, allows for privilege escalation, has overly permissive data access (e.g., all S3 buckets), or allows for lateral movement across all workloads in the account. In Google Cloud, 10% of GKE clusters have a privileged service account: 3% through the use of default compute service accounts with the unrestricted cloud-platform scope, and 8% through customer-managed Google Cloud service accounts.

Although public exposure of managed Kubernetes clusters is decreasing, a large portion of these clusters is still exposed to the internet. This creates noise (automated attacks and scans), initial access risks (identity theft and exploitation), and pivot risks (if a cluster is compromised, cloud environments are at risk, too).

Organizations are also becoming more aware of the risks of attaching privileged cloud roles to cluster nodes, which lowers the risks that a compromised pod may be able to compromise other resources outside of the cluster. Risks related to default permissions had a noticeable change (down to 3% of GKE clusters), suggesting that organizations are becoming more aware of insecure-by-default configurations and taking action to fix them.

Organizations continue to run risky workloads

Assigning overprivileged permissions to EC2 instances or Google Compute Engine (GCE) virtual machines (VMs) continues to create substantial risk. Any attacker who compromises a workload—for instance, by exploiting an application-level vulnerability—would be able to steal the associated credentials and access the cloud environment.

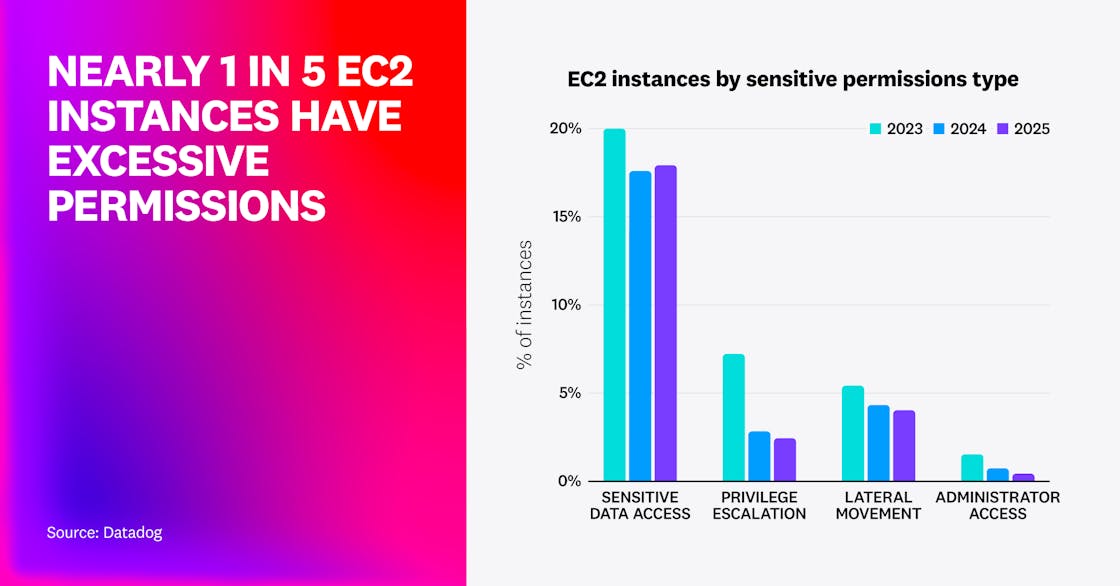

In AWS, while fewer than 0.4% of EC2 instances have administrator privileges, almost one in five (19.4%) is overprivileged—stable from 19% in 2024. Among these:

- 3.8% have risky permissions that allow lateral movement in the account, such as connecting to other instances using SSM Session Manager (slightly down from 4.3%). Lateral movement can allow an attacker to expand their scope beyond a single piece of infrastructure.

- 2.4% allow an attacker to gain full administrative access to the account by privilege escalation, such as permissions to create a new IAM user with administrator privileges (slightly down from 2.8%).

- 17.9% have excessive data access, such as listing and accessing data from all S3 buckets in the account (slightly up from 17.6%).
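As a rough illustration of the "excessive data access" category, the sketch below flags an identity policy that allows reading objects from every S3 bucket. It is a deliberately simple heuristic with an illustrative function name: it ignores Deny statements, NotAction, conditions, and permission boundaries, and it uses shell-style pattern matching as an approximation of IAM wildcards.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def grants_broad_s3_read(policy: dict) -> bool:
    """True if an Allow statement permits s3:GetObject on all buckets."""
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        # IAM action names are case-insensitive, so compare in lowercase.
        actions = [a.lower() for a in _as_list(stmt.get("Action"))]
        resources = _as_list(stmt.get("Resource"))
        allows_get = any(fnmatchcase("s3:getobject", a) for a in actions)
        all_buckets = any(
            r in ("*", "arn:aws:s3:::*", "arn:aws:s3:::*/*") for r in resources
        )
        if allows_get and all_buckets:
            return True
    return False


if __name__ == "__main__":
    broad = {"Statement": [{"Effect": "Allow", "Action": "s3:Get*", "Resource": "*"}]}
    scoped = {
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": "arn:aws:s3:::example-bucket/*",  # placeholder bucket
            }
        ]
    }
    print(grants_broad_s3_read(broad))
    print(grants_broad_s3_read(scoped))
```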

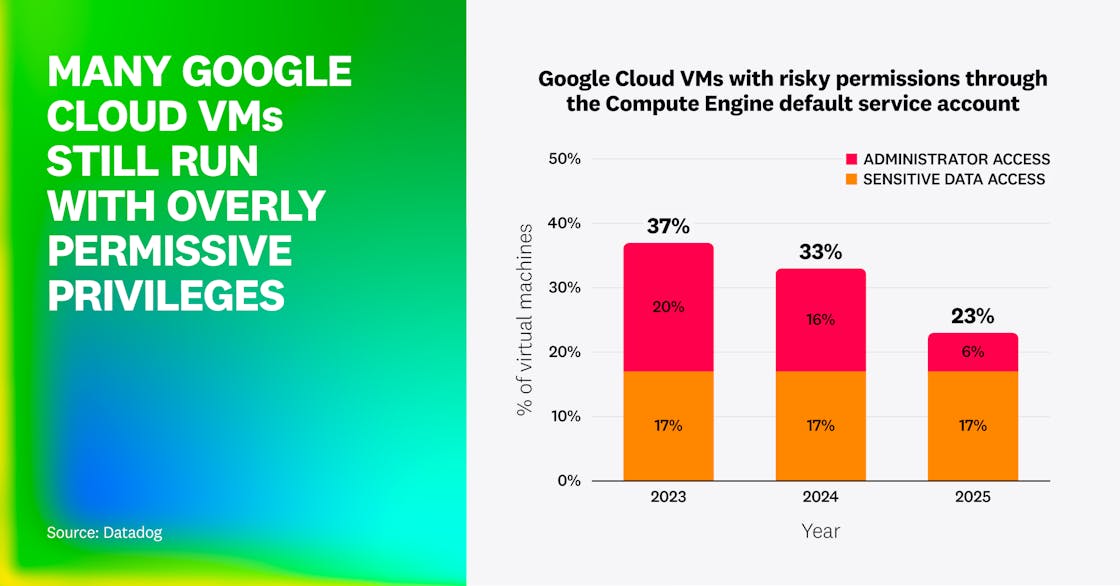

In Google Cloud, we found that 17% of VMs have full administrator “editor” permissions on the project they run in through the use of the Compute Engine default service account with the unrestricted cloud-platform scope. In addition, another 6% have full read access to Google Cloud Storage (GCS) through the same mechanism. In total, nearly one in four Google Cloud VMs (23%) has overly permissive access to a project.

Compared to 2024, the overall number of privileged Google Cloud VMs has decreased. Notably, the proportion of VMs using an unrestricted scope fell to a third of its previous level, while the percentage of privileged VMs attributable to the Compute Engine default service account remains unchanged.
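The Google Cloud risk described above can be spotted from a VM's configuration: the Compute Engine default service account has an email ending in -compute@developer.gserviceaccount.com, and the unrestricted scope is https://www.googleapis.com/auth/cloud-platform. The sketch below assumes instance records shaped like the Compute Engine API's instance resource. The function name and sample record are illustrative, and whether the default service account actually holds the Editor role depends on the project's IAM bindings, which this check does not inspect.

```python
CLOUD_PLATFORM_SCOPE = "https://www.googleapis.com/auth/cloud-platform"
DEFAULT_SA_SUFFIX = "-compute@developer.gserviceaccount.com"


def uses_default_sa_with_full_scope(instance: dict) -> bool:
    """True if the VM runs as the default service account with the cloud-platform scope."""
    for account in instance.get("serviceAccounts", []):
        is_default = account.get("email", "").endswith(DEFAULT_SA_SUFFIX)
        unrestricted = CLOUD_PLATFORM_SCOPE in account.get("scopes", [])
        if is_default and unrestricted:
            return True
    return False


if __name__ == "__main__":
    # Hypothetical VM record; the project number is a placeholder.
    vm = {
        "name": "demo-vm",
        "serviceAccounts": [
            {
                "email": "123456789012-compute@developer.gserviceaccount.com",
                "scopes": [CLOUD_PLATFORM_SCOPE],
            }
        ],
    }
    print(uses_default_sa_with_full_scope(vm))
```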