What is a Flaky Test?

A flaky test is a software test that yields both passing and failing results despite zero changes to the code or test. In other words, a flaky test does not produce the same outcome on every run. This nondeterminism makes debugging extremely difficult for developers and can translate to issues for your end users. In this article, we’ll discuss what causes flaky tests, how to identify them, and some best practices for reducing flaky tests in your environments.

What Causes Flaky Tests?

Since more developers are relying on CI/CD workflows to deploy their applications, automated testing has become a key part of the development process. Automation allows for continuous testing, which can help developers identify bugs earlier in the pipeline. But automated test suites can themselves behave unreliably, and that unreliability shows up as flaky tests.

Testing needs to be reliable so that developers can push quality code into production. If flaky tests are ignored, real bugs can easily be overlooked. Several different factors can cause flakiness, and all of them can slow your CI/CD pipelines and deployments or lead to issues for your application’s end users. Some of the more common causes of flakiness include:

Poorly written tests. A strong test should be as deterministic as possible, so that a failure indicates a true regression or issue. If a test does not state its assumptions explicitly, or cannot enforce the assumptions it makes, it will yield mixed results.

Async wait. When a test performs an action, the application requires time to complete the request. Developers often write tests that use sleep statements to wait a fixed amount of time before checking whether the action succeeded. Sometimes the application needs longer than that fixed time to complete the task, so the check runs too early and the test fails even though the code is correct.

Test order dependency. A test should be able to run independently and in any order, creating its own environment for execution and cleaning up after itself. Order dependencies arise when tests rely on shared resources, such as files, memory, or databases. If tests do not modify that shared state in the order a given test expects, that test will fail.

Concurrency. In concurrent code, flakiness typically occurs when a developer makes an incorrect assumption about the order in which different threads perform their operations. If the test accepts only one specific ordering, even though several orderings would be correct, then the test outcome will be nondeterministic.
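As a rough illustration of the concurrency cause above, the following Python sketch runs a few threads and asserts on the set of results rather than on their arrival order. The names `collect_results` and `test_workers_are_order_insensitive` are hypothetical and exist only for this example.

```python
import threading


def collect_results(worker_count: int) -> list[int]:
    """Run hypothetical workers in threads and gather their results.

    The order in which results are appended depends on thread scheduling,
    so callers should not rely on it.
    """
    results: list[int] = []
    lock = threading.Lock()

    def worker(n: int) -> None:
        value = n * n
        with lock:  # protect the shared list from concurrent appends
            results.append(value)

    threads = [threading.Thread(target=worker, args=(n,)) for n in range(worker_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


def test_workers_are_order_insensitive() -> None:
    results = collect_results(4)
    # A flaky version would assert `results == [0, 1, 4, 9]`, which assumes
    # the threads finish in the order they were started.
    assert sorted(results) == [0, 1, 4, 9]
```

Sorting before comparing makes the test accept every correct ordering, so its outcome no longer depends on how the threads happen to be scheduled.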

While several studies have identified the points above as top causes, it’s worth mentioning that flaky tests can also be triggered by network issues, resource leaks, system time, and unreliable third-party APIs.

Remediating the Root Cause of Flaky Tests

As more developers turn to CI/CD to speed up deployments, resolving flaky tests quickly has become more important for keeping applications performant. To remediate a flaky test, developers need to know where to look for the root cause, which can be challenging since it might require manually sifting through many lines of code. One way to identify flakiness is to re-run a test several times and document each time it displays contradictory behavior. This approach can be time consuming, and it is difficult to predict how many re-runs it will take to expose flakiness. So, what can developers do to quickly identify and remediate flakiness in their test suites?
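The manual re-run approach described above can be sketched in a few lines of Python. This is only an illustration: `detect_flakiness`, `is_flaky`, and `sometimes_fails` are hypothetical names, and the default of 20 runs is an arbitrary assumption, since no fixed number of re-runs is guaranteed to expose a flaky test.

```python
from collections import Counter
from typing import Callable


def detect_flakiness(test: Callable[[], None], runs: int = 20) -> Counter:
    """Run the same test repeatedly and tally passes and failures."""
    outcomes: Counter = Counter()
    for _ in range(runs):
        try:
            test()
        except AssertionError:
            outcomes["fail"] += 1
        else:
            outcomes["pass"] += 1
    return outcomes


def is_flaky(outcomes: Counter) -> bool:
    """A test looks flaky if unchanged code produced both outcomes."""
    return outcomes["pass"] > 0 and outcomes["fail"] > 0


# Hypothetical test that fails on every third call, standing in for a
# genuinely nondeterministic test so the example is reproducible.
_calls = {"n": 0}


def sometimes_fails() -> None:
    _calls["n"] += 1
    assert _calls["n"] % 3 != 0


if __name__ == "__main__":
    tally = detect_flakiness(sometimes_fails, runs=6)
    print(dict(tally), "flaky:", is_flaky(tally))
```

A test that only ever passes or only ever fails in this loop is not proven stable. It simply did not show contradictory behavior within the chosen number of runs.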

Best Practices for Identifying and Reducing Flaky Tests

There are a number of techniques that can be used to identify and reduce flaky tests, including:

Configuring automatic test retries. Some tools allow developers to automate test retries to trigger a re-run as soon as the test fails, which allows them to prevent flaky tests from failing entire runs or CI builds.

Adjusting wait times. Increasing wait times gives requests more time to complete before a step is marked as failed.

Checking for memory leaks. Memory leaks can be detrimental to your application’s performance. Developers can implement memory testing tools to eliminate leaks before they affect the application’s end user.
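One common way to adjust wait times without slowing down every run is to poll for a condition up to a generous timeout instead of sleeping for a fixed duration. The Python sketch below assumes a hypothetical helper named `wait_until`, and the timeout and interval values are illustrative rather than recommended settings.

```python
import threading
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout: float = 5.0,
    interval: float = 0.05,
) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def test_job_completes() -> None:
    # Hypothetical background job that finishes after roughly 0.1 seconds.
    done = threading.Event()
    threading.Timer(0.1, done.set).start()

    # A fixed `time.sleep(0.1)` followed by a check could run too early on a
    # slow machine. Polling returns as soon as the job finishes and only
    # fails once the full timeout has elapsed.
    assert wait_until(done.is_set, timeout=2.0)
```

With this pattern, raising the timeout makes the test more tolerant of slow environments while fast runs still finish quickly.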

Leveraging Tools and Techniques to Mitigate Flaky Tests

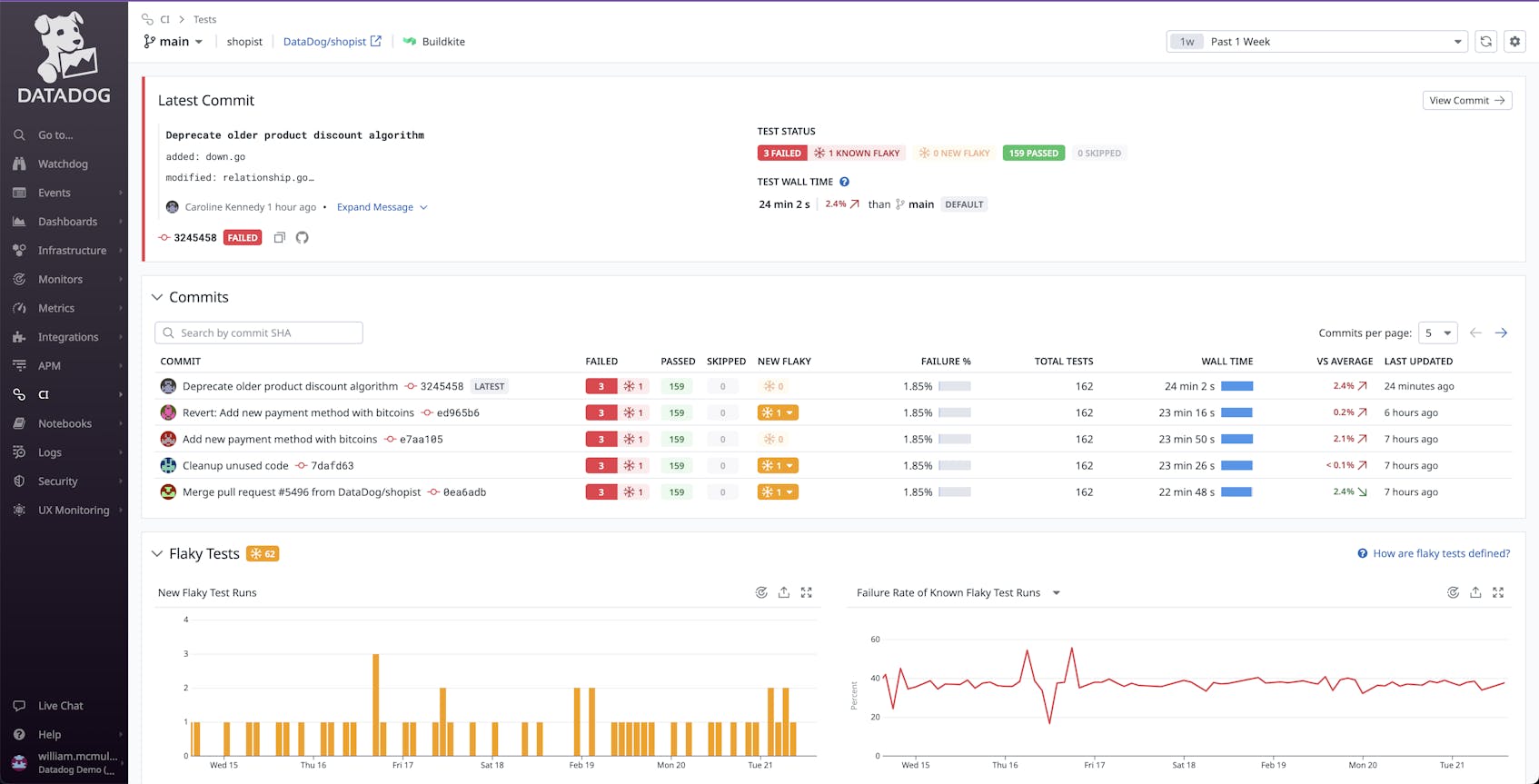

Flaky test management encompasses a collection of tools that quickly detect, report, and resolve flaky tests directly in-product. Many CI visibility platforms give valuable insights into your pipeline health by providing metrics and data from your tests. Some platforms offer detection tools that can surface flaky tests, as well as provide analytics surrounding wait times, test results, the number of flaky tests detected, and more. These types of tools can also help developers prioritize which tests are the most important to investigate and correct.

A strong solution for managing flaky tests will allow you to automate the systems in which you run your tests, as well as collect and display data around those tests. Datadog CI Visibility can help you optimize your test suite by automatically detecting when commits introduce flaky tests and by visualizing data over time to pinpoint trends and regressions. Our Flaky Test summary lets you drill down into test runs to determine which code changes are responsible for test flakiness. You can also set alerts that fire when new flaky tests are detected, for faster investigation and remediation.