What Are Containerized Applications?

Containerized applications are applications that run in isolated runtime environments called containers. Containers encapsulate an application with all its dependencies, including system libraries, binaries, and configuration files. This all-in-one packaging makes a containerized application portable by enabling it to behave consistently across different hosts—allowing developers to write once and run almost anywhere.

Unlike virtual machines, however, containerized applications don’t include their own operating systems. Instead, the containerized applications running on a host system share the existing OS provided by that system, specifically its kernel. Because there is no need to bundle an extra OS with the application, containers are extremely lightweight and launch very quickly. To scale an application, more instances of a container can be added almost instantaneously.

Developers typically use containers to solve two main challenges with application hosting. The first is that engineers often struggle to make applications run consistently across different hosting environments. Even when the base OS of host systems is the same, slight differences among those systems in hardware or software can lead to unexpected differences in behavior, causing, for example, issues to appear in production that were not apparent during staging or development. Containerizing an application avoids this problem by providing a consistent and standardized environment for that application to run in.

The second challenge is that, although any hosted application needs to be isolated from all others to run securely and reliably, achieving this isolation with physical servers is resource-intensive. Though VMs provide this required isolation and are more lightweight than physical servers are, using a VM to isolate an application still requires considerable RAM, storage, and compute resources. Containerization protects applications more efficiently than even VMs do by using OS-native features, such as Linux namespaces and cgroups, to isolate each container from other processes running on the same host.
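As a rough illustration of the cgroups mechanism: on Linux hosts that use cgroup v2, a group's CPU ceiling is exposed in a file named `cpu.max`, which holds a quota and a period in microseconds (or the word `max` when there is no limit). The sketch below only parses that text format. The helper name `parse_cpu_max` is hypothetical, and the sample strings are illustrative values rather than output captured from a real host.

```python
from typing import Optional


def parse_cpu_max(text: str) -> Optional[float]:
    """Return the CPU limit in cores from cgroup v2 `cpu.max` content.

    The file holds "<quota> <period>" in microseconds; a quota of "max"
    means no limit, for which this helper returns None.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


# Illustrative values: 50 ms of CPU time per 100 ms period is half a core.
print(parse_cpu_max("50000 100000"))  # 0.5
print(parse_cpu_max("max 100000"))    # None
```

A container engine typically writes such limits on the container's behalf, so the application inside never has to manage them itself.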

How Do Containerized Applications Work?

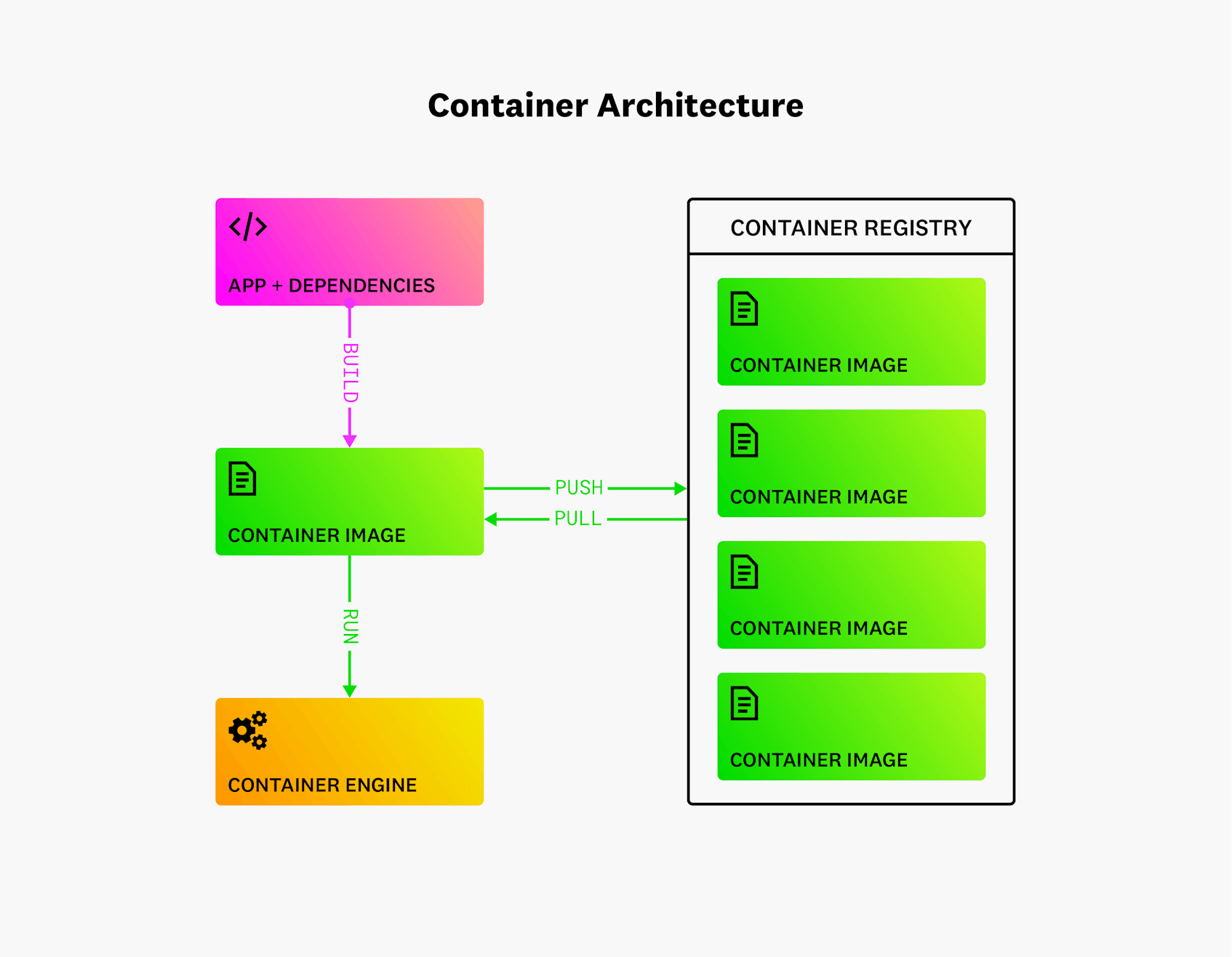

Several components work together to allow applications to run in a containerized environment.

What Are Container Images?

Each container consists of a running process or a group of running processes that is isolated from the rest of the system. When a container is not running, however, it exists only as a saved file called a container image. This container image is a package of the application source code, binaries, files, and other dependencies that will live in the running container. When a containerized application starts, the contents of a container image are copied before they are spun up in a container instance. Each container image can be used to instantiate any number of containers, and for this reason, a container image can be thought of as a container blueprint. Another important point to note about container images is that they can be shared with others via a public or private container registry. To promote sharing and maximize compatibility among different platforms and tools, container images are typically created in the industry-standard Open Container Initiative (OCI) format. Docker is the tool most often used for creating OCI-compliant container images.
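The blueprint idea can be sketched with a toy model. The `Image` and `Container` classes, the file path, and the digest layout below are all assumptions made for illustration. Real engines store images as layers described by a manifest, and this sketch simplifies that to a read-only set of files plus a private writable area per container.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class Image:
    """Toy stand-in for a container image: a name plus read-only files."""
    name: str
    files: Tuple[Tuple[str, bytes], ...]

    def digest(self) -> str:
        # Content-derived identifier in the "sha256:<hex>" style. Real
        # image digests are computed over manifests, not this toy layout.
        h = hashlib.sha256()
        for path, data in sorted(self.files):
            h.update(path.encode())
            h.update(data)
        return "sha256:" + h.hexdigest()


@dataclass
class Container:
    """Toy container: shares the image, owns a private writable area."""
    image: Image
    writable: Dict[str, bytes] = field(default_factory=dict)

    def read(self, path: str) -> bytes:
        # Container-local writes shadow the image's read-only files.
        if path in self.writable:
            return self.writable[path]
        return dict(self.image.files)[path]


image = Image("web", (("/app/config", b"mode=prod"),))
a, b = Container(image), Container(image)
a.writable["/app/config"] = b"mode=debug"  # change is local to container a

print(a.read("/app/config"))  # b'mode=debug'
print(b.read("/app/config"))  # b'mode=prod'
```

Both containers start from the same image, yet a change made in one is invisible to the other, which is why a single image can safely back any number of instances.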

What Are Container Engines?

Container engines refer to the software components that enable the host OS to act as a container host. A container engine accepts user commands to build, start, and manage containers through client tools (including CLI-based or graphical tools), and it also provides an API that enables external programs to make similar requests. But the most fundamental aspects of a container engine’s functionality are performed by its core component, called the container runtime. The container runtime is responsible for creating the standardized platform on which applications can run, for running containers, and for handling containers’ storage needs on the local system. Some popular container engines include Docker Engine, CRI-O, and containerd.

The Role of Container Orchestration Tools

Container orchestrators provide automated management for containerized applications, especially in environments in which large numbers of containers are running on multiple hosts. In complex environments such as these, orchestrators are usually needed to handle operations such as deploying and scaling the containers. Kubernetes and Amazon Elastic Container Service (ECS) are examples of popular container orchestration tools.
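One way to picture what an orchestrator automates is a reconcile step that compares the number of containers that should be running with the ones that are. The following is a minimal sketch of that idea, not how Kubernetes or ECS is implemented. The function name `reconcile` and the `web-N` naming scheme are hypothetical.

```python
from typing import List


def reconcile(desired: int, running: List[str], prefix: str = "web") -> List[str]:
    """Return the container names that should run after one reconcile pass."""
    result = list(running)
    # Scale up: add hypothetical new instances until the count matches.
    next_id = 0
    while len(result) < desired:
        name = f"{prefix}-{next_id}"
        if name not in result:
            result.append(name)
        next_id += 1
    # Scale down: keep the first `desired` entries and drop the rest.
    return result[:desired]


print(reconcile(4, ["web-0", "web-1"]))  # ['web-0', 'web-1', 'web-2', 'web-3']
print(reconcile(1, ["web-0", "web-1"]))  # ['web-0']
```

A real orchestrator would repeat a step like this continuously and across many hosts, and would also decide where each new container is placed.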

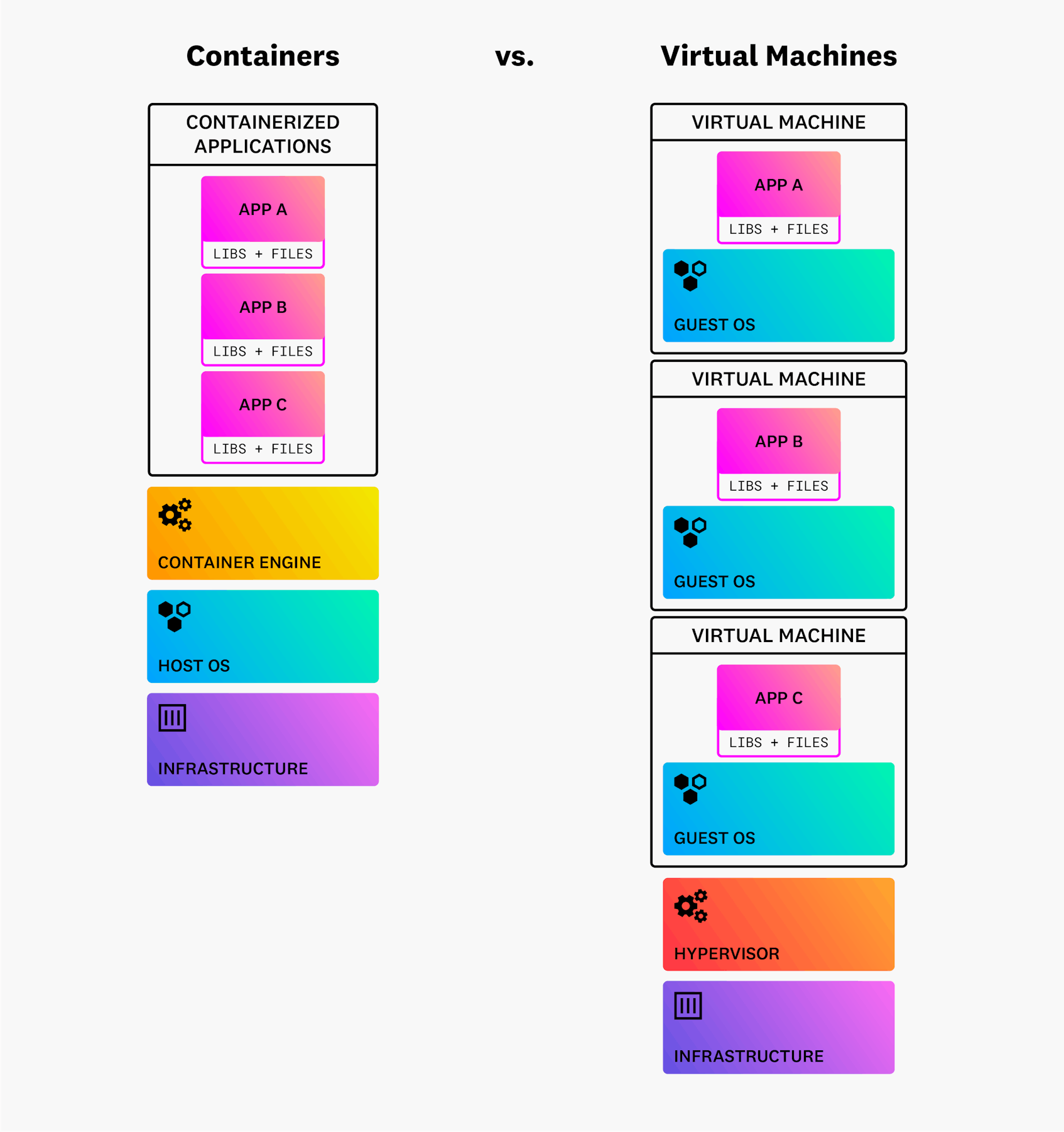

Containers vs. Virtual Machines

Containers and VMs can both provide a secure, reliable, and consistent runtime environment for hosted applications, but they offer different approaches. When multiple VMs run on the same host, each VM must include its own OS, along with the files and libraries the VM needs to support the application it is hosting. A hypervisor is software that runs the VMs on the underlying infrastructure. On the other hand, when multiple containers run on the same host, they all share the OS of that host and don’t require their own copy of an OS. As a result, containers are far more lightweight than VMs are, spin up and down much more quickly, and consume fewer resources.

The flip side of that efficiency, however, is weaker security. VMs provide stronger isolation and security for applications than containers do. Because each VM includes its own OS, a strong security boundary exists between co-hosted VMs and between each VM and the host system. Containers, on the other hand, share resources with the host OS, which leaves each container more open to any vulnerabilities in the host system.

Containers vs. Serverless

Both containers and serverless computing allow teams to deploy applications more efficiently, but they differ in fundamental ways. The purpose of containerization is to provide a secure, reliable, and lightweight runtime environment for applications that is consistent from host to host. In contrast, the purpose of serverless technology is to provide a way to build and run applications without having to consider the underlying hosts at all. To support serverless applications, a cloud provider provisions and deallocates servers as needed behind the scenes.

Sometimes, teams may benefit from using containers and serverless computing together. For instance, the core of your application may run on containers, but some supplementary backend tasks, such as user authentication, may run on serverless functions.

When To Use Containerized Applications

Containers are commonly used to host applications today, and they’re especially well-suited for these use cases:

Microservices

Microservices-based applications are made up of many independent components, each of which is typically deployed in a container. The individual containers work together to form a cohesive application. This approach to application design provides the advantages of efficient scaling and updating. Instead of scaling up the entire application to handle increased load, only containers that receive the greatest load need to be scaled. Similarly, individual containers can be updated rather than the whole application.
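To make the scaling advantage concrete, the sketch below sizes each service independently from its own load. The service names, request rates, and per-instance capacity are hypothetical, and the sizing rule (load divided by capacity, rounded up, with a minimum of one instance) is a simplifying assumption rather than any particular platform's autoscaling policy.

```python
import math
from typing import Dict


def replicas_needed(load_rps: Dict[str, float], capacity_rps: float) -> Dict[str, int]:
    """Size each service on its own: ceil(load / per-instance capacity), min 1."""
    return {
        service: max(1, math.ceil(load / capacity_rps))
        for service, load in load_rps.items()
    }


# Hypothetical traffic: only "checkout" is under heavy load.
load = {"catalog": 80.0, "checkout": 450.0, "profile": 20.0}
print(replicas_needed(load, capacity_rps=100.0))
# {'catalog': 1, 'checkout': 5, 'profile': 1}
```

Only the busy service gains instances. A monolithic deployment would have to replicate all three components to absorb the same load.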

CI/CD Pipelines

Containerized applications allow teams to easily test applications in parallel and speed up their Continuous Integration/Continuous Delivery (CI/CD) pipelines by spinning up as many container instances as needed. Also, since containers are portable between different host systems, running a containerized application in a test environment will closely reflect how it will perform in production.

Repetitive Jobs

Containers are effective for recurring background processes, such as batch jobs and database jobs. With containers, each job can run without interrupting other jobs that are occurring simultaneously.

DevOps

Containerized applications make it possible to quickly create a consistent and lightweight runtime environment for an application. These advantages give DevOps teams more agility as they build, test, deploy, and iterate applications.

What Are the Benefits of Containerized Applications?

Here is a summary of the important benefits of containerizing your applications:

Scalability

Through containerization, container instances can be added quickly and efficiently to handle increased application load. VMs by comparison require more resources on each host system, which limits the number of VMs you can add. They also take longer to spin up when the load increases, reducing responsiveness.

Portability

After you create your container image, you can use it to deploy a containerized application on any platform that provides access to the same base OS. This portability gives you flexibility throughout the lifecycle of your application.

Quick Creation and Deployment

Creating a new container is faster than creating a new VM, which requires more decisions about configuration settings and resource allocation.

Lightweight

Containers hold only the dependencies needed for your application and don’t require a separate OS, so they start up much more quickly than VMs do on any host that supports them.

Fault Isolation

Since each containerized process runs in an isolated space, a bug in one container won’t affect other processes running on other containers or on the local system. This separation allows teams to limit the scope of incidents.

What Are the Challenges of Containerized Applications?

Containers also pose some important challenges and limitations:

Lower Built-in Security than with VMs

Namespaces isolate a container’s running processes from those outside the container, and cgroups allow each container on a host to receive a controlled share of resources from the host OS. However, because containers on the same host system share the same OS, any existing vulnerabilities in the host OS can impact all containers on the host. Additionally, an attacker who gains access to just a single container could potentially access the host itself or other containers (if network settings are compromised).

Lack of Built-in Persistent Storage

Data written inside a container does not outlive that container: when the container is removed, the data in its writable layer is lost. To keep the data, you need persistent storage that exists outside the container, such as a volume. Most orchestration tools provide persistent storage, but the quality and implementation of offerings vary among vendors.
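The difference between container-local data and persistent storage can be sketched with a toy model in which a plain dictionary stands in for an external volume. The class name `ToyContainer` and the keys used here are hypothetical, and no real container engine is involved.

```python
from typing import Dict, Optional


class ToyContainer:
    """Toy container with a private filesystem and an optional shared volume."""

    def __init__(self, volume: Optional[Dict[str, str]] = None) -> None:
        self.local: Dict[str, str] = {}  # discarded with the container
        self.volume = volume             # owned outside the container

    def write(self, key: str, value: str) -> None:
        # Prefer the volume when one is mounted; otherwise write locally.
        target = self.volume if self.volume is not None else self.local
        target[key] = value


volume: Dict[str, str] = {}

first = ToyContainer(volume)
first.write("orders", "42")
del first                        # the container is gone, the volume is not

second = ToyContainer(volume)    # a replacement mounts the same volume
print(second.volume["orders"])   # 42

ephemeral = ToyContainer()
ephemeral.write("orders", "42")
replacement = ToyContainer()     # starts from a clean local filesystem
print("orders" in replacement.local)  # False
```

The replacement container sees the earlier data only when that data was written to storage that lives outside any single container.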

Potential for Sprawl

A benefit of containers is how quickly they can be created, but this advantage can also easily lead to uncontrolled container sprawl and administrative complexity.

Monitoring Challenges

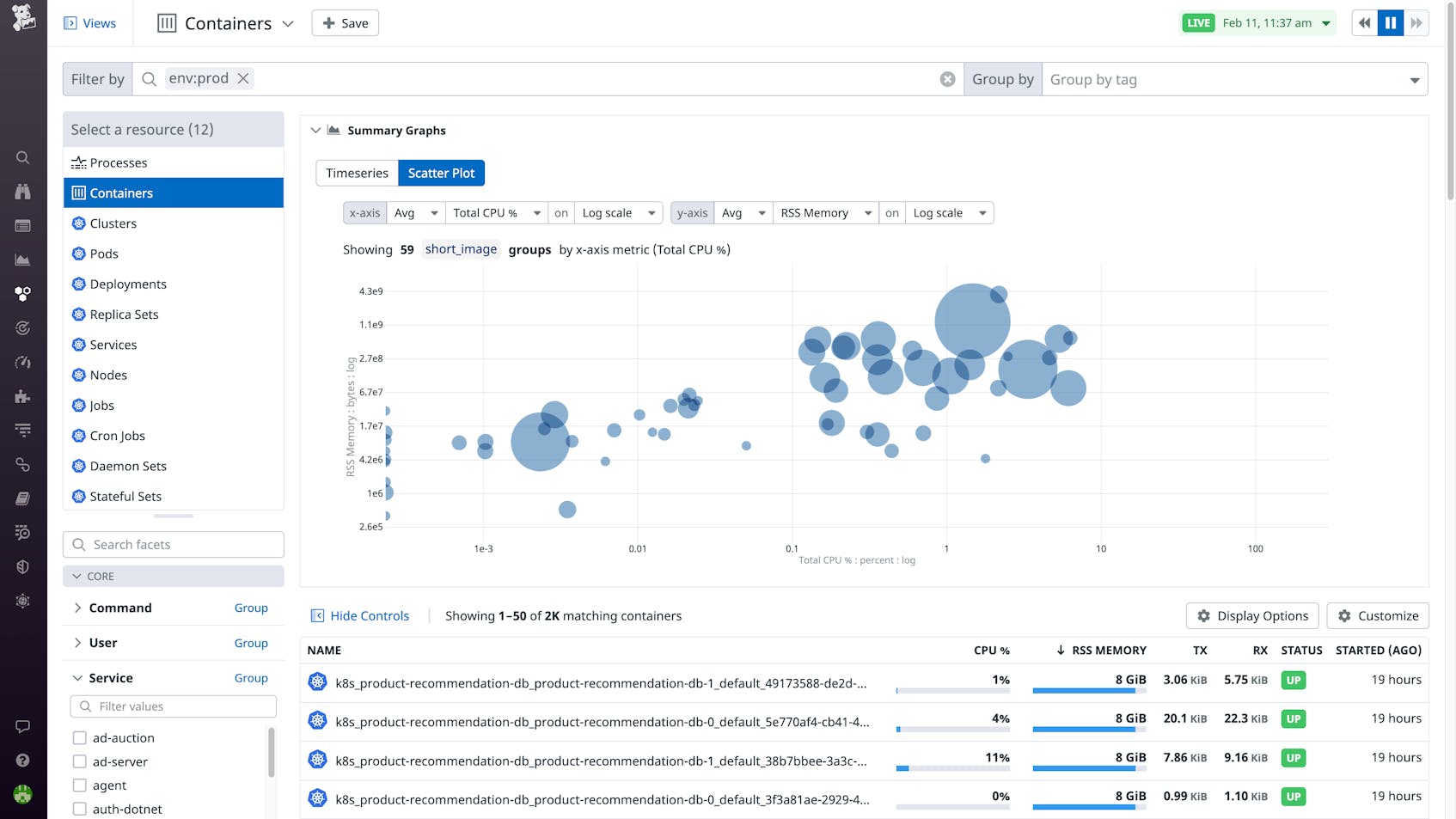

Because containers can be spun up and down so quickly, teams often find it difficult to keep track of which containers are running. In fact, containers churn 12 times faster than traditional hosts, which makes it virtually impossible to track containers manually.
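A simple way to reason about churn is to compare two snapshots of running container IDs taken one polling interval apart. The sketch below does only that comparison. The IDs are made up, and a real monitoring agent would obtain the snapshots from the container engine rather than from hard-coded sets.

```python
from typing import Set, Tuple


def diff_snapshots(before: Set[str], after: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Return (started, stopped) container IDs between two snapshots."""
    return after - before, before - after


# Hypothetical container IDs observed one polling interval apart.
before = {"a1", "b2", "c3"}
after = {"b2", "d4", "e5"}
started, stopped = diff_snapshots(before, after)
print(sorted(started))  # ['d4', 'e5']
print(sorted(stopped))  # ['a1', 'c3']
```

Containers that start and stop between two polls never appear in either snapshot, which is one reason automated, event-driven discovery tends to work better than periodic manual checks.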

How to Monitor Containerized Applications

Monitoring containerized applications requires visibility at multiple layers of the tech stack. Not only do you need visibility into container metrics, but you also need to observe the health and performance of the host and your applications themselves.

Datadog Infrastructure Monitoring provides metrics, visualizations, and alerting for any backend infrastructure, including containerized applications. With Live Containers, teams can get real-time visibility into their containers. Datadog automatically discovers and starts collecting metrics from new containers as soon as they spin up. The Container Map shows you at a glance if any containers are experiencing errors, and if you’re using Kubernetes, you can also get data on clusters, nodes, pods, and other resources. Beyond metrics, Datadog also allows you to correlate container metrics with application logs and traces in the same platform. Finally, Datadog’s integrations with Kubernetes, Docker, and other container technologies give you complete visibility into your entire container environment.