What is container orchestration?

Container orchestration is the automated process of deploying, managing, and coordinating all the containers that are used to run an application. Engineering teams often use orchestration technologies, such as Kubernetes, to manage containerized applications throughout the entire software lifecycle, from development and testing to deployment and monitoring.

What problems does container orchestration solve?

Container orchestration helps reduce the difficulty of managing resources in containerized applications. When containerization first became popular, teams began containerizing simple, single-service applications to make them more portable and lightweight, and managing those isolated containers was relatively easy. But as engineering teams began to containerize each service within multi-service applications, those teams soon had to contend with managing an entire container infrastructure. It was challenging, for example, to manage the network communication among multiple containers and to add and remove containers as needed for scaling.

Today, a containerized application can comprise dozens or even hundreds of different containers, which makes managing it manually impractical. Engineering teams need automation to handle tasks such as traffic routing, load balancing, and securing communication, as well as managing secrets such as passwords, tokens, SSH keys, and other sensitive data. Service discovery presents an additional challenge, because containerized services must be able to find each other and communicate securely and reliably. Finally, multi-container applications require application-level awareness of the health of each component container so that failed containers can be restarted or removed as needed. Providing that health information across all application components requires an overarching, cluster-aware orchestrator.

How does container orchestration solve these problems?

Container orchestration streamlines the process of deploying, scaling, configuring, networking, and securing containers, freeing up engineers to focus on other critical tasks. Orchestration also helps ensure the high availability of containerized applications by automatically detecting and responding to container failures and outages.
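
To make "detecting and responding to container failures" more concrete, here is a minimal sketch of a restart policy with capped exponential backoff. The default delays (10 seconds, doubling on each failure, capped at five minutes) follow the values Kubernetes documents for restarting crashed containers; the function names, the simulated container, and the `max_restarts` limit (added only so the simulation terminates) are hypothetical. The sketch records the delays instead of actually sleeping.

```python
# Hypothetical sketch: restarting a failed container with capped exponential backoff.
# Defaults (10 s initial delay, doubling, 300 s cap) follow the values Kubernetes
# documents for crashed containers; everything else is illustrative.

def backoff_delay(restart_count: int, initial: float = 10.0, cap: float = 300.0) -> float:
    """Return the delay in seconds before restart number `restart_count` (0-based)."""
    return min(initial * (2 ** restart_count), cap)


def supervise(run_container, max_restarts: int = 8) -> list[float]:
    """Run a container callable and 'restart' it after each crash.

    `run_container` returns True on a clean exit and False on a crash.
    Instead of sleeping, the function records each delay it would have waited.
    """
    delays: list[float] = []
    for attempt in range(max_restarts + 1):
        if run_container():
            break  # clean exit: nothing to restart
        if attempt == max_restarts:
            break  # hypothetical limit so the simulation always ends
        delays.append(backoff_delay(attempt))
    return delays


if __name__ == "__main__":
    # A simulated container that crashes on its first six runs, then succeeds.
    outcomes = iter([False] * 6 + [True])
    print(supervise(lambda: next(outcomes)))
    # prints [10.0, 20.0, 40.0, 80.0, 160.0, 300.0]
```

The cap keeps a persistently failing container from being retried in a tight loop while still letting a transient failure recover quickly.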

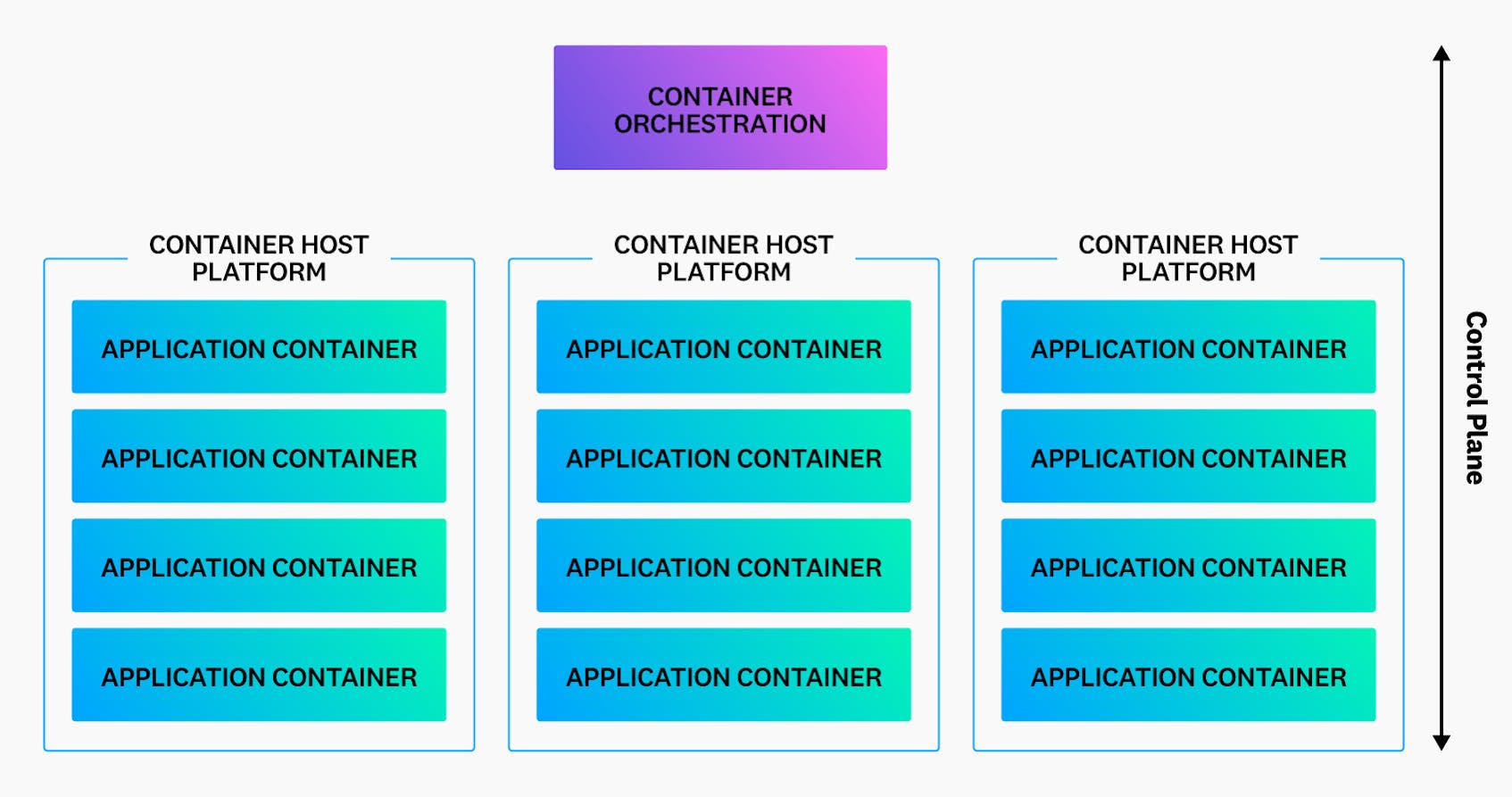

Different container orchestrators implement automation in different ways, but they all rely on a common set of components called a control plane. The control plane provides a mechanism to enforce policies from a central controller to each container. It essentially automates the role of the operations engineer, providing a software interface that connects to containers and performs various management functions.
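
One common way for a control plane to automate the operator's role is a control loop (Kubernetes calls its implementations controllers). The loop repeatedly compares the desired state with the observed state and acts to close the gap. The sketch below shows a single pass of such a loop for replica counts. It is a hypothetical, in-memory illustration: the `reconcile` function, the dictionaries of services, and the generated container IDs are assumptions, not any orchestrator's API.

```python
# Hypothetical sketch of one pass of a control-plane reconciliation loop.
# Desired state: how many replicas each service should have.
# Observed state: the IDs of the containers currently running for each service.
import itertools

_ids = itertools.count(1)  # source of unique, illustrative container IDs


def reconcile(desired: dict[str, int], observed: dict[str, list[str]]) -> list[str]:
    """Start or stop containers until `observed` matches `desired`.

    Mutates `observed` in place and returns a log of the actions taken.
    """
    actions: list[str] = []
    # Scale every desired service up or down to its target replica count.
    for service, want in desired.items():
        running = observed.setdefault(service, [])
        while len(running) < want:
            cid = f"{service}-{next(_ids)}"
            running.append(cid)
            actions.append(f"start {cid}")
        while len(running) > want:
            actions.append(f"stop {running.pop()}")
    # Stop services that are running but no longer desired.
    for service in list(observed):
        if service not in desired:
            for cid in observed.pop(service):
                actions.append(f"stop {cid}")
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 1}
    observed = {"web": ["web-a"], "legacy": ["legacy-a"]}
    print(reconcile(desired, observed))
    # prints ['start web-1', 'start web-2', 'start worker-3', 'stop legacy-a']
    print(reconcile(desired, observed))  # prints [] because nothing has drifted
```

Because a second pass does nothing once the states match, the loop can safely run continuously, correcting drift (for example, a crashed container) whenever it appears.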

Imposing order on the container infrastructure

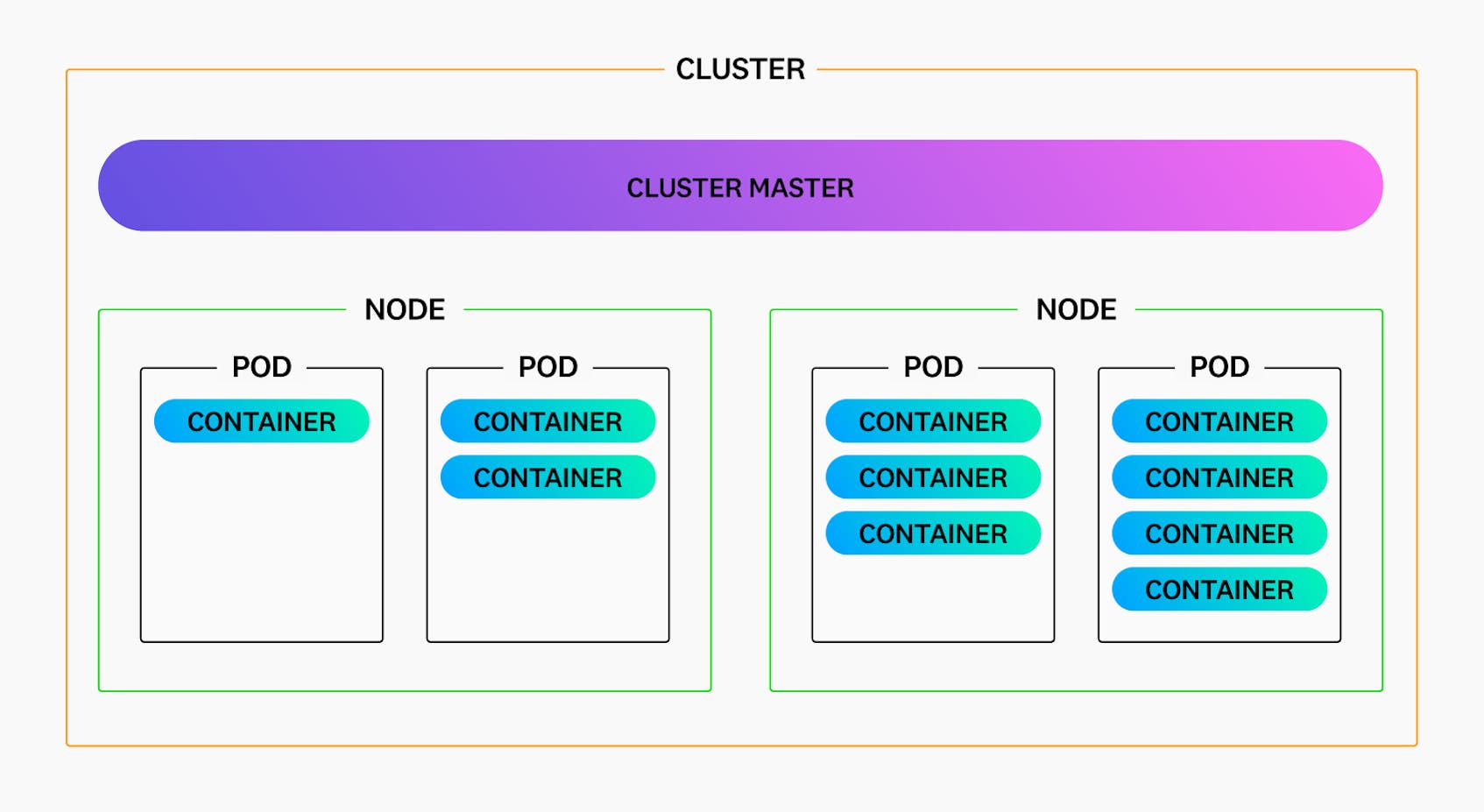

Container orchestration also reduces complexity by organizing the components of a container infrastructure into a unified and manageable whole. For example, through orchestration, containers can be organized into groups called pods, which allow containers to share storage, network, and compute resources. Meanwhile, nodes are the physical or virtual machines that host the containers. The term cluster is usually given to collections of nodes that work together to provide the resources needed to run a single containerized application. Finally, a cluster master or master node typically acts as a control point for a cluster.

Although the specific terminology can differ among orchestrator technologies, components within a container infrastructure typically relate to each other as illustrated in the following image:
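
The relationships described in this section can also be expressed as a small data model. The sketch below uses Kubernetes-style names (cluster, node, pod, container), but the classes, fields, and example image names are hypothetical simplifications, not the Kubernetes object schema.

```python
# Hypothetical data model of a container infrastructure:
# a cluster contains nodes, nodes host pods, and pods group containers.
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    name: str
    containers: list[Container]
    # Storage shared by the pod's containers (simplified to volume names).
    shared_volumes: list[str] = field(default_factory=list)


@dataclass
class Node:
    name: str  # a physical or virtual machine
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    control_plane: str  # the node acting as the cluster's control point
    nodes: list[Node] = field(default_factory=list)

    def all_containers(self) -> list[str]:
        """List every container in the cluster as 'node/pod/container'."""
        return [
            f"{node.name}/{pod.name}/{c.name}"
            for node in self.nodes
            for pod in node.pods
            for c in pod.containers
        ]


if __name__ == "__main__":
    web = Pod(
        "web",
        [Container("app", "example/app:1.0"), Container("log-shipper", "example/shipper:1.0")],
        shared_volumes=["logs"],
    )
    cluster = Cluster("demo", control_plane="cp-1", nodes=[Node("node-1", [web]), Node("node-2")])
    print(cluster.all_containers())  # prints ['node-1/web/app', 'node-1/web/log-shipper']
```

Grouping the app and its log shipper in one pod reflects the idea that containers which must share storage (here, a hypothetical `logs` volume) are placed together on the same node.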

Configuring automation

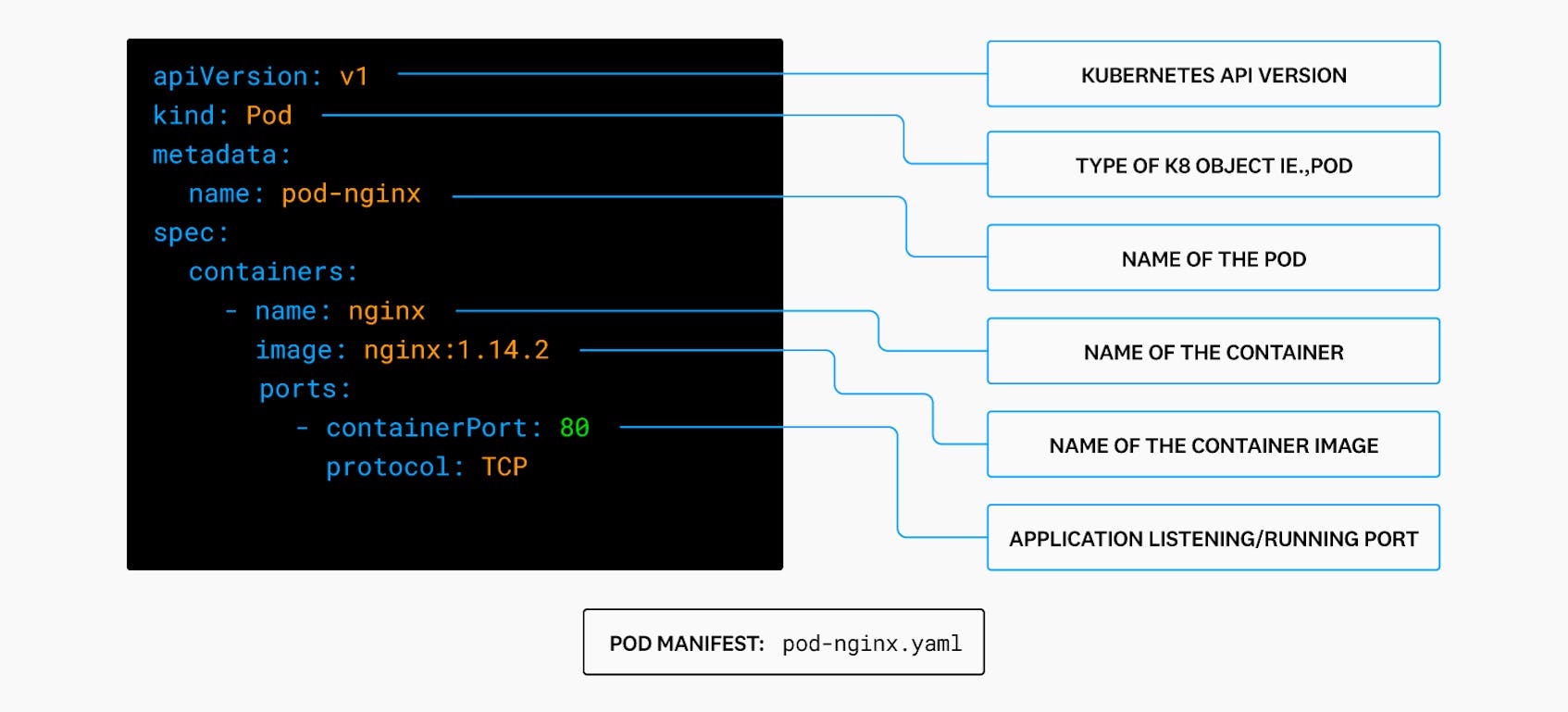

When working with a container orchestrator, engineers normally use configuration files in YAML or JSON format to define the “desired state” of system components. These configuration files determine various behaviors, such as how the orchestrator should create networks between containers or mount storage volumes. By defining the desired state, engineering teams can delegate the operational burden of maintaining the system to the orchestrator.

As an example of a configuration file, the following image shows a portion of a YAML file used to configure a pod in Kubernetes:
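
Since these configuration files can be written in JSON as well as YAML, here is a minimal sketch that builds a comparable Kubernetes Pod definition as a Python dictionary and serializes it to JSON. The `apiVersion`, `kind`, `metadata`, `spec.containers`, `ports`, and `resources.requests` fields follow the Kubernetes Pod schema; the pod name, label, image, port, and resource values are placeholders, and this is not the file shown in the image.

```python
# Minimal sketch: a Kubernetes Pod manifest built in Python and printed as JSON.
# Field names follow the Kubernetes Pod schema; the values are placeholders.
import json


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Return a single-container Pod definition describing a desired state."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                    # Resource requests tell the scheduler what the container needs.
                    "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                }
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_manifest("web", "nginx:1.25", 80), indent=2))
```

The printed JSON could be saved to a file (for example, a hypothetical `web-pod.json`) and submitted with `kubectl apply -f web-pod.json`. Note that the manifest only declares what should exist; the orchestrator decides how and where to make it so.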

Container orchestration at scale

Container orchestration dramatically reduces the administrative overhead associated with managing containerized environments. It improves almost every facet of managing containerized applications at scale, including:

- Deployments

Deploying microservice-based applications normally requires a number of containerized services to be deployed in a sequence. The orchestrator can handle the complexity of these deployments in an automated way.

- Scheduling

An orchestrator automates scheduling by overseeing resources, assigning pods to particular nodes, and helping to ensure that resources are used efficiently in the cluster.

- Scaling

Orchestration allows a containerized application to handle requests efficiently by automatically scaling the number of running containers up or down as demand changes.

- Traffic routing

When an orchestrator is available, containers in an application can communicate with each other through routing that the orchestrator manages, such as stable service names, rather than having to track each other's changing addresses directly.

- Load balancing

An orchestrator normally handles many aspects of network management, including load balancing traffic across the containers that run a service.

- Security

Orchestration eases administrative burden by taking on the responsibility of securing inter-service communication at scale.

- Service discovery

An orchestrator gives each service a stable endpoint, such as a DNS name or virtual IP address, that other services can use to reach it. This creates a consistent and highly available service model that does not depend on the state of individual containers.

- Health monitoring

An orchestrator can readily plug into monitoring platforms like Datadog to gain visibility into the health and status of every service.
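
The scheduling item above can be illustrated with a simplified scheduler. The Kubernetes scheduler places a pod in two broad steps: filtering out nodes that cannot fit it, then scoring the remaining candidates. The sketch below imitates that shape with a single scoring rule (prefer the node left with the most free CPU). The `NodeState` type, the millicore and MiB units, and the scoring rule are assumptions chosen for illustration, not the real scheduler's logic.

```python
# Simplified scheduler sketch: filter nodes that can fit a pod, then score them.
# Units: CPU in millicores, memory in MiB. The scoring rule is illustrative only.
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeState:
    name: str
    free_cpu: int  # millicores still available
    free_mem: int  # MiB still available


def schedule(pod_cpu: int, pod_mem: int, nodes: list[NodeState]) -> Optional[str]:
    """Pick a node for a pod and reserve its resources; return None if none fit."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes if n.free_cpu >= pod_cpu and n.free_mem >= pod_mem]
    if not feasible:
        return None  # the pod stays pending until capacity frees up
    # Score: prefer the node left with the most free CPU, then memory (spreads load).
    best = max(feasible, key=lambda n: (n.free_cpu - pod_cpu, n.free_mem - pod_mem))
    best.free_cpu -= pod_cpu
    best.free_mem -= pod_mem
    return best.name


if __name__ == "__main__":
    nodes = [NodeState("node-a", 1000, 2048), NodeState("node-b", 3000, 1024)]
    requests = [(500, 512), (500, 1024), (2000, 256), (1000, 100)]
    print([schedule(cpu, mem, nodes) for cpu, mem in requests])
    # prints ['node-b', 'node-a', 'node-b', None]
```

In the usage example, the second pod lands on `node-a` even though `node-b` has more CPU, because `node-b` no longer has enough memory; the last pod fits nowhere and would remain unscheduled.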
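
For the scaling item, the Kubernetes Horizontal Pod Autoscaler documents a proportional rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule, skips scaling when the ratio is within a tolerance of 1.0, and clamps the result to minimum and maximum bounds. The 10% tolerance mirrors the HPA's documented default; the function name and the default bounds are illustrative assumptions.

```python
# Sketch of a proportional autoscaling rule, modeled on the formula the
# Kubernetes Horizontal Pod Autoscaler documents:
#   desired = ceil(current_replicas * current_metric / target_metric)
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Return how many replicas to run so the per-replica metric nears the target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(desired, max_replicas))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # prints 6: 90% average CPU vs. a 60% target
    print(desired_replicas(4, 30, 60))   # prints 2: load halved, so replicas halve
    print(desired_replicas(4, 63, 60))   # prints 4: within tolerance, no change
    print(desired_replicas(8, 200, 50))  # prints 10: capped at max_replicas
```

The tolerance band prevents the replica count from flapping in response to small metric fluctuations.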

What are the current standards for container orchestration?

The rise of container orchestration through Kubernetes has been one of the largest shifts in the industry in recent years. Today, Kubernetes is widely considered the de facto standard for container orchestration.

Kubernetes, however, is not the only option. Organizations can also choose from orchestration tools such as Docker Swarm, Apache Mesos, Red Hat OpenShift, HashiCorp Nomad, and Amazon ECS. Options also include newer “serverless” solutions, such as AWS Fargate, that abstract away the infrastructure supporting containers so that customers do not have to provision or manage servers.

When to host and when to use managed services

Orchestration service offerings are generally divided into two categories: managed and unmanaged.

Managed services, such as Amazon ECS, Amazon EKS, and Google Kubernetes Engine (GKE), reduce the operational burden of setting up and managing an orchestration solution. A managed service provider offers the customer a simpler interface and accepts operational responsibility for the infrastructure, typically at a higher price than unmanaged options. Unmanaged options, which usually involve running an orchestrator on self-managed nodes, offer more flexibility, but they provide only basic infrastructure that the customer must configure and support independently. To further illustrate the distinction, a team of 5–10 developers probably will not have the resources or knowledge to handle an unmanaged orchestration solution. However, a large enterprise organization may require a proprietary configuration or a complex system architecture that can only be achieved with a self-managed deployment.

What are some challenges associated with container orchestration?

Kubernetes and other container orchestrators may not be suitable for all applications. Containerization typically favors architectures that do not need to persist application state or user sessions for long periods. If a container orchestrator needs to handle a large number of long-running user sessions, it might have limited ability to balance and scale workloads, because sessions tied to a particular container cannot easily be moved to another one.
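
The difficulty with long-running sessions can be seen in a toy model of session affinity ("sticky sessions"), where each session stays on the replica that first served it. In the hypothetical sketch below, adding a replica does not move any existing sessions, so the new capacity helps only with sessions that start afterward. The `StickyBalancer` class and its least-loaded assignment rule are illustrative assumptions, not the behavior of any particular load balancer.

```python
# Toy model of session affinity: once assigned, a session stays on its replica.
from collections import Counter


class StickyBalancer:
    def __init__(self, replicas: list[str]):
        self.replicas = list(replicas)
        self.assignment: dict[str, str] = {}  # session ID -> replica

    def route(self, session_id: str) -> str:
        """Send known sessions to their replica; new ones to the least-loaded replica."""
        if session_id not in self.assignment:
            load = self.load()
            self.assignment[session_id] = min(self.replicas, key=lambda r: load[r])
        return self.assignment[session_id]

    def add_replica(self, name: str) -> None:
        # Scaling out adds capacity, but existing sessions are not migrated.
        self.replicas.append(name)

    def load(self) -> Counter:
        """Count active sessions per replica, including replicas with none."""
        counts = Counter({r: 0 for r in self.replicas})
        counts.update(self.assignment.values())
        return counts


if __name__ == "__main__":
    lb = StickyBalancer(["r1", "r2"])
    for i in range(6):
        lb.route(f"user-{i}")
    lb.add_replica("r3")
    for i in range(6):
        lb.route(f"user-{i}")  # existing sessions keep their original replica
    print(dict(lb.load()))  # prints {'r1': 3, 'r2': 3, 'r3': 0}
```

Until existing sessions end, the new replica `r3` stays idle while `r1` and `r2` carry the full load, which is exactly the balancing limitation described above.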

It’s also important to remember that container orchestration increases the complexity of an application infrastructure. Most solutions, after all, do not run completely on their own. Engineering teams need to use additional tools (often command-line tools), each with its own learning curve, to manage networking, state, and service discovery effectively in an orchestration infrastructure. Smaller development teams often do not have the resources to handle this challenge, so they might need to take on the additional cost of engaging a third party for management help. Alternatively, they might opt to deploy their workloads on a managed solution and, in so doing, end up with high operating expenses or vendor lock-in.

Container orchestration observability

The complexity of managing an orchestration solution extends to monitoring and observability as well. A large container deployment normally produces a large volume of performance data that needs to be ingested, visualized, and interpreted with the help of observability tools. To be effective, your observability solution needs to make this process as easy as possible and help teams quickly find and fix issues within these complex environments.

Datadog offers powerful container orchestration monitoring for every deployment size, container infrastructure, and implementation scenario. It supports self-managed container orchestration as well as popular managed orchestration platforms such as Amazon ECS and EKS, Microsoft AKS, and GKE, with platform-specific integrations and custom out-of-the-box (OOTB) dashboards for each. It is also built to monitor large clusters with hundreds or thousands of nodes. The Datadog Cluster Agent, for example, reduces the load on the Kubernetes API server to streamline cluster-level monitoring at any scale. Datadog also facilitates monitoring large infrastructures through its Autodiscovery feature, which automatically detects when containers start on a node, discovers the services running in them, and begins collecting the associated metrics. Other Datadog features, such as Recommended Monitors and Live Containers with curated views, further reduce the complexity of monitoring these environments regardless of their size.