What Are Serverless Microservices?

Serverless microservices are cloud-based services that use serverless functions to perform highly specific roles within an application. Serverless functions, which execute small segments of code in response to events, are modular and easily scalable, making them well-suited for microservice-based architectures. Serverless functions can also be easily integrated with a range of managed services, which minimizes the overhead that is often associated with other microservice implementations.

In this article, we’ll discuss how serverless microservices are structured, the benefits and challenges of adopting them, and the tools you can use to monitor their performance.

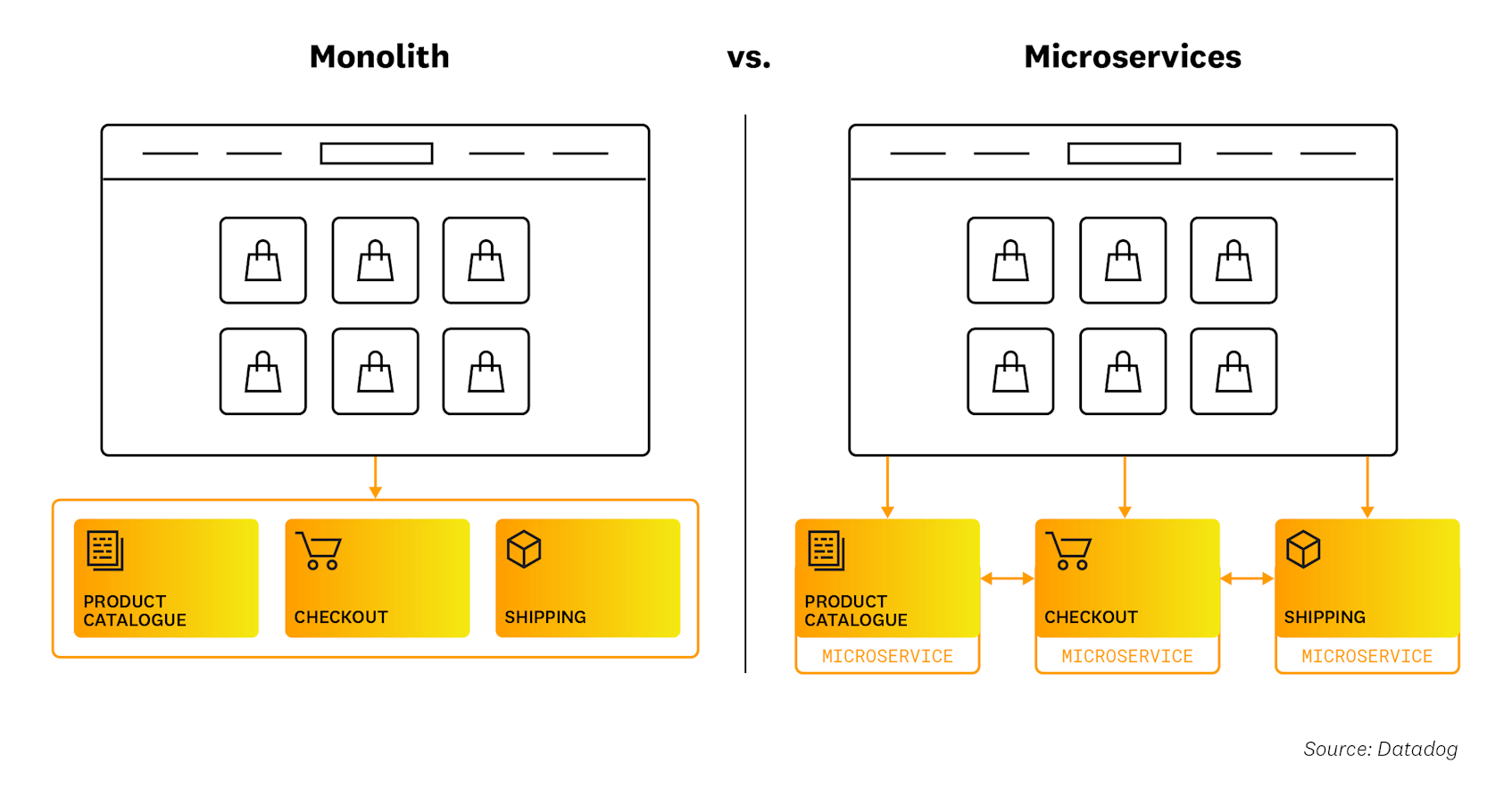

Monoliths vs. Microservices

Whereas monolithic applications are built and deployed as a single unit, microservice-based applications consist of multiple small services that can be scaled and managed independently of one another. For example, an e-commerce application may include different microservices for the product catalog, checkout workflow, and shipping process, and each microservice may use its own language, database, and libraries. This design paradigm increases an application’s resilience, as an error in one service won’t necessarily affect others. Microservices can be hosted on containers, virtual machines, on-premises servers, or serverless functions.

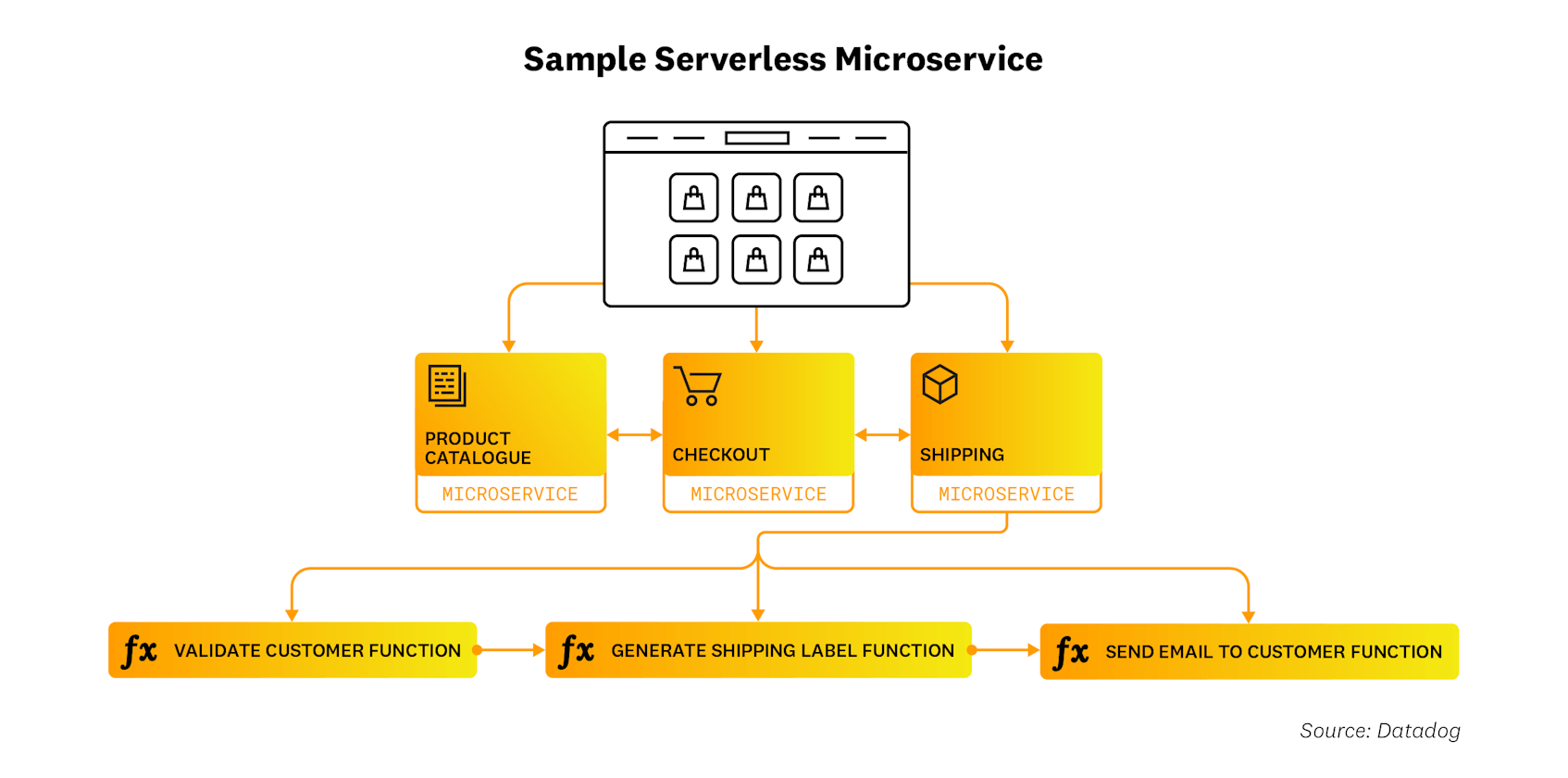

How Do Serverless Microservices Work?

Serverless microservices are built with serverless functions, which execute small blocks of code in response to HTTP requests, file uploads, database updates, and other events. Cloud providers manage all of the underlying infrastructure that is necessary to run function code, which reduces operational overhead and enables developers to focus on application logic.

A single microservice may include one or more functions that are deployed together. For instance, a shipping microservice for an e-commerce site might be broken down into a series of functions. When an order is marked as ready to ship, that event could trigger a function that validates the customer’s address. A successful validation could trigger another function that generates a shipping label. Finally, creation of the label could trigger a third function that sends a shipping confirmation email to the customer.
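The shipping example can be sketched as three small functions chained together by events. This is a minimal illustration rather than a real deployment: the in-memory `publish`/`on` helpers stand in for whatever managed event bus or queue a cloud provider would supply, and the event names, payload fields, and address check are all assumptions made for the sketch.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical in-memory stand-in for a managed event bus or queue.
_subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)
sent_emails: list[dict] = []  # records "sent" emails so the flow can be inspected


def on(event_type: str):
    """Register a function to run whenever event_type is published."""
    def register(handler):
        _subscribers[event_type].append(handler)
        return handler
    return register


def publish(event_type: str, payload: dict) -> None:
    """Deliver an event to every function subscribed to it."""
    for handler in list(_subscribers[event_type]):
        handler(payload)


@on("order.ready_to_ship")
def validate_address(order: dict) -> None:
    # Placeholder check; a real function would call an address validation service.
    if order.get("address", "").strip():
        publish("address.validated", order)
    else:
        publish("address.rejected", order)


@on("address.validated")
def generate_label(order: dict) -> None:
    # Assumed label format, for illustration only.
    label_id = f"LBL-{order['order_id']}"
    publish("label.created", {**order, "label_id": label_id})


@on("label.created")
def send_confirmation(shipment: dict) -> None:
    # Stand-in for a call to an email service.
    sent_emails.append({"to": shipment["email"], "label_id": shipment["label_id"]})


publish(
    "order.ready_to_ship",
    {"order_id": "1001", "email": "pat@example.com", "address": "1 Main St"},
)
```

Each function knows only about the event it consumes and the event it emits, so any one of them could be updated or replaced without touching the other two.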

Benefits and Use Cases of Serverless Microservices

While serverless microservices carry the general advantages of serverless architecture, such as less overhead and improved cost efficiency, their primary benefit is the ease with which you can combine serverless functions with other managed services. Functions can be integrated with databases, message queues, and API management tools in similar ways for different use cases, which means the functions and resources that you use in one microservice can serve as the foundation for other microservices. For instance, a validation workflow might use the same basic logic and components as an authentication workflow. This flexibility reduces the amount of boilerplate code that you have to write as you scale out your application.
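As a rough sketch of this kind of reuse, the same handler scaffolding could back both a validation function and an authentication function. The `make_handler` helper, the event shape (a JSON string under `body`), the status codes, and both checks are assumptions chosen for illustration, not a specific provider’s API.

```python
import json
from typing import Callable


def make_handler(check: Callable[[dict], bool], failure_status: int):
    """Build a function handler around a yes/no check on the request body."""
    def handler(event: dict) -> dict:
        body = json.loads(event.get("body") or "{}")
        if check(body):
            return {"statusCode": 200, "body": json.dumps({"ok": True})}
        return {"statusCode": failure_status, "body": json.dumps({"ok": False})}
    return handler


# Hypothetical checks; real ones would query a database or identity provider.
def address_is_complete(body: dict) -> bool:
    return all(body.get(field) for field in ("street", "city", "postal_code"))


def token_is_known(body: dict) -> bool:
    return body.get("token") in {"demo-token"}


# Two functions for two different microservices, built from the same scaffolding.
validate_address = make_handler(address_is_complete, failure_status=422)
authenticate = make_handler(token_is_known, failure_status=401)
```

Only the check and the failure status differ between the two functions; the request parsing and response formatting are written once.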

Serverless microservices are a good fit for complex, evolving applications, as their modularity makes them easy to manage and scale. Additionally, if your application can be broken down into several discrete services, each of which can in turn be broken down into short-running, event-driven tasks, then it’s probably a good candidate for serverless microservices. On the other hand, applications that receive a consistent load or that handle long-running tasks are often a poor fit for serverless functions, which are typically billed per invocation and subject to execution time limits. Such workloads may work better on containers or virtual machines, or as monoliths.

Challenges of Serverless Microservices

Serverless microservices enable engineering teams to iterate quickly and cost-efficiently, but they also pose several challenges:

- Defining function boundaries

Teams may struggle to define the scope of each function within their microservices. It’s important for each function to have a limited role, but stretching your logic across too many functions can make it harder to ship updates or add new features. Teams need to strike a balance between deploying a manageable number of functions and assigning each function a clearly defined responsibility.

- Optimizing function performance

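Certain performance problems are unique to serverless environments. For example, cold starts, which occur when a function is invoked after a period of inactivity or when the platform has to start a new instance to absorb additional traffic, can lead to increased latency. Teams can combat cold starts with features such as AWS Lambda’s Provisioned Concurrency, which keeps a set number of function instances initialized. However, this approach drives up costs, because the pre-initialized instances are billed even when idle, and it does not help with traffic that exceeds the provisioned capacity.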

- Monitoring

A single application may consist of multiple microservices and short-lived functions that interact with a range of other resources. Given this level of complexity, it can be difficult to trace requests across your environment, understand dependencies, and isolate the root cause of errors. To optimize and troubleshoot serverless microservices, teams need visibility into how their functions and services interact with each other.
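One common way to make requests traceable across functions is to attach a correlation ID to each event and include it in every log line. The sketch below is a simplified illustration of that idea rather than a full tracing setup: the `correlation_id` field name, the `log` helper, and the direct function-to-function call are assumptions, and a tracing library would normally handle this propagation for you.

```python
import json
import uuid

log_lines: list[str] = []  # stand-in for a log stream


def log(function_name: str, message: str, correlation_id: str) -> None:
    """Emit a structured log line that can be joined on correlation_id."""
    log_lines.append(json.dumps({
        "function": function_name,
        "correlation_id": correlation_id,
        "message": message,
    }))


def with_correlation_id(event: dict) -> dict:
    """Return a copy of the event carrying a correlation ID, reusing any existing one."""
    return {**event, "correlation_id": event.get("correlation_id") or str(uuid.uuid4())}


def generate_label(event: dict) -> dict:
    event = with_correlation_id(event)
    log("generate_label", "label created", event["correlation_id"])
    return {"order_id": event["order_id"], "correlation_id": event["correlation_id"]}


def validate_address(event: dict) -> dict:
    event = with_correlation_id(event)
    log("validate_address", "address accepted", event["correlation_id"])
    # Pass the same ID downstream so both functions' logs can be tied together.
    return generate_label(event)
```

Filtering the log stream on a single `correlation_id` then shows every function that handled a given request, in order.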

Teams can address some of the challenges noted above by introducing microservices and functions in an incremental way. For instance, they might first choose to shift all of a specific service’s code to a single function in order to familiarize themselves with writing and deploying function code. They might also decide to migrate only noncritical microservices to serverless architecture and slowly ramp up their use of functions over time.

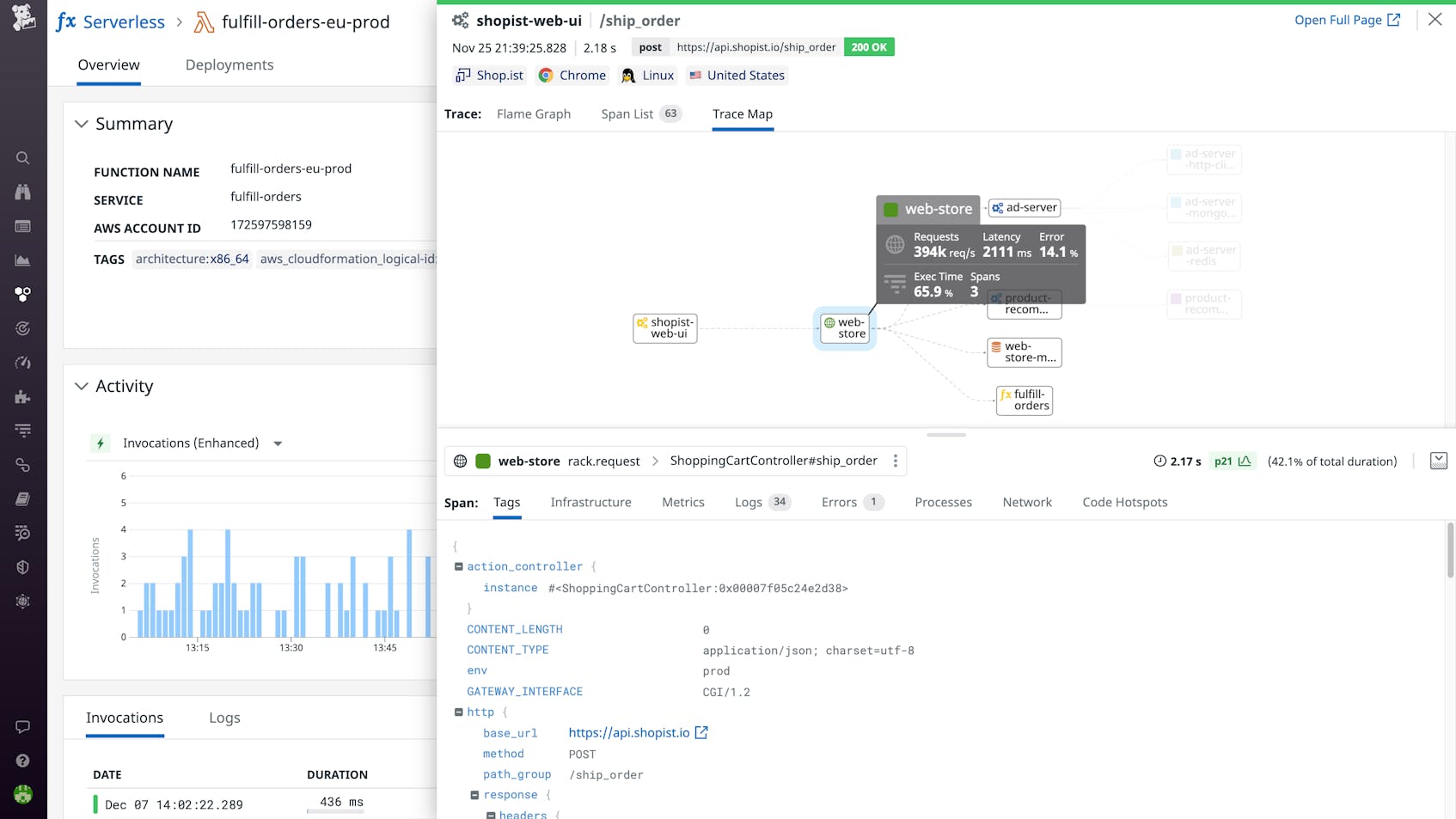

Monitoring Serverless Microservices

As discussed above, monitoring is one of the main obstacles to successfully implementing serverless microservices. Their inherent complexity makes it difficult to determine, for instance, whether an error is the result of a function timeout or an issue with another downstream service.
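To make that distinction concrete, a function can label its own failures so that a timeout and a downstream error look different in your telemetry. The following is a minimal sketch under stated assumptions: `DownstreamError` and `classify_invocation` are hypothetical names, and the time budget is checked after the work finishes, whereas a real platform would interrupt the function when its timeout expires.

```python
import time


class DownstreamError(Exception):
    """Raised by a hypothetical client when a downstream service fails."""


def classify_invocation(work, budget_seconds: float) -> dict:
    """Run work() and label the outcome so the source of a failure is explicit."""
    started = time.monotonic()
    try:
        result = work()
    except DownstreamError as exc:
        return {"status": "error", "cause": "downstream", "detail": str(exc)}
    elapsed = time.monotonic() - started
    if elapsed > budget_seconds:
        # The work finished, but too late to fit within the function's time budget.
        return {"status": "error", "cause": "timeout", "elapsed": elapsed}
    return {"status": "ok", "result": result}
```

Recording the `cause` field alongside each error lets you count the two failure modes separately instead of treating every failed invocation the same way.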

Datadog provides a single pane of glass for monitoring all of your microservice dependencies. You can get a bird’s-eye view of how requests flow across multiple services in your environment, which makes it easy to identify bottlenecks. Datadog Serverless Monitoring allows you to analyze each function alongside the resources that invoke it. And machine learning-based tools proactively surface issues in your serverless microservices as soon as they occur. To get more detail and investigate a problem, you can pivot with a single click between metrics, traces, and logs from across your stack.