Editor’s note: This is Part 1 of a five-part cloud security series that covers protecting an organization’s network perimeter, endpoints, application code, sensitive data, and service and user accounts from threats.

Cloud-native infrastructure has become the standard for deploying applications that are performant and readily available to a globally distributed user base. While this has enabled organizations to quickly adapt to the demands of modern app users, the rapid nature of this migration has also made cloud resources a primary target for security threats. According to a recent study from ThoughtLab, surveyed organizations saw a 15 percent increase in the average number of digital attacks and data breaches between 2020 and 2021.

With the steadily growing threat landscape, organizations are prioritizing efforts to secure their data, applications, and infrastructure from digital attacks. Cloud security, which focuses on implementing controls that protect all cloud-based resources, has become especially critical for modern environments. However, it also introduces some unique challenges. For example, cloud environments often include resources that are not managed by the organization but by one or more third-party cloud providers. As a result, it can be difficult to determine who is responsible for keeping these resources secure, which can lead to vulnerabilities in an environment.

To mitigate these risks, organizations and cloud providers often rely on a shared responsibility model to appropriately manage expectations for securing cloud resources. With this approach, teams can create the necessary policies for not only securing their environments but also detecting and mitigating activity from threat actors who may be attempting to exploit system weaknesses.

In this five-part series, we will look at how organizations can create a manageable scope for cloud security in the following key areas:

- Network boundaries

- Devices and services that access an organization’s network

- Access management for all resources

- Application code

- Service and user data

Part 1 of this series looks at the evolution of network perimeters in modern cloud environments as well as some best practices for securing them.

A primer on network perimeters

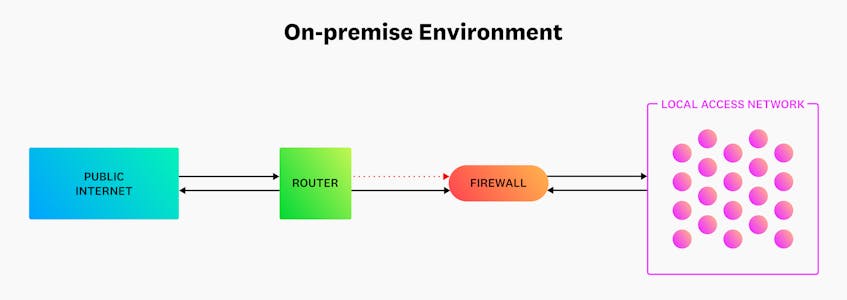

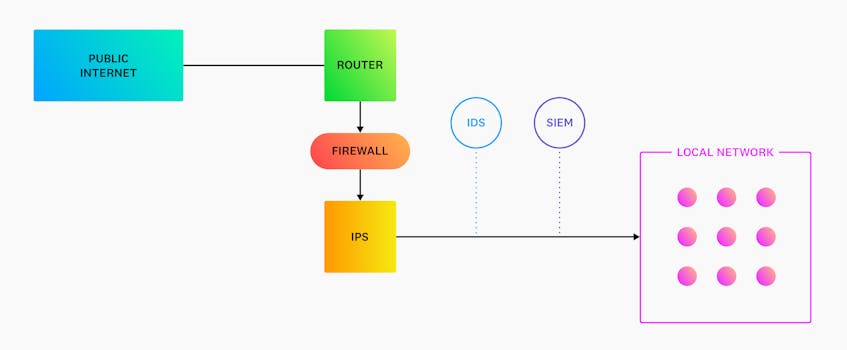

An organization’s network perimeter is the dividing line between any external network, such as the public internet, and its managed network. Much like the physical walls of an office, the network perimeter creates a distinguishable border around what an organization has control over. The following diagram depicts the boundaries of a network supporting a traditional on-premise environment:

Resources, services, and workloads supported by on-premise networks share the same key characteristics. For example, organizations consistently modify, patch, or update the same group of resources until they reach their end of life. Since these resources are long lived, they often run on physical hardware that’s stored in a single location, such as an office or data center.

Because on-premise environments are limited in scope, their network’s attack surface—all the different entry points that a threat actor can exploit—is also relatively small and easy to manage. With these types of configurations, perimeter firewalls and routers are often considered the “first line of defense” as they are solely responsible for blocking connections from potentially malicious sources. Most perimeter firewalls leverage IP-based policies out of the box, which allow them to restrict access to a network based on the source’s or destination’s IP address.
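To make the idea of an IP-based policy concrete, here is a minimal sketch of first-match rule evaluation using Python's standard `ipaddress` module. The rule list, the address ranges (documentation and private ranges), and the default-deny behavior are assumptions chosen for illustration, not the behavior of any particular firewall product.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str                      # "allow" or "deny"
    source: ipaddress.IPv4Network    # source range the rule applies to


# Hypothetical policy: block one external range, allow the internal range.
RULES = [
    Rule("deny", ipaddress.ip_network("203.0.113.0/24")),
    Rule("allow", ipaddress.ip_network("10.0.0.0/8")),
]


def evaluate(source_ip: str, rules=RULES, default: str = "deny") -> str:
    """Return the action of the first rule whose range contains source_ip."""
    addr = ipaddress.ip_address(source_ip)
    for rule in rules:
        if addr in rule.source:
            return rule.action
    # No rule matched: fall back to the default action (assumed deny).
    return default
```

Because the decision depends only on the address, any host that obtains an allowed IP is treated as trusted, which is the limitation that identity-based policies later in this post aim to address.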

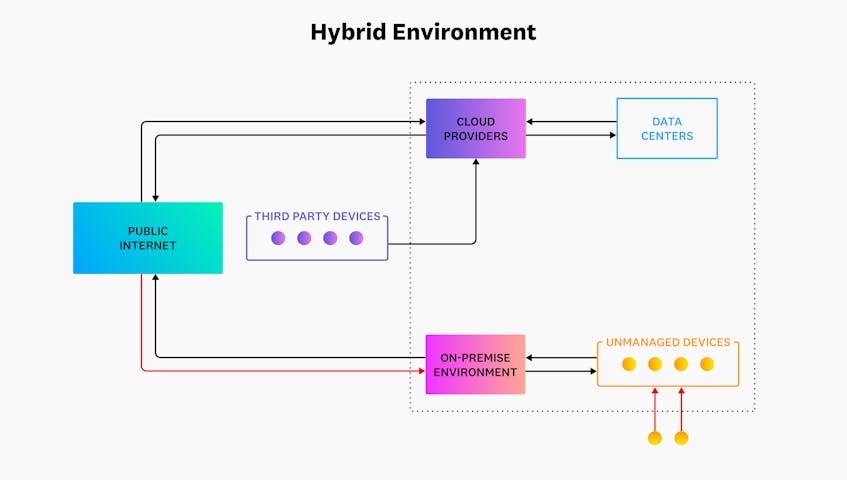

As one might expect, network boundaries become increasingly more complex in the cloud since they need to account for traffic from many more sources than a traditional configuration. The following diagram shows traffic to and from a hybrid environment, which leverages both on-premise and cloud infrastructure:

Unlike on-premise environments, fully cloud-hosted or hybrid environments deploy resources, services, and workloads that are short-lived and immutable. Resources like containers, for example, are often only deployed to accomplish specific tasks and then simply replaced instead of continually patched or updated. These types of environments can also be co-managed with one or more public cloud providers and therefore deployed across multiple data centers and regions. A distributed cloud infrastructure like this enables organizations to not only support more customers but also allow employees to access services remotely.

Together, these factors significantly increase a cloud environment’s attack surface by creating more points of entry for a threat actor to exploit. To combat this, the following best practices can help organizations mitigate any vulnerabilities along the edge of their network that could grant an attacker access:

- Inventory all network entry points and secure existing boundaries

- Use Zero Trust architecture to restrict access

- Segment networks to control traffic from potentially vulnerable entry points

- Get visibility into all network traffic

Next, we’ll look more closely at the importance of inventorying a network’s entry points as a first step in securing it.

Take inventory of and secure all network entry points

Entry points are areas along a network’s edge that allow traffic to and from external sources. Using our previous office example, if walls are the boundary of an office, then its doors and windows are the entry points. In cloud environments, entry points can include hardware like the firewalls and routers that manage network traffic, internet-connected printers, and publicly available services, such as databases and API gateways. In addition to physical hardware, certain hardware settings, such as open TCP ports on a server, can allow traffic to an organization’s network. For example, port 80 allows external hosts to connect to one of your services via HTTP, which is one of the most commonly used communication protocols.
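As a rough illustration of how open TCP ports can be inventoried, the sketch below attempts a TCP connection to each port in a list and reports the ones that accept it. The function names are hypothetical, and a check like this should only be run against hosts you own or are authorized to test; dedicated scanners do considerably more.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def open_ports(host: str, ports) -> list:
    """Return the subset of ports on host that accept TCP connections."""
    return [port for port in ports if is_port_open(host, port)]
```

For example, `open_ports("127.0.0.1", [22, 80, 443])` would list which of those ports are listening on the local machine.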

With more points of entry, organizations can easily overlook holes in their network, such as overly permissive firewall policies, misconfigured cloud resources, and insufficient isolation for vulnerable devices. For example, an attacker may attempt to find and connect to an office’s unsecured wireless access point in order to scan for systems on the network.

Network visibility, which is key to inventorying entry points, can be broken down into two main categories: perimeter devices and their configurations, and network traffic. Tracking perimeter resources with solutions like network device monitoring tools, for example, can help organizations quickly visualize all of the devices along a network perimeter and their interconnections, including firewalls, wireless access points, and routers.

Once an organization has a better understanding of the various types of entry points and activity along the edge of their network, they can take the necessary steps for securing inbound traffic. For example, placing firewalls between the public internet, special networks like demilitarized zones, and internal network zones can filter and prevent unwanted sources from accessing critical resources. For employees who need to access an organization’s network remotely, virtual private networks (VPNs) can encrypt their traffic in order to prevent threat actors from intercepting it. To monitor all inbound and outbound traffic for unusual behavior, such as a sudden spike in the number of server requests, organizations can leverage security monitoring systems.
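One simple way to reason about a "sudden spike" is to compare each interval's request count against a trailing baseline. The sketch below flags counts that exceed the mean of the previous few intervals by several standard deviations. The window size, threshold, and the floor on the standard deviation are assumptions for illustration; production monitoring systems use more robust methods.

```python
from statistics import mean, pstdev


def find_spikes(counts, window: int = 5, threshold: float = 3.0) -> list:
    """Return indexes whose count is far above the trailing-window baseline."""
    spikes = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        # Floor the deviation so a perfectly flat baseline does not
        # flag trivial fluctuations.
        sigma = max(pstdev(baseline), 1.0)
        if counts[i] > mu + threshold * sigma:
            spikes.append(i)
    return spikes


# Hypothetical requests-per-minute series with one surge at index 6.
requests_per_minute = [100, 102, 98, 101, 99, 100, 950, 101]
print(find_spikes(requests_per_minute))  # [6]
```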

It’s also important to ensure that the hardware deployed along the boundary of a network is appropriately configured and up to date with the latest software patches. For example, unnecessarily open ports or unpatched services on a web server can allow a threat actor to intercept critical data, such as usernames and passwords. Patch management has become particularly critical for cloud-native environments as unpatched vulnerabilities are one of the primary entry points to an environment that threat actors try to exploit. Organizations can manage configurations for perimeter resources, such as a web app’s underlying Amazon EC2 instances, with Cloud Security Posture Management (CSPM) solutions. These tools flag misconfigurations that could leave a resource along a network’s perimeter more vulnerable.

Inventorying and securing entry points along the borders of a network sets a solid foundation for applying the appropriate network policies to all cloud users, workloads, and devices, which we’ll look at next.

Use the Zero Trust model to assign identities to users and services

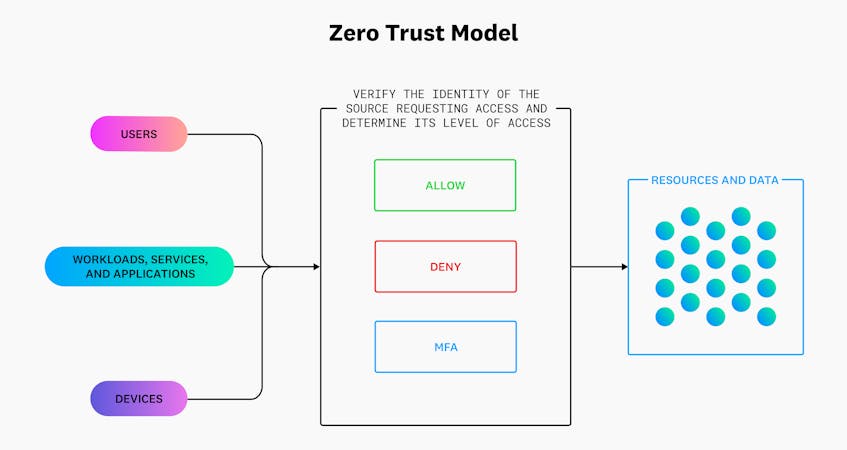

Traditional perimeter security models, such as those that support the on-premise environment we looked at earlier, are designed to prevent external sources from accessing an organization’s data and resources while granting access to any source already within the network. However, this means that once a threat actor has bypassed a firewall or found an unsecured entry point along a network’s perimeter, they have complete access to an organization’s systems. To mitigate these kinds of scenarios, organizations can use the Zero Trust model to create an additional layer of security for their networks. Layering controls in this way is commonly referred to as a “defense in depth” strategy.

Zero Trust architecture (ZTA) focuses on identity-based network policies instead of IP-based ones. This means that every user, device, and workload attempting to access an organization’s systems needs to verify its identity first, regardless of whether it is already within network boundaries. In this context, an identity is a set of data attributes that makes a particular source recognizable to the network. For example, employees may have identities based on their work email address (email:maddie.shepherd@datadoghq.com) and role (role:security-ops) within the organization.

Before an employee can gain access to their organization’s network (or resources within the network), they must first verify their identity via multi-factor authentication methods (MFA), such as an access code generated by a third-party device. These kinds of mechanisms—whether they use tokens or sessions—are short lived and require identities to routinely re-authenticate before attempting to access resources again. The diagram below shows an example Zero Trust setup that leverages MFA controls to allow or deny access to resources and data.
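The short-lived nature of these credentials can be sketched as a session that is only honored if MFA succeeded and the session has not yet expired. The `Session` class, the 15-minute lifetime, and the `authorize` helper below are hypothetical names and values used for illustration; real identity providers also sign tokens and handle revocation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumed session lifetime; real systems choose this per policy.
SESSION_TTL = timedelta(minutes=15)


@dataclass(frozen=True)
class Session:
    identity: str        # e.g. "role:security-ops"
    mfa_verified: bool   # True only after a successful MFA challenge
    issued_at: datetime

    def is_valid(self, now: datetime) -> bool:
        """A session is valid only if MFA passed and it has not expired."""
        return self.mfa_verified and now < self.issued_at + SESSION_TTL


def authorize(session: Session, now: datetime) -> str:
    """Allow access for a valid session; otherwise require re-authentication."""
    return "allow" if session.is_valid(now) else "re-authenticate"


issued = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
session = Session("role:security-ops", True, issued)
print(authorize(session, issued + timedelta(minutes=5)))   # allow
print(authorize(session, issued + timedelta(minutes=20)))  # re-authenticate
```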

ZTAs and identity-based network policies are especially useful for containerized and Lambda-based workloads, which can regularly migrate between hosts, data centers, and clouds—and subsequently change IP addresses. Instead of relying on traditional firewall rules that allow or deny traffic based on a source’s or destination’s IP address, organizations can create network rules based on the workload’s identity in order to efficiently control traffic every time it migrates.
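The difference from an IP-based rule can be shown with a small sketch in which the policy is keyed on workload identity, so the decision stays the same after a workload is rescheduled and receives a new address. The workload names, addresses, and the `ALLOWED_FLOWS` set are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    identity: str  # stable name assigned to the workload (hypothetical)
    ip: str        # current address; changes whenever the workload moves


# Hypothetical policy: which source identity may talk to which destination.
ALLOWED_FLOWS = {("checkout", "payments-db")}


def is_allowed(src: Workload, dst: Workload) -> bool:
    """Decide based on identities only; IP addresses are never consulted."""
    return (src.identity, dst.identity) in ALLOWED_FLOWS


db = Workload("payments-db", "10.0.1.20")
checkout_before = Workload("checkout", "10.0.0.15")
checkout_after = Workload("checkout", "10.0.7.42")  # rescheduled to a new host

print(is_allowed(checkout_before, db))  # True
print(is_allowed(checkout_after, db))   # True, the rule did not need updating
```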

Zero Trust models also typically apply the principle of least privilege to identities, which means that they only have the set of permissions they need to accomplish a particular task. For example, an organization may grant a contractor temporary read-only privileges to a database while giving a member of their security team write privileges. This control ensures that no single user, device, or workload has more access than necessary to an organization’s resources, which would in turn give threat actors more control if they exploited a vulnerability in the network perimeter.
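The contractor example above can be expressed as a small role-to-permission mapping in which anything not explicitly granted is denied. The role and permission names are assumptions for illustration (only `role:security-ops` appears earlier in this post), and real access management systems add scoping, expiry, and auditing.

```python
# Hypothetical mapping of roles to the minimum permissions each one needs.
ROLE_PERMISSIONS = {
    "role:contractor": frozenset({"db:read"}),
    "role:security-ops": frozenset({"db:read", "db:write"}),
}


def can(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and ungranted permissions return False."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(can("role:contractor", "db:read"))     # True
print(can("role:contractor", "db:write"))    # False
print(can("role:security-ops", "db:write"))  # True
```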

Next, we’ll take a look at how ZTAs play a role in helping organizations isolate traffic from specific identities in more detail.

Segment networks to better isolate traffic

As part of the Zero Trust model, organizations are encouraged to segment their networks in order to add multiple layers of protection to the resources and data contained within them. Network segmentation is a technique that divides a network into multiple separate networks, which are referred to as sub-networks. Much like restricted areas within an office, segmentation can strengthen network boundaries by isolating potentially vulnerable devices, such as those that store sensitive data, from critical resources. When this technique is employed, even if threat actors gain access to a network via a weakness in its perimeter, they will not automatically be able to reach devices in its other sub-networks.

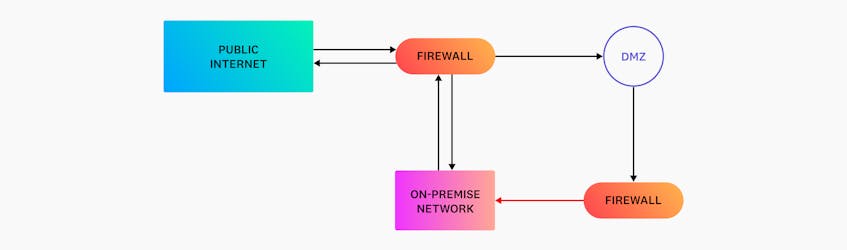

Traditional networks, such as those that support on-premise infrastructure, have typically used a type of network segmentation known as demilitarized zones (DMZs). DMZs are sub-networks that enable organizations to create a logical separation between their internal systems and public-facing services, such as web, email, and Domain Name System (DNS) servers. These services are common targets for threat actors because they can easily be misconfigured to allow unwanted traffic from the public internet.

As seen in the following diagram, DMZs are deployed outside an organization’s private network and leverage firewalls to further isolate traffic between the sub-network and critical systems.

While DMZs have been fundamental in segmenting networks for on-premise environments, in cloud-based environments their architecture focuses more on service and resource isolation rather than just network isolation. To configure segmented networks for cloud resources, providers like GCP, AWS, and Azure offer the following services:

- Virtual private cloud (VPC) networks: logically isolated and secure virtual networks that run cloud resources

- Public or private subnets: a range of IP addresses that serve as network groupings within a VPC

- Security groups: inbound and outbound rules that control traffic to and from specific cloud resources (e.g., Amazon Elastic Compute Cloud (EC2) instances)

- Network access control lists (ACLs): a set of subnet-level rules that manage specific inbound or outbound traffic
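To illustrate the subnet item in the list above, the following sketch carves a VPC-style address range into smaller subnets with Python's standard `ipaddress` module and looks up which subnet an address belongs to. The `10.0.0.0/16` range, the `/24` subnet size, and the public/private labels are assumptions; cloud providers create subnets through their own consoles and APIs.

```python
import ipaddress

# Hypothetical VPC address range, divided into /24 subnets.
vpc = ipaddress.ip_network("10.0.0.0/16")
subnets = list(vpc.subnets(new_prefix=24))

# Assumed layout: the first /24 is public, the second is private.
public_subnet, private_subnet = subnets[0], subnets[1]


def subnet_for(ip: str):
    """Return "public", "private", or None for addresses in neither subnet."""
    addr = ipaddress.ip_address(ip)
    for name, network in (("public", public_subnet), ("private", private_subnet)):
        if addr in network:
            return name
    return None


print(public_subnet, private_subnet)  # 10.0.0.0/24 10.0.1.0/24
print(subnet_for("10.0.1.25"))        # private
```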

Together, these features allow organizations to build cloud-based DMZs that separate their public-facing services and workloads from the rest of their infrastructure. For example, organizations can create a custom VPC network that is divided by both a private and public subnet to serve as their DMZ. The public subnet can house resources that need to accept requests from the public internet, such as web servers hosted on a cloud provider’s compute instances, while database servers can be deployed to the private subnet. In order to manage traffic, organizations can assign these resources to a particular security group. For instance, they may configure a database’s security group to only allow inbound database queries from a web server’s group.
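The web server and database example can be modeled as inbound rules that reference a source group rather than an address. This is a simplified sketch of the concept, not any provider's API: the `SecurityGroup` class, the group names, and the ports (443 for HTTPS, 5432 as a typical PostgreSQL port) are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityGroup:
    name: str
    # Inbound rules stored as (source group name, destination port) pairs.
    inbound: set = field(default_factory=set)

    def allows(self, source_group: str, port: int) -> bool:
        """Traffic is allowed only if an inbound rule matches exactly."""
        return (source_group, port) in self.inbound


# Public subnet: web servers accept HTTPS from the internet.
web_sg = SecurityGroup("web", inbound={("internet", 443)})
# Private subnet: the database only accepts queries from the web group.
db_sg = SecurityGroup("db", inbound={("web", 5432)})

print(db_sg.allows("web", 5432))       # True
print(db_sg.allows("internet", 5432))  # False
```

Referencing a group instead of an IP range means the database rule keeps working as web servers are added, removed, or replaced.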

It’s important to note that these features can vary in name and capabilities across cloud providers, as seen in the following table:

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Virtual network | Virtual Private Cloud | Virtual Network (VNet) | Virtual Private Cloud |
| Controlling traffic | network ACLs, security groups | network security groups, application security groups | managed firewall |

Next, we’ll look at how security monitoring tools can help organizations ensure that their network policies and controls are working as expected.

Get visibility into network traffic to detect threats

In addition to implementing more effective cloud security controls, organizations need visibility into traffic that’s traveling to and from their network. This is critical for detecting and mitigating threats before they become more consequential. Without this capability, organizations risk overlooking not only weaknesses in their network perimeter but also activity from threat actors that are attempting to exploit them.

To accomplish this, organizations with on-premise environments have often used a combination of security monitoring tools to actively scan for and block threats from their networks, including the following:

- Intrusion Detection Systems (IDS): detect and alert on suspicious activity from the public internet

- Intrusion Prevention Systems (IPS): monitor network traffic and prevent vulnerability exploits

- Security Information and Event Management (SIEM) system: collects, aggregates, and analyzes both real-time and historical data across an organization’s infrastructure in order to discover and alert on security trends and threats

As you can see in the preceding diagram, IDS and SIEM in on-premise environments are typically out-of-band tools, meaning they are deployed behind a firewall but out of the direct flow of traffic. These systems are designed to passively process copies of data so that they do not affect network performance. IPS, on the other hand, sits inline and directly behind firewalls at the edge of a network in order to actively scan for threats. These security monitoring systems can also leverage built-in threat intelligence databases in order to compare network data, such as associated IP addresses, against a list of known malicious activity.

As more environments transition to the cloud, detection and prevention tools have needed to evolve in order to support new types of infrastructure and how they manage traffic across public and private subnets. This means that instead of relying on the previously mentioned out-of-band configuration, these tools need to be deployed at multiple levels of cloud infrastructure, including both the broader network and individual hosts and workloads.

For example, AWS, GCP, and Azure all support host-based IDS and IPS, which enable organizations to deploy agents to virtual machines and containers to detect unusual changes to system files, memory, and data. They also provide managed, network-based detection and prevention systems, such as the following:

- AWS: Amazon GuardDuty, AWS Network Firewall

- Azure: Azure Threat Protection, Azure Firewall

- GCP: Cloud IDS, Google Cloud Firewall

Detection systems like Cloud IDS are deployed as endpoints to each region that an organization wants to monitor. Similar to traditional on-premise IDS systems, these endpoints can perform threat detection analysis on mirrored traffic and generate logs that can be forwarded to a cloud SIEM.

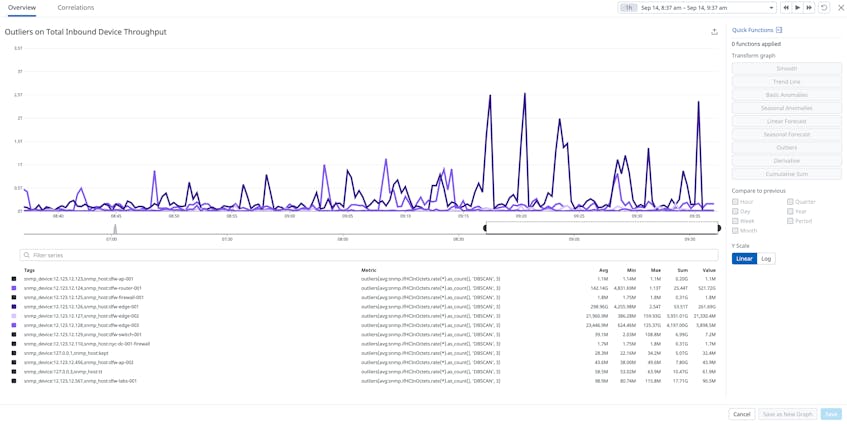

Cloud providers also integrate with monitoring platforms like Datadog to give organizations a comprehensive view into network traffic and activity logs via network performance monitoring and cloud SIEM tools. Datadog NPM, for instance, allows organizations to map the flow of traffic in and out of their entire network and quickly surface unusual behavior, such as a sudden surge of requests for a particular perimeter device. Elevated levels of inbound traffic, as seen in the following screenshot, could indicate that a threat actor is attempting to flood an entry point along a network’s perimeter with connection requests in order to limit its ability to process legitimate ones.

Datadog Cloud SIEM takes this visibility a step further by aggregating and analyzing all related log data in order to confirm the source of this activity. For example, Cloud SIEM can help organizations determine if the account initiating these requests is compromised, which would require immediate intervention before an attacker gains access to other resources.

Secure boundaries for cloud-native infrastructure

In this post, we discussed the importance of defining and securing the network boundaries of cloud-native infrastructure. Cloud environments have a large and ever-changing attack surface, but there are some best practices that organizations can implement to ensure that they can reliably manage the flow of traffic while preventing external threats. In Part 2 of this series, we’ll take cloud security a step further by looking at how to manage and protect connected devices. To learn more about Datadog’s security offerings, check out our documentation, or you can sign up for a 14-day free trial today.