This is a guest post from Ben McCann, co-founder of Connectifier.

At Connectifier we’ve been helping great companies grow quickly and placing tens of thousands of candidates into new jobs. Connectifier runs distributed across dozens of machines, examining hundreds of millions of data points to help optimize the distribution of human capital. We consistently push our infrastructure to its limits, and Datadog is helping us do that more effectively.

Datadog reveals root causes

In August, the Connectifier application began slowing down until requests could no longer be processed, requiring our SRE team to reboot services a few times a day to restore functionality. We had several theories including the possibility of a memory leak in the application server or database contention.

To determine which portion of the service was causing the performance degradation, we needed a better way to correlate statistics from multiple services. We had been playing around with a number of solutions, and the issue we were facing provided a good testing ground for them. One of the engineers on the team had a partial Datadog install set up and demoed it to the team. We were immediately hooked.

We initially didn’t get metrics back from Datadog because our MongoDB database was so slow that the agent couldn’t connect to it. This is where the Datadog agent’s open source status really began to help us, since we were able to send a PR to make the MongoDB connection timeout configurable. Even MongoDB’s own tools were having the same problem, so we also worked on getting the issue fixed there. After deploying the patched Datadog agent, we quickly narrowed the problem down to an issue with the database.
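To illustrate what a configurable connection timeout looks like from the client side, here is a minimal sketch that builds a MongoDB connection string with explicit timeouts. It is not the agent patch itself: the helper name `mongo_uri`, the host name, and the default values are hypothetical. `connectTimeoutMS` and `serverSelectionTimeoutMS` are standard MongoDB connection string options.

```python
from urllib.parse import urlencode


def mongo_uri(host: str, port: int = 27017,
              connect_timeout_ms: int = 30000,
              server_selection_timeout_ms: int = 30000) -> str:
    """Build a MongoDB URI whose timeouts are set by the caller.

    Hypothetical helper for illustration; the defaults are assumptions,
    not values taken from the Datadog agent.
    """
    options = urlencode({
        "connectTimeoutMS": connect_timeout_ms,
        "serverSelectionTimeoutMS": server_selection_timeout_ms,
    })
    return f"mongodb://{host}:{port}/?{options}"


# A slow database may need a longer connect timeout than the default.
uri = mongo_uri("db.example.internal", connect_timeout_ms=60000)
print(uri)
```

Exposing the timeout as a parameter means a struggling database can still be monitored, which is exactly when the metrics matter most.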

Debugging MongoDB’s WiredTiger

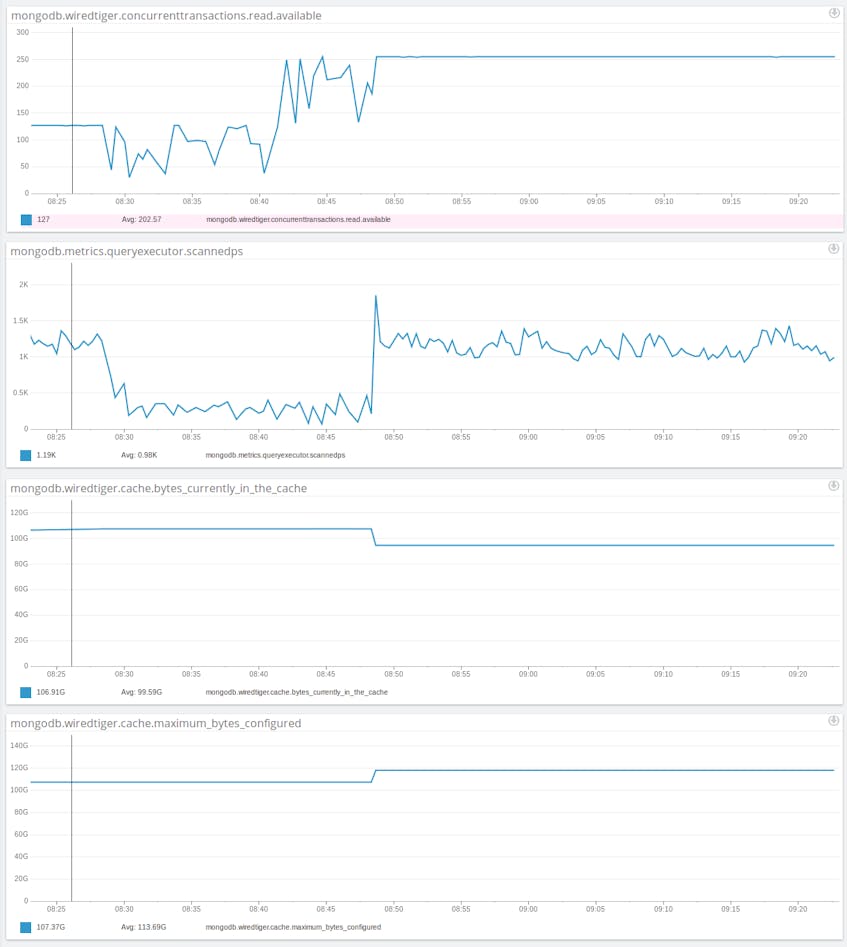

We didn’t know why the database was slowing down. We saw that it would queue up lots of queries, but no one query seemed particularly problematic. What we were seeing looked like resource contention, but CPU, RAM, and disk I/O all seemed okay. We theorized that maybe we were running out of WiredTiger read tickets, so we added metrics to track them. We saw that the number of read tickets out spiked from a baseline of about zero whenever we were having problems. Increased read ticket usage now seemed like a symptom rather than the cause. We increased the max number of read tickets, which didn’t make things any better and confirmed that it was a symptom. Luckily, it was a symptom that started before our customers saw problems. We were now able to set up a Datadog alert to let us know the site was about to go down, and could bounce the database server before customers were impacted.
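The read ticket check described above can be sketched as follows. This is an illustration under assumptions, not the metrics code we contributed: it reads the `wiredTiger.concurrentTransactions.read` section that `serverStatus` reports on MongoDB versions exposing ticket counts, and the function names, the 50% threshold, and the sample numbers are all hypothetical.

```python
from typing import Any, Dict


def read_ticket_usage(server_status: Dict[str, Any]) -> Dict[str, int]:
    """Extract WiredTiger read ticket counts from a serverStatus document."""
    tickets = server_status["wiredTiger"]["concurrentTransactions"]["read"]
    return {
        "out": int(tickets["out"]),              # tickets currently in use
        "available": int(tickets["available"]),  # tickets still free
        "total": int(tickets["totalTickets"]),   # configured maximum
    }


def should_alert(server_status: Dict[str, Any],
                 max_out_fraction: float = 0.5) -> bool:
    """Return True when the share of tickets in use reaches the threshold.

    The threshold is an assumed value; tune it against your own baseline.
    """
    usage = read_ticket_usage(server_status)
    if usage["total"] == 0:
        return False
    return usage["out"] / usage["total"] >= max_out_fraction


# Illustrative sample only; these numbers are not from our incident.
sample_status = {
    "wiredTiger": {
        "concurrentTransactions": {
            "read": {"out": 120, "available": 8, "totalTickets": 128},
        }
    }
}
print(read_ticket_usage(sample_status), should_alert(sample_status))
```

Because the baseline for tickets out was about zero, even a conservative threshold gives an early warning well before requests stop being served.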

We looked at the other Datadog graphs and saw that the metrics we had added for WiredTiger cache utilization showed the cache filling up right before the read tickets, latency, and other metrics spiked. We increased the WiredTiger cache size, which we were able to do with an administrative command while the server was running, with no restart required. Immediately, all problems ceased. Aha!
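As a rough sketch of both steps, the snippet below computes cache utilization from a `serverStatus` document and builds a command document for resizing the cache at runtime. The helper names and sample numbers are hypothetical. The `setParameter` command with `wiredTigerEngineRuntimeConfig` is one way MongoDB allows the cache size to be changed without a restart; treat it as an assumption that this matches the exact command we ran.

```python
from typing import Any, Dict


def cache_utilization(server_status: Dict[str, Any]) -> float:
    """Fraction of the configured WiredTiger cache currently in use."""
    cache = server_status["wiredTiger"]["cache"]
    used = cache["bytes currently in the cache"]
    limit = cache["maximum bytes configured"]
    return used / limit


def resize_cache_command(size_gb: int) -> Dict[str, Any]:
    """Build an admin command document that sets the cache size at runtime.

    With a driver such as pymongo, a document like this would be passed to
    the admin database's command method.
    """
    return {
        "setParameter": 1,
        "wiredTigerEngineRuntimeConfig": f"cache_size={size_gb}G",
    }


# Illustrative sample only; these byte counts are made up.
sample_status = {
    "wiredTiger": {
        "cache": {
            "bytes currently in the cache": 950,
            "maximum bytes configured": 1000,
        }
    }
}
print(cache_utilization(sample_status))
print(resize_cache_command(16))
```

A utilization value above 1.0 means the cache is holding more than its configured maximum, which is a useful condition to graph and alert on.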

Deploying WiredTiger fix

We continued to have some problems. Even with our larger cache, we would end up filling it and running into trouble again. We added tcmalloc tracking and improved cursor tracking to get further insight into our problems. We also got the MongoDB team to expose tcmalloc metrics by default. Now that we knew we were looking for memory problems, we also began searching the MongoDB JIRA. We found numerous issues that appeared related, with fixes landing in 3.0.5 and 3.0.6. We continued preemptively bouncing the server for a few days while we waited for 3.0.6 to be released. We upgraded the night it was released and haven’t had a problem with the cache holding more than the configured number of bytes since.

Conclusion

The team at Connectifier has found Datadog to be a developer-friendly service for collecting, analyzing, and alerting on metrics. We were surprised by how immediately useful Datadog was, and it has never gotten in our way. The software integrations that come with Datadog make it easy to get up and running quickly. The work we did to understand the issue has also remained immensely useful to us since the problem was resolved. Especially critical for us has been the open source model under which the monitoring agents are developed. While submitting pull requests, we were very pleased with the responsiveness of the Datadog team in reviewing our suggestions, incorporating them, and making further improvements based on our experiences.