We recently released the State of Cloud Security study, where we analyzed the security posture of thousands of organizations using AWS, Azure, and Google Cloud. In particular, we found that:

- Long-lived cloud credentials continue to be problematic and expose cloud identities

- Multi-factor authentication (MFA) is not always consistently enforced for cloud users

- Adoption of IMDSv2 in AWS is rising, though still insufficient

- Use of public access blocks on storage buckets varies across cloud platforms and is more prevalent in AWS than Azure

- A number of cloud workloads have non-administrator permissions that still allow them to access sensitive data or escalate their privileges

- Many virtual machines are exposed to the internet

In this post, we provide key recommendations based on these findings, and we explain how you can leverage Datadog Cloud Security Management (CSM) to improve your security posture.

Minimize the use of long-lived cloud credentials

Credentials are considered long-lived if they are static (i.e., never change) and also never expire. These types of credentials are responsible for a number of documented cloud data breaches, and organizations should avoid using them.

In AWS, this means you should avoid using IAM users. Using IAM users to authenticate humans is both cumbersome and risky, especially in multi-account environments. AWS IAM Identity Center (formerly AWS SSO) and IAM role federation provide a more secure and convenient way of managing and provisioning human identities, while supporting common command-line applications such as the AWS CLI or Terraform.

AWS workloads—such as EC2 instances—should not use IAM users either. Depending on the compute service in use, AWS provides mechanisms to leverage short-lived credentials by design, so that applications can transparently retrieve short-lived credentials without additional effort when using the AWS SDKs or CLI. These mechanisms include:

- IAM roles for EC2 instances

- Lambda function execution roles

- IAM roles for EKS service accounts

- IAM roles for ECS tasks

- CodeBuild service roles

You can also use a service control policy (SCP) to proactively block the creation of IAM users at the account or organization level.
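As a rough illustration, the Python sketch below only assembles the JSON document for such an SCP, denying iam:CreateUser and iam:CreateAccessKey in every account it is attached to. The statement contents and the Sid are our own assumptions. A real deployment usually needs an exception for a break-glass or provisioning role, which is deliberately left out here.

```python
import json

# Hypothetical SCP contents: deny creating IAM users and long-lived access keys.
# An exception for a break-glass role (e.g. via a condition on aws:PrincipalArn)
# is intentionally omitted from this sketch.
DENY_IAM_USER_ACTIONS = ["iam:CreateUser", "iam:CreateAccessKey"]


def build_deny_iam_users_scp() -> dict:
    """Return an SCP document that denies IAM user and access key creation."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyIamUserCreation",
                "Effect": "Deny",
                "Action": DENY_IAM_USER_ACTIONS,
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy so it can be handed to your AWS Organizations tooling.
    print(json.dumps(build_deny_iam_users_scp(), indent=2))
```

Denying iam:CreateAccessKey as well as iam:CreateUser also prevents new static keys from being issued for IAM users that already exist.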

In Google Cloud, humans should leverage their own identity—typically from Google Workspace—and should not authenticate to the Google Cloud APIs using service account keys.

Google Cloud workloads should not use service account keys, as they don’t expire and can easily become exposed. Instead, you can attach service accounts to workload resources such as virtual machines or cloud functions. For the common case of workloads running in a Google Kubernetes Engine (GKE) cluster, you can leverage Workload Identity to transparently pass short-lived credentials for a specific service account to your applications. Google Cloud also provides several organization policy constraints that you can enable at the project, folder, or organization level to disable service account or service account key creation.
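The two constraints most relevant here are iam.disableServiceAccountCreation and iam.disableServiceAccountKeyCreation. The Python sketch below generates Organization Policy (v2) documents that enforce them. It assumes you apply each resulting file with gcloud org-policies set-policy, and "projects/my-project" is a hypothetical placeholder for your own project, folder, or organization.

```python
import json

# Boolean constraints that block service account and service account key creation.
SA_CONSTRAINTS = (
    "iam.disableServiceAccountCreation",
    "iam.disableServiceAccountKeyCreation",
)


def build_org_policy(parent: str, constraint: str) -> dict:
    """Build an Organization Policy (v2) document that enforces a boolean constraint.

    `parent` is a resource such as "projects/my-project", "folders/123",
    or "organizations/456".
    """
    return {
        "name": f"{parent}/policies/{constraint}",
        "spec": {"rules": [{"enforce": True}]},
    }


if __name__ == "__main__":
    # "projects/my-project" is a placeholder; write each document to a file
    # and apply it with `gcloud org-policies set-policy FILE`.
    for constraint in SA_CONSTRAINTS:
        print(json.dumps(build_org_policy("projects/my-project", constraint), indent=2))
```

Enforcing only the key creation constraint is often the gentler first step, since it still allows service accounts to be attached to workloads.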

In Azure, users accessing resources should authenticate using their Azure AD identity. They should not use long-lived credentials of Azure AD applications.

Similarly, Azure workloads such as virtual machines should not embed static credentials of an Azure AD application. Instead, you can leverage managed identities to transparently and dynamically retrieve short-lived credentials for an identity that Azure manages on your behalf. Services running in Azure Kubernetes Service (AKS) can use Azure AD Workload Identity.

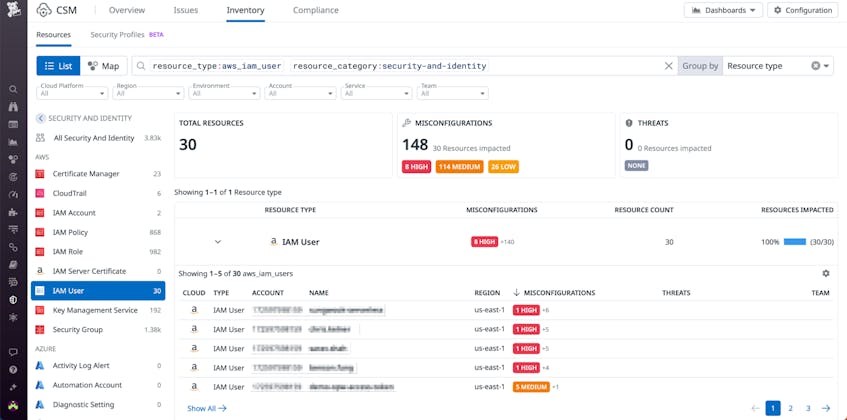

Using Datadog CSM to identify long-lived cloud credentials

You can use the Inventory in Datadog CSM to identify IAM users and Google Cloud service accounts or service account keys across all your cloud environments.

The cloud configuration rule “Service accounts should only use GCP-managed keys” also allows you to quickly identify Google Cloud service accounts with active user-managed keys.

Track down stale cloud credentials

As discussed above, long-lived cloud credentials are problematic because they never expire and can become exposed in places such as source code, container images, or configuration files. Older credentials carry an even greater risk, since they have had more time to be copied, shared, or leaked. Consequently, tracking down old and unused cloud credentials is a highly valuable investment for security teams.

You can use an AWS credential report to identify IAM users with unused credentials. In Azure, you can use the Microsoft Graph API through the Azure CLI to identify all Azure AD applications with credentials and pinpoint old ones:

```shell
az rest --uri 'https://graph.microsoft.com/v1.0/applications/?$select=id,displayName,passwordCredentials'
```
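To pinpoint old secrets in that output, a small Python sketch like the one below can flag client secrets whose startDateTime is older than a chosen threshold. It assumes the Graph response shape with a top-level "value" list, and it handles only a single page of results (it does not follow @odata.nextLink). The one-year threshold is an arbitrary example.

```python
import json
import sys
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


def parse_graph_time(value: str) -> datetime:
    """Parse a UTC timestamp such as "2023-01-15T10:00:00Z", ignoring fractional seconds."""
    value = value.rstrip("Z").split(".")[0]
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)


def find_old_secrets(response: dict, max_age_days: int = 365,
                     now: Optional[datetime] = None) -> List[Tuple[str, str, int]]:
    """Return (application name, secret key ID, age in days) for old client secrets."""
    now = now or datetime.now(timezone.utc)
    findings = []
    for app in response.get("value", []):
        for cred in app.get("passwordCredentials") or []:
            age = now - parse_graph_time(cred["startDateTime"])
            if age > timedelta(days=max_age_days):
                findings.append((app["displayName"], cred["keyId"], age.days))
    return findings


if __name__ == "__main__":
    # Usage: save the `az rest` output to a file and pass its path as the first argument.
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as f:
            for name, key_id, days in find_old_secrets(json.load(f)):
                print(f"{name}: client secret {key_id} is {days} days old")
```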

In Google Cloud, you can use Policy Analyzer to retrieve the last authentication time of every service account and identify unused ones:

```shell
gcloud policy-intelligence query-activity \
    --activity-type=serviceAccountLastAuthentication \
    --project=your-project
```

You can also use Recommender’s service account insights to find unused service accounts, including at the organization level.

Use Datadog CSM to track down stale cloud credentials

With Datadog CSM, you can identify stale and risky cloud credentials at scale. In particular, you can use the following rules:

- AWS: “Inactive IAM access keys older than 1 year should be removed”

- AWS: “Access keys should be rotated every 90 days or less”

- Google Cloud: “Service accounts should rotate user-managed or external keys every 90 days or less”

Enforce MFA for cloud users

When users authenticating to cloud environments don’t use MFA, they are vulnerable to credential stuffing and brute-force attacks. Enforcing MFA is critical to prevent account compromise and unauthorized access to sensitive data in the cloud.

In AWS, IAM users should be avoided as much as possible, as discussed earlier in this post. When exceptional circumstances warrant their use by human users, you can enforce MFA through the use of the aws:MultiFactorAuthPresent condition key. Azure AD allows you to enforce MFA at the tenant level with a conditional access policy. For this policy to be effective, you also need to block legacy authentication. Finally, you can enforce MFA in Google Cloud at the organization or organizational unit level.
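For the exceptional IAM users mentioned above, a common pattern is a Deny statement keyed on aws:MultiFactorAuthPresent. The Python sketch below builds such a policy; the list of enrollment actions left available without MFA is adapted from the pattern in AWS’s IAM documentation and should be reviewed before use. This Deny grants nothing by itself, so the user still needs Allow statements for MFA enrollment and for their regular work.

```python
import json

# Actions a user still needs without MFA in order to enroll an MFA device
# (adapted from AWS's documented pattern; review before use).
MFA_ENROLLMENT_ACTIONS = [
    "iam:CreateVirtualMFADevice",
    "iam:EnableMFADevice",
    "iam:GetUser",
    "iam:ListMFADevices",
    "iam:ListVirtualMFADevices",
    "iam:ResyncMFADevice",
    "sts:GetSessionToken",
]


def build_require_mfa_policy() -> dict:
    """IAM policy denying everything except MFA enrollment when MFA wasn't used."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptMfaEnrollmentWithoutMfa",
                "Effect": "Deny",
                "NotAction": MFA_ENROLLMENT_ACTIONS,
                "Resource": "*",
                # BoolIfExists also matches requests where the key is absent, such as
                # calls signed with long-term access keys outside an MFA session.
                "Condition": {"BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_require_mfa_policy(), indent=2))
```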

Use Datadog CSM and Cloud SIEM to identify risky users without MFA

You can use Datadog CSM and Cloud SIEM to identify users without MFA and risky logins through the following rules:

- “Multi-factor authentication should be enabled for all AWS IAM users with console access”

- “Azure AD login without MFA”

- “AWS console login without MFA”

- “AWS ConsoleLogin without MFA triggered impossible travel scenario”

Enforce the use of IMDSv2 on Amazon EC2 instances

The EC2 Instance Metadata Service Version 2 (IMDSv2) is designed to help protect applications from server-side request forgery (SSRF) vulnerabilities. By default, newly created EC2 instances allow using both the vulnerable IMDSv1 and the more secure IMDSv2. It’s critical that EC2 instances—especially publicly exposed ones hosting web applications—enforce IMDSv2 to protect against this type of vulnerability, as SSRF vulnerabilities are frequently exploited by attackers.
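The protection comes from IMDSv2’s session model: a client must first issue a PUT request to obtain a token, then send that token in a header on every metadata request. A typical SSRF flaw only lets an attacker control the URL of a GET request, so it usually cannot complete the first step. The Python sketch below shows the two requests using only the standard library; get_metadata is a hypothetical helper that only works from inside an EC2 instance, and the 21600-second TTL is the maximum the service accepts.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"


def build_token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """Step 1: a PUT request that returns a session token (up to 6 hours)."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(token: str, path: str = "meta-data/instance-id") -> urllib.request.Request:
    """Step 2: a GET request that must carry the session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def get_metadata(path: str = "meta-data/instance-id", timeout: float = 2.0) -> str:
    """Fetch a metadata value using IMDSv2 (only works from inside an EC2 instance)."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(build_metadata_request(token, path), timeout=timeout) as resp:
        return resp.read().decode()
```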

AWS has released a guide to help organizations transition to IMDSv2, as well as a blog post about the topic. AWS also recently released a new mechanism to enforce IMDSv2 by default on specific Amazon Machine Images (AMIs). And in November 2023, AWS announced a set of more secure defaults for EC2 instances started from the console.

While it’s more efficient to enforce IMDSv2 at the design phase (for instance, by updating the source infrastructure-as-code templates), you can also, as a last resort, use an SCP to block the use of credentials retrieved through IMDSv1.
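The Python sketch below assembles such an SCP, based on the condition keys AWS documents for this purpose. The first statement denies API calls signed with role credentials delivered through IMDSv1 (ec2:RoleDelivery below 2.0). The second, optional statement blocks launching instances that don’t require IMDSv2 tokens. The Sids are our own naming, and the policy should be tested in a non-production organizational unit first.

```python
import json


def build_imdsv2_scp() -> dict:
    """SCP that blocks IMDSv1-delivered credentials and IMDSv1-capable launches."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny calls made with role credentials retrieved via IMDSv1.
                "Sid": "DenyImdsV1Credentials",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {"NumericLessThan": {"ec2:RoleDelivery": "2.0"}},
            },
            {
                # Deny launching instances that don't require IMDSv2 session tokens.
                "Sid": "RequireImdsV2OnLaunch",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {"StringNotEquals": {"ec2:MetadataHttpTokens": "required"}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_imdsv2_scp(), indent=2))
```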

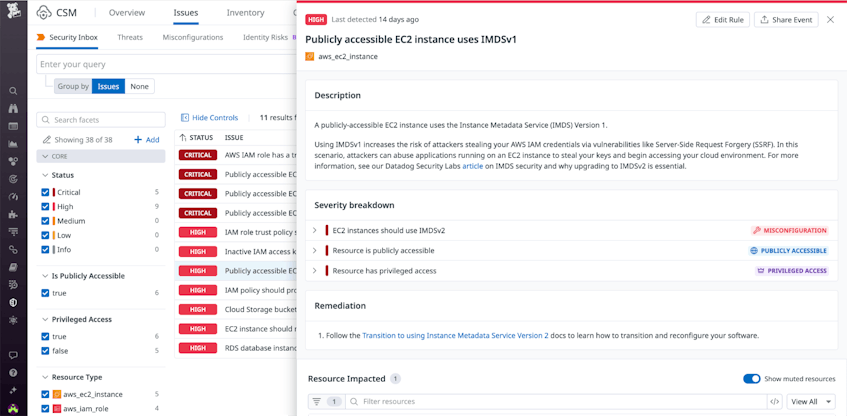

Use Datadog CSM to identify EC2 instances that don’t enforce IMDSv2

You can use Datadog CSM to identify EC2 instances that don’t enforce IMDSv2 through the Misconfigurations rule “EC2 instances should use IMDSv2” and the CSM Issue “Publicly accessible EC2 instance uses IMDSv1”.

Block public access proactively on cloud storage services

Cloud storage services such as Amazon S3 or Azure Storage are highly popular and were among the earliest public cloud offerings. While storage buckets are private by default, they are frequently made public inadvertently, exposing sensitive data to the outside world. Thankfully, cloud providers offer mechanisms to proactively protect these buckets, ensuring that a human error doesn’t turn into a data breach.

In AWS, S3 Block Public Access allows you to prevent past and future S3 buckets from being made public, either at the bucket or at the account level. It’s recommended that you turn this feature on at the account level and ensure this configuration is part of your standard account provisioning process. It’s important to note that since April 2023, AWS blocks public access by default for newly created buckets. However, this doesn’t cover buckets that have been created before this date.
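S3 Block Public Access consists of four settings, all of which should be enabled at the account level. The Python sketch below builds the recommended configuration and checks the JSON returned by aws s3control get-public-access-block --account-id ACCOUNT_ID. It assumes that command’s PublicAccessBlockConfiguration output key. If an account has no configuration at all, the command returns an error rather than JSON, which you would treat as all settings missing.

```python
import json

# The four S3 Block Public Access settings; all should be true at the account level.
BPA_SETTINGS = ("BlockPublicAcls", "IgnorePublicAcls", "BlockPublicPolicy", "RestrictPublicBuckets")


def recommended_configuration() -> dict:
    """Configuration enabling every Block Public Access setting."""
    return {setting: True for setting in BPA_SETTINGS}


def missing_settings(get_output: dict) -> list:
    """List settings that are disabled or absent in get-public-access-block output."""
    config = get_output.get("PublicAccessBlockConfiguration", {})
    return [s for s in BPA_SETTINGS if not config.get(s, False)]


if __name__ == "__main__":
    # Write this to a file and pass it to `aws s3control put-public-access-block`
    # with --public-access-block-configuration file://FILE.
    print(json.dumps(recommended_configuration(), indent=2))
```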

A similar mechanism exists in Azure through the “allow blob public access” parameter of storage accounts. At the time of writing, new storage accounts don’t block public access by default, although Microsoft plans to move to a more secure default in November 2023.
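To audit this setting, the Python sketch below filters the JSON list printed by az storage account list and reports accounts that don’t explicitly disable public blob access. It assumes each entry exposes name and allowBlobPublicAccess fields, and it treats a missing or null value as "allowed", which is our conservative reading of the older default. Flagged accounts can then be updated with az storage account update --allow-blob-public-access false.

```python
import json
import sys
from typing import List


def accounts_allowing_public_blobs(accounts: List[dict]) -> List[str]:
    """Return names of storage accounts that don't explicitly disallow public blob access.

    A missing or null allowBlobPublicAccess value is treated as "allowed".
    """
    return [a["name"] for a in accounts if a.get("allowBlobPublicAccess") is not False]


if __name__ == "__main__":
    # Usage: save the `az storage account list` output to a file and pass its path.
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as f:
            for name in accounts_allowing_public_blobs(json.load(f)):
                print(f"{name}: public blob access is not disabled")
```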

In Google Cloud, you can block public access to Google Cloud Storage (GCS) buckets with the “public access prevention” setting, either on individual buckets or, through the corresponding organization policy constraint, at the project, folder, or organization level.

When you need to expose a storage bucket publicly for legitimate reasons—for instance, hosting static web assets—it’s typically more cost-effective and performant to use a content delivery network (CDN) such as Amazon CloudFront or Azure CDN rather than directly exposing the bucket publicly.

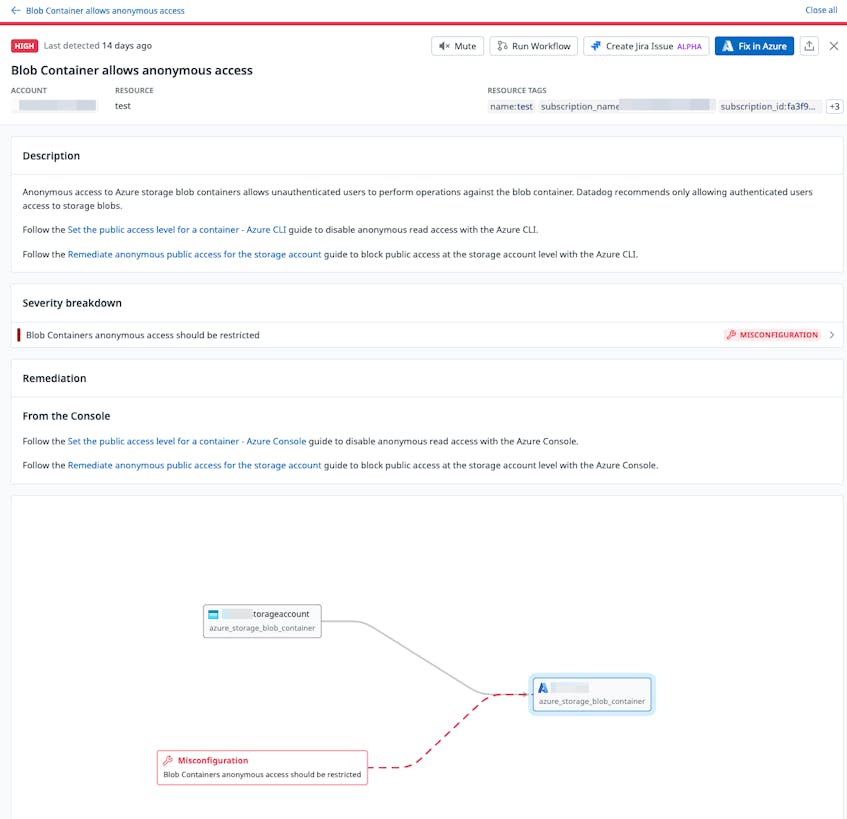

Use Datadog CSM to identify vulnerable cloud storage buckets

You can use Datadog CSM to identify vulnerable cloud storage buckets through the following rules:

- “S3 bucket contents should only be accessible by authorized principals”

- “S3 buckets should have the ‘Block Public Access’ feature enabled”

- “S3 bucket ACLs should block public write actions”

- “Azure Storage Blob Containers anonymous access should be restricted”

- “Google Cloud Storage bucket access should be restricted to authorized users”

Limit privileges assigned to cloud workloads

Cloud workloads such as virtual machines are frequently assigned permissions to perform their usual tasks, such as reading data from a cloud storage bucket or writing to a database. However, overprivileged workloads can allow an attacker to access a wide range of sensitive data in the cloud environment—or even gain full access to it. Ensuring that workloads follow the principle of least privilege is critical to help minimize the impact of a compromised application.

Right-sizing permissions is a continuous process that usually follows three steps:

- Determine what actions the workload needs to perform.

- Apply the associated policy to the workload role, with minimally scoped permissions. For instance, if an EC2 instance needs to read files from an S3 bucket, it should only be able to access this specific bucket.

- Avoid “permissions drift” by ensuring that the workload still requires and actively uses these permissions.
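For the second step, the Python sketch below builds a minimally scoped identity policy for the S3 example: the workload can list and read objects in one bucket and nothing else. The bucket name example-app-assets is hypothetical. Note that s3:ListBucket applies to the bucket ARN, while s3:GetObject applies to object ARNs, which is why two statements are needed.

```python
import json


def s3_read_only_policy(bucket: str) -> dict:
    """Identity policy letting a workload list and read objects in a single bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket is evaluated against the bucket ARN itself.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # GetObject is evaluated against object ARNs inside the bucket.
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "example-app-assets" is a placeholder bucket name.
    print(json.dumps(s3_read_only_policy("example-app-assets"), indent=2))
```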

At development time, you can use tools like iamlive to discover what cloud permissions a workload needs. At runtime, cloud provider tools such as AWS IAM Access Analyzer or Google Cloud Policy Intelligence can compare granted permissions with actual usage and suggest how to scope down specific policies. Note that starting January 2024, Google makes Policy Intelligence available only to organizations that use Security Command Center at the organization level, rather than as part of its base offering.
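Conceptually, this comparison is a set difference between granted permissions and observed usage. The Python sketch below is a simplified stand-in for what those tools do, not their actual algorithm. It assumes you already have the granted action patterns (wildcards allowed) and the list of actions observed in logs. It ignores resources and conditions, and it lowercases both sides because IAM action names are case-insensitive.

```python
from fnmatch import fnmatchcase
from typing import Iterable, Set


def unused_permissions(granted: Iterable[str], used: Iterable[str]) -> Set[str]:
    """Return granted action patterns that matched none of the observed actions.

    Patterns may contain wildcards such as "s3:*" or "ec2:Describe*".
    """
    used_lower = [u.lower() for u in used]
    return {g for g in granted if not any(fnmatchcase(u, g.lower()) for u in used_lower)}


if __name__ == "__main__":
    granted = ["s3:GetObject", "s3:PutObject", "dynamodb:*", "ec2:Describe*"]
    used = ["s3:GetObject", "ec2:DescribeInstances"]
    # Candidates for removal from the workload's policy.
    print(sorted(unused_permissions(granted, used)))
```

Running this repeatedly over a rolling window of activity is one way to catch the "permissions drift" described above.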

In addition, it’s important to note that seemingly innocuous permissions can allow an attacker to gain full access to a cloud account through privilege escalation. For instance, while the AWS-managed policy AWSMarketplaceFullAccess may give the impression it’s only granting access to the AWS Marketplace, it also allows an attacker to gain full administrator access to the account by launching an EC2 instance and assigning it a privileged role.
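The EC2 path described above relies on combining the ability to launch an instance (ec2:RunInstances) with the ability to hand it a role (iam:PassRole). The Python sketch below is a hypothetical, deliberately naive check for that combination in a list of allowed action patterns. It ignores resource ARNs and conditions such as iam:PassedToService, so it overestimates risk and is only meant to illustrate why seemingly unrelated permissions matter together.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# One well-known escalation path: pass a privileged role to a new EC2 instance,
# then use that instance's credentials.
ESCALATION_PATH = ("iam:PassRole", "ec2:RunInstances")


def allows(patterns: Iterable[str], action: str) -> bool:
    """True if any allowed action pattern (wildcards allowed) matches `action`."""
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def can_escalate_via_ec2(patterns: Iterable[str]) -> bool:
    """Naive check: are both actions of the EC2 escalation path allowed?"""
    patterns = list(patterns)
    return all(allows(patterns, action) for action in ESCALATION_PATH)


if __name__ == "__main__":
    print(can_escalate_via_ec2(["iam:PassRole", "ec2:*"]))
```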

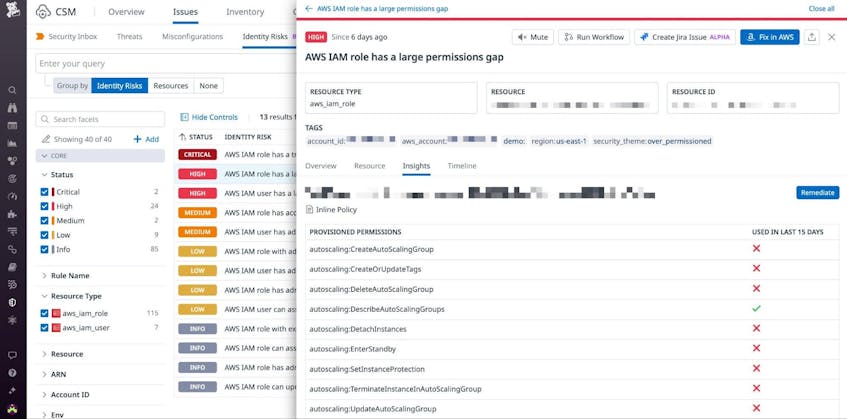

Use Datadog CSM to find overprivileged roles

You can use Datadog CSM Identity Risks to identify AWS IAM users and IAM roles with a large permissions gap—i.e., those that have been granted permissions they’re not using and can be deprovisioned.

Limit network exposure of cloud workloads

When you expose a network service such as SSH to the internet, it immediately becomes a target for automated bots and attackers who attempt to run brute-force attacks or exploit vulnerabilities against it. This means minimizing internet exposure is a key component of reducing your organization’s attack surface.

In AWS, you can use AWS Systems Manager Session Manager to securely access virtual machines that are in a virtual private cloud (VPC) and don’t have a public IP address. You can also take advantage of EC2 Instance Connect. In Azure environments, Azure Bastion allows you to securely connect to private resources and offers first-class support for SSH and RDP. Google Cloud Identity-Aware Proxy (IAP) provides a free and seamless way to access resources on a private internal network through tunneling, using your existing identity. Google Cloud OS Login is also an option for secure remote access to virtual machines.

When none of these options is available, restricting public access to specific IP ranges can add a valuable (but not bulletproof) layer of security.
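To find rules that skip this restriction, the Python sketch below flags ingress rules that open SSH (22) or RDP (3389) to the entire internet, meaning 0.0.0.0/0 or ::/0. The (cidr, from_port, to_port) tuple format is a hypothetical simplification; you would map your provider’s security group or firewall rule output into it.

```python
import ipaddress
from typing import List, Tuple

ADMIN_PORTS = {22, 3389}  # SSH and RDP

Rule = Tuple[str, int, int]  # (source CIDR, from_port, to_port)


def risky_rules(rules: List[Rule]) -> List[Rule]:
    """Flag ingress rules exposing SSH or RDP to the whole internet."""
    flagged = []
    for cidr, from_port, to_port in rules:
        network = ipaddress.ip_network(cidr, strict=False)
        covers_admin_port = any(from_port <= p <= to_port for p in ADMIN_PORTS)
        # A prefix length of 0 means "any address" (0.0.0.0/0 or ::/0).
        if network.prefixlen == 0 and covers_admin_port:
            flagged.append((cidr, from_port, to_port))
    return flagged


if __name__ == "__main__":
    rules = [("0.0.0.0/0", 22, 22), ("203.0.113.0/24", 22, 22), ("0.0.0.0/0", 443, 443)]
    print(risky_rules(rules))
```

A rule limited to a known range, such as 203.0.113.0/24 above, is not flagged, which mirrors the "valuable but not bulletproof" restriction described in the text.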

Use Datadog to find publicly exposed workloads

You can use Datadog CSM to identify publicly available compute resources with these queries:

You can also manually select or deselect public accessibility as a facet in the CSM sidebar.

In addition, CSM takes public exposure into account to help you better prioritize security issues, as publicly accessible resources are likely to be higher-risk:

- Publicly accessible EC2 instance connected to known attack domain

- Publicly accessible EC2 instance performed cryptomining operations

- Publicly accessible EC2 instance performing SSH scanning

- Publicly accessible EC2 instance should not have open administrative ports

- Publicly accessible EC2 instance uses IMDSv1

- Publicly accessible EC2 instances should not have highly privileged IAM roles

- Publicly accessible Azure VM connected to known attack domain

- Publicly accessible Azure VM performed cryptomining operations

- Publicly accessible Azure VM performing SSH scanning

- Publicly accessible GCP compute instance connected to known attack domain

- Publicly accessible GCP compute instance performed cryptomining operations

- Publicly accessible GCP compute instance performing SSH scanning

Conclusion

Our 2023 State of Cloud Security study saw clear improvements across a number of measures of cloud security posture. That said, there is progress still to be made across organizations using all cloud platforms, and maintaining a secure cloud infrastructure is an ongoing process. In this post, we covered how organizations can remediate some of the most common security issues our study surfaced, and how Datadog CSM can help.

To get started with Datadog CSM, check out our documentation. If you’re new to Datadog, sign up for a 14-day free trial.