Just like shopping on Black Friday, AWS re:Invent has become a post-Thanksgiving tradition for some of us at Datadog. We were excited to join tens of thousands of fellow AWS users and partners for this annual gathering that features new product announcements, technical sessions, networking, and fun.

As if I didn't love @datadoghq enough, they have a slide here pic.twitter.com/Opq7BGW3KG

— Travisty (@traviskhoover) November 28, 2022

This year, we saw three themes emerge from the conference announcements and sessions:

- Using data and observability to improve control of your systems

- Strengthening security by gathering intelligence to augment mitigation

- Increasing performance to deliver results faster and save costs

Using data and observability to improve control of your systems

In Tuesday’s opening keynote, AWS CEO Adam Selipsky talked about the exponential growth of data and how companies are using data insights to innovate. These innovations range from better business decision-making to dynamically controlling and scaling your digital stack.

One of the new features announced at the conference was AWS Control Tower Account Factory Customization. Companies commonly use multiple AWS accounts, whether to manage separate environments for testing, ensure resource isolation, or group resources for better cost accounting. AWS Control Tower makes managing multiple accounts easier, and the new Account Factory Customization feature gives users even more control over their accounts. Along with this new feature, Datadog released a Datadog AWS Integration blueprint that makes it easy to connect all of your AWS accounts managed by AWS Control Tower to Datadog.

Once your AWS accounts are connected to Datadog and you’ve installed the Datadog Agent, you can take advantage of our new Universal Service Monitoring (USM). USM automatically detects all of your services and monitors them for latency, errors, traffic, and resource saturation without needing to update your application’s code.

In his session “Observability in the real world,” Datadog VP of Engineering Junaid Ahmed discussed this automated observability as part of a larger, iterative approach to adopting observability. When organizations are just starting their observability journey, the road can seem daunting and leave them stuck, questioning where to start. With tools like USM, eBPF, and Kubernetes admission controllers, it becomes easier to get fundamental insight into your entire system, which gives you a foundation from which to direct further efforts.

Junaid was joined on stage by Arielle Allen, Director of Platform Engineering at Starbucks. Arielle shared how the Starbucks team adopted this iterative approach and how it helped them identify meaningful service level indicators (SLIs). Those SLIs helped them collaborate with stakeholders to establish service level objectives (SLOs), which has helped bring observability to even more parts of their organization.
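To make the SLI and SLO relationship concrete, here is a minimal sketch of an availability SLI and the error budget left under an SLO target. It does not represent how Starbucks or Datadog compute these values; the `RequestWindow` type, the function names, and the sample numbers are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestWindow:
    """Hypothetical request counts for one service over a fixed time window."""
    total: int
    failed: int


def availability_sli(window: RequestWindow) -> float:
    """Return the fraction of requests that succeeded (1.0 when there was no traffic)."""
    if window.total == 0:
        return 1.0
    return (window.total - window.failed) / window.total


def error_budget_remaining(window: RequestWindow, slo_target: float) -> float:
    """Return the unused share of the error budget; negative means the SLO was missed."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if window.total == 0:
        return 1.0
    # The SLO allows this many failed requests in the window.
    allowed_failures = (1.0 - slo_target) * window.total
    return 1.0 - window.failed / allowed_failures


# Illustrative numbers only: 5 failures in 10,000 requests against a 99.9% target.
window = RequestWindow(total=10_000, failed=5)
sli = availability_sli(window)
budget = error_budget_remaining(window, slo_target=0.999)
print(f"SLI: {sli:.4%}, error budget remaining: {budget:.0%}")
```

Expressing the objective as a remaining budget rather than a pass/fail check gives stakeholders a shared number to discuss, which is one way an SLI can feed into an SLO conversation.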

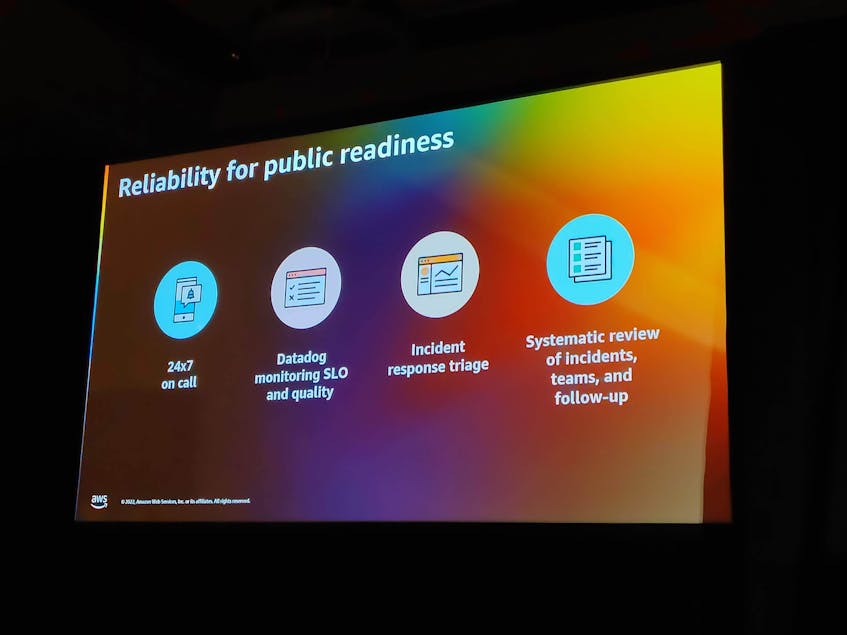

In his session “Scaling a SaaS company for public company readiness,” Braze cofounder and CTO Jon Hyman discussed how and where startups need to mature to become publicly traded companies. He highlighted four areas: reliability, security, cost management, and team efficiency. For each area, he discussed how the Braze team identified performance indicators and evolved control processes that allowed them to scale.

Strengthening security by gathering intelligence to augment mitigation

With modern environments producing an increasing amount of data, security has become a primary concern. AWS released several new products, including Amazon Macie and Amazon Verified Permissions, to make securing your data and implementing compliance and access control processes easier.

Following the larger conference theme of leveraging data, AWS announced Amazon Security Lake, a tool that helps you store, manage, and analyze your security telemetry. To help standardize security data, Amazon has been contributing to the Open Cybersecurity Schema Framework (OCSF) project, which aims to create an extensible standard to facilitate integrating security data providers. As a launch partner, Datadog simultaneously released a Security Lake integration that makes it simple to forward data from your Amazon Security Lake to Datadog and analyze it using tools such as the new Datadog Cloud SIEM Investigator. Cloud SIEM Investigator presents security information in a visually intuitive way that makes correlating signals and drilling down into the details of specific entities easier. By improving contextual understanding, Cloud SIEM Investigator helps teams collaborate better when responding to and investigating security incidents.

Andrew Krug, head of Security Advocacy at Datadog, and Alex Hardman, a technical marketing engineer at LaunchDarkly, teamed up to present an innovative way to secure systems. Andrew and Alex started the session by discussing how threats have evolved and the novel methods that attackers use to gain access to modern cloud-based systems. They then discussed how organizations can better detect attacks and intelligently respond to them using feature flags. Rather than simply blocking access, they demonstrated how to engage attackers in ways that could confuse them, misdirect them, or even convert them into champions.
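The general idea of using a feature flag to choose how to respond to a suspicious request can be sketched as follows. This is not the implementation shown in the session, and it does not use the LaunchDarkly SDK: the in-memory `FLAGS` dictionary stands in for a feature flag service, and the flag key, `ResponseMode` values, and handler are hypothetical.

```python
from enum import Enum
from typing import Mapping, Tuple


class ResponseMode(Enum):
    """Hypothetical ways to respond to a request."""
    ALLOW = "allow"
    BLOCK = "block"
    DECOY = "decoy"


# Hypothetical flag key; a real setup would read this from a feature flag service.
SUSPICIOUS_RESPONSE_FLAG = "suspicious-client-response"

# In-memory stand-in for the flag service, so the mode can be changed without a deploy.
FLAGS = {SUSPICIOUS_RESPONSE_FLAG: ResponseMode.DECOY}


def choose_response(is_suspicious: bool, flags: Mapping[str, ResponseMode]) -> ResponseMode:
    """Pick a response mode; fall back to blocking if the flag is not set."""
    if not is_suspicious:
        return ResponseMode.ALLOW
    return flags.get(SUSPICIOUS_RESPONSE_FLAG, ResponseMode.BLOCK)


def handle_request(path: str, is_suspicious: bool,
                   flags: Mapping[str, ResponseMode]) -> Tuple[int, str]:
    """Return an (HTTP status, body) pair based on the selected response mode."""
    mode = choose_response(is_suspicious, flags)
    if mode is ResponseMode.BLOCK:
        return 403, "forbidden"
    if mode is ResponseMode.DECOY:
        # Misdirect the client with harmless placeholder content.
        return 200, f"decoy content for {path}"
    return 200, f"real content for {path}"


print(handle_request("/admin", is_suspicious=True, flags=FLAGS))
print(handle_request("/admin", is_suspicious=True, flags={}))
print(handle_request("/home", is_suspicious=False, flags=FLAGS))
```

Keeping the decision behind a flag means responders could switch from decoy content back to a plain block during an incident by changing one value. How suspicious requests are detected is outside the scope of this sketch.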

Earlier this year, Datadog released the State of AWS Security: A Look Into Real-World AWS Environments. This report uses anonymized customer data to surface trends in customer misconfiguration. One of the key areas to focus on for many AWS customers is cloud identity and increasing the scrutiny of AWS IAM policy. In the talk “Security alchemy: How AWS uses math to prove security,” Brigid Johnson and Neha Rungta discussed the impact that using theorem proofs has on the evaluation of customer IAM policies at scale via AWS IAM Access Analyzer. While automated reasoning using proofs isn’t new to the AWS cloud, users are seeing a maturing of this technology and broader applications as IAM Access Analyzer expands to cover more services. IAM Access Analyzer is a free feature in all AWS accounts and can reason about access conditions inside single accounts and across multiple accounts. As a customer, it’s really exciting to see AWS growing this capability and leveraging its scale to provide increased security benefits at zero cost.

Increasing performance to deliver results faster and save costs

Performance was the third theme that we saw throughout the conference. AWS announced several new EC2 instance types that offer substantial performance gains over previously available ones.

- R7iz instances are powered by the latest fourth-generation Intel Xeon processors and, as memory-optimized R-type instances, they feature incredibly fast memory—they’re the first instances to use DDR5.

- Hpc6id instances are high-performance computing nodes that use Elastic Fabric Adapter (EFA), which enables up to 200 Gbps network communications between nodes. Adam Selipsky also announced that Graviton-based Hpc7g instances are coming soon.

- C7gn instances use new AWS Graviton3E processors that are up to 25 percent faster than Graviton2 processors and have up to 35 percent faster vector processing than standard Graviton3 processors. They also offer up to 200 Gbps of network bandwidth, which is substantially higher than the 30 Gbps limit on standard C7g instances.

The new Graviton-powered instances caused a lot of excitement and hallway discussions about migrating to the new processors. Jason Yee, Staff Technical Evangelist at Datadog, presented “What to know before adopting Arm,” which provided insight into what’s required for an Arm migration. In his session, he discussed the work that Datadog has done to migrate some of our most cost-intensive services. Along with some of the challenges encountered, he shared three lessons: measure everything to ensure you’re receiving the performance you expect, keep all of your software up to date (to avoid Arm64-related bugs), and take a critical look at your software to find ways that you can optimize code and improve performance.

Graviton-powered nodes have become popular because they are substantially cheaper to run than equivalent x86 instances. But no matter which processor you use, AWS Compute Optimizer is designed to help you avoid over- or under-provisioning resources. At re:Invent, AWS announced that AWS Compute Optimizer can now ingest memory utilization metrics from Datadog and other observability tools to further improve optimization recommendations.

The Nasdaq team presented a unique performance perspective in their session “Nasdaq: Moving mission-critical, low-latency workloads to AWS.” What made their story interesting was that they worked with AWS to design and deploy ultra-low-latency AWS Outposts, which are fully managed server racks deployed on-premises that seamlessly extend existing AWS services. By hosting resources locally and using optimized network interfaces, they can achieve sub-millisecond latency.

Dive deeper

As always, the number of new product announcements from AWS re:Invent exceeds what we could cover in a single blog post. To catch up on the AWS announcements, visit the AWS What’s New page. To learn more about Datadog announcements, visit the Datadog re:Invent microsite. Videos of all the conference sessions are available on the AWS Events YouTube channel.